Can AI Moderators Save Streamers From Toxic Trolls?

By combining tech with better policy, streaming platforms like Twitch and YouTube could be more pleasant for gamers.

The internet can be your best friend or your worst enemy. Today it’s easier than ever to hop on the web, make connections with the like-minded, learn something new or even launch a career, like game streaming. Streamers build a following by putting their skills and personality out there, and you can’t be a popular streamer without interacting with your viewers and the broader community on Twitch, YouTube and the like. But what happens when that community is plagued with toxic behavior, from hate speech to harassment, that can seem louder than the kudos and positive emojis? Since streaming is a technology business, can innovations like artificial intelligence serve as the elixir for an industry made unpleasant by its own customers?

Trolls Gone Wild

If you’re internet-savvy enough to have made it to this website, chances are you’ve seen or even experienced how nasty people can be online. With streamers, the insults can come in real time and become more personal as your face and image are put on display. In fact, according to Intel research, 10% of players who quit gaming cited toxicity as the reason.

In an op-ed, a Twitch streamer known as ZombaeKillz detailed her own experiences as a Black female streamer.

“In two decades of online gaming, I have been stalked offline, threatened with rape and murder and even saw users steal my kids' pictures from Facebook so they could mock them in online groups,” she wrote. “The feeling of helplessness and violation is something you never forget. But with gaming giants … embracing the Black Lives Matter movement in recent months, you might think that these companies would be more conscientious about stopping bigotry on their platforms.

However, based on my experiences and those of other streamers of color I speak to, it’s clear that the reality doesn’t live up to the rhetoric.”

It’s no secret that various minority groups, including the LGBTQIA community, feel “vulnerable” in these environments, as ZombaeKillz put it. And while you can block people on Twitch and other game streaming platforms, most platforms give offenders enough leeway for multiple offenses, whether calling someone ugly or using a racial slur, before banning them -- if the offenders are disciplined at all.

Vague Policies Leave Streamers Vulnerable

To help police its community, Twitch relies heavily on a team of human moderators, but could an influx of tech help make the platform, which has an average of 1.5 million viewers at any moment, more user-friendly?


Twitch’s content moderation is built upon its Terms of Service and Community Guidelines. Streamers also have the option to build additional layers of standards on top of that. For example, streamers can use volunteer moderators to help moderate content on their channel. These volunteer moderators “play a vital role in the Twitch community,” a Twitch spokesperson told Tom’s Hardware.

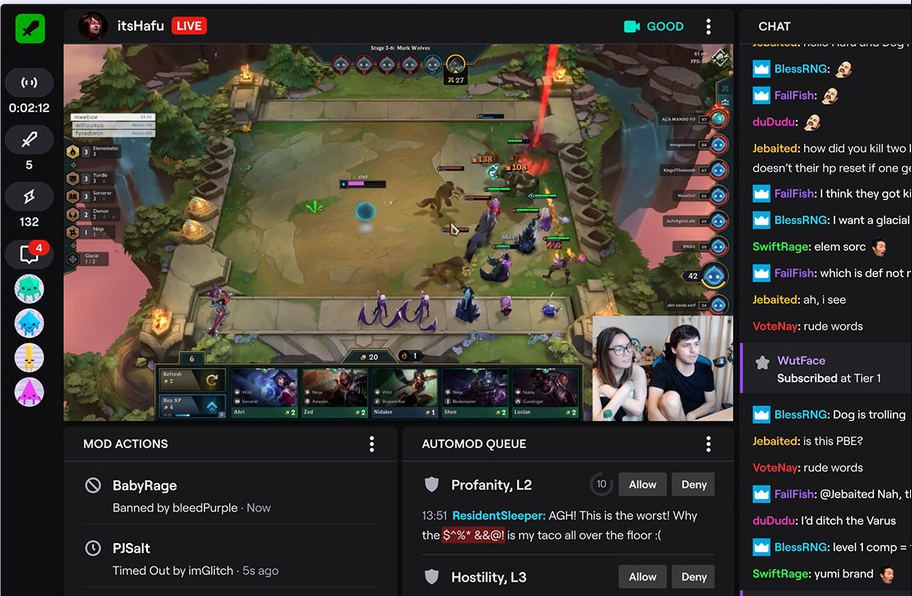

“We invest heavily in this area and support them with tools and resources to make their jobs easier, (such as Mod View), and improve the overall moderation structure on Twitch,” the spokesperson said.

Twitch’s Mod View feature is just one of the ways we see tech starting to make moderating the channels of over 4 million unique streamers more feasible. Announced in March, Mod View is a home base of tools for Twitch moderators that replaces the need to type commands in chat.

When it comes to dealing with actual complaints, Twitch uses “a combination of human moderators and automation,” the spokesperson told us. One of the platform’s biggest investments is in “Trust & Safety,” they said. This spring, the company launched the Twitch Safety Advisory Council. It’s also hiring a VP of global trust and safety.

“We have doubled the size of our safety operations team this past year, enabling us to process reports much faster, and added new tools for moderators, [such as] moderator chat logs, and viewers to control their experience, [such as] chat filters,” Twitch’s spokesperson said.

“We also have work underway, including a review of our Hateful Conduct and Harassment policies, enhanced offensive username detection, improvements to AutoMod and our Banned Words list and other projects focused on reducing harassment and hateful conduct.”

According to Twitch’s policy, hateful conduct is anything that “promotes, encourages or facilitates discrimination, denigration, objectification, harassment or violence based on race, ethnicity, national origin, religion, sex, gender, gender identity, sexual orientation, age, disability or serious medical condition or veteran status and is prohibited.”

But when it comes to punishment for such “prohibited” activity, things get fuzzy. Prohibited typically means forbidden, but in Twitch’s case, doing something forbidden doesn’t seem to mean you’ll be prevented from doing it ever again. Twitch’s policy says hateful conduct is a “zero-tolerance violation” but then immediately points to a “range of enforcement actions,” which includes indefinite suspension, as well as warnings and temporary suspensions (1-30 days).

When we asked Twitch if it ever permanently bans users, the company would only point us to its Account Enforcements and Chat Bans page, which states: “Depending on the nature of the violation, we take a range of actions including a warning, a temporary suspension and, for more serious offenses, an indefinite suspension.” However, the guidelines don’t delineate which behaviors lead to this level of enforcement.

“For the most serious offenses, we will immediately and indefinitely suspend your account with no opportunity to appeal,” Twitch’s Account Enforcements and Chat Bans page adds. “If a banned user appears in a third-party channel while being suspended, this could cause the ban of the channel they appear in.”

Of course, the best streaming platforms of 2020 include more than just Twitch. There are also gamers on platforms like Facebook Gaming and YouTube. With YouTube, policies essentially allow for the occasional slip-up of slurs and other offenses.

YouTube lets you anonymously flag content for review if you think it violates the site’s guidelines. And YouTube says it removes any content that violates its Community Guidelines. But the people who posted it may be back sooner than you’d expect.

YouTube explicitly states that “Hate speech, predatory behavior, graphic violence, malicious attacks, and content that promotes harmful or dangerous behavior isn't allowed on YouTube,” and gets more specific by detailing hate speech against “age, caste, disability, ethnicity, gender identity and expression, nationality, race, immigration status, religion, sex/gender, sexual orientation, victims of a major violent event and their kin [and] veteran status.”

The site dives even further when it comes to race, barring those who “incite or promote hatred based on any of the attributes noted above. This can take the form of speech, text, or imagery promoting these stereotypes or treating them as factual.” The list goes on, ruling out “claiming superiority of a group” or comparing people to “animals, insects, pests, disease or any other non-human entity.”

But when it comes to enforcement, YouTube gets way less specific, saying it may remove content, but only “in some rare cases.” Confusingly, the policy then follows up with a statement that seems to imply that this content will automatically be removed:

“If your content violates this policy, we’ll remove the content and send you an email to let you know. If this is your first time violating our Community Guidelines, you’ll get a warning with no penalty to your channel. If it’s not, we’ll issue a strike against your channel. If you get three strikes, your channel will be terminated,” YouTube’s policy states.

YouTube’s so-called Strikes System grants users three strikes within a 90-day period. Theoretically, you could spread your harassment over a careful 91-day period and never rack up more than a couple strikes. It’s possible YouTube’s moderation squad is too savvy for this, but its policy doesn’t explicitly prevent it.
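The loophole is easier to see with a little arithmetic. The Python sketch below is a hypothetical model of a rolling 90-day strike window, not YouTube’s actual enforcement logic. The names `active_strikes` and `is_terminated` and all the dates are invented for illustration, and the model ignores the penalty-free first warning the policy describes.

```python
from datetime import date, timedelta

STRIKE_LIFETIME = timedelta(days=90)  # window length taken from the policy as described
TERMINATION_THRESHOLD = 3             # three strikes ends the channel


def active_strikes(strike_dates, today):
    """Count strikes issued on or before `today` that are less than 90 days old."""
    return sum(
        1 for d in strike_dates
        if timedelta(0) <= today - d < STRIKE_LIFETIME
    )


def is_terminated(strike_dates):
    """True if three or more strikes are ever active at the same time."""
    return any(
        active_strikes(strike_dates, d) >= TERMINATION_THRESHOLD
        for d in strike_dates
    )


start = date(2020, 1, 1)  # arbitrary example date

# Three offenses inside one 90-day window.
clustered = [start, start + timedelta(days=30), start + timedelta(days=60)]

# Three offenses spread over 91 days: the first strike has expired
# by the time the third one lands.
spaced = [start, start + timedelta(days=46), start + timedelta(days=91)]

print(is_terminated(clustered))  # True
print(is_terminated(spaced))     # False
```

Under these assumptions, the spaced-out offender never holds more than two live strikes at once, which is exactly the gap described above.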

Streaming Platforms Are Already Moderating With Tech

A layer (or two) of tech, perhaps more black-and-white than streaming platforms’ open-ended language on fighting harassment, is also critical. Advancements like machine learning are common in streaming platforms’ moderation toolkits. But there’s also room for more advanced technologies to help fight hate speech and other harassment in real time.

First, let’s start with some things gaming platforms are currently using.

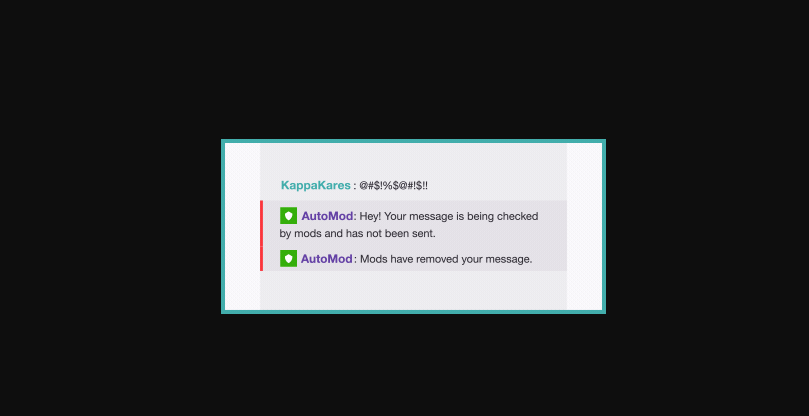

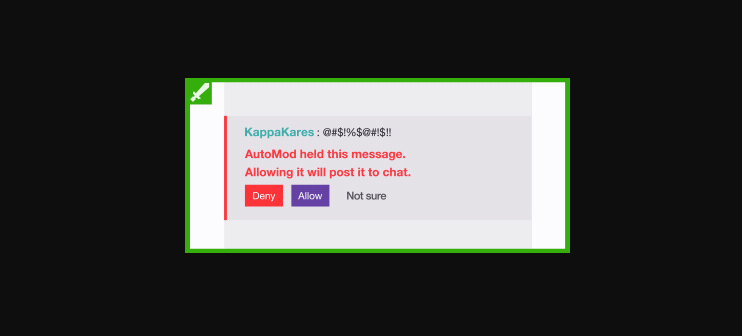

Twitch’s AutoMod, for example, combines machine learning and natural language processing algorithms to identify “risky” chat messages. It puts these comments on hold until a moderator can view them and approve them for display. The tool can also read through misspellings and evasive language.

AutoMod also allows for five levels of moderation, including none, over four different categories: discrimination, hostility, profanity and sexual content. Twitch controls the dictionary of words used, but streamers can also make a list of words for their own channel.
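To make the idea of levels and categories concrete, here is a minimal Python sketch. It is not Twitch’s implementation: the threshold numbers, the `ChannelSettings` and `should_hold` names and the per-category risk scores (which a real system would get from a trained classifier) are all assumptions made for illustration.

```python
from dataclasses import dataclass, field

CATEGORIES = ("discrimination", "hostility", "profanity", "sexual_content")

# Hypothetical mapping from a level (0 = off, 4 = strictest) to the minimum
# risk score that puts a message on hold. Level 0 never holds anything.
LEVEL_THRESHOLDS = {0: None, 1: 0.9, 2: 0.7, 3: 0.5, 4: 0.3}


@dataclass
class ChannelSettings:
    levels: dict                                     # category -> level 0..4
    blocked_terms: set = field(default_factory=set)  # streamer's own word list


def should_hold(scores, message, settings):
    """Return True if the message should wait for a moderator's approval."""
    # The streamer's custom word list always wins.
    words = set(message.lower().split())
    if words & settings.blocked_terms:
        return True
    # Otherwise compare each category's risk score with that category's level.
    for category in CATEGORIES:
        threshold = LEVEL_THRESHOLDS[settings.levels.get(category, 0)]
        if threshold is not None and scores.get(category, 0.0) >= threshold:
            return True
    return False


settings = ChannelSettings(
    levels={"hostility": 3, "profanity": 1},
    blocked_terms={"spoiler"},
)

# The scores stand in for classifier output; they are made up.
print(should_hold({"hostility": 0.6}, "you are terrible at this", settings))  # True
print(should_hold({"hostility": 0.4}, "nice play", settings))                 # False
print(should_hold({}, "no spoiler please", settings))                         # True
```

A held message would then sit in a queue until a human moderator approves or rejects it, as the article describes.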

YouTube builds community policies by working with YouTube creators and uses machine learning “to detect problematic content at scale,” considering that hundreds of hours of content are reportedly uploaded to the platform every minute. Flagged content, meanwhile, gets viewed by “trained human reviewers.” YouTube also employs an Intelligence Desk that “monitors the news, social media and user reports to detect new trends surrounding inappropriate content.”

But with offenders having loopholes for keeping their accounts and, therefore, the harassment alive, what can be done so streamers don’t have to see hate speech and other offensive behavior in the first place?

Hope for Improvement: Real-Time Moderation Tools

One of the obvious challenges with streaming moderation is the sheer volume of videos and comments hitting platforms that are exploding in popularity. Streamers can have volunteer moderators on air with them, but automation has the potential to take things to the next level.

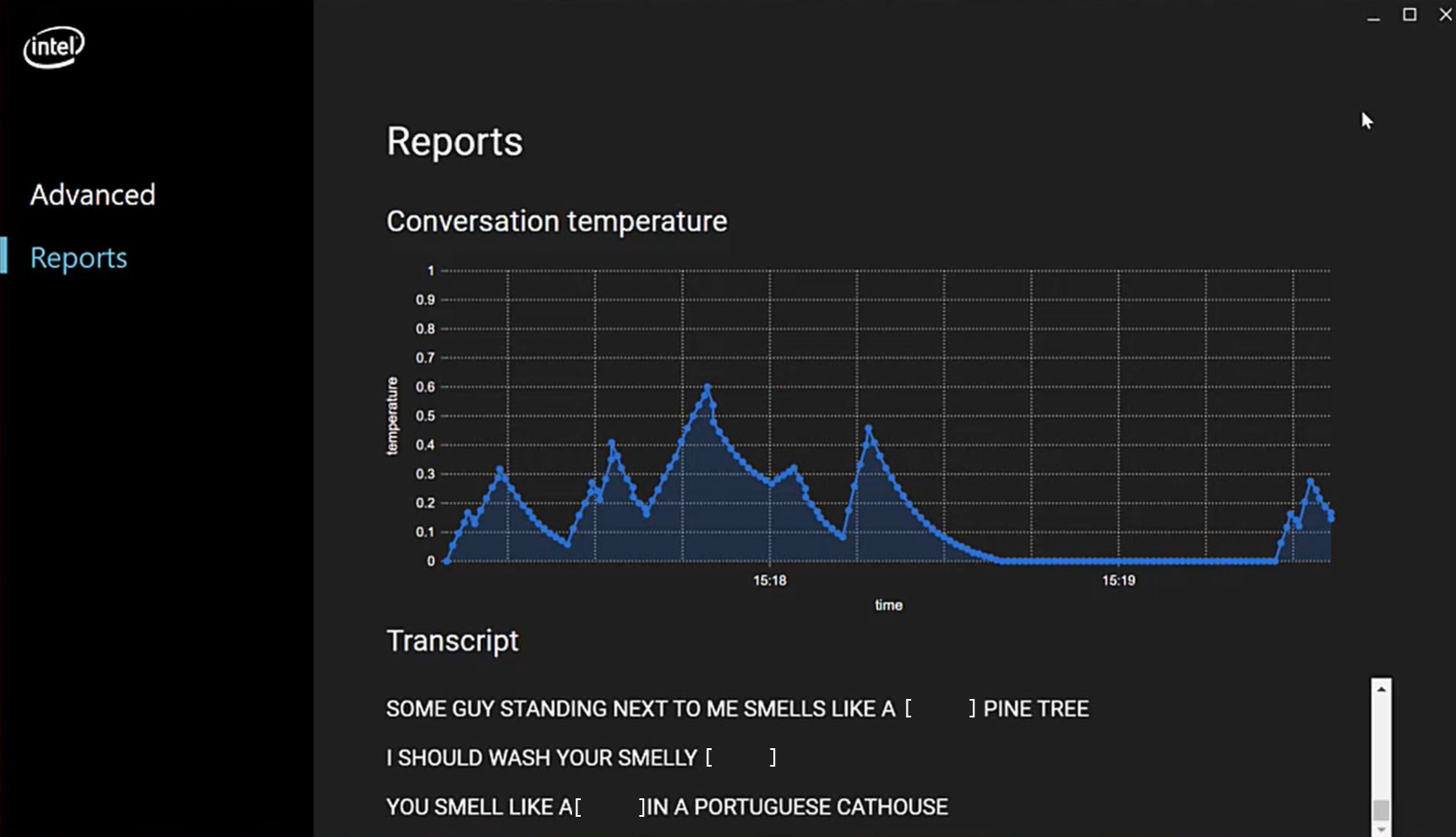

At 2019’s Game Developers Conference (GDC), Intel detailed a fascinating way for tech to play a role in moderating. Intel showed off a prototype tech made in collaboration with Spirit AI that used artificial intelligence to identify toxic chat. Spirit AI had been focusing on text chat, but with Intel’s help the technology is growing to moderate voice chat too.

“The tooling that we built, basically you point it at a directory on your system. It would go through that list of files and tell you which ones flagged certain types of behaviors and why,” George Kennedy, Intel gaming solutions architect, told Tom’s Hardware regarding the demo shown off last year.

Since then, Intel has worked with Discord to harvest opt-in data from its Destiny 2 channel and built a validated data set to prove that it has a “really high accuracy at detecting certain types of toxic content,” Kennedy said. Now, the company is experimenting with different features.

Currently the tech takes human speech (mostly in English), converts it to text and then uses behavior models that Spirit AI uses for its various customers in the gaming industry. The behavior models vary. Some look at an entire domain and tell you if something’s toxic. Other companies use individual behavior models for individual categories. Those types of models have the option to use deep learning or a random forest classifier.

Kennedy showed us a couple of demos that Intel has been using to test its machine learning pipelines. They’re able to take content from any video or audio file, including one playing off YouTube, or from a microphone, transcribe it in real time and block out whatever type of audio is specified.

They’re also experimenting with a “temperature tracking mechanism” where gamers could track the temperature of a conversation with another user. If the conversation crosses the gamer’s threshold of comfort, the other user is muted.
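Intel didn’t detail how such a mechanism would work, so the following Python sketch is only one way it might be modeled. The decaying running score, the `ConversationTemperature` class and the example toxicity scores (assumed to come from some upstream detector, on a 0-to-1 scale) are all hypothetical.

```python
class ConversationTemperature:
    """Track a per-user 'temperature' and mute users who cross a threshold."""

    def __init__(self, threshold, decay=0.8):
        self.threshold = threshold  # the gamer's personal comfort limit
        self.decay = decay          # how quickly older messages cool off
        self.temperature = {}       # user -> running temperature
        self.muted = set()

    def observe(self, user, toxicity):
        """Record one message's toxicity score; return True if the user is muted."""
        if user in self.muted:
            return True
        # Older heat fades, new heat is added on top.
        temp = self.temperature.get(user, 0.0) * self.decay + toxicity
        self.temperature[user] = temp
        if temp > self.threshold:
            self.muted.add(user)
        return user in self.muted


tracker = ConversationTemperature(threshold=1.5)

# A friendly chatter with the odd salty remark stays well under the limit.
for score in (0.1, 0.2, 0.1):
    tracker.observe("friend", score)

# Two hostile messages in a row push the other user over it.
tracker.observe("troll", 0.9)
tracker.observe("troll", 0.9)

print(sorted(tracker.muted))  # ['troll']
```

The decay factor means a single heated moment fades over time, while sustained hostility accumulates until the mute kicks in.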

In a demo, Kennedy played a YouTube video and had the AI replace the names of fruits with quacks. This sort of technology could help gamers with hands-free moderation during livestreams.
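Intel’s demo worked on live audio, and we don’t know how it was built. As a rough illustration of the final step only, this Python sketch assumes speech has already been converted to text and swaps user-chosen words for a placeholder. The function name `censor_transcript` and the `[quack]` marker are invented for the example.

```python
import re


def censor_transcript(transcript, flagged_words, replacement="[quack]"):
    """Replace whole-word, case-insensitive matches of the flagged words."""
    if not flagged_words:
        return transcript
    # \b keeps "apple" from matching inside "pineapple".
    pattern = re.compile(
        r"\b(?:" + "|".join(re.escape(w) for w in flagged_words) + r")\b",
        re.IGNORECASE,
    )
    return pattern.sub(replacement, transcript)


print(censor_transcript("I like apple pie and Banana bread", ["apple", "banana"]))
# I like [quack] pie and [quack] bread
```

In an audio pipeline, the matched words’ timestamps would be used to overwrite the corresponding stretch of sound instead of the text.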

Intel also showed us customization options with different categories, putting the power in the user’s hands.

“After the algorithm converts it to text, then we have a set of behavior models that we use to do detection,” Kennedy explained.

This is all still in the research and development phase, and Intel hasn’t committed to building any products, but there’s clearly opportunity down the line -- especially considering the wide gaps streaming platforms’ community policies are leaving exposed.

Streamers Push for More

Tech can automate moderation, helping to better tackle large audiences and live content and giving streamers customization power with tools that are easier to understand and leverage than streaming platforms’ community policies. But ultimately, many in the industry are looking to game streaming platforms to use their power too.

“Once these different platforms have policies, they can use machine learning tools to enforce those policies, but at the end of the day, you may need to get to behavior change,” Intel’s Kennedy said. “The platforms have the option to ban people for things that violate their terms of service. … If you don't want to actually censor players, there is the option to give players user-facing tools that let them control the type of content that they encounter when they play games.”

“We see a future where in addition to delivering the best power performance, we can actually improve the gamer experience so that this issue is less of a problem for them,” the exec said.

In her op-ed, ZombaeKillz pushed for more enforcements from platforms like Twitch, such as “IP bans, so users cannot create multiple accounts for harassment” and a way to prevent hate speech-based usernames.

Some streamers have pushed for instant bans for certain offenses.

“Any time a racial slur [is used], whether they tried to explain that that was a one-time thing or they didn’t mean it like that, I feel like just one strike and they’re gone [would be a better policy],” YouTube streamer BrownSugarSaga told Tom’s Hardware. “Anything against the LGBT community, one strike and you’re completely gone. I feel like they should be a bit more strict and...take that into consideration because a lot of times people do report, but the [offenders] still stay there. They are not strict enough … they wait for multiple reports…”

Tech is only as powerful as the people and policies behind it. And it seems that players at the heart of the burgeoning streaming industry are looking to the platforms to step up their game.

Scharon Harding has over a decade of experience reporting on technology with a special affinity for gaming peripherals (especially monitors), laptops, and virtual reality. Previously, she covered business technology, including hardware, software, cyber security, cloud, and other IT happenings, at Channelnomics, with bylines at CRN UK.

-

jpe1701

This definitely isn't just a problem for streamers. I haven't been able to play a multiplayer session in anything for years because I have disabled hands that slow me down so I am inundated with messages to get off with such colorful language and threats.

-

andrewkelb

I think tools already exist that allow content creators to moderate their own streams and content. My worry is that the most offended among us will set broad policies that affect everyone and have a negative impact far beyond the offending parties.

-

USAFRet

"AI" is only as good as he who creates the parameters and algorithms.

It is not yet magic.

-

saltyGoat11

I am sorry to hear this bro! I quit online gaming because of the toxic environment and some gamers are vile and have disgusting behavior. I only play single players now. Sometimes I play ARK on PvE servers. Some gamers are nice and helping you out when you begin. But others..... well, you already know... :(

jpe1701 said: This definitely isn't just a problem for streamers. I haven't been able to play a multiplayer session in anything for years because I have disabled hands that slow me down so I am inundated with messages to get off with such colorful language and threats.

-

NightHawkRMX

People on the internet are toxic. Just accept it and just ignore them. It's really not hard.

-

Phaaze88

Nope. That won't be enough.

The power of anonymity...

Some users get massive e-boners from ruining others' experiences - they pretty much live for that crap. Comes with the territory.

If folks can't deal with that, they really shouldn't stream.

So true.

USAFRet said: "AI" is only as good as he who creates the parameters and algorithms. It is not yet magic.

I see the Skynet entity from the Terminator series becoming a distant possibility if mankind isn't careful.

A sentient AI is no different from a human - err, minus the body at least.

-

EdgeT

And thus Freedom of Speech died. Sad state of affairs.

Added to the list of services that engage in terrorism, which will never get my money.

-

Jim90

EdgeT said: And thus Freedom of Speech died. Sad state of affairs. Added to the list of services that engage in terrorism, which will never get my money.

Freedom of Speech doesn't give you open license to make other folks lives a misery...does it??...you DO know that??

- and therein lies the issue with 'certain groups' of the population pre-disposed to, literally, over-generalising law / constitutional wording simply because they lack the required mental toolset. There's also a critical issue with interpretation of that same wording, particularly that written in very different times and which should have equally clearly, time-expired.

Locally and globally, society has a hell of a lot of growing up to do.

-

NightHawkRMX

The issue with punishing hate speech is that everyone's definition of hate speech depends on each person's opinions and their own biases.

Jim90 said: Freedom of Speech doesn't give you open license to make other folks lives a misery...does it??...you DO know that?? - and therein lies the issue with 'certain groups' of the population pre-disposed to, literally, over-generalising law / constitutional wording simply because they lack the required mental toolset. There's also a critical issue with interpretation of that same wording, particularly that written in very different times and which should have equally clearly, time-expired. Locally and globally, society has a hell of a lot of growing up to do.

If you punish hate speech you end up oppressing certain views that are against the moderators biases, which tears down the first amendment.