Epic Games At GDC: Gearbox Software Tech Demo, New Features In VR Editor

Epic Games is back with its annual “State of Unreal” show at GDC. Aside from showing the power of the Unreal Engine in movies and the automotive industry, the company showed how its engine is used to power future games, whether in VR or in traditional video games.

A Gearbox Tech Demo

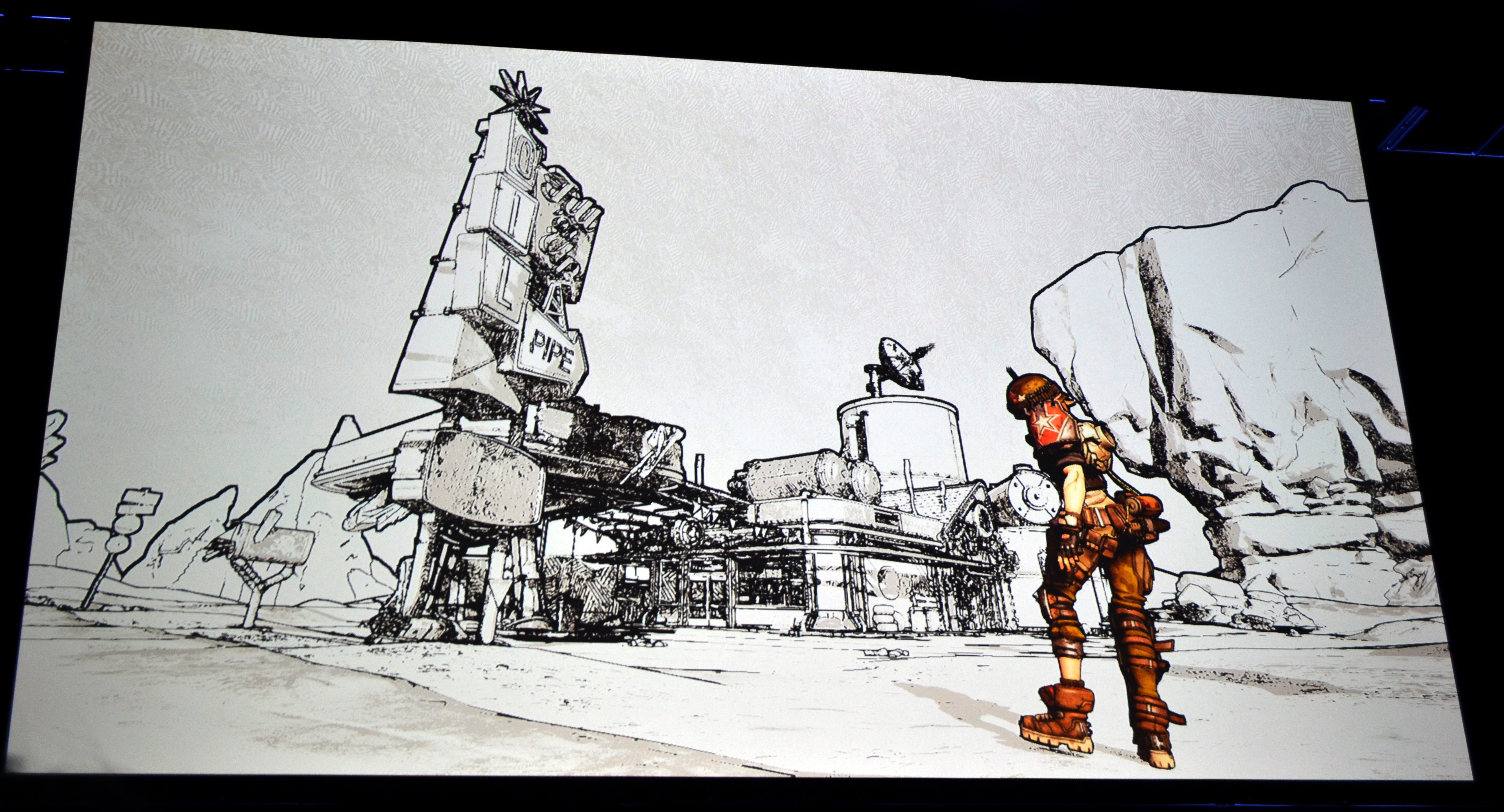

Gearbox Software CEO Randy Pitchford appeared on stage to talk about how the company will use the Unreal Engine to create the next installment in the Borderlands franchise. He presented a tech demo with assets that could be used in a future title from Gearbox.

The demo focused on highlights and crosshatching, which uses intersecting lines to create a shaded area. Both of these elements are particularly important in terms of lighting. In one example, he showed a spherical shape that used both crosshatching and highlights to show natural light hitting the object at an angle. In color, you would notice that a portion of the sphere is gleaming from direct contact with the light while another part of the sphere is shaded. When the same animation is displayed in black and white, however, you can easily see which sections of the sphere have highlighted and crosshatched layers.
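To make the idea concrete, here is a minimal sketch of how a renderer might decide where highlights and crosshatching go on a lit sphere. It is not Gearbox's or Epic's implementation; the function names and the threshold values are assumptions chosen for illustration. It computes a standard Lambert diffuse term (the cosine of the angle between the surface normal and the light direction) and maps it to a shading band.

```python
import math

def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def lambert(normal, light_dir):
    """Diffuse intensity in [0, 1]: cosine of the angle between normal and light."""
    n = normalize(normal)
    l = normalize(light_dir)
    return max(0.0, sum(a * b for a, b in zip(n, l)))

def classify_shading(normal, light_dir,
                     highlight_at=0.9, hatch_at=0.5, crosshatch_at=0.2):
    """Pick a shading band for one surface point.

    The three thresholds are hypothetical tuning values, not numbers
    from the demo.
    """
    intensity = lambert(normal, light_dir)
    if intensity >= highlight_at:
        return "highlight"   # surface faces the light almost directly
    if intensity >= hatch_at:
        return "plain"       # lit, no hatching lines
    if intensity >= crosshatch_at:
        return "hatch"       # one layer of parallel lines
    return "crosshatch"      # two intersecting layers in the darkest areas

# A point facing the light gets a highlight; a point at a right angle
# to the light falls into the crosshatched band.
print(classify_shading((0, 0, 1), (0, 0, 1)))
print(classify_shading((1, 0, 0), (0, 0, 1)))
```

In a black-and-white view, these bands are what would show up as distinct highlighted and crosshatched regions on the sphere.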

Pitchford showed other objects including a large turbine, clear water, and even a character to demonstrate how the elements combined to create even more realistic lighting in a game. Throughout the presentation, Pitchford reassured the crowd that the landscape and its mysterious character were just built for the purposes of the tech demo, and not indicative of what’s coming in the next Borderlands game. However, the assets used could make their way to an upcoming game from the company.

More Features For VR Developers

Epic Games also expanded the ways developers can work on VR titles within the VR Editor software. The company showed a demo onstage where a woman was using the Oculus Touch controllers to move around the map, which was a beachfront area. She could “pull” the map towards her with the controller in order to move forward, or use both controllers to turn the map while she stayed in the same position.

With the new radial menu in VR, she could access specific assets and place them on the ground in a similar manner to an airbrush. Another way to add assets is with gravity. By activating the simulate menu, you can place rocks in mid-air and have them drop onto the ground thanks to the virtual gravity. There’s also a new “smart snapping system,” which makes it easier to join assets without having to fine-tune their placement.
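Epic did not describe how the smart snapping system works internally, but the general idea behind snapping can be sketched in a few lines. The example below is an assumption-laden illustration, not the VR Editor's code: `snap_position`, the anchor list, and the tolerance value are all hypothetical. It moves a dropped asset onto the nearest anchor point if one lies within a tolerance, and otherwise leaves the asset where it was placed.

```python
import math
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]

def snap_position(position: Vec3, anchors: Iterable[Vec3], tolerance: float) -> Vec3:
    """Return the closest anchor within `tolerance`, else the original position.

    `anchors` stands in for attachment points on nearby assets
    (hypothetical; a real editor would derive these from mesh geometry).
    """
    best = None
    best_distance = tolerance
    for anchor in anchors:
        distance = math.dist(position, anchor)
        if distance <= best_distance:
            best, best_distance = anchor, distance
    return best if best is not None else position

anchors = [(1.0, 0.0, 0.0), (5.0, 0.0, 0.0)]

# Close enough to the first anchor: the asset snaps onto it.
print(snap_position((0.9, 0.0, 0.0), anchors, tolerance=0.25))

# Too far from any anchor: the asset stays where the user put it.
print(snap_position((3.0, 0.0, 0.0), anchors, tolerance=0.25))
```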

She then started an animation sequence where a plane flew across the ocean and then towards the beach. However, it was flying too low. Within VR, you can pause the animation and change the plane’s overall trajectory so that it flies higher, and then resume the animation without any hiccups.

The editing tool also has new mesh editing plugins so that you can build assets from scratch within VR. In the demo, the woman built a rough outline for a lighthouse. However, as she constructed the structure’s upper levels, the shapes that made up the base and the lower level of the lighthouse began to morph into their finalized form.

Epic's Tim Sweeney said that the process is called subdivision surface technology, a feature created by Pixar in its early days. By changing the shape’s base factor with any number between one and zero (the base factor of one makes it a completely smooth shape, whereas zero gives it more polygonal features), you can shape the asset into anything you want. In this case, the feature was used to sculpt the lower levels of the lighthouse while additional shapes continued to pile on at the top.
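The effect of a smoothing factor between zero and one can be illustrated with a small sketch. This is a 2D curve analogue of subdivision surfaces, not the VR Editor's mesh code, and the name `factor` and the way it blends are assumptions made for illustration. Each refinement step inserts a midpoint on every edge and moves each original vertex toward the standard cubic B-spline position (1/8 previous, 3/4 current, 1/8 next), scaled by `factor`. With a factor of zero the outline stays polygonal; with a factor of one it converges toward a smooth curve.

```python
from typing import List, Tuple

Point = Tuple[float, float]

def subdivide_closed(points: List[Point], factor: float) -> List[Point]:
    """One subdivision step on a closed polygon.

    factor = 0.0 keeps the original corners (polygonal look);
    factor = 1.0 applies full cubic B-spline smoothing.
    """
    if not 0.0 <= factor <= 1.0:
        raise ValueError("factor must be between 0 and 1")
    n = len(points)
    refined: List[Point] = []
    for i in range(n):
        prev_pt = points[i - 1]
        cur = points[i]
        nxt = points[(i + 1) % n]
        # Fully smoothed position of the existing vertex.
        smooth = tuple(0.125 * p + 0.75 * c + 0.125 * q
                       for p, c, q in zip(prev_pt, cur, nxt))
        # Blend between the sharp corner and the smoothed position.
        vertex = tuple(c + factor * (s - c) for c, s in zip(cur, smooth))
        # New point in the middle of the edge to the next vertex.
        edge = tuple(0.5 * (c + q) for c, q in zip(cur, nxt))
        refined.append(vertex)
        refined.append(edge)
    return refined

def refine(points: List[Point], factor: float, levels: int) -> List[Point]:
    """Apply several subdivision steps in a row."""
    for _ in range(levels):
        points = subdivide_closed(points, factor)
    return points

square = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0), (0.0, 2.0)]
sharp = refine(square, factor=0.0, levels=3)   # still a square outline
smooth = refine(square, factor=1.0, levels=3)  # corners rounded off
print(len(sharp), len(smooth))
```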

Eventually, each facet of the lighthouse will be formatted with the technology in order to make it even more realistic. According to Sweeney, the constant additions to the VR Editor make it one of the best tools to create virtual reality titles.

"It’s the full Unreal Editor running in real time in VR," he said. "It’s not just a little tool for fooling around with 3D. It is the real, professional tool for building AAA games. It’s far easier to use than [the] mouse-based CAD software that you’re used to because you reach out and grab objects and interact with all of them naturally."

Even with the many features available in the editor, the fact remains that only one person can work in the virtual space at a time. Putting multiple users in the same VR space could shorten development time. I asked if Epic Games was working on implementing the feature in a future update, and it seems that Sweeney and the developers are already somewhat ahead of the curve:

“We have some experiments going on with that on the Unreal Engine team, and we think it’s going to be very promising. There’s an interesting scaling problem, though. We compare it to ‘What if we had 10 programmers each with a single text editor and they’re all typing code at the same time?’ You realize really quickly that the code is broken most of the time because it’s not completed, and so we think the real solution is going to be a layered set of systems for real-time interactive editing with other users, as well as revision control tools so you can work on some updates in an isolated way, and then once they’re complete you can check them into the working tool version. I think it’s that full suite of different tools that everyone will be working together that makes this scale up to the large-scale teams that use [the] Unreal Engine today.”

More On The Way

After GDC, the company will continue to provide support and updates for its immense client base of developers that use the Unreal Engine. Those creating experiences for virtual reality already have many features available in the VR Editor, but Epic Games will continue to listen to the community and add more tools in future updates. With additional ventures in the automotive, movie and mobile industries, it seems that Epic Games and its Unreal Engine are in good shape.

Rexly Peñaflorida is a freelance writer for Tom's Hardware covering topics such as computer hardware, video games, and general technology news.