Google Slashes Data Center Cooling Costs By 40 Percent In First Real-World Application Of the DeepMind AI

Google announced that it has already been using the DeepMind AI to cut the company’s data center cooling costs by 40 percent. This implementation marks one of the first real-world applications of the DeepMind AI after Google used it to power the AlphaGo client that beat Lee Sedol, an 18-time world champion of the Chinese game "Go."

DeepMind, Beyond "Go"

So far, Google has mostly used its DeepMind artificial intelligence technology in gaming environments, where it could learn how to play the games by itself through trial and error, which is not unlike how humans learn various skills.

The games were effective in helping the AI develop human-like thinking in various environments, which is how the DeepMind AI managed to beat a world champion at a game that many experts thought was unwinnable by an AI for at least another ten years.

After the Go games, Google started talking to the National Health Service (NHS) in England about how they could use the DeepMind technology in real-world scenarios to improve healthcare. However, while we’re still waiting on the results of that collaboration, the DeepMind AI has already scored a big win for Google itself by helping the company cut its data center cooling costs by 40 percent.

The DeepMind-Powered Data Center

Google has always focused on reducing the power consumption of its data centers. It even invested significant amounts of money to power them with renewable energy to reduce the environmental impact that its large data centers have on the climate. The company said its servers now deliver about 3.5 times the computing power they did five years earlier while using the same amount of energy.

One of the primary uses for energy in a data center environment is cooling. The servers generate large amounts of heat, which the data center must remove for it to stay within safe temperature ranges. Data centers typically employ large industrial equipment such as pumps, chillers and cooling towers to regulate the temperature.

However, Google said that operating these cooling systems in a complicated data center environment, while taking into account external factors such as the weather, is a highly complex task. Human intuition or various formulas for operating the systems often fall short of optimal results. In addition, each data center is unique, so Google cannot apply the knowledge of how to operate one entirely to another.


Google started using machine learning two years ago to address this complex problem. However, it was only a few months ago that the company’s data center engineers began collaborating with the DeepMind team to find a better solution.

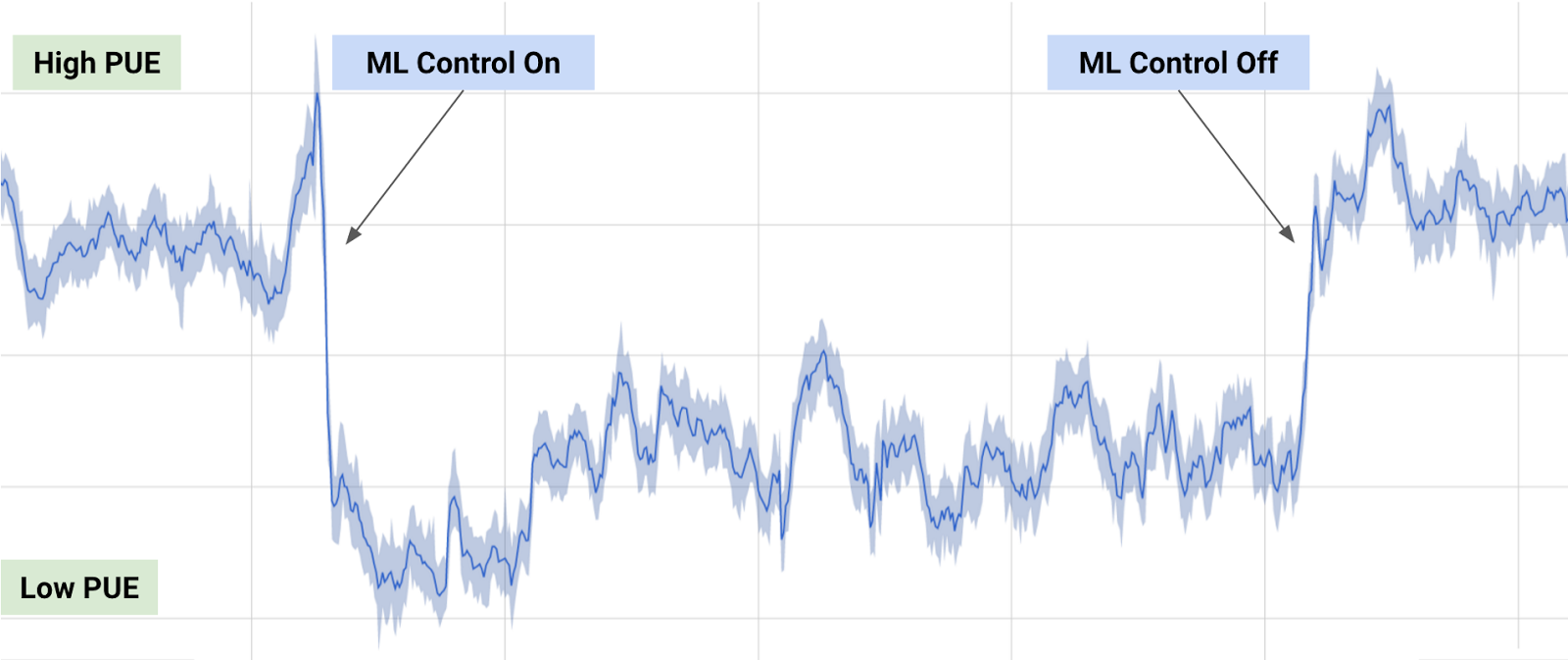

The teams used data such as temperatures, power, pump speeds and setpoints, among others, from all of the existing data center sensors to train a set of deep neural networks. Google first trained neural networks on the average future PUE (Power Usage Effectiveness), which is the ratio of the total building energy usage to the IT energy usage. Then it trained two additional neural networks to predict the future temperature and pressure of the data center over the next hour. Using these neural networks, it can recommend a set of actions to ensure the optimal use of energy.
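The recommendation step described above can be pictured as a constrained search: among candidate control actions, pick the one with the lowest predicted PUE whose predicted temperature and pressure stay within limits. The sketch below is only an illustration under that assumption. Google has not published its implementation, and the function names, toy predictors, setpoints and limits here are all hypothetical stand-ins for the trained neural networks.

```python
from typing import Callable, Iterable, Optional


def recommend_setpoint(
    candidates: Iterable[float],
    predict_pue: Callable[[float], float],
    predict_temp: Callable[[float], float],
    predict_pressure: Callable[[float], float],
    max_temp: float,
    max_pressure: float,
) -> Optional[float]:
    """Return the candidate with the lowest predicted PUE that keeps the
    predicted temperature and pressure within the given limits, or None
    if no candidate is feasible."""
    best = None
    best_pue = float("inf")
    for setpoint in candidates:
        # Skip actions the (hypothetical) safety models predict are unsafe.
        if predict_temp(setpoint) > max_temp:
            continue
        if predict_pressure(setpoint) > max_pressure:
            continue
        pue = predict_pue(setpoint)
        if pue < best_pue:
            best, best_pue = setpoint, pue
    return best


# Toy stand-ins for the trained networks (made-up linear relationships).
def toy_pue(setpoint: float) -> float:
    return 1.30 - 0.01 * (setpoint - 18.0)


def toy_temp(setpoint: float) -> float:
    return setpoint + 4.0


def toy_pressure(setpoint: float) -> float:
    return 100.0 + 0.5 * setpoint


choice = recommend_setpoint(
    [18.0, 20.0, 22.0, 24.0],
    toy_pue,
    toy_temp,
    toy_pressure,
    max_temp=27.0,
    max_pressure=115.0,
)
print(choice)
```

With these toy predictors, the warmest setpoint has the lowest predicted PUE but is rejected because its predicted temperature exceeds the limit, so the search settles on the next-best feasible option.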

This DeepMind-powered solution led to a 40 percent reduction in the energy used for cooling, which Google said equates to a 15 percent reduction in overall PUE overhead. This achievement is especially impressive because the data center that Google used for the experiments was already at its lowest historical PUE rating.
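To make the PUE arithmetic concrete, here is a small worked example using the definition given earlier (total building energy divided by IT energy). The energy figures are invented for illustration and are not Google's numbers. The point is only that a 40 percent cut in cooling energy moves PUE by a smaller proportion, because cooling is just one part of the non-IT overhead.

```python
def pue(it_kwh: float, cooling_kwh: float, other_overhead_kwh: float) -> float:
    """Power Usage Effectiveness: total building energy / IT energy."""
    total_kwh = it_kwh + cooling_kwh + other_overhead_kwh
    return total_kwh / it_kwh


# Hypothetical energy use over some period (not real Google data).
IT_KWH = 1000.0
COOLING_KWH = 100.0
OTHER_KWH = 50.0  # e.g. electrical losses and lighting

pue_before = pue(IT_KWH, COOLING_KWH, OTHER_KWH)
# Apply a 40 percent reduction to cooling energy only.
pue_after = pue(IT_KWH, COOLING_KWH * 0.6, OTHER_KWH)

# "Overhead" here means the part of PUE above the ideal value of 1.0.
overhead_cut = ((pue_before - 1.0) - (pue_after - 1.0)) / (pue_before - 1.0)

print(f"PUE before: {pue_before:.3f}")
print(f"PUE after:  {pue_after:.3f}")
print(f"Overhead reduction: {overhead_cut:.1%}")
```

How much the overhead shrinks depends entirely on what share of it is cooling, which is why the split chosen above should be read as an assumption rather than a reconstruction of Google's result.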

Google intends to use the general-purpose framework it created for its data center in other scenarios, including for improving power plant conversion efficiency, reducing semiconductor manufacturing energy and water usage, or helping manufacturing facilities increase throughput. Google should unveil more details in an upcoming publication.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.


anbello262 These kinds of articles are the ones that make me think "We are in the future" (as in the future I saw in movies when I was little, 15~20 years ago).

I'm glad to be the generation that gets to explore cyberspace.

drozd81 So Google replaced their default Building Automation System with its own "AI" which in this case is simply their own version of a Building Automation System that is custom tailored to their particular data center, probably has capability to control more points than there were originally and optimized PIDs based on environmental factors. I applaud the 40% improvement, but realistically, is artificial intelligence something that's really required here to make that improvement? It seems that a team of skilled data center engineers would do no worse than that. Looking forward to reading that publication and hoping to find out that AI really did something incredible there that couldn't be done with reasonable human-based resources. Right now I'm skeptical but I would love to be wrong about that.

anbello262 I think that the improvement made is humanly possible.

But that's exactly the thing that surprises me.

It means that AI is already on a deployable level. It doesn't need to be 'better than humans' at some complex task. The fact that it is 'as good as', is already more than enough for me.

After all, the main point is the ability to deploy it multiple times with little modification, and get a semi-optimum result each time. If using a team of engineers, they usually have to pretty much start over every time.

It's like comparing doing a certain task yourself with programming something to do it for you. If it's only one time, just doing it is better. But if it needs to be done several times (or there's hope of reusing it in the future), programming is usually the best choice.

I hope to see more of how far this goes in the near future.

uglyduckling81 The answer to climate change will no doubt be exterminate all humans as the root cause of the problem. Skynet confirmed...

jtown82 It's not that this could not have been done by humans; it's that the number of humans it would have taken to extrapolate enough data and then convert it into usable information to compare and contrast this type of problem would not be efficient. There is no such thing as an AI currently. Every supercomputer runs off of hard-set parameters using simple if, or, then, when, where, etc. statements. They all do the same thing: take data they are hard-set to look at, run it against the hard-set parameters, and output it in a meaningful way that humans can use efficiently. Nothing about that is AI. It is 100% human intervention. I wish people would stop incorrectly labeling things as AI when it isn't.

alextheblue Quoting uglyduckling81: "The answer to climate change will no doubt be exterminate all humans as the root cause of the problem. Skynet confirmed..."

What about non-anthropogenic climate change?