Google Lens Gets a Huge Upgrade with Real-Time Search

MOUNTAIN VIEW -- What Google’s search engine is to text on the internet, Google Lens is to the world around you. Now, the visual search tool is coming to the default Camera app on more than 10 Android handsets, so you can aim your smartphone camera at objects to find more information about them.

Before, Lens was a feature tucked inside Google Photos and Google Assistant. Now it will live next to other Camera app options, such as video recording. On LG's G7 ThinQ, double-pressing the dedicated Google Assistant hardware button on the side of the device will open Lens.

Not only is Lens becoming more accessible, it’s also growing more powerful. New features rolling out toward the end of May include smart text selection, a tool called Style Match, and real-time responses to find information directly inside the Camera app.

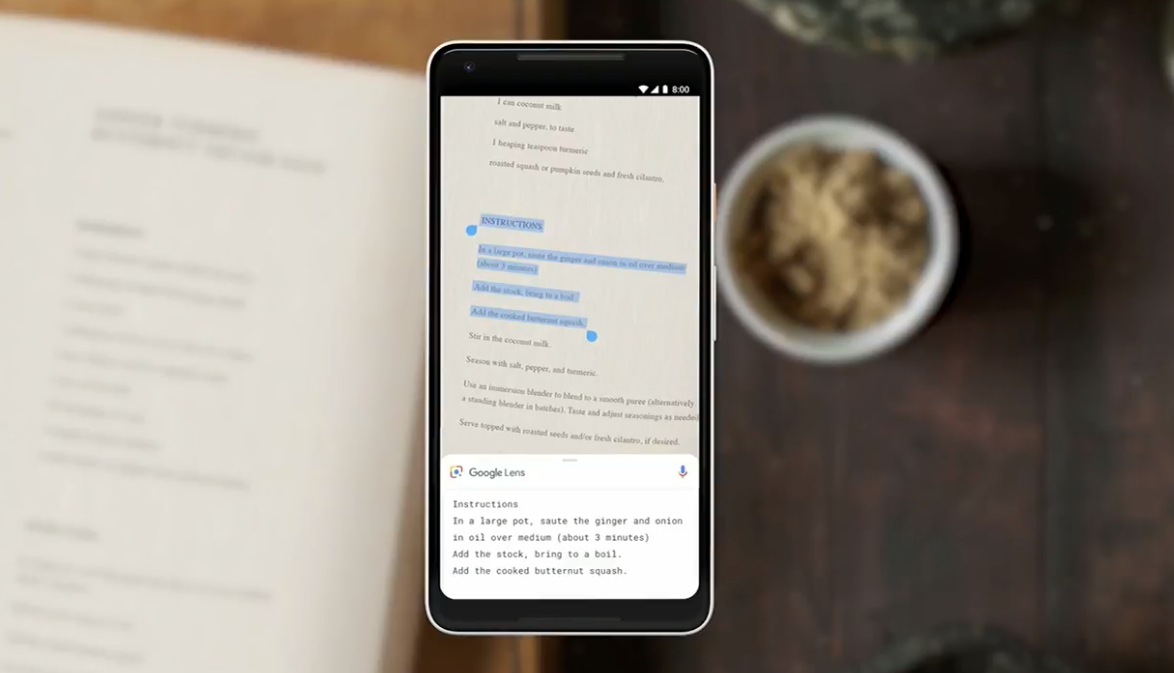

The text selection tool lets you select words in an image and copy and paste them as text. For instance, you can aim your camera at a handwritten recipe and convert it into a document that you save or share with your friends.

The Style Match feature finds items comparable to the one you’re looking at, so you can scour the web for stylish furniture or outfits you might want to buy without doing any work. This is challenging, Google’s Aparna Chennapragada said during the company’s I/O developers’ conference keynote on Tuesday (May 8), because Lens has to navigate variable lighting conditions and textures as it combs through hundreds of millions of items around the web.

But perhaps the coolest new Lens feature is its real-time analysis. When you launch the Camera app and toggle over to Lens, the camera starts scanning its environment to offer you more information about what it’s seeing. For instance, if you aim your camera at a concert poster, Lens will surface a YouTube video of the artist’s new single and start playing it directly in the Camera app.

“This is an example of how the camera is not just answering questions, but it is putting the answers right where the questions are,” Chennapragada said.

We can’t wait to put it to the test.

This article originally appeared on Tom's Guide.

bit_user: This starts to get at the real promise of AR - contextually-aware computing - where your digital assistant is as perceptive, vigilant, informative, and helpful as any real personal assistant or guide might be.

I think it's going to take a while for it to get refined to the point where it won't be a continual nuisance, but this is where we're headed.