Plagiarism Engine: Google’s Content-Swiping AI Could Break the Internet

The Search Generative Experience seems more like a text-copying experience.

Search has always been the Internet’s most important utility. Before Google became dominant, there were many contenders for the search throne, from AltaVista to Lycos, Excite, Zap, Yahoo (mainly as a directory) and even Ask Jeeves. The idea behind the World Wide Web is that there’s power in having a nearly infinite number of voices. But with millions of publications and billions of web pages, it would be impossible to find all the information you want without search.

Google succeeded because it offered the best-quality results, loaded quickly and had less cruft on the page than any of its competitors. Now, having captured more than 91 percent of the search market, the company is testing a major change to its interface that replaces the chorus of Internet voices with its own robotic lounge singer. Instead of highlighting links to content from expert humans, the “Search Generative Experience” (SGE) uses an AI plagiarism engine that grabs facts and snippets of text from a variety of sites, cobbles them together (often word-for-word) and passes off the work as its own creation. If Google makes SGE the default mode for search, the company will seriously damage, if not destroy, the open web while providing a horrible user experience.

A couple of weeks ago, Google made SGE available to the public in a limited beta (you can sign up through Google’s Search Labs). If you are in the beta program, as I am, you will see what the company seems to have planned for the near future: a search results page where answers and advice from Google take up the entire first screen, and you have to scroll way below the fold to see the first organic search result.

For example, when I searched “best bicycle,” Google’s SGE answer, combined with its shopping links and other cruft, took up the first 1,360 vertical pixels of the display before I could see the first actual search result.

For its part, Google says that it’s just “experimenting,” and may make some changes before rolling SGE out to everyone as a default experience. The company says that it wants to continue driving traffic offsite.

“We’re putting websites front and center in SGE, designing the experience to highlight and drive attention to content from across the web,” a Google spokesperson told me. “SGE is starting as an experiment in Search Labs, and getting feedback from people is helping us improve the experience and understand how generative AI can be helpful in information journeys. The experiences that ultimately come to Search will likely look different from the experiments you see in Search Labs. As we experiment with new LLM-powered capabilities in Search, we'll continue to prioritize approaches that will drive valuable traffic to a wide range of creators."

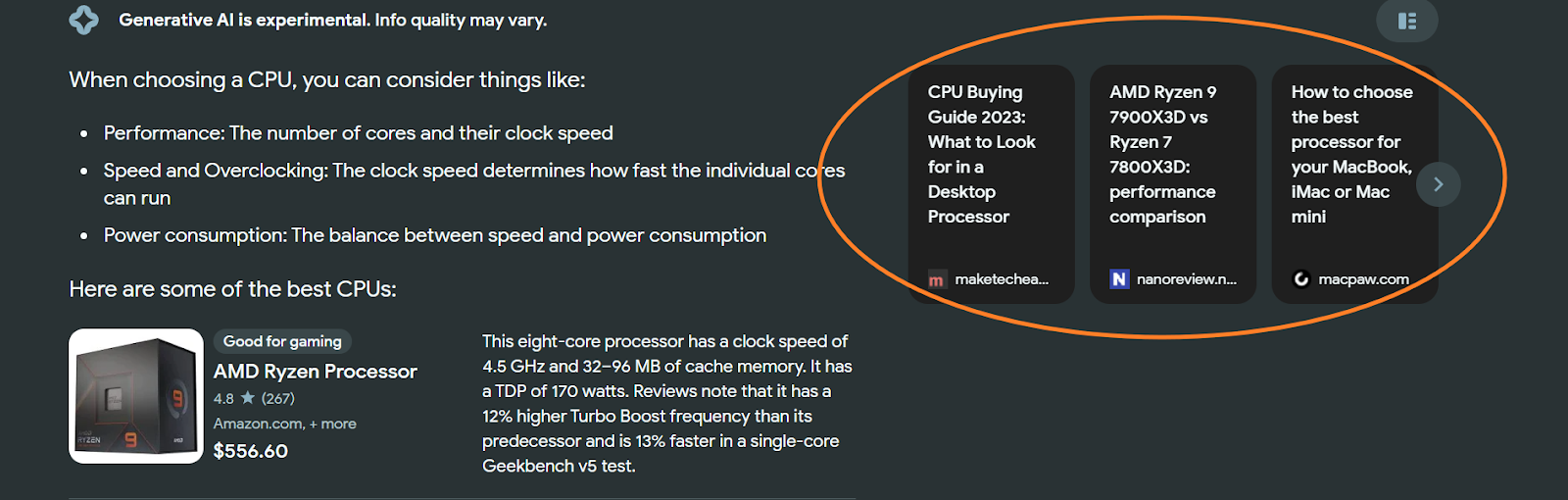

By “putting websites front-and-center,” Google is referring to the block of three related-link thumbnails that sometimes (but not always) appear to the right of its SGE answer. These are a fig leaf to publishers, but they’re not always the best resources (they don’t match the top organic results) and few people are going to click them, having gotten their “answer” in the SGE text.


For example, when I searched for “Best CPU,” the related links were from the sites Maketecheasier.com, Nanoreview and MacPaw. None of these sites is even on the first page of organic results for “Best CPU” and for good reason. They aren’t leading authorities in the field and the linked articles don’t even provide lists of the best CPUs. The MacPaw article is about how to choose the best processor for your MacBook, a topic that does not match the intent of someone searching for “best CPU,” as those folks are almost certainly looking for a desktop PC processor.

A Plagiarism Stew

Even worse, the answers in Google’s SGE boxes are frequently plagiarized, often word-for-word, from the related links. Depending on what you search for, you may find a paragraph taken from just one source or get a whole bunch of sentences and factoids from different articles mashed together into a plagiarism stew.

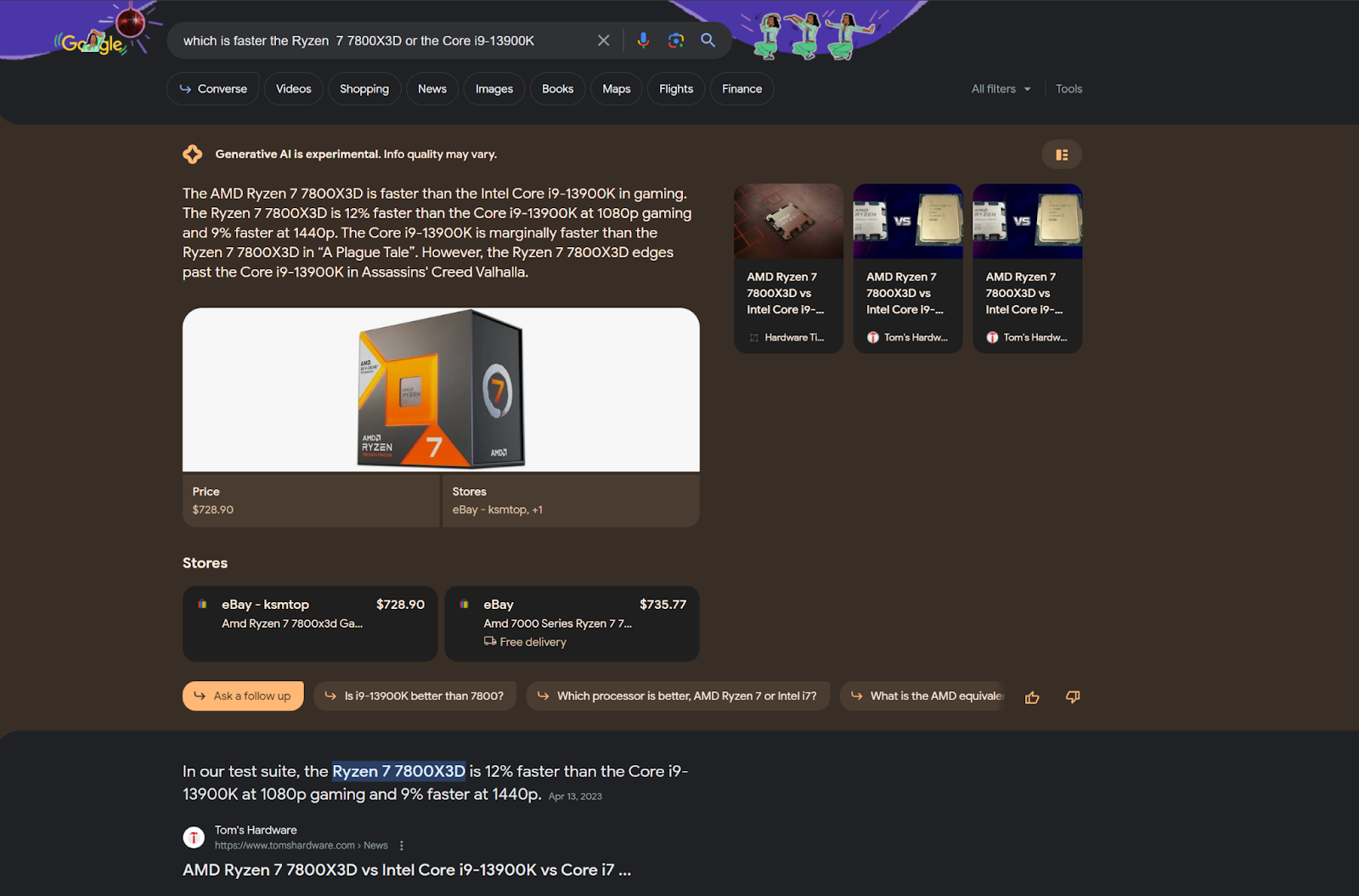

When I searched “which is faster the Ryzen 7 7800X3D or the Core i9-13900K,” Google SGE grabbed an exact phrase from our Tom’s Hardware article comparing the two CPUs, writing “The Ryzen 7 7800X3D is 12% faster than the Core i9-13900K at 1080p gaming and 9% faster at 1440p.” It then rephrased two sentences from an article on Hardware Times. The original copy read:

“The Core i9-13900K snags a win in “A Plague Tale” both with and without ray-tracing. It’s marginally faster than the Ryzen 7 7800X3D with similar lows. The tables get turned in Assassins’ Creed Valhalla as the 7800X3D edges past the 13900K in Ubisoft’s latest title.”

And Google’s AI wrote it as:

“The Core i9-13900K is marginally faster than the Ryzen 7 7800X3D in ‘A Plague Tale’. However, the Ryzen 7 7800X3D edges past the Core i9-13900K in Assassins' Creed Valhalla.”

You can even clearly see in our screenshot that our sentence is quoted word-for-word in Google’s “featured snippet” box but not in the SGE box (which will likely replace the featured snippets in the future since SGE does basically the same thing). Yes, both the Hardware Times article and the Tom’s Hardware article that Google’s bot copied data from are listed as related links on the right side of the box.

When I asked Google about the fact that its SGE answers are frequently word-for-word copies drawn from the related-link articles, the company said that it picks those links because they “corroborate” the responses.

“Generative responses are corroborated by sources from the web,” the spokesperson said. “And when a portion of a snapshot briefly includes content from a specific source, we will prominently highlight that source in the snapshot.”

It’s pretty easy to find sources that back up your claims when your claims are copied word-for-word from those sources. While the bot could do a better job of laundering its plagiarism, its response would inevitably come from some human’s work. No matter how advanced LLMs get, they will never be the primary source of facts or advice and can only repurpose what people have done. LLMs are relatively good at generating “creative” works that are designed to be a mashup of existing ideas (e.g., “write me a haiku about farts”) but, until they are connected to robotic bodies that go out and gather information first-hand, they will never be a source of truth.

The company also said that “you can expand to see how the links apply to each part of the snapshot.” There’s an expand icon that sits inconspicuously in the upper right corner of the SGE box, above the third related link. And, if you decide to click it, you will see a clunky interface which puts the thumbnails for related links inline with the pilfered text.

Whether you click the expand button or not, SGE’s related links are not presented as citations, but as recommendations for further reading. If I start singing “Thriller” and then tell you that it’s an original song I wrote, it doesn’t matter if I also say “you might want to listen to a guy named Michael Jackson because he also makes some nice songs like this.” That’s still plagiarism and, even if it were not, we’d have a problem.

Plagiarism is a moral and academic term, not a legal one, and simply giving credit is not a defense against copyright infringement. You can’t run a business selling pirated Blu-ray discs and then, when busted, say “it’s all good, because I listed George Lucas as the director of Star Wars rather than substituting my own name in the credits.”

In answering my questions, Google’s spokesperson also compared the SGE box to featured snippets, noting that publishers today usually want their articles to appear in featured snippets because those links drive traffic back. While both experiences use content directly from publishers, featured snippets are short quotes with direct attribution and a very prominent link directly to the source. They do not pretend to be generated by an all-knowing AI, and they often give you just enough information to want to click through for more.

No Authority, No Trust

From a reader’s perspective, we’re left without any authority to take responsibility for the claims in the bot’s answer. Who, exactly, says that the Ryzen 7 7800X3D is faster and on whose authority is it recommended? I know, from tracing back the text, that Tom’s Hardware and Hardware Times stand behind this information, but because there’s no citation, the reader has no way of knowing. Google is, in effect, saying that its bot is the authority you should believe.

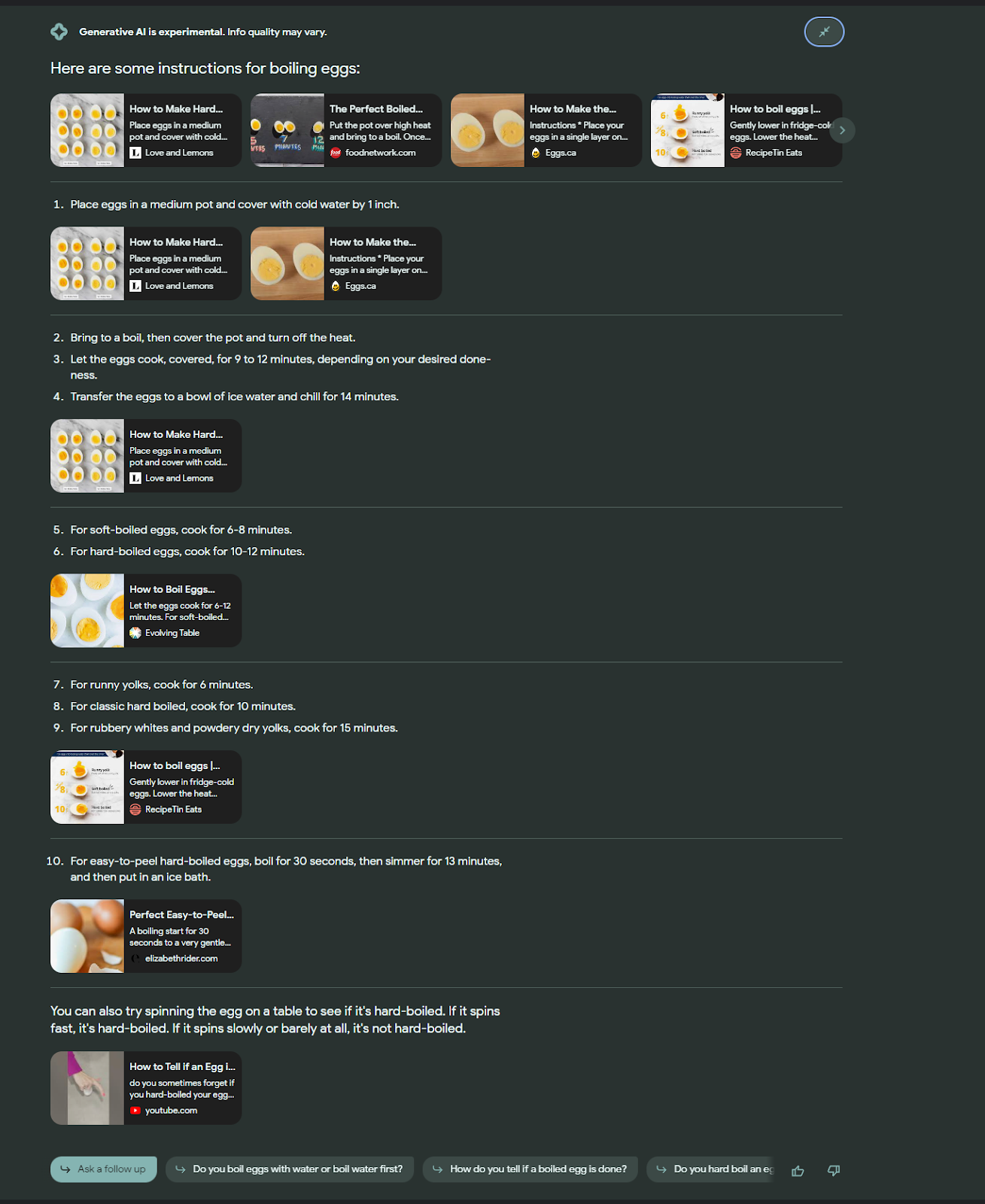

The fallacy underlying Google SGE is the false belief that a bot can have authority in the first place. Until the bot grows a pair of hands and opens its own lab space, it will never test CPUs. Until it opens a kitchen, it will never have its own family recipes. The only thing it can cook up is a plagiarism stew.

Relying on an unsourced bot as the end-all, be-all authority stands in direct contradiction to Google’s stated emphasis on E-E-A-T (Experience, Expertise, Authoritativeness and Trustworthiness), a standard it uses to decide which websites and authors should rank highly in organic search.

It makes total sense that someone who has been reviewing CPUs for 15 years on a website that specializes in CPUs should have their AMD Ryzen review rank higher than someone with no authority on the topic. Unfortunately, when it comes to Google’s own AI author – a faceless entity that has no experience doing anything – the rules go out the window.

Mish-Mash Plagiarism Leads to Poor Answers

At least the result we got when asking which CPU was faster was accurate. However, by mashing up text from different sources without identifying the source of each sentence or bullet point, Google is offering incorrect information that often contradicts the source material it copied from, or contradicts itself.

For example, I searched for “ThinkPad X13 AMD Review,” because I was interested in seeing what reviewers thought of Lenovo’s ThinkPad X13 laptop with an AMD processor inside. The Google bot wrote its own mini-review, complete with bulleted pros and cons for the ThinkPad X13, while grabbing sentences and bullet points from at least four different articles, including a review from Laptop Mag, a review from Tom’s Hardware, another review from Notebook Check and a blog post from LaptopOutlet – which is a store that had about 100 words on the product.

The image below shows the result, along with pointers to where the SGE took its content from.

Aside from being plagiarism and a slap in the face to the writers who did the actual work of testing and using this laptop, Google’s answer has a lot of issues. First of all, the answer refers to the ThinkPad X13 Gen 3 (the latest version with an AMD CPU), but the reviews it draws from cover the Gen 1 and Gen 2 versions of the product, which are not the same.

While Laptop Mag and Tom’s Hardware both praised the laptop’s keyboard and durable design, both sites described the battery life as “lackluster” or “subpar,” while Google lists “Long battery life” as a pro. The bot clearly got the battery life pro from another site, but by mixing advice from different sources, Google is presenting readers with a very inaccurate picture.

Also, since the bot doesn’t cite sources, the reader has no way to know who thought it had long battery life, whether that came from a reputable outlet and how they tested. One of the sources, LaptopOutlet, is a store that sells laptops and doesn’t do any benchmark testing. Should its claims be given equal weight to those of journalists who actually do test and aren’t actively trying to sell the product? Like most LLMs, Google’s SGE bot doesn’t seem to care whether it’s giving you the truth or just mashing sentences together in a way that seems convincing.

Giving Faulty Medical Advice

The Google SGE bot is so careless in its plagiarism mashups that it also gives incorrect medical advice that has been drawn from a variety of sources. For example, I asked: “do I need a colonoscopy?” and it gave me the following answer:

I highlighted the text in blue because it is dangerously wrong. Google’s bot says that “the American Cancer Society recommends that men and women should be screened for colorectal cancer starting at age 50.” However, the American Cancer Society’s own website says that screenings should start at age 45, so this misleading “fact” probably came from elsewhere.

There’s also a bulleted list of “reasons to have a colonoscopy” that doesn’t include “routine screening,” implying that you should get the procedure only if you have symptoms. The bulleted list is copied word-for-word from an article on an Australian Government health site called BetterHealth. The article actually lists “screening and surveillance for colorectal cancer” as a reason, but Google’s bot decided not to copy that fact.

Even if all the facts in the colonoscopy answer were clear and correct, they are not attributed to anyone. So why on earth should you trust them and whom do you blame when you follow this advice – for example, delaying your screening to age 50 – and something bad happens? By claiming content as its own, Google is acting as a publisher, which likely opens it up to lawsuits.

Keeping You On Google.com, Killing the Open Web

Though Google is telling the public that it wants to drive traffic to publishers, the SGE experience looks purpose-built to keep readers from leaving for external sites, unless those external sites are e-commerce vendors or advertisers. In some queries – “screenshot in windows,” for example – there is a detailed answer but no related links at all. Never mind that there are tons of articles that give you a lot more detail about how to take a screenshot.

If Google were to roll its SGE experience out of beta and make it the default, it would be detonating a 50-megaton bomb on the free and open web. Many publishers, who rely on Google referrals for the majority of their visits, would fold within a few months. Others would cut resources and retreat behind paywalls. Small businesses that rely on organic search placement to sell their products and services would have to either pay for advertising or, if they cannot afford it, close up shop.

Eventually, even hobbyists who either run not-for-profit websites or post advice on forums would likely stop doing it. Who wants to write, even for fun, if your words are going to be stolen and no one is going to read your copy? Would you answer someone’s programming question on Stack Overflow if your contribution would just be reworded and spat out by Google, without ever mentioning your name or the post itself?

Not an AI Issue: An Anti-Competitive Issue

This isn’t a case of artificial intelligence outsmarting human writers or providing a better experience. In fact, the method of publishing is incidental to the problem. If it rolls the current SGE experience out, Google would be leveraging its monopoly position to push its own content over and above everyone else’s. The company could hire an army of unskilled writers to copy and paste content from third-party websites, sometimes rewording it, instead of using an AI. The outcome would be the same.

There’s no doubt that Google’s AI will get better, but get better at what exactly? It will likely do a better job of rephrasing content so that it’s harder to find the original source it copied from. It will do a better job of offering information that’s up-to-date and logically consistent with itself. However, because it just grabs other people’s ideas without citing the source, there’s no authority behind anything it says.

The end result of Google SGE going live as the default search experience would be a weaker, more siloed Internet, but likely a wealthier Google. The company would increase its time-on-site, ad revenue and ecommerce referrals. It would also please investors, who want to see it compete with OpenAI and Bing. Some readers may grouse about the quality of the information, which can be outdated, false or word-for-word plagiarized, but taking up the entire first screen of results will be enough for Google to grab a huge percentage – if not the majority – of its current outbound clicks.

Many people to whom I have described or shown Google SGE can’t believe that the company would roll such a dangerous, poor-quality and web-breaking experience out to everyone. We can hope that the final product won’t take up as much screen real estate as what we’re seeing today. But Google is already making this the daily search experience for anyone who, like me, signs up for the beta. And it has every economic incentive to make this the new default experience for 91 percent of the web’s searches.

What Publishers Can Do, What Users Can Do

Anyone who publishes on the web and needs people to actually read their work is in a precarious position because of Google’s SGE. Almost every publication desperately needs to keep getting referrals from Google, so they can’t opt out of being indexed and having their data scraped. But if Google makes SGE the default search experience, the number of Google referrals may fall so sharply that they can’t keep the lights on.

Bing took only a few months to go from having its AI Chat in a limited beta to it being available to everyone. If Google follows a similar timeline, it could go from being a search engine to a zero-click plagiarism engine by this fall.

Publishers and publishing associations are still grappling with what AI plagiarism could do to their businesses. The News / Media Alliance, an industry group that represents magazines and newspapers, published a set of AI principles that states “The unlicensed use of content created by our companies and journalists by GAI systems is an intellectual property infringement: GAI systems are using proprietary content without permission.”

Getty Images is suing Stability AI to prevent the company from using its copyrighted images in training data. The image library has even asked a UK court to block sales of the AI system in that country. IAC Media Chairman Barry Diller has advocated for media companies to sue AI vendors over the unauthorized use of training data.

Will publishers sue Google over what it’s doing with SGE? There’s an argument that the word-for-word copying of information from websites without permission is a form of copyright infringement, even if the source was cited. However, we haven’t seen this litigated in court yet. And many companies, needing whatever traffic they will still get from Google, would want to avoid getting on the company’s bad side.

Companies could band together, through trade associations, to demand that Google respect intellectual property and not take actions that would destroy the open web as we know it. Readers can help by either scrolling past the company’s SGE to click on organic results or switching to a different search engine. Bing has shown a better way to incorporate AI, making its chatbot the non-default option and citing every piece of information it uses with a specific link back (the links aren’t very prominent, however).

In the end, if Google follows through with its current iteration of SGE, it will damage the quality of its own service. The content that the bot trains on would get worse and worse as more quality publishers left the open web. Eventually, users would start looking for a service that provides better answers. But by that time, the damage done to the entire web information ecosystem could be irreparable.

Note: As with all of our op-eds, the opinions expressed here belong to the writer alone and not Tom's Hardware as a team.

Avram Piltch is Managing Editor: Special Projects. When he's not playing with the latest gadgets at work or putting on VR helmets at trade shows, you'll find him rooting his phone, taking apart his PC, or coding plugins. With his technical knowledge and passion for testing, Avram developed many real-world benchmarks, including our laptop battery test.

-

Alvar "Miles" Udell, quoting the article: "Bing has shown a better way to incorporate AI, making its chatbot the non-default option and citing every piece of information it uses with a specific link back (the links aren’t very prominent, however)."

Bing's source links are cited in-line as superscript note numbers, referenced at the bottom with the primary site name, similar to Wikipedia. Mousing over the source links brings up the page title, and in the Bing app it loads source previews.

How is that not prominent without being invasive?

I guess it’s back to the old days because there is no good search engine anymore. It’s all BS and you better know what it is you’re looking for and dig deep and hard.

I don’t use stupid bots, and now I have to ignore all the garbage they generate.

Math Geek: I'm part of the non-Google-using 9%, but this will def keep me permanently in that 9%.

I currently use DuckDuckGo and find it a good substitute. Comparing it to Google searches side by side over a couple years shows me I'm not missing anything by not using Google. Well, I am missing a lot of ads n paid links, but that's about it. I find what I need on DuckDuckGo.

setx: If only you put the same effort into reviewing hardware...

And your "best <anything>" links that you just have to put in almost all articles are exactly the same as what Google is doing: they are best for you, not the users.

lmcnabney: Not sure how AI creates content is any different than how humans do it. The algorithm just lives a human life looking at other people's art before synthesizing its own. Artists just don't admit IP theft when their content is clearly influenced by others.

USAFRet:

lmcnabney said: "Not sure how AI creates content is any different than how humans do it. The algorithm just lives a human life looking at other people's art before synthesizing its own. Artists just don't admit IP theft when their content is clearly influenced by others."

This AI isn't creating anything.

It is grabbing other people's work, conflating that into a mostly human-sounding thing, and claiming it as its own content.

Plagiarism by a human is bad. This is worse.

setx:

USAFRet said: "It is grabbing other people's work, conflating that into a mostly human-sounding thing, and claiming it as its own content."

Surprise: that's exactly what most "news" sites do nowadays (at least, hardware ones). They are rehashing official marketing info or other sites.

Sure, there are probably links to source stuffed somewhere, but amount of valuable information added is the same as AI if not even less.

USAFRet:

setx said: "Surprise: that's exactly what most 'news' sites do nowadays (at least, hardware ones). They are rehashing official marketing info or other sites. Sure, there are probably links to source stuffed somewhere, but amount of valuable information added is the same as AI if not even less."

2 things....

Most get their content from a known content distributor. And pay for the content.

Also, they generally reference where they got it from.

This thing is grabbing text from elsewhere, and claiming it as original content.

Straight up plagiarism. Even worse, plagiarism from a non human.

setx:

USAFRet said: "Most get their content from a known content distributor. And pay for the content."

It looks more like "get paid" than "pay for" (at least for hardware news).

USAFRet:

setx said: "It looks more like 'get paid' than 'pay for' (at least for hardware news)."

That too.

But at least that is a bit of back and forth.

Rather than just stealing and claiming as its own.