IBM Proposes Carbon Nanotubes Instead of Silicon for Chips

IBM researchers believe they have found a way to overcome the physical limits on shrinking silicon in future computer chips. The company suggests that carbon nanotubes are the key to smaller transistors, as the material may be able to replace silicon at some point.

According to the company, it was able to produce "10,000 working transistors made of nano-sized tubes of carbon" and place them "precisely" on a single chip using "standard semiconductor processes". The placement density was one billion carbon nanotubes per square centimeter. Of course, 10,000 transistors are a far cry from the more than 1 billion transistors that are placed on today's CPUs. The precision rate of 99.8 percent appears close to the 99.999 percent required to reach 1 billion transistors, but that last 0.199 percentage points will be harder to achieve than the first 99.8 percent.
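As a rough back-of-the-envelope check, the gap between the two precision figures can be put in absolute numbers. The sketch below assumes that "precision rate" means the share of transistors placed correctly and that every placement fails independently; IBM's statement confirms neither, and the function name is purely illustrative.

```python
from decimal import Decimal


def expected_misplaced(transistors: int, precision_percent: str) -> Decimal:
    """Expected number of misplaced transistors at a given precision rate.

    Assumption (not from IBM): "precision" is the share of transistors
    placed correctly, and each placement is independent of the others.
    The percentage is passed as a string so Decimal keeps it exact.
    """
    miss_rate = (Decimal(100) - Decimal(precision_percent)) / Decimal(100)
    return transistors * miss_rate


# The 10,000-transistor demo chip at the reported 99.8 percent
demo_chip = expected_misplaced(10_000, "99.8")

# A hypothetical 1-billion-transistor CPU at both precision rates
cpu_today = expected_misplaced(1_000_000_000, "99.8")
cpu_target = expected_misplaced(1_000_000_000, "99.999")

print(int(demo_chip), int(cpu_today), int(cpu_target))
```

Under these assumptions, 99.8 percent leaves about 20 misplaced transistors on the demo chip but about 2 million on a billion-transistor CPU, and even 99.999 percent would still leave about 10,000.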

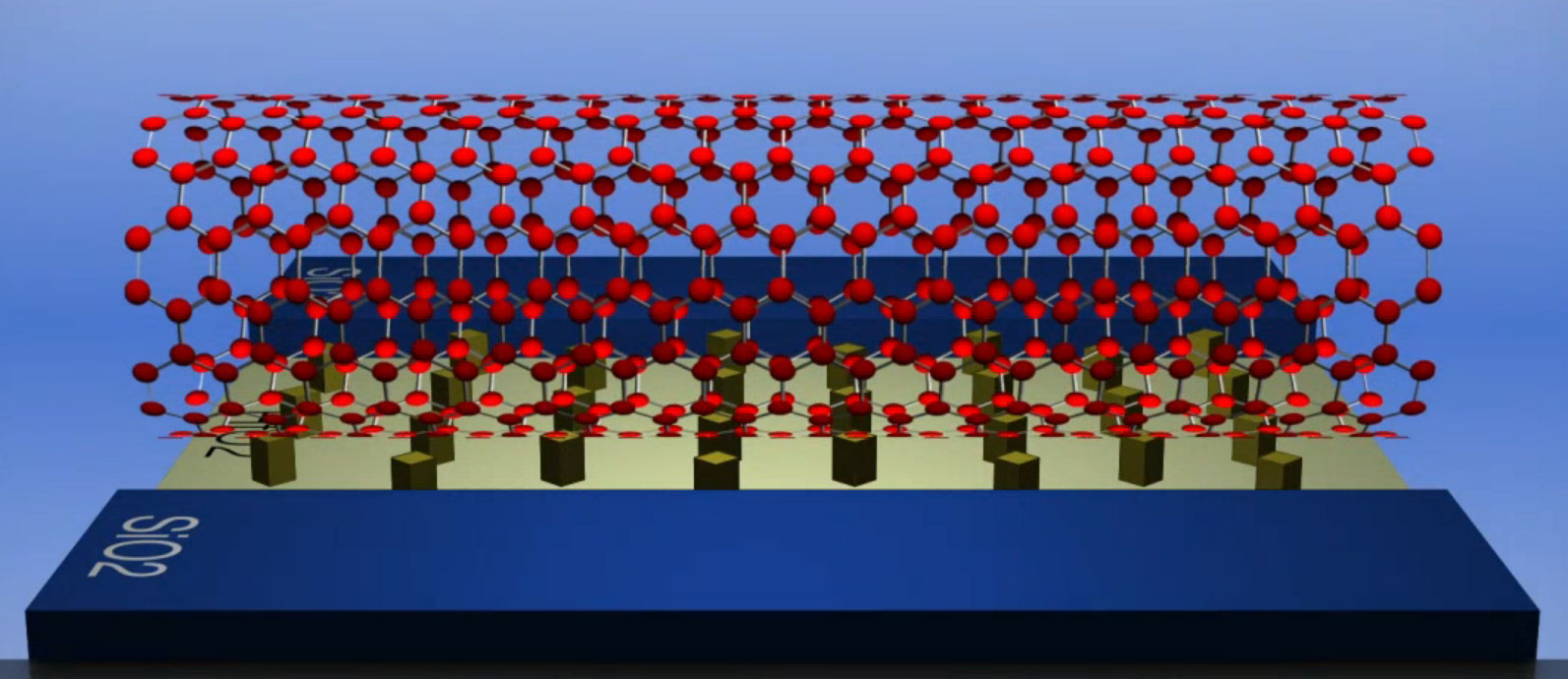

Nevertheless, IBM's announcement is remarkable and the company states that there is reason to believe that carbon nanotube transistors are likely to "replace and outperform silicon technology". In its research, IBM said it was able to position the carbon nanotubes by creating a circuit pattern on a substrate, with the pattern made of chemically modified hafnium oxide (HfO2) and the rest of the surface made of silicon oxide (SiO2). The added carbon nanotubes attached themselves to the HfO2 via a "chemical bond", IBM said.

Carbon transistors are nowhere near commercial production, but IBM clearly sees the technology as a viable approach to building transistors that are "a few tens of atoms across". The company said that the material itself is also more attractive than silicon because electrons can move more easily in carbon transistors than in silicon-based devices, which would result in faster chips.

Wolfgang Gruener is an experienced professional in digital strategy and content, specializing in web strategy, content architecture, user experience, and applying AI in content operations within the insurtech industry. His previous roles include Director, Digital Strategy and Content Experience at American Eagle, Managing Editor at TG Daily, and contributing to publications like Tom's Guide and Tom's Hardware.

ksampanna At what point in the process shrink node do silicon transistors start creating problems?

dark_knight33 No matter what, you can't shrink a transistor past one atom.

http://www.sciencedaily.com/releases/2012/02/120219191244.htm

Miniaturization has taken us quite far from the early 80's, but it's time to start looking towards other innovations to improve computing power. At some point, once nano structures have bottomed out in size, we will need to go back to clock speed and IPC to improve performance on a classical (i.e. non-quantum) computer. Maybe photonics hold the key here, but it's getting to be time for Intel to pull something truly revolutionary out from behind the R&D curtain.

bison88 Intel has plans up to 2018-2020 for shrinking down to 5nm, which given their R&D budget I'm sure they can accomplish. This is definitely a positive sign well before that time frame to have this tech already being worked with and looking much closer to prime time.

Kudos to IBM. You may be a dinosaur, but you've still got the R&D curve, and last I heard they were working on a 1Tbps Optical Interconnect for CPU bandwidth.

http://www.extremetech.com/computing/121587-ibm-creates-cheap-standard-cmos-1tbps-holey-optochip

Let the good times keep rollin :D

acadia11 IBM rules when it comes to research. Intel implements and markets tech better, but IBM wins hands down at creating it. Now if we could just weave that space elevator.

acadia11 Quoting ksampanna: "At what point in the process shrink node do silicon transistors start creating problems?"

At 5nm, you start getting funny behavior: not just plain old electron bleeding, but effects like quantum tunneling.

punahou1 This research is critical because with silicon, Moore's Law will hit a physical limit in the next 20 years or so. Maybe more important is the Bell Labs research into organic technology, as this has the potential to extend Moore's Law by several hundred years.

TeraMedia At a certain point, research projects move from being "throw some $ at it" activities to being "bet the farm on it" business-changers. A company that thrives on risk (Intel, Boeing, Lockheed, AMD could probably be counted in this list) will deploy research sooner, while a company that is risk-averse will avoid making changes even when that course of action might lead to reduced profits or its eventual downfall.

Unfortunately, IBM doesn't quite fall into the Intel/Boeing/Lockheed camp. They don't bet the company each time they design a new jumbo-jet, or construct a new chip-Fab, or design a prototype warplane. And even Intel doesn't risk as much as these other companies. If they did, or IBM did, we might see commercial nanotube transistor-based ICs sooner.