IBM Unlocks Quantum Utility With its 127-Qubit "Eagle" Quantum Processing Unit

There's little quantum without standard, it seems.

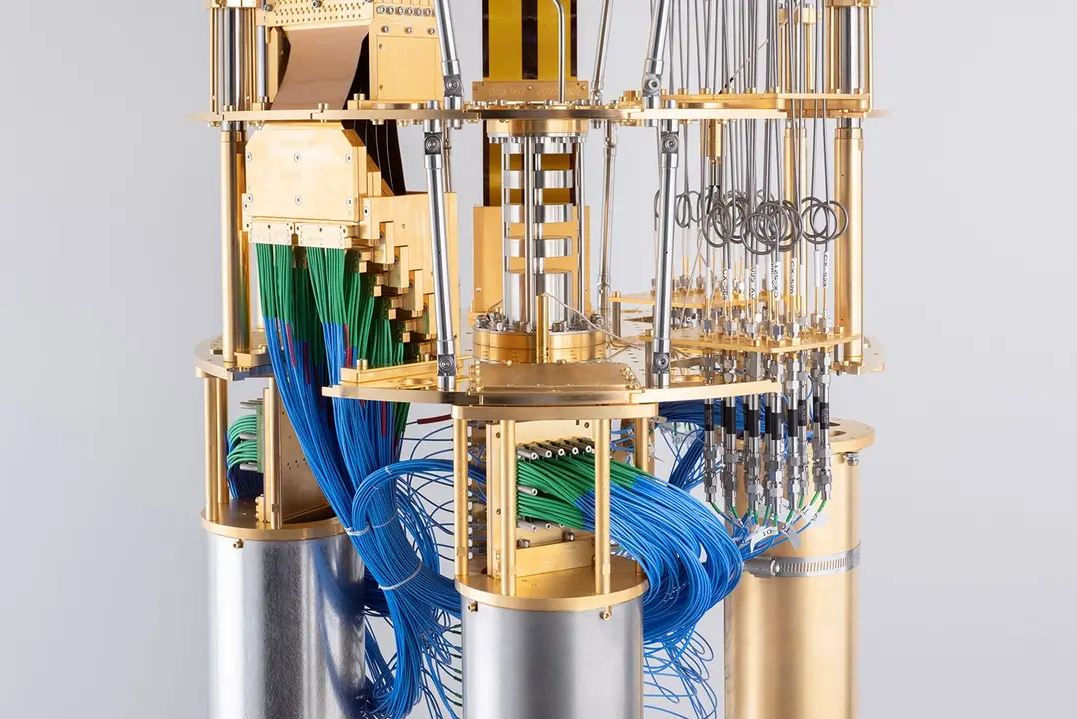

A team of IBM researchers, working with UC Berkeley and Purdue University, has managed to extract useful quantum computing out of one of today’s NISQ (Noisy Intermediate-Scale Quantum) computers. The team used one of IBM’s latest Quantum Processing Units (QPU), Eagle, to perform calculations that were expected to fail in the midst of qubit noise. However, using a clever feedback mechanism between IBM’s 127-qubit Eagle QPU and supercomputers at Berkeley and Purdue University, IBM managed to prove it could derive useful results from a noisy QPU. The door to quantum utility is open – and it has opened much earlier than expected.

Our NISQ-era quantum computers are roped-in to our standard supercomputers – the most powerful machines known to mankind, capable of trillions of operations per second. Powerful as they are, it’s a universal truth that when two subjects are roped together, they only move as fast as the slowest of them allows. And the supercomputer was already stretched thin for this experiment, using advanced techniques to keep up with the simulation’s complexity.

When the qubits’ simulation became too complex for the supercomputer to simply “brute force” the results, the researchers at UC Berkeley started using compression algorithms – tensor network states. These tensor network states are built from tensors, the higher-dimensional relatives of matrices. They are essentially data cubes, where the numbers that comprise the calculations are represented in a three-dimensional space (x, y, z) that’s capable of handling more complex information relationships and volumes than a more usual 2D solution – think of a simple Excel 2D table (x, y) and the many more rows you’d have to search through in that configuration if you had to consider another plane of information (z).
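To make the compression idea a little more concrete, here is a minimal NumPy sketch. It is not the Berkeley team's code, and it assumes the simplest possible case: a small quantum state is reshaped into a matrix across a single cut between two groups of qubits, and only the `chi` largest singular values are kept. Real tensor network methods repeat this kind of truncation cut by cut. The names `truncate_across_cut` and `ghz_state`, the 10-qubit size, and the value of `chi` are all illustrative assumptions.

```python
import numpy as np

def truncate_across_cut(state, n_left, n_right, chi):
    """Compress a pure state across one cut, keeping the chi largest singular values.

    Returns the normalized approximate state and how many numbers the
    compressed form needs (kept columns of U, rows of Vh, singular values).
    """
    matrix = state.reshape(2**n_left, 2**n_right)
    u, s, vh = np.linalg.svd(matrix, full_matrices=False)
    kept = min(chi, len(s))
    approx = (u[:, :kept] * s[:kept]) @ vh[:kept, :]
    approx = approx.reshape(-1)
    approx = approx / np.linalg.norm(approx)
    stored = kept * (2**n_left + 2**n_right + 1)
    return approx, stored

def ghz_state(n):
    """Equal superposition of all-zeros and all-ones: highly structured, so it compresses well."""
    state = np.zeros(2**n)
    state[0] = state[-1] = 1 / np.sqrt(2)
    return state

n = 10
ghz = ghz_state(n)
approx, stored = truncate_across_cut(ghz, 5, 5, chi=2)
fidelity = abs(np.vdot(ghz, approx)) ** 2

# A random state has no such structure, so the same truncation loses information.
rng = np.random.default_rng(0)
random_state = rng.normal(size=2**n)
random_state = random_state / np.linalg.norm(random_state)
approx_random, _ = truncate_across_cut(random_state, 5, 5, chi=2)
random_fidelity = abs(np.vdot(random_state, approx_random)) ** 2

print(f"full vector: {2**n} numbers, compressed: {stored} numbers")
print(f"GHZ fidelity: {fidelity:.6f}, random-state fidelity: {random_fidelity:.3f}")
```

The structured state survives the compression intact while the random one does not, which is the general trade-off: the more entangled the state, the more has to be kept to stay accurate.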

“The crux of the work is that we can now use all 127 of Eagle’s qubits to run a pretty sizable and deep circuit — and the numbers come out correct,”

Kristan Temme

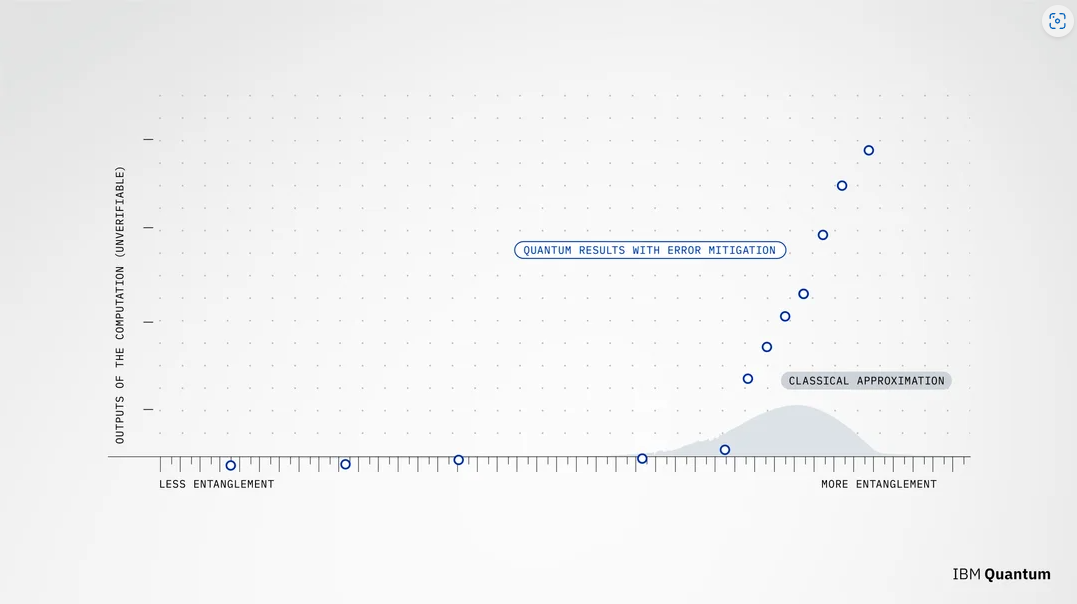

This means that there’s already some utility that can be extracted from NISQ quantum computers – there are problems where they can produce results that would be beyond the reach of standard supercomputers, at least in terms of time and money, or where the hoops required to obtain those results would make the effort bigger than the gain.

There’s now a back and forth happening between solutions given by our NISQ-era quantum computers that feature a few hundred qubits (at best), and our standard supercomputers that feature trillions of transistors. As the number of available, useful qubits increases, circuits deeper than the depth of 60 used in the paper will be explored. As the number and quality of qubits increase, standard supercomputers too will have to keep up, crunching the numbers and verifying as deep a queue of quantum computing’s results as they possibly can.

“It immediately points out the need for new classical methods,” said Anand. And they’re already looking into those methods. “Now, we’re asking if we can take the same error mitigation concept and apply it to classical tensor network simulations to see if we can get better classical results.”

Essentially, the more accurately you can predict how noise evolves in your quantum system, the better you know how that noise poisons the correct results. The way you learn how to predict something is simply to prod at it and observe what happens enough times that you can identify the levers that make it tick.


Some of these levers have to do with how and when you activate your qubits (some circuits use more qubits, others require those qubits to be arranged into more or fewer quantum gates, with more complex entanglements between certain qubits…). IBM researchers had to learn precisely how much noise, and what kind, resulted from moving each of these knobs within its 127-qubit Eagle QPU – because if you know how to introduce noise, then you begin to control it. If you understand how it appears in the first place, you can account for it, which in turn allows you to try to prevent it or take advantage of it.

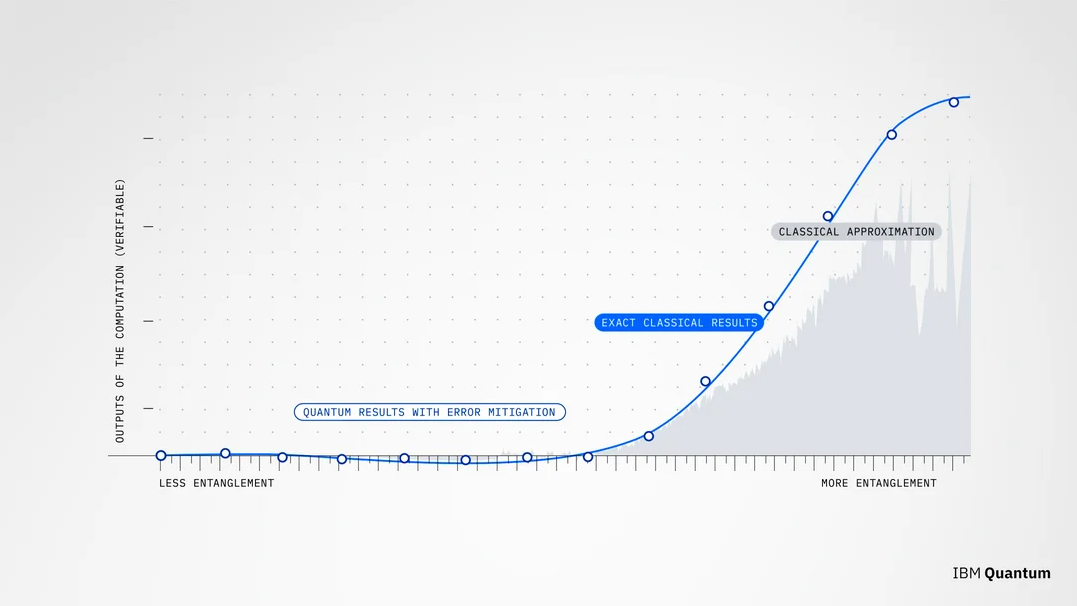

But if you’re only running calculations on your noisy computer, how can you know those calculations are, well, correct? That’s where standard supercomputers – and the search for a ground truth – come in.

The IBM team got access to two supercomputing resources: Lawrence Berkeley National Lab’s National Energy Research Scientific Computing Center (NERSC) and the NSF-funded Anvil supercomputer at Purdue University. These supercomputers would calculate the same quantum simulations that IBM ran on its 127-qubit Eagle QPU – divvied up as needed within them, and in ways that would allow the results from both supercomputers to be compared. Now, you have a ground truth – the solution you know to be correct, achieved and verified by standard supercomputers. Now the light is green to compare your noisy results with the correct ones.

“IBM asked our group if we would be interested in taking the project on, knowing that our group specialized in the computational tools necessary for this kind of experiment,” graduate researcher Sajant Anand with UC Berkeley said. “I thought it was an interesting project, at first, but I didn’t expect the results to turn out the way they did.”

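Then it’s “just” a matter of solving a “find the differences” puzzle: once you realize how exactly the presence of noise skewed the results, you can compensate for its presence, and glean the same “ground truth” that was present in the standard supercomputers’ results. IBM calls this technique Zero Noise Extrapolation (ZNE): the noise is deliberately amplified in controlled steps, the results are measured at each noise level, and the trend is extrapolated back to the point of zero noise.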
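The extrapolation idea behind ZNE can be illustrated with a toy Python sketch. This is not IBM's implementation: the quantum hardware is replaced by a hypothetical stand-in function, `noisy_expectation`, which simply assumes the measured value shrinks exponentially as the noise is amplified. The ideal value, the decay rate, and the noise gain factors are all invented for illustration. What the sketch shows is only the general recipe: measure at several amplified noise levels, fit a curve, and read off the value at zero noise.

```python
import numpy as np

IDEAL_VALUE = 0.8   # assumed noise-free result (made up for this sketch)
DECAY_RATE = 0.35   # assumed noise strength at gain 1 (made up for this sketch)

def noisy_expectation(gain):
    """Stand-in for the hardware: the signal decays exponentially with noise gain."""
    return IDEAL_VALUE * np.exp(-DECAY_RATE * gain)

def extrapolate_linear(gains, values):
    """Fit a straight line through the measurements and evaluate it at gain 0."""
    slope, intercept = np.polyfit(gains, values, 1)
    return intercept

def extrapolate_exponential(gains, values):
    """Fit log(value) linearly in gain, then evaluate at gain 0 (needs positive values)."""
    slope, intercept = np.polyfit(gains, np.log(values), 1)
    return np.exp(intercept)

# Gain 1 is the device's natural noise; larger gains are deliberately amplified noise.
gains = np.array([1.0, 1.2, 1.6])
measured = noisy_expectation(gains)

unmitigated = measured[0]
linear_estimate = extrapolate_linear(gains, measured)
exponential_estimate = extrapolate_exponential(gains, measured)

print(f"unmitigated:        {unmitigated:.4f}")
print(f"linear ZNE:         {linear_estimate:.4f}")
print(f"exponential ZNE:    {exponential_estimate:.4f}")
print(f"ideal (assumed):    {IDEAL_VALUE:.4f}")
```

In this idealized setting the exponential fit recovers the assumed ideal value because the stand-in noise is exactly exponential, while the linear fit only gets part of the way there. On real hardware the noise does not follow a known curve this neatly, which is why learning an accurate noise model matters so much.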

It’s a symbiotic process: the IBM team responsible for the paper is also looking to bring its error mitigation techniques – and equivalents to Zero Noise Extrapolation – to standard supercomputers. Between raw power increase from the most recent hardware developments and algorithm and technique optimizations (such as the usage of smart compression algorithms), raw supercomputing power will grow, allowing us to verify our quantum computing work just that little bit further into the era of post-NISQ quantum computers and their deployment of quantum error correction.

That’s the moment where the rope breaks, and quantum will be relatively free of the need to verify its results with classical techniques. That’s what’s slowing quantum computing down (beyond the absence of error correction that will allow qubits to perform the calculations themselves, of course).

In an interview with Tom’s Hardware for this article, Dr. Abhinav Kandala, manager at Quantum Capabilities and Demonstrations at IBM Quantum, put it beautifully:

“... Even though you have a noisy version of that state you can measure what properties of that state would be in the absence of noise.”

Dr. Abhinav Kandala

Except with quantum, you can then increase the problem’s complexity beyond what supercomputers can handle – and because you have correctly modeled how noise impacts the system, you can still perform the cleanup steps on your noisy results… with some degree of confidence. The farther you are from the “conclusively truthful” results provided by standard supercomputers, the more likely you are to introduce fatal errors into the calculations that weren’t (and couldn’t be) accounted for in your noise model.

But as long as you can trust your results, you’ve actually delivered quantum processing capabilities that are useful, and beyond what can be achieved with current-gen, classical Turing machines like the supercomputer at Berkeley. It’s also beyond what was thought possible in our current NISQ (Noisy Intermediate-Scale Quantum)-era computers. And it just so happens that many algorithms designed for near-term quantum devices would be able to fit within the 127 qubits in IBM’s Eagle QPU, which can deliver circuit depths in excess of 60 steps “worth” of quantum gates.

Dr. Kandala then added: “What we're doing with error mitigation, that is, running short-depth quantum circuits and measuring what are called expectation values, measuring properties of the state: this is not the only thing that people want to do with quantum computers, right? I mean, to unlock the full potential, one does need quantum error correction, and the prevailing feeling was that for anything useful to be done, one can only access that once you have an error-corrected quantum computer.”
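The “expectation values” Dr. Kandala mentions are averages of a measured property over many repeated runs of the same circuit. As a rough illustration only, the Python sketch below computes two such averages from a dictionary of measurement counts. The counts are invented, the function names `z_expectation` and `zz_expectation` are hypothetical, and the convention that the leftmost character of each bitstring is qubit 0 is an assumption of this sketch rather than anything taken from IBM's software.

```python
def z_expectation(counts, qubit):
    """Average Pauli-Z value of one qubit: a measured 0 counts as +1, a measured 1 as -1."""
    shots = sum(counts.values())
    total = 0
    for bitstring, n in counts.items():
        # Assumption of this sketch: the leftmost character is qubit 0.
        total += n if bitstring[qubit] == "0" else -n
    return total / shots

def zz_expectation(counts, qubit_a, qubit_b):
    """Average of Z on two qubits multiplied together: +1 if the bits agree, -1 if not."""
    shots = sum(counts.values())
    total = 0
    for bitstring, n in counts.items():
        total += n if bitstring[qubit_a] == bitstring[qubit_b] else -n
    return total / shots

# Invented results of 1,000 runs of a two-qubit circuit that mostly yields 00 or 11.
counts = {"00": 480, "01": 20, "10": 30, "11": 470}

z0 = z_expectation(counts, 0)
z1 = z_expectation(counts, 1)
zz = zz_expectation(counts, 0, 1)

print(f"<Z0> = {z0}, <Z1> = {z1}, <Z0 Z1> = {zz}")
```

Each qubit on its own looks like a coin flip, but the two-qubit average is close to 1, showing that the outcomes are strongly correlated. Noise pulls values like this toward zero, and error mitigation aims to estimate what they would have been without it.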

“The critical piece was being able to manipulate the noise beyond pulse stretching,” said Dr. Kandala. “Once that began to work, we could do more complicated extrapolations that could suppress the bias from the noise in a way we weren’t able to do previously.”

ZNE is likely to become a staple of any quantum computing approach – error mitigation is an essential requirement for the error-prone NISQ computers we currently have and will likely be required even when we arrive at the doorstep of error correction – an approach that sees certain qubits tasked with functions related to correcting errors in other qubits’ calculations.

The work done by IBM here has already had an impact on the company’s roadmap – ZNE has that appealing quality of making better qubits out of those we already can control within a Quantum Processing Unit (QPU). It’s almost as if we had a megahertz increase – more performance (less noise) without any additional logic. We can be sure these lessons are being considered and implemented wherever possible on the road to a "million + qubits".

It's also difficult to ignore how this work showcases that there isn't really a race between quantum and classical: the future is indeed Fusion, to play a little with AMD's motto of old. That Fusion will see specific computing elements addressing specific processing needs. Each problem, no matter how complex, has its tool, from classical to quantum; and human ingenuity demands that we excel at using all of ours.

That proverbial rope between standard supercomputers and quantum computers only stretches so far – but IBM is finding cleverer and cleverer ways to extend its length. Thanks to this research, quantum computers are beginning to see that little bit ahead already. Perhaps Dr. Kandala will get to see what he hopes sooner than even he expects: the playground to quantum utility is now open ahead of schedule. Let's see what humans can do within it, shall we?

Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.

-

AndrewJacksonZA "'It immediately points out the need for new classical methods,' said Anand."

Am I the only person who paused a bit there for a second? #IYKYK

(Also, Anand's full name and introduction was used after only his surname, which is unusual in articles.)

-

Thanks for the news.

Yes traditionally, useful quantum computation can’t be done without fault tolerance, however, IBM’s paper does provide an important data point that demonstrates current quantum computers can provide value much sooner than expected by using error mitigation ( a total of 2,880 CNOT gates ? ).

Glad they focused on the "quantum Ising model" and tensor network methods, and the Pauli–Lindblad noise model for noise shaping in ZNE. And also shifting from Clifford to non-Clifford gates also helped provide a comparison between the quantum solution and the classical solution. But there are other techniques as well.

Actually, once QEC is attained, building fault-tolerant quantum machines running millions of qubits in a quantum-centric supercomputing environment, wouldn't be a far cry. It's about time we reach the ‘utility-scale' industry threshold.

Unfortunately, most claims about quantum advantage are usually based on either random circuit sampling or Gaussian boson sampling, but they are not considered to be as useful of an application, and there have been no useful applications demonstrating quantum advantage, since QCs are too error prone and too small.

Noise leads to errors, and uncorrected errors limit the number of qubits we can incorporate in circuit, which in turn limits the algorithm's complexity. Clearly error control is important.

We can also agree that realizing the full potential of quantum computers, like running Shor’s algorithm for factoring large numbers into primes, will surely require error correction.

IBM has actually done more error mitigation research than others. Its current roadmap shows a more detailed focus on error mitigation beginning in 2024 and leading to fault tolerance thence afterwards.

By the way, IBM isn’t claiming that any specific calculation tested on the Eagle processor exceeded the abilities of classical computers. Other classical methods may soon return MORE correct answers for the calculation IBM was testing.

-

bit_user Maybe this is an oversimplification, but it sounds to me like they're essentially calibrating their quantum computer. The very next question I have is whether you'd have to do this per-machine, or is simply doing it per-architecture sufficient? And if the former, do you periodically have to re-calibrate?

The answers to these questions seem key to how practical the technique would be.

Also, given that I assume they're using a limited type of computation for this calibration, would you be able to do it in a more cost-effective and energy-efficient way using FPGAs?

-

TechyIT223

AndrewJacksonZA said: "'It immediately points out the need for new classical methods,' said Anand." Am I the only person who paused a bit there for a second? #IYKYK (Also, Anand's full name and introduction was used after only his surname, which is unusual in articles.)

Is sajat his real name or Anand? Or maybe Anand is surname?

-

bit_user

TechyIT223 said: Is sajat his real name or Anand? Or maybe Anand is surname?

You guys are thinking of Anand Shimpi? I don't know what he's up to, these days, but he's not a quantum computing researcher. I'm sure those folks basically all have PhDs in particle physics.

-

TechyIT223

bit_user said: You guys are thinking of Anand Shimpi? I don't know what he's up to, these days, but he's not a quantum computing researcher. I'm sure those folks basically all have PhDs in particle physics.

Nope, I was not referring to that guy though. Usually most Indians basically swap/shift their username and surname in some cases I have seen.

So maybe ANAND is the first name and sajat his surname? But officially he is using his surname first. But I'm not sure... just a guess

-

AndrewJacksonZA

bit_user said: You guys are thinking of Anand Shimpi?

Lol, yeah, that's what popped into my head. And yes, I know. It was a nod to those of us who have been around for a while, especially since Tom's and Anandtech now share a corporate overlord.

-

TechyIT223

AndrewJacksonZA said: Lol, yeah, that's what popped into my head. And yes, I know. It was a nod to those of us who have been around for a while, especially since Tom's and Anandtech now share a corporate overlord.

Yeah. TomHW and Anandtech are both related. I think one of them is a sister concern