IBM Updates Quantum Roadmap for Scalability, Modularity, K-level Qubit Counts

Bringing in lessons from classical computing on system scalability

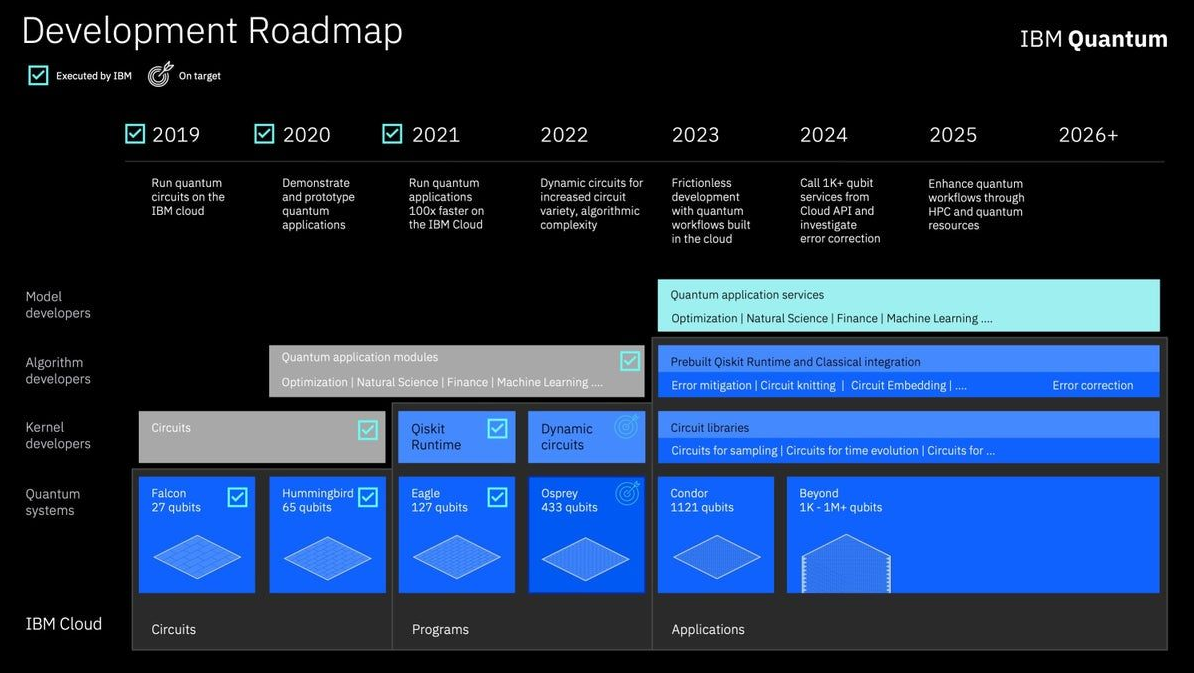

IBM has announced a revision of the quantum computing roadmap it first published in 2020, bringing a renewed focus on quantum computing scaling by doubling down on modularity and quantum-capable networking. In a day and age where delays are the norm, IBM's re-commitment to its quantum computing efforts instills confidence in the company's choice of framework, qubits, and software development, and it could lead to the so-called quantum advantage sooner than expected.

According to IBM, its revised quantum computing vision will focus on three crucial pillars: robust, modularly scalable quantum hardware; the optimized quantum software required to run it; and enabling growth via community and research partnerships with quantum-focused groups.

The company's Qiskit Runtime platform, a programming model that enables researchers and developers to bridge the gap between classical and quantum workloads, is at the forefront of those efforts. And it's interesting to note that IBM expects quantum applications to remain in the prototype stage until at least 2024.

“In just two years, our team has made incredible progress on our existing quantum roadmap. Executing on our vision has given us clear visibility into the future of quantum and what it will take to get us to the practical quantum computing era,” said Darío Gil, Senior Vice President, Director of Research, IBM. “With our Qiskit Runtime platform and the advances in hardware, software, and theory goals outlined in our roadmap, we intend to usher in an era of quantum-centric supercomputers that will open up large and powerful computational spaces for our developer community, partners and clients.”

IBM's renewed roadmap introduces a number of additional quantum computing chips developed on the basis of this modular approach. It adds detail where there was previously none, introducing us to the 2024 and 2025 products IBM expects to deliver. These will bring about increases in raw qubit counts, as expected. In 2024, IBM should introduce its Flamingo platform, boasting 1,386+ qubits. It does seem that 2025 is when quantum computing developments might reach critical mass, as that's when IBM expects to introduce its "Kookaburra" QPU (Quantum Processing Unit), encapsulating a staggering 4,158+ qubits in a single quantum package. For reference, IBM's current QPU, Eagle, has only 127 qubits.

IBM's roadmap showcases the benefits of modular scaling. Kookaburra seems to triple the number of interlinked quantum chips, from three chips deployed with Flamingo to Kookaburra's nine. It's clear that IBM still isn't sure where in the qubit count kingdom Flamingo will end up, but it seems to have established 1,386 qubits as the minimum density. Advances in error-correction could prove crucial here, as reducing the number of qubits in active error-correction duties is one surefire way of increasing "useful qubit" counts.
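The scaling figures above can be checked with a little arithmetic. The sketch below is only an illustration: the qubit totals and chip counts are the ones quoted in this article, while the per-chip figure is an inference from dividing them, not a number IBM has published here. The function name `qubits_per_chip` is hypothetical.

```python
# Minimum qubit totals and chip counts as quoted in the article.
FLAMINGO_QUBITS = 1386
FLAMINGO_CHIPS = 3
KOOKABURRA_QUBITS = 4158
KOOKABURRA_CHIPS = 9


def qubits_per_chip(total_qubits: int, chips: int) -> float:
    """Average qubits per chip, assuming qubits are split evenly (an assumption)."""
    return total_qubits / chips


flamingo_per_chip = qubits_per_chip(FLAMINGO_QUBITS, FLAMINGO_CHIPS)
kookaburra_per_chip = qubits_per_chip(KOOKABURRA_QUBITS, KOOKABURRA_CHIPS)

# How much the qubit total and the chip count grow from Flamingo to Kookaburra.
qubit_growth = KOOKABURRA_QUBITS / FLAMINGO_QUBITS
chip_growth = KOOKABURRA_CHIPS / FLAMINGO_CHIPS

print(f"Flamingo:   {flamingo_per_chip:.0f} qubits per chip")
print(f"Kookaburra: {kookaburra_per_chip:.0f} qubits per chip")
print(f"Qubit total grows {qubit_growth:.0f}x, chip count grows {chip_growth:.0f}x")
```

If the even-split assumption holds, both platforms work out to the same per-chip count, which would mean the jump to 4,158+ qubits comes entirely from linking more chips rather than from denser ones.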

But how is this modularity achieved? IBM has to solve at least three problems. The first is building systems that can communicate classically with multiple QPUs and parallelize operations across them. The company expects its work in this field to bring about improvements in error correction while also accelerating quantum computing workload orchestration.

The second is getting these QPUs connected and talking among themselves. To that end, IBM is developing chip-level couplers that enable short-range inter-chip communications, essentially an MCM (Multi-chip Module) approach to quantum. Much like AMD's rumored approach for its Radeon 7000-series, the aim is to have multiple smaller, easier-to-manufacture chips working in tandem as seamlessly and abstractedly as possible. Why ignore lessons learned from classical semiconductors?

The third component shifts focus toward full scalability by providing quantum communication links between individual quantum processors. This is the technology that will enable IBM to scale from a single Kookaburra-class chip (with its nine interlinked, MCM-like QPUs) to server-level scaling, by enabling multiple Kookaburra chips to be linked together. It's currently unclear which technologies IBM will pursue in this scaling push, but recent advances in quantum photonics make them likely candidates.

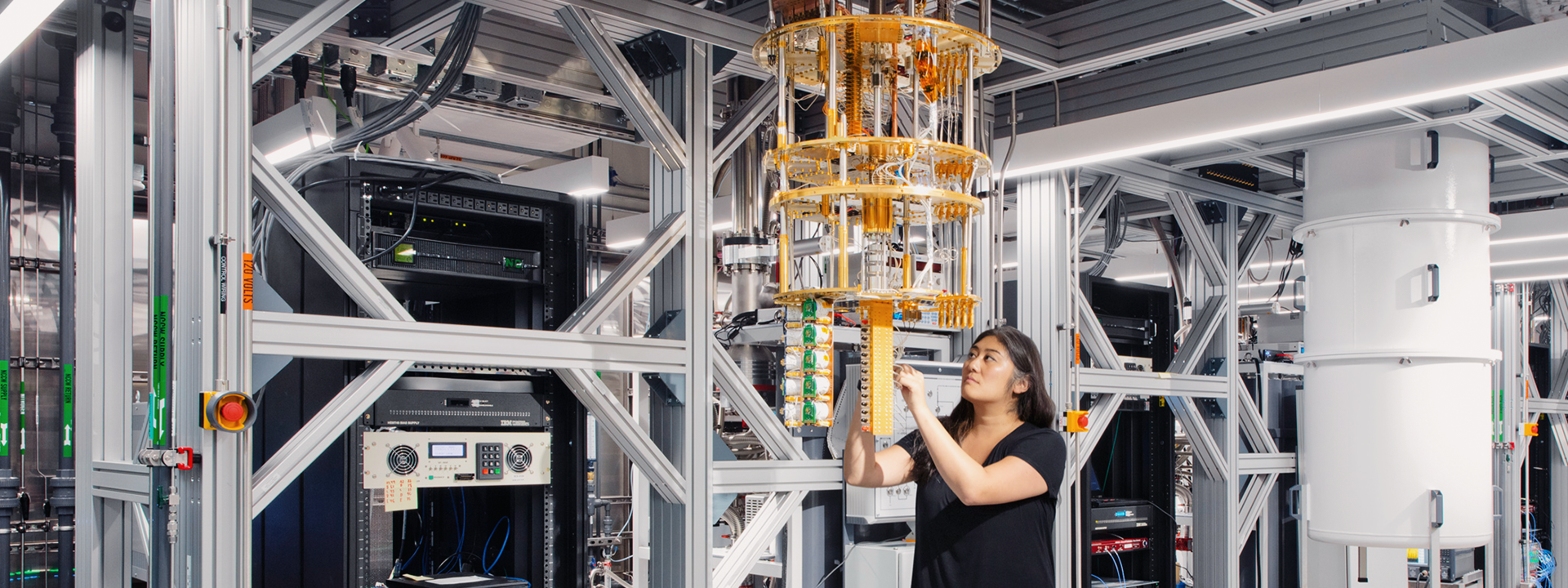

All of these technologies and advancements are necessary if IBM wants to achieve its 2025 goal of packing 4,000+ qubits in a single QPU. The company knows it's in a breakneck race to ship and corner the quantum computing market with its chosen approach to quantum computing, and it has years of ecosystem building behind it. IBM is looking to first integrate its roadmap developments into its IBM Quantum System Two, which will serve as the showcase hardware and testing ground for the new technology.

It remains to be seen if IBM will be able to cross the finish line on the back of its quantum computing technologies. Microsoft is chasing a wholly different path with its search for the famed topological superconductor qubits, as are a number of other companies such as LiteOn, Ampere, Rigetti, and IonQ. And unlike superimposed quantum states, the market only allows for victors or losers.

Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.

husker: Just in case there is a theoretical physicist reading this, I'm going a bit off-subject at first, but I'll bring it back to quantum computing. I used to think that we could circumvent the limit on faster-than-light communication by using entangled quantum particles. As you may know, entangled particles communicate with each other instantaneously without regard to distance. We change the spin on one of the entangled particles on Earth, and someone on Neptune with the other of the entangled pair sees the spin change on his particle instantaneously. Expand on this concept and soon you're sending 1's and 0's back and forth and we have instantaneous communication. But any learned physicist (as well as Wikipedia) says "No, this will not work because reading the spin may actually change the spin and blah, blah, blah... science". Okay, fine. I'll accept that without fully understanding. Then how is it that we can have entangled quantum particles as a key component of quantum computers from which we read values? Wouldn't the faster-than-light entangled particles be a much simplified version of a quantum computer with a count of just a couple of "useful" qubit pairs? What am I missing?