IEEE to Develop Standard for AV Decision-Making Based on Mobileye RSS

A formal decision-making model for autonomous vehicles preferred over black-box algorithms

IEEE will develop a standard for safety considerations in automated vehicle (AV) decision-making, the first version of which it aims to publish within a year. The workgroup will be led by Intel Senior Fellow Jack Weast and will take Mobileye’s RSS model as a starting point, Intel announced on Thursday.

The Institute of Electrical and Electronics Engineers (IEEE) has approved the proposal for a model in automated vehicle decision-making (IEEE 2846). It aims to develop a rules-based, mathematical model that will be technology-neutral and verifiable through math. It will also be adjustable to allow for regional customization by local governments. The proposed standard will also include a test methodology to assess conformance with the standard.

Such a standard is needed to regulate and assess the safety of AVs. Some automotive SoC makers are touting hundreds of TOPS for their systems. Those chips run deep learning artificial intelligence algorithms. While self-driving systems are very compute-hungry, a solely deep learning-based approach in effect creates a black box that can only be validated with statistical evidence, gathered over perhaps millions of miles of driving or simulation.

A formal rules-based model would provide an alternative means to verify AV decision-making and to ensure the safety of self-driving systems, as opposed to those last-resort statistical arguments. Perhaps as evidence that this is necessary, GM Cruise and Daimler both postponed their robotaxi plans this year, citing safety concerns, which proved to be a harder challenge than the companies had expected.

While IEEE has not announced any participants in the workgroup (which is open to anyone), it will be led by Intel Senior Fellow Jack Weast, vice president of Automated Vehicle Standards at Mobileye. As a starting point, Intel will bring its RSS (Responsibility-Sensitive Safety) model, which it published in late 2017 as exactly such an industry proposal. As Intel describes it:

“Open and technology-neutral, RSS defines what it means for a machine to drive safely with a set of logically provable rules and prescribed proper responses to dangerous situations. It formalizes human notions of safe driving in mathematical formulas that are transparent and verifiable.”

Without going into the math, RSS adheres to five rules, the first four of which define what a dangerous situation is and the proper response:


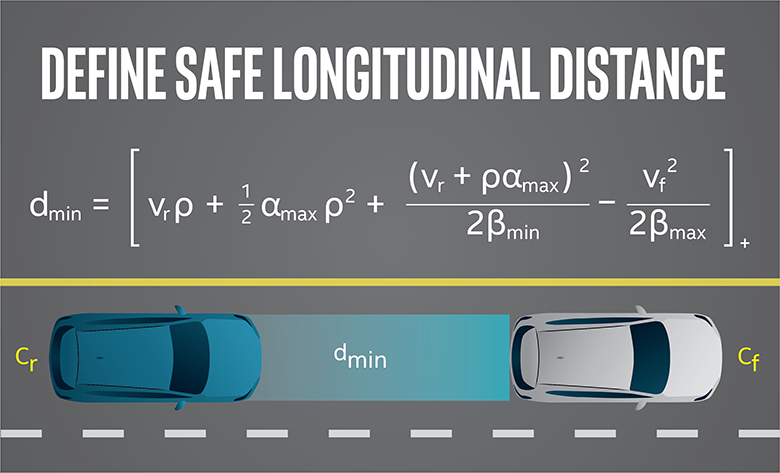

- Do not hit the car in front (longitudinal distance)

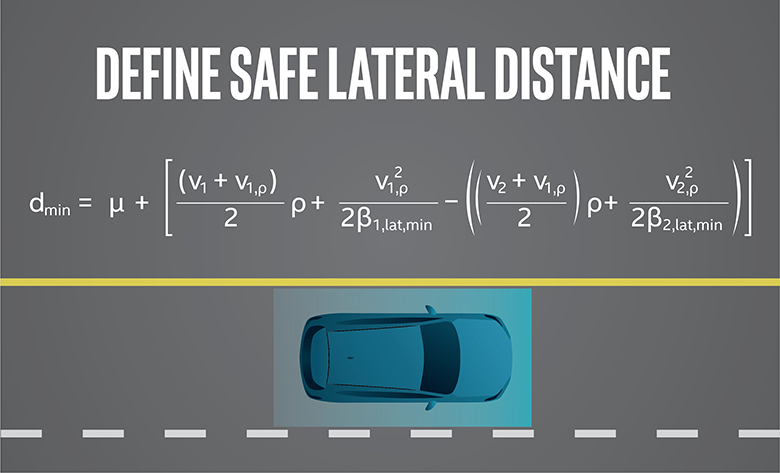

- Do not cut in recklessly (lateral distance)

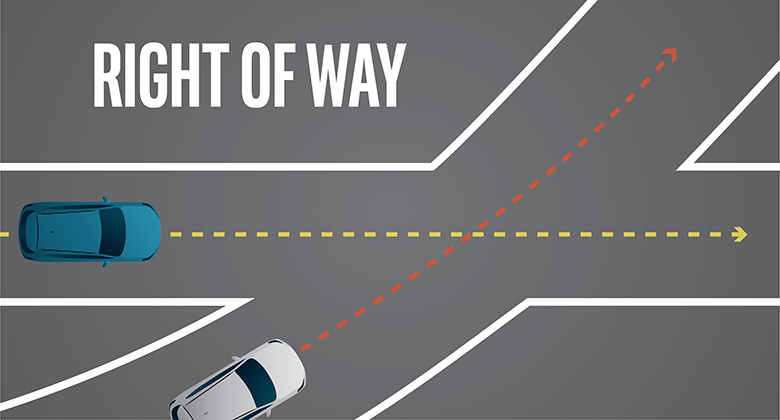

- Right of way is given, not taken

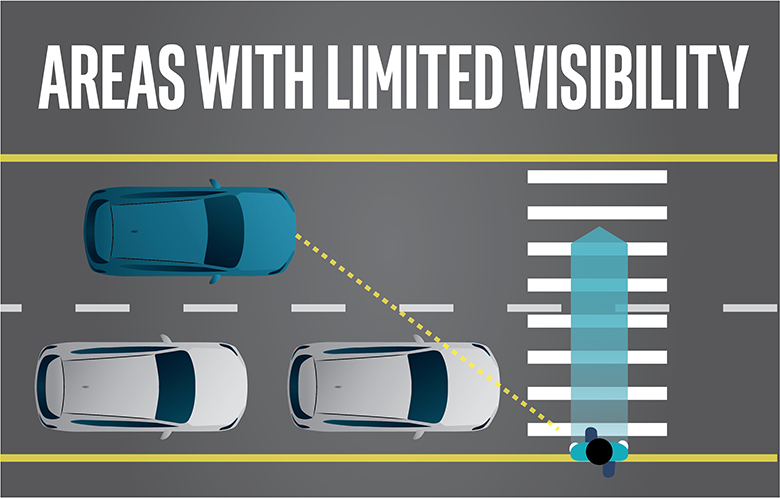

- Be cautious in areas with limited visibility

- If the vehicle can avoid a crash without causing another one, it must
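For readers who do want a taste of the math, the first rule can be sketched in a few lines of Python. The formula below is the minimum safe following distance as given in the published RSS paper: the rear car is assumed to accelerate for a response time, then brake at least moderately, while the front car brakes as hard as it can. The parameter names and all default values are illustrative assumptions, not figures from Intel, Mobileye or IEEE; they stand in for the kind of numbers a regulator might tune regionally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RssParams:
    """Illustrative parameters; the default values are assumptions, not standard values."""
    response_time: float = 1.0  # seconds before the rear car starts braking
    max_accel: float = 3.0      # m/s^2, worst-case acceleration of the rear car during the response time
    min_brake: float = 4.0      # m/s^2, braking the rear car is guaranteed to apply afterwards
    max_brake: float = 8.0      # m/s^2, hardest braking the front car may apply


def min_safe_longitudinal_distance(v_rear: float, v_front: float,
                                   p: RssParams = RssParams()) -> float:
    """Minimum safe gap in meters between a rear and a front car driving in the same direction.

    Speeds are in m/s. A negative result is clamped to zero.
    """
    # Rear car speed after accelerating through the whole response time.
    v_rear_after = v_rear + p.response_time * p.max_accel
    distance = (
        v_rear * p.response_time                    # distance covered at the initial speed
        + 0.5 * p.max_accel * p.response_time ** 2  # extra distance from accelerating
        + v_rear_after ** 2 / (2 * p.min_brake)     # rear car stopping distance
        - v_front ** 2 / (2 * p.max_brake)          # front car stopping distance
    )
    return max(distance, 0.0)


if __name__ == "__main__":
    # Both cars at 20 m/s (72 km/h) with the assumed defaults.
    print(min_safe_longitudinal_distance(20.0, 20.0))  # 62.625
    # A hypothetical, more conservative regional setting.
    cautious = RssParams(response_time=1.5)
    print(min_safe_longitudinal_distance(20.0, 20.0, cautious))
```

A gap smaller than the returned value would count as a dangerous situation under this rule, at which point the rear car is expected to brake. Changing a single parameter, as in the second call, is one way regional customization could look in practice.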

RSS has since been adopted by numerous parties, including:

- Baidu, which in 2019 incorporated the world’s first open-source implementation of RSS into its Apollo autonomous driving platform

- Automotive supplier Valeo

- China’s Ministry of Transportation, which will use RSS as the framework for its forthcoming AV safety standard

- Various research institutions

Earlier this year, Mobileye CEO Amnon Shashua accused Nvidia of copying Mobileye’s RSS with its Safety Force Field (SFF); the incorporation of RSS in an IEEE standard might vindicate RSS, similar perhaps to how CXL this year won the coherent interconnect wars. Or more aptly, perhaps, its standardization will depoliticize RSS as a safety model, since it is vendor neutral.

The first meeting will be held in the first quarter of 2020, and IEEE hopes to publish the first draft of the standard within a year. EETimes has published an interview with Weast.


bit_user:

“It will also be adjustable to allow for regional customization by local governments.”

I'd be curious to see how the North Koreans customize it. I imagine they'll probably replace this rule:

“If the vehicle can avoid a crash without causing another one, it must”

...with something like:

If the vehicle can crush a non-government official to avoid possibly colliding with a government official, it must.

Speaking of which, I didn't see any means of resolving such moral dilemmas - do they really ignore things like the Trolley Problem?