Nvidia Claims Doubled Inference Performance with H100

Nvidia's TensorRT-LLM boosts GPU performance for large language models.

Nvidia says its new open-source TensorRT-LLM software can dramatically boost the performance of large language models (LLMs) on its GPUs. According to the company, TensorRT-LLM doubles the performance of its H100 compute GPU on GPT-J, an LLM with six billion parameters. Importantly, the software delivers this improvement without retraining the model.

Nvidia developed TensorRT-LLM specifically to speed up LLM inference, and performance graphs provided by Nvidia do show a 2X speedup for the H100 from these software optimizations. A standout feature of TensorRT-LLM is its in-flight batching technique, which addresses the dynamic and diverse workloads of LLMs, whose requests can vary greatly in their computational demands.

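In-flight batching optimizes the scheduling of these workloads so that GPU resources stay as busy as possible: instead of waiting for every request in a batch to finish before starting the next batch, it retires completed requests and inserts new ones into the running batch. As a result, according to Nvidia, real-world LLM requests on H100 Tensor Core GPUs see double the throughput, making AI inference faster and more efficient.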
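To illustrate why refilling a batch as requests finish helps, here is a toy Python simulation. It is not TensorRT-LLM's scheduler or API, and the function names are hypothetical. It assumes every request needs a known number of decode steps (one output token per step), that the GPU handles up to `batch_size` requests per step, and it ignores the prompt (prefill) phase and memory limits.

```python
from collections import deque


def static_batching_steps(lengths: list[int], batch_size: int) -> int:
    """Static batching: each batch runs until its longest request finishes,
    so slots freed by short requests sit idle. Assumes every length >= 1."""
    steps = 0
    for i in range(0, len(lengths), batch_size):
        steps += max(lengths[i:i + batch_size])
    return steps


def inflight_batching_steps(lengths: list[int], batch_size: int) -> int:
    """In-flight batching (toy model): a finished request's slot is
    refilled from the waiting queue before the next decode step."""
    queue = deque(lengths)
    active: list[int] = []  # remaining tokens for each request on the GPU
    steps = 0
    while queue or active:
        # Fill any free slots with waiting requests.
        while queue and len(active) < batch_size:
            active.append(queue.popleft())
        # One decode step produces one token for every active request.
        active = [remaining - 1 for remaining in active]
        # Drop requests that have produced all of their tokens.
        active = [remaining for remaining in active if remaining > 0]
        steps += 1
    return steps


# Two long requests mixed with short ones, four slots per batch.
lengths = [100, 10, 10, 10, 100, 10, 10, 10]
print(static_batching_steps(lengths, 4))    # 200
print(inflight_batching_steps(lengths, 4))  # 110
```

In this model both schedulers generate the same 260 tokens, but the static one needs 200 steps because each batch waits on its 100-token request, while the in-flight one needs 110 because the slots of short requests are reused immediately. Fewer steps for the same tokens is what higher throughput means here; real gains depend on the workload mix.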

Nvidia says TensorRT-LLM combines a deep learning compiler with optimized kernels, pre- and post-processing steps, and multi-GPU/multi-node communication primitives so that LLMs run more efficiently on its GPUs. This is complemented by a modular Python API that gives developers a friendly way to extend the capabilities of the software and hardware without delving deep into complex programming languages. For example, MosaicML added the specific features it needed on top of TensorRT-LLM and integrated them seamlessly into its inference serving.

"TensorRT-LLM is easy to use, feature-packed with streaming of tokens, in-flight batching, paged-attention, quantization, and more, and is efficient," said Naveen Rao, vice president of engineering at Databricks. "It delivers state-of-the-art performance for LLM serving using NVIDIA GPUs and allows us to pass on the cost savings to our customers."

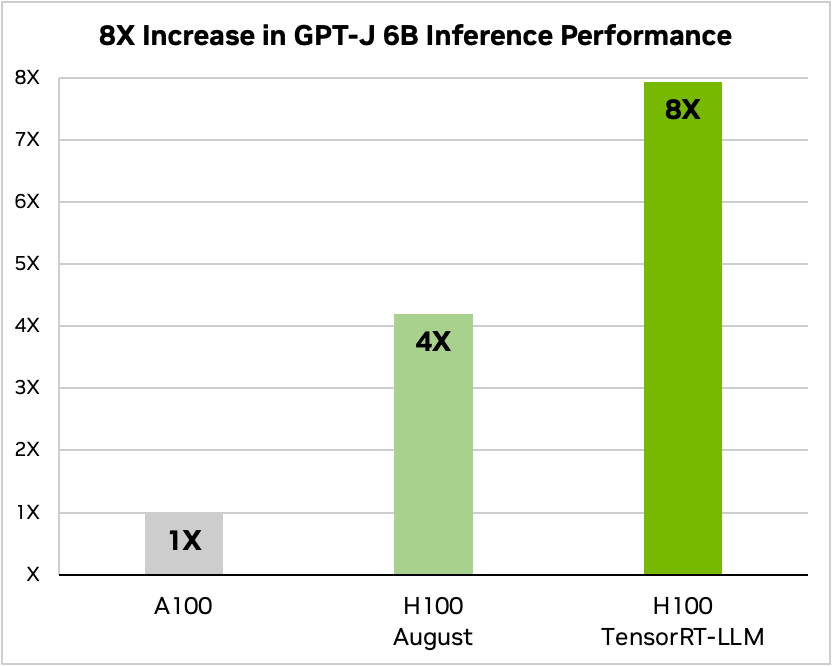

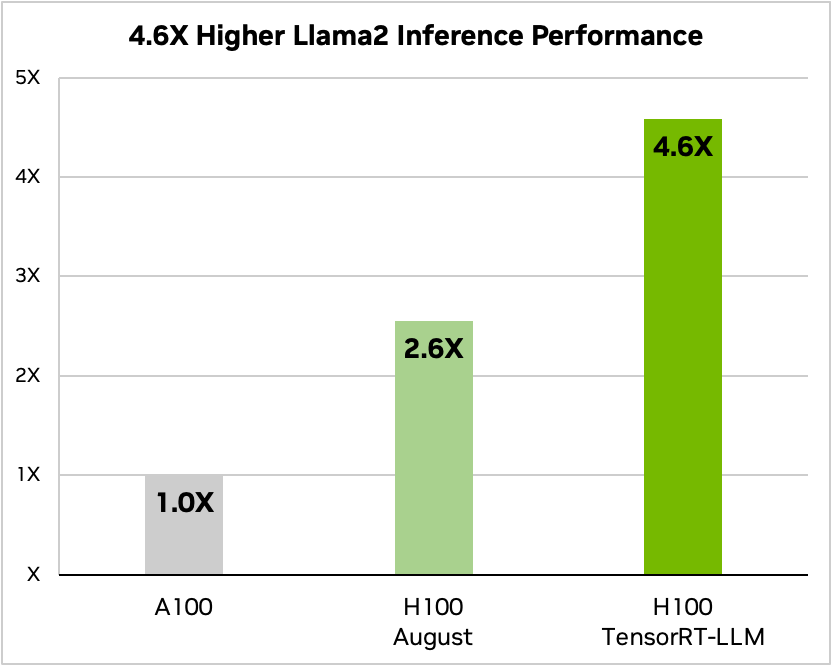

The performance of Nvidia's H100 paired with TensorRT-LLM is impressive. The Hopper-based H100 GPU running TensorRT-LLM outperforms the A100 GPU by a factor of eight. Furthermore, on Meta's Llama 2 model, TensorRT-LLM delivered 4.6x the inference performance of A100 GPUs. These figures underscore the software's potential for AI and machine learning workloads.

Lastly, H100 GPUs running TensorRT-LLM support the FP8 format. FP8 stores each value in one byte instead of the two bytes used by FP16, which reduces memory consumption without, according to Nvidia, any loss in model accuracy. That benefits enterprises with limited budgets or datacenter space that cannot install enough servers to tune their LLMs.
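A back-of-the-envelope sketch of what FP8 saves: the memory needed to hold a model's weights scales with the bytes stored per parameter. The sketch counts weights only (no KV cache, activations, or runtime overhead) and uses rounded parameter counts. The 70-billion-parameter Llama 2 variant is an assumption for illustration, since the article does not say which size Nvidia tested, and the function name is hypothetical.

```python
# Bytes used to store one parameter in each number format.
BYTES_PER_PARAM = {"FP32": 4, "FP16/BF16": 2, "FP8": 1}


def weight_memory_gb(num_params: float, fmt: str) -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes).
    Ignores the KV cache, activations, and framework overhead."""
    return num_params * BYTES_PER_PARAM[fmt] / 1e9


# Rounded parameter counts; the Llama 2 size is an illustrative assumption.
for name, params in [("GPT-J 6B", 6e9), ("Llama 2 70B", 70e9)]:
    for fmt in ("FP16/BF16", "FP8"):
        print(f"{name:12s} {fmt:10s} {weight_memory_gb(params, fmt):6.1f} GB")
```

Halving the bytes per weight halves weight memory: for a 70-billion-parameter model that is the difference between about 140 GB, more than a single 80 GB H100 holds, and about 70 GB.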


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


JarredWaltonGPU: I do have to wonder how much of this is simply from using FP8 computations instead of FP16 / BF16. Half the bandwidth, double the compute, double the performance. But I would seriously doubt that all AI algorithms could use FP8 without encountering problems due to the loss of precision.

More likely is that this is simply a case of the base models and algorithms not being tuned very well. Getting a 2X speedup by focusing on optimizations, especially when done by Nvidia people with a deep knowledge of the hardware, is definitely possible.

The Hardcard (replying to JarredWaltonGPU):

Inference in many cases can go much lower than eight bits. Large language models are functioning at upwards of 98% of full-precision accuracy with just five bits, and even two-bit inference is usable. FP8 will in most cases be indistinguishable from full precision.