Nvidia H100 'Hopper' Benchmark Results Published

Nvidia's H100 is up to 4.5 times faster than the A100, but it has strong rivals too.

MLCommons, an industry group specializing in performance evaluation of artificial intelligence and machine learning hardware, has added results for the latest AI and ML accelerators to its database. In effect, it has published the first performance numbers for Nvidia's H100 and Biren's BR104 compute GPUs obtained with an industry-standard set of tests. The results were compared against those obtained on Intel's Sapphire Rapids, Qualcomm's AI 100, and Sapeon's X220.

MLCommons' MLPerf is a set of training and inference benchmarks recognized by dozens of companies that back the organization and submit test results for their hardware to the MLPerf database. The MLPerf Inference version 2.1 set of benchmarks includes datacenter and edge usage scenarios as well as workloads such as image classification (ResNet-50 v1.5), natural language processing (BERT-Large), speech recognition (RNN-T), medical imaging (3D U-Net), object detection (RetinaNet), and recommendation (DLRM).

The machines participating in these tests are evaluated in two modes. In server mode, queries arrive in bursts, whereas in offline mode all the data is fed at once, so machines generally post higher throughput in offline mode. Vendors can also submit results in two categories: in the closed category everyone has to run mathematically equivalent neural networks, whereas in the open category they can modify the networks to optimize them for their hardware, reports IEEE Spectrum.
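To see why offline mode tends to yield higher throughput than server mode, consider a rough sketch of a batching accelerator. This is not MLPerf's load generator: the per-batch timing constants, the batch limit, the greedy batching policy, and the function names are all hypothetical, and the random exponential inter-arrival times are an assumption used here to mimic bursty traffic.

```python
import random

# Hypothetical timing model: every batch pays a fixed launch cost
# plus a small incremental cost per sample. Values are placeholders.
OVERHEAD_S = 0.004
PER_SAMPLE_S = 0.001
MAX_BATCH = 32


def batch_time(size):
    """Seconds needed to process one batch of `size` samples."""
    return OVERHEAD_S + PER_SAMPLE_S * size


def offline_throughput(n_samples):
    """All samples are available at t=0, so every batch can be filled."""
    elapsed = 0.0
    remaining = n_samples
    while remaining > 0:
        size = min(MAX_BATCH, remaining)
        elapsed += batch_time(size)
        remaining -= size
    return n_samples / elapsed


def server_throughput(n_queries, arrival_rate, seed=0):
    """Queries trickle in at random; the device batches whatever has arrived."""
    rng = random.Random(seed)
    arrivals = []
    t = 0.0
    for _ in range(n_queries):
        t += rng.expovariate(arrival_rate)  # assumed arrival process
        arrivals.append(t)

    clock = 0.0
    served = 0
    while served < n_queries:
        # If the device is idle, wait for the next query to show up.
        clock = max(clock, arrivals[served])
        # Greedily take every query that has already arrived, up to MAX_BATCH.
        end = served
        while (end < n_queries and end - served < MAX_BATCH
               and arrivals[end] <= clock):
            end += 1
        clock += batch_time(end - served)
        served = end
    return n_queries / clock


if __name__ == "__main__":
    print("offline samples/s:", round(offline_throughput(10_000), 1))
    print("server  samples/s:", round(server_throughput(10_000, 500.0), 1))
```

In this toy model the offline run always fills its batches and never waits for input, while the server run pays the per-batch overhead more often and sits idle between arrivals, which is the intuition behind the gap between the two modes.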

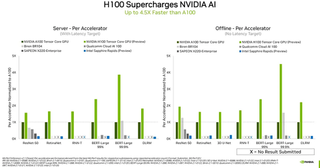

Results obtained in MLPerf describe not only the raw performance of individual accelerators (e.g., one H100, one A100, one Biren BR104), but also their scalability and performance-per-watt, which gives a more detailed picture. All the results can be viewed in the database, but Nvidia compiled a per-accelerator performance comparison based on submissions from itself and third parties.

Nvidia's competitors have not submitted all of their results just yet, so the graph published by Nvidia is missing some results. We can still draw some interesting findings from the data Nvidia released, but keep in mind that Nvidia is an interested party here, so everything should be taken with a grain of salt.

Nvidia's H100 is the most complex and most advanced AI/ML accelerator, and it is backed by very sophisticated software optimized for Nvidia's CUDA architecture. It is therefore not particularly surprising that it is the fastest compute GPU today, up to 4.5X faster than Nvidia's A100.

Yet Biren Technology's BR104, which offers around half the performance expected from the flagship BR100, shows considerable promise in image classification (ResNet-50) and natural language processing (BERT-Large) workloads. In fact, if the BR100 is twice as fast as the BR104, it will offer higher performance than Nvidia's H100 in image classification workloads, at least as far as per-accelerator performance is concerned.
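The arithmetic behind that projection is simple: a part that is some multiple faster than a smaller sibling beats a reference accelerator only if the sibling already reaches more than the reciprocal of that multiple of the reference's throughput. A minimal sketch follows; the function names are hypothetical and the sample inputs are placeholders, not measured MLPerf results.

```python
def min_relative_throughput(scale=2.0):
    """Smallest relative throughput (reference = 1.0) the smaller part
    must exceed so that a `scale`-times-faster part beats the reference."""
    return 1.0 / scale


def scaled_part_beats_reference(small_rel, scale=2.0):
    """True if `small_rel * scale` exceeds the reference throughput of 1.0."""
    return small_rel * scale > 1.0


if __name__ == "__main__":
    # Placeholder inputs only; substitute relative throughput from real results.
    for small_rel in (0.4, 0.6):
        print(small_rel, scaled_part_beats_reference(small_rel))
```

With a 2x scaling assumption, the smaller part has to deliver more than half the reference accelerator's throughput for the projection to hold. Real scaling between two chips is rarely exactly linear, so this is an upper-bound style estimate.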

Sapeon's X220-Enterprise and Qualcomm's Cloud AI 100 cannot come close to Nvidia's A100, which launched around two years ago. Intel's 4th Generation Xeon Scalable 'Sapphire Rapids' processor can run AI/ML workloads, though it does not look like the code has been sufficiently optimized for this CPU, which is why its results are rather low.

Nvidia fully expects its H100 to offer even higher performance in AI/ML workloads over time and widen its gap with A100 as engineers learn how to take advantage of the new architecture.

What remains to be seen is how significantly compute accelerators like Biren's BR100/BR104, Sapeon's X220-Enterprise, and Qualcomm's Cloud AI 100 will improve their performance over time. Furthermore, the real competitor for Nvidia's H100 will be Intel's compute GPU codenamed Ponte Vecchio, which is positioned for both supercomputing and AI/ML applications. It will also be interesting to see MLPerf results for AMD's Instinct MI250, which is arguably optimized primarily for supercomputers and whose omission from the current tests is somewhat odd. Yet at least for now, Nvidia holds the AI/ML performance crown.

Anton Shilov is a Freelance News Writer at Tom’s Hardware US. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.
