Nvidia GPU Subwarp Interleaving Boosts Ray Tracing by up to 20%

The technique relies on architectural extensions so it's destined for future GPUs.

Nvidia researchers have been looking into techniques to improve GPU ray tracing performance. A recently published paper, spotted by 0x22h, sees GPU Subwarp Interleaving as a technology with good potential to accelerate real-time ray tracing by as much as 20%.

However, to reach this headline figure, some micro-architectural enhancements need to be put in place; otherwise, the gains from the technique will be limited. Furthermore, the required changes preclude GPU architectures such as Turing (which was modified, then used in the study), and Nvidia will have to bake the changes into a new GPU as architectural extensions. This means the Subwarp Interleaving gains aren't likely to be seen until a generation after Lovelace.

Real-time ray tracing will only get bigger in the world of graphics, and Nvidia continues to tackle the issue of RT performance from multiple angles to maintain a competitive advantage and marketing halo. With this in mind, a research team consisting of Sana Damani (Georgia Institute of Technology), Mark Stephenson (Nvidia), Ram Rangan (Nvidia), Daniel Johnson (Nvidia), Rishkul Kulkarni (Nvidia), and Stephen W. Keckler (Nvidia) has published a paper that shows early promise in ray tracing microbenchmark studies.

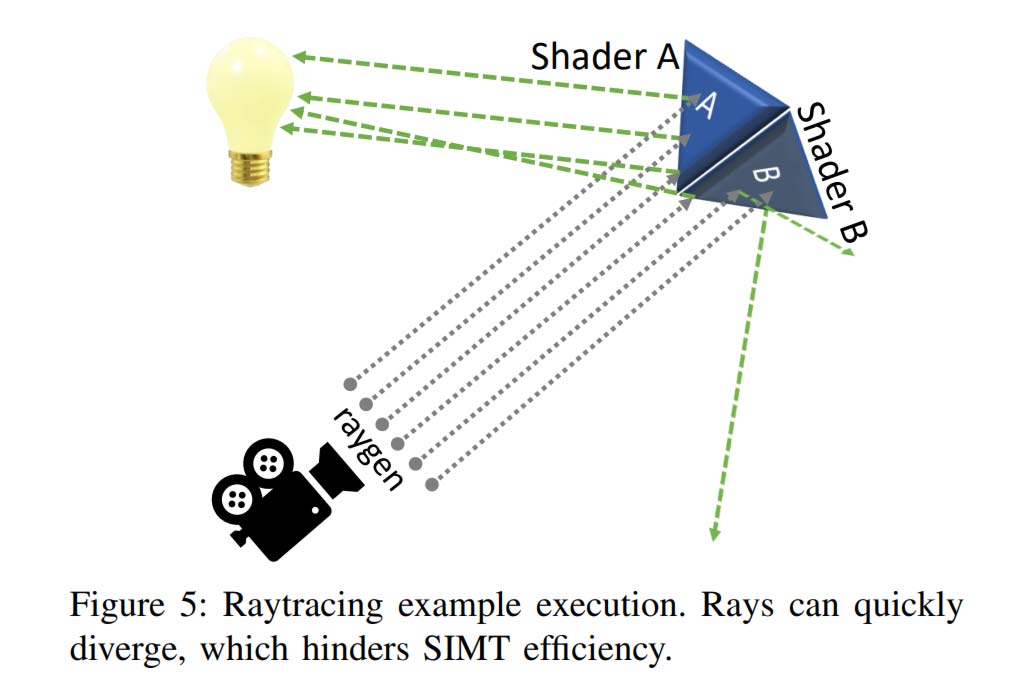

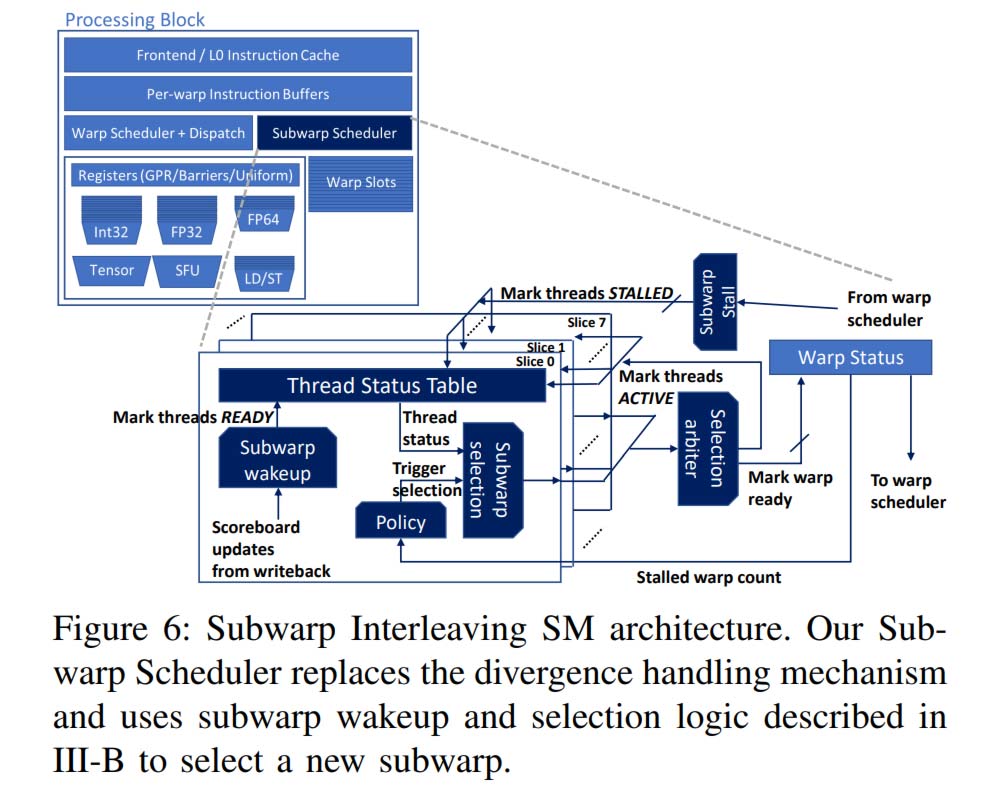

The paper's main thrust is that the way that modern Nvidia GPUs are designed gets in the way of RT performance. "First, GPUs group threads into units, which we call warps, that fetch from a single program counter (PC) and execute in SIMT (single instruction, multiple thread) fashion," explain the researchers. "Second, GPUs hide stalls by concurrently scheduling among many active warps." However, these design choices inherently cause issues in real-time ray tracing due to warp divergence, warp-starved scenarios, and the loss of GPU efficiency when the scheduler runs out of threads and can no longer hide any stalls.
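To make the cost of warp divergence concrete, here is a minimal Python sketch of a toy SIMT model. It is not taken from the paper: the function name `simt_efficiency`, the path labels, and the instruction counts are all assumptions chosen for illustration. It treats a warp as 32 lanes sharing one program counter, so every branch path taken by at least one thread has to be issued in turn while the other lanes sit idle.

```python
from collections import Counter

WARP_SIZE = 32  # threads per warp on Nvidia GPUs


def simt_efficiency(branch_taken, path_lengths):
    """Toy SIMT model (illustrative only, not the paper's simulator).

    branch_taken: one path label per thread in the warp.
    path_lengths: hypothetical instruction count for each path label.
    Returns (issue_slots, efficiency), where efficiency is the fraction
    of lane-slots that did useful work.
    """
    lanes_per_path = Counter(branch_taken)
    # A single PC means divergent paths are serialized: the warp issues
    # every instruction of every path that at least one thread takes.
    issue_slots = sum(path_lengths[path] for path in lanes_per_path)
    useful = sum(path_lengths[path] * lanes for path, lanes in lanes_per_path.items())
    efficiency = useful / (issue_slots * len(branch_taken))
    return issue_slots, efficiency


paths = {"hit": 10, "miss": 10}  # assumed shader path lengths
coherent = simt_efficiency(["hit"] * WARP_SIZE, paths)
divergent = simt_efficiency(["hit"] * 16 + ["miss"] * 16, paths)
print(coherent)   # (10, 1.0)
print(divergent)  # (20, 0.5)
```

In this toy case, a warp whose rays split evenly between two shader paths needs twice the issue slots and keeps only half of its lanes busy, which is the kind of inefficiency the researchers attribute to divergent ray tracing workloads.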

Bring in the Subwarp Scheduler

GPU Subwarp Interleaving targets the sticky situations described above, in which a contemporary GPU runs short of active warps and can no longer hide stalls. The key technique is described as follows: "When a long latency operation stalls a warp and the GPU's warp scheduler cannot find an active warp to switch to, a subwarp scheduler can instead switch execution to another divergent subwarp of the current warp."
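The scheduling idea in that quote can be sketched with a small Python cycle-count model. This is a rough illustration under stated assumptions, not the researchers' simulator or Nvidia's hardware design: the function names, the `("alu", n)` / `("load", latency)` operation format, and the latencies are all hypothetical. It assumes a single warp with no other warps to switch to, and that each subwarp must wait for its own load before continuing.

```python
def run_serialized(subwarps):
    """Baseline: divergent subwarps run one after another, and every
    load stalls the whole warp for its full latency."""
    cycles = 0
    for ops in subwarps:
        for kind, n in ops:
            cycles += n if kind == "alu" else 1 + n  # 1 issue cycle + latency
    return cycles


def run_interleaved(subwarps):
    """Toy subwarp scheduler: when the running subwarp stalls on a load,
    switch to another subwarp of the same warp that is ready to issue."""
    pc = [0] * len(subwarps)        # next op index for each subwarp
    ready_at = [0] * len(subwarps)  # cycle at which each subwarp may issue again
    now = 0
    while True:
        pending = [i for i, ops in enumerate(subwarps) if pc[i] < len(ops)]
        if not pending:
            break
        runnable = [i for i in pending if ready_at[i] <= now]
        if not runnable:
            # Every remaining subwarp is waiting on memory: the stall is exposed.
            now = min(ready_at[i] for i in pending)
            continue
        i = runnable[0]
        kind, n = subwarps[i][pc[i]]
        pc[i] += 1
        if kind == "alu":
            now += n
        else:
            now += 1               # issue the load
            ready_at[i] = now + n  # this subwarp waits for the data
    return max(now, max(ready_at))  # account for loads still in flight


# Two divergent subwarps, each doing one long load followed by 20 cycles of math.
demo = [[("load", 100), ("alu", 20)], [("load", 100), ("alu", 20)]]
print(run_serialized(demo))   # 242
print(run_interleaved(demo))  # 141
```

The large gap here comes from the deliberately memory-bound toy workload, where the two loads overlap almost completely. It should not be read as a prediction of real-world gains, which the paper reports as far more modest.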

On a microarchitecture-enhanced, Turing-like GPU with Subwarp Interleaving, the researchers achieved what they call "compelling performance gains" of 6.3% on average, and up to 20% in the best cases, in a suite of applications with ray tracing workloads.

As we mentioned in the intro, these RT performance gains aren't going to be available to existing GPU families. So you won't be getting a 20% boost courtesy of a driver update to your current GeForce card. However, these background moves are critical to future GPU architectures. So one must assume GPU Subwarp Interleaving is one of many background projects that Nvidia is working on to push forward in future generations. In fact, it may be compounded by other GPU advancements that arrived in Ampere and are coming to Lovelace, before this particular microarchitectural enhancement makes it to shipping GPUs.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech, from business and semiconductor design to products approaching the edge of reason.


VforV

What I don't get is, why is this not coming to Lovelace?

You would think 6 months from now is enough time to add this. We only found out about it now because it was published, but Nvidia could actually have been working on this for a year or more. So how is the author of this article sure it won't come to Lovelace?

Murissokah

This is still academic work. They are just proving that the concept is worth pursuing. This comes before Nvidia even considers integrating the technology into its stack. And since it requires microarchitectural changes, there's no chance they'd do it 6 months before release. It's more like 2 years down the road before we see it in a product.

VforV

But how do we know they haven't actually been working on this for 2 years already?

Is it mandatory to submit this at the start of the research? Because if not, they could be in the middle or at the end...

Murissokah

It's not a matter of regulation, it's a matter of going through all the necessary steps. Up until now this was merely an idea. As the article states, they have only just published a paper that "shows early promise in ray tracing microbenchmark studies." If you look at the paper, you'll see that the test data comes from a simulator. This is a proof of concept: they managed to show that the design changes they propose could improve ray tracing performance by an amount significant enough to be worth pursuing.

Nvidia is likely to follow up on this because ray tracing performance is one of its top goals, if not the top one. This means they will now have to evaluate the effect these changes have on the rest of the feature set, and then design and produce prototypes. That is likely to take a year on its own. After that, they have to integrate the design into the next architecture. Finally, once all that is done, there's the matter of allocating and ramping up production, which requires billion-dollar deals with third parties (Samsung made the 3000-family GPUs, TSMC will make the 4000s).

The only reason I say this might be on the market in 2 years is that Nvidia is eager for this kind of improvement. Otherwise 3 to 4 years would be more reasonable.

d0x360

It will be in their uarch after Lovelace... probably. It really depends on what kind of changes need to be made to the hardware.

These companies plan hardware 4-6 years out. Changes can be made, but they have to be minor ones. I saw a prototype AMD "chiplet" GPU back when the 290X was king of the hill. It was essentially the 295X2, which was a dual-GPU card but was seen by the system as CrossFire, so not every game supported both GPUs.

This prototype was for "7nm". Now it's looking like it will be 5nm, but that's close enough for something so far off. The PC with the prototype saw the card as a single GPU, as did games, and performance was 30% higher than the unmodified 295X2. It was pretty impressive. I expect to see it in RDNA3, which would be fantastic.

AMD already has the current rasterization crown vs the 3090, and at a much lower price... although you can't find any. They still need to catch up to Nvidia in ray tracing, but now Nvidia needs to catch up to AMD in DX12, Vulkan and rasterization performance while also staying ahead in ray tracing.

It's impressive what AMD has managed after over a decade of essentially no R&D budget in both the CPU and GPU market segments. They caught up to Intel and will catch them again. People seem to forget that AMD hasn't launched Zen 4 yet, and Zen 3+ is still the same architecture with some 3D V-Cache slapped on the die, and that alone gave them a 20-30% boost in performance. At the same time they have been slowly but steadily catching up to Nvidia. If RDNA2 had proper dedicated ray tracing hardware, they probably would have matched Nvidia this gen, even if you include DLSS, because AMD could also use machine learning for AA and upscaling. The only reason they couldn't was the lack of proper hardware, combined with the rush they were in due to market pressure.

The real question is: if they saw even slight performance gains on current hardware, then why can't they implement it now? Even if it's just a 5% gain, that's still a gain, and it could be the difference between a locked 60 fps and a sometimes 60 fps.

I mean, they said they used current hardware to test this, so it obviously works on current hardware. Sure, it doesn't work as well as it will with an architectural change, but it works.

VforV

I understand now, thank you.

Blitz Hacker

Production of Lovelace is likely already happening or about to happen. A complete redesign of already-ordered parts is very unlikely. Most modern processors and GPUs have at least a 6-month lead time, during which engineering samples are sent to board partners so they can design PCBs and so on for the GPU and memory kits that Nvidia sells. So maybe we will see this implemented post-Lovelace, provided it pans out into tangible real-world RT performance, which hasn't really been shown yet.

VforV

I know that hardware tech is designed and planned years in advance. What I did not know was how this new research specifically would be implemented, in regards to regulation, which Murissokah already explained...