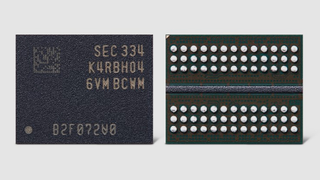

Samsung Paves The Way to 1TB Memory Sticks with 32Gb DDR5 ICs

Samsung develops world's highest capacity DDR5 memory chip.

Samsung has introduced the industry's first monolithic DDR5 SDRAM chip featuring 32 Gb (4 GB) of capacity. The memory IC will enable the company to greatly simplify the production of high-capacity memory modules and build unprecedented 1 TB RDIMMs for servers.

Samsung's 32 Gb memory device is made on the company's 12nm-class DRAM fabrication technology, which enables higher density and lower power consumption. In particular, the company says that a 128 GB DDR5 module based on the new 32 Gb ICs consumes 10% less power than a similar module built with 16 Gb devices.

For now, Samsung isn't revealing speed bins for its 32 Gb DDR5 memory chips, though its 16 Gb DDR5 ICs are rated for a 7200 MT/s data transfer rate. The omission is not surprising, as the company's press release clearly positions the new chips for servers that demand capacity rather than client PCs that require high speeds.

Indeed, Samsung can now build a 128 GB DDR5 RDIMM with ECC using 36 single-die 32 Gb DRAM chips, greatly lowering the cost of such modules, which are widely used in today's servers. The company can also use 40 8-Hi 3DS memory stacks composed of 32 Gb dies to build 1 TB memory modules for AI and database servers, which will sell like hotcakes given the generative AI frenzy.

Samsung says it will start mass production of 32 Gb DDR5 devices by the end of the year, so expect actual modules featuring these DRAMs to arrive late in 2023 or early in 2024. Since the high-end 512 GB and 1 TB memory modules are aimed at high-end servers, expect them to debut only after they are validated by platform vendors (e.g., AMD, Ampere, and Intel) and then qualified by server makers.

Given that demand for AI and HPC servers is booming these days, we would expect 512 GB and 1 TB memory modules to arrive sooner rather than later, though making predictions about enterprise-grade hardware is always a risky business.


Anton Shilov is a Freelance News Writer at Tom’s Hardware US. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

InvalidError

The smallest 16 Gb DDR5 chip I remember reading about was 88 mm², which means this 32 Gb chip must be around 160 mm². These DRAMs are going to be a bit pricey, since there will be fewer than half as many viable chips per wafer.
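InvalidError's die-size argument can be checked with the common gross-dies-per-wafer approximation plus a simple Poisson yield model. The sketch below is illustrative only: the 88 mm² and 160 mm² die areas come from the comment, while the 300 mm wafer, the defect density of 0.1 defects/cm², and the function names are assumptions, not Samsung figures.

```python
import math


def gross_dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float) -> int:
    """Common approximation: wafer area / die area, minus an edge-loss term."""
    radius = wafer_diameter_mm / 2
    whole_wafer = math.pi * radius**2 / die_area_mm2
    edge_loss = math.pi * wafer_diameter_mm / math.sqrt(2 * die_area_mm2)
    return math.floor(whole_wafer - edge_loss)


def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies with zero defects under a simple Poisson model."""
    die_area_cm2 = die_area_mm2 / 100.0
    return math.exp(-die_area_cm2 * defects_per_cm2)


def good_dies_per_wafer(wafer_diameter_mm: float, die_area_mm2: float,
                        defects_per_cm2: float) -> float:
    """Expected defect-free dies per wafer."""
    gross = gross_dies_per_wafer(wafer_diameter_mm, die_area_mm2)
    return gross * poisson_yield(die_area_mm2, defects_per_cm2)


if __name__ == "__main__":
    WAFER_MM = 300
    ASSUMED_D0 = 0.1  # defects per cm^2; an illustrative assumption
    for area in (88, 160):  # 16 Gb vs. 32 Gb die sizes quoted in the comment
        gross = gross_dies_per_wafer(WAFER_MM, area)
        good = good_dies_per_wafer(WAFER_MM, area, ASSUMED_D0)
        print(f"{area} mm^2: {gross} gross dies, ~{good:.0f} good dies")
```

Under these assumptions the larger die gives about 389 gross candidates per wafer versus about 732 for the smaller one (roughly 53%), so "fewer than half as many viable chips" only holds once defect-related yield loss is counted. Real DRAM dies also carry spare rows and columns for repair, which this model ignores.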

Diogene7

> Admin said: Samsung uses 12nm DRAM process technology to build its highest-capacity DRAM IC to date.
>
> Samsung Paves The Way to 1TB Memory Sticks with 32Gb DDR5 ICs

I wish these were low-power 32 Gb non-volatile VG-SOT-MRAM ICs, so that we could get 32 GB of low-power non-volatile memory in a package.

This would be disruptive and open up plenty of new opportunities.

https://www.eetimes.eu/sot-mram-architecture-opens-doors-to-high-density-last-level-cache-memory-applications/

InvalidError

> Diogene7 said: I wish it would be low-power 32Gb Non-Volatile VG-SOT-MRAM ICs, to be able to get low-power 32GB Non-Volatile memory in a package.

According to your link, even the most advanced MRAM variant still has a write endurance of only a trillion cycles. In a PC-style environment, or anything with comparably regular use, you may burn through that endurance within weeks when it is used as DRAM, possibly within hours when it is used as SRAM.

I wouldn't want that in any of my devices until endurance improves by at least another three orders of magnitude.
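The "weeks" and "hours" estimates depend entirely on how often a single cell is rewritten. A minimal sketch, assuming a constant write rate to one hot location and no wear leveling; the three write rates below are hypothetical round numbers chosen for illustration, not measurements of any real DRAM or SRAM workload.

```python
SECONDS_PER_DAY = 86_400


def time_to_wear_out_s(endurance_cycles: float, writes_per_second: float) -> float:
    """Seconds until one cell written at a constant rate reaches its rated endurance."""
    if writes_per_second <= 0:
        raise ValueError("writes_per_second must be positive")
    return endurance_cycles / writes_per_second


if __name__ == "__main__":
    ENDURANCE = 1e12  # ~1 trillion cycles, as cited for VG-SOT-MRAM
    # Hypothetical write rates to a single hot location (illustrative only).
    scenarios = {
        "1 kHz tick counter": 1e3,
        "busy DRAM-style location (1M writes/s)": 1e6,
        "register/SRAM-style location (1G writes/s)": 1e9,
    }
    for name, rate in scenarios.items():
        days = time_to_wear_out_s(ENDURANCE, rate) / SECONDS_PER_DAY
        print(f"{name}: {days:,.3f} days")
```

At one million writes per second, 10^12 cycles last 10^6 seconds (about 11.6 days); at one billion per second, about 17 minutes. Any caching or wear leveling in front of the memory would stretch these figures considerably.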

bit_user

> Samsung can now build a 128 GB DDR5 RDIMM with ECC using 36 single-die 32 Gb DRAM chips

Nope. Starting with DDR5, ECC DIMMs require 25% more chips. So, the number would be 40 chips, whereas a non-ECC DIMM of the same capacity would have only 32.

However, the 12.5% overhead figure does apply to DDR4 and older DIMM standards.
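bit_user's correction follows from the bus widths: a DDR4 ECC DIMM adds 8 check bits to a 64-bit channel (72 bits, 12.5% extra), while a DDR5 ECC DIMM adds 8 check bits to each 32-bit subchannel (40 bits, 25% extra). A minimal sketch of the arithmetic, assuming every die contributes equally and ignoring x4/x8 organization and rank layout; `dram_packages_needed` and its parameters are hypothetical names.

```python
from fractions import Fraction

# (data bits, ECC bits) per channel (DDR4) or per subchannel (DDR5).
BUS_LAYOUT = {
    "DDR4 ECC": (64, 8),
    "DDR5 ECC": (32, 8),
    "DDR5 non-ECC": (32, 0),
}


def dram_packages_needed(capacity_gb: int, die_gbit: int,
                         dies_per_package: int, layout: str) -> int:
    """Packages needed so that the data portion of the module equals capacity_gb."""
    data_bits, ecc_bits = BUS_LAYOUT[layout]
    data_dies = Fraction(capacity_gb * 8, die_gbit)  # GB -> Gb, then per die
    total_dies = data_dies * Fraction(data_bits + ecc_bits, data_bits)
    packages = total_dies / dies_per_package
    if packages.denominator != 1:
        raise ValueError("capacity does not map onto a whole number of packages")
    return int(packages)


if __name__ == "__main__":
    for layout in BUS_LAYOUT:
        n = dram_packages_needed(128, 32, 1, layout)
        print(f"128 GB with single-die 32 Gb chips, {layout}: {n} chips")
    stacks = dram_packages_needed(1024, 32, 8, "DDR5 ECC")
    print(f"1 TB DDR5 ECC with 8-Hi 32 Gb stacks: {stacks} stacks")
```

This yields 40 chips for a 128 GB DDR5 ECC module (36 under DDR4's ratio, 32 without ECC) and 40 8-Hi stacks for 1 TB, which matches the article's own 1 TB figure.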

bit_user

> InvalidError said: According to your link, even the most advanced MRAM variant still has a write endurance of only a trillion cycles.

Do you have comparable figures for DDR5 DRAM and ~7 nm SRAM cells? Just curious.

BTW, as memory layout randomization seems to be gaining favor for security reasons, I think it will have the effect of leveling out wear. Most DRAM content should be rather low-turnover. Therefore, I'm a little skeptical that ~1T cycles is inadequate for a client PC or other device.
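bit_user's point is that spreading a hot logical location over many physical locations divides the wear on any one cell. A toy model, assuming a single hot logical page, a pool of physical pages, and a simple round-robin remap every `remap_every` writes as a stand-in for randomized placement (the names are hypothetical, and real OS allocators do not remap live pages this way). Passing `remap_every=None` models the static mapping InvalidError describes in the next comment.

```python
from typing import List, Optional


def page_wear(total_writes: int, pool_pages: int,
              remap_every: Optional[int] = None) -> List[int]:
    """Writes absorbed by each physical page for one hot logical page."""
    wear = [0] * pool_pages
    if remap_every is None:
        # Static mapping: every write lands on the same physical page.
        wear[0] = total_writes
        return wear
    page, remaining = 0, total_writes
    while remaining > 0:
        chunk = min(remap_every, remaining)
        wear[page] += chunk
        remaining -= chunk
        page = (page + 1) % pool_pages  # round-robin stand-in for randomization
    return wear


if __name__ == "__main__":
    writes, pool = 1_000_000, 1_000
    print("static max wear:", max(page_wear(writes, pool)))
    print("remapped max wear:", max(page_wear(writes, pool, remap_every=1_000)))
```

In this model the peak per-page wear drops by the size of the pool, but only if live data actually moves; an allocation that stays put for the whole uptime gets no benefit.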

InvalidError

> bit_user said: Do you have comparable figures for DDR5 DRAM and ~7 nm SRAM cells? Just curious.

Ever heard of DRAM or SRAM failing? CPU registers are made the same way as SRAM cells; they get read and written trillions of times per active hour. Apart from materials, manufacturing, or something else in the system failing and killing the CPU first, we usually take for granted that CPUs outlast how long people can be bothered to run them to find out how long they can really last. Mostly the same goes for DRAM.

Basically, cycle endurance does not appear to be a thing for DRAM and SRAM. At least not yet.

Papers do predict that N-channel performance degradation from ions getting trapped in the gate oxide over time will become a problem eventually.

> bit_user said: BTW, as memory layout randomization seems to be gaining favor, for security reasons, I think it will have the effect of leveling-out wear. Most DRAM content should be rather low turnover. Therefore, I'm a little skeptical that ~1T cycles is inadequate for a client PC or other device.

While the OS may be randomizing memory page allocations, once an allocation is done, its contents usually stay wherever they were physically mapped until they either get evicted to swap or freed. The int64 that counts 1 kHz clock ticks for the RTC is allocated at boot and likely stays put for as long as the system is turned on. That is ~31 billion writes per year on the LSB. Your NIC's driver has stats, status, and buffer blocks that get written every time a packet comes in or goes out, which can be millions of writes per second if you are transferring large files at high speeds, and AFAIK, peripherals cannot DMA directly into or out of CPU caches to avoid transient memory writes. Last time I looked, my system was reporting 30k interrupts/s while mostly idle, which I suspect translates to thousands of memory writes/s to mostly static locations too.

Burning through 1T writes to xRAM within a desktop's useful life isn't too far-fetched if it is used for some consistently intensive stuff that doesn't cache particularly well.
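The ~31 billion figure for the RTC tick counter is simple arithmetic, and the same arithmetic shows how much of a 10^12-cycle budget different write rates consume over a desktop's life. A minimal sketch, assuming a 365-day year, a constant write rate, and a hypothetical 5-year service life.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # 31,536,000 s; ignores leap years


def writes_per_year(writes_per_second: float) -> float:
    """Total writes to one location in a year at a constant rate."""
    return writes_per_second * SECONDS_PER_YEAR


def budget_fraction_used(endurance_cycles: float, writes_per_second: float,
                         years: float) -> float:
    """Share of a cell's endurance consumed after `years` of constant writes."""
    return writes_per_year(writes_per_second) * years / endurance_cycles


if __name__ == "__main__":
    ENDURANCE = 1e12
    SERVICE_YEARS = 5  # hypothetical desktop lifetime
    print(f"1 kHz counter: {writes_per_year(1_000):.4g} writes/year")
    for label, rate in (("1 kHz RTC counter", 1e3),
                        ("NIC status block, 1M writes/s", 1e6)):
        share = budget_fraction_used(ENDURANCE, rate, SERVICE_YEARS)
        print(f"{label}: {share:.1%} of a 1e12 budget in {SERVICE_YEARS} years")
```

The 1 kHz counter alone uses roughly 16% of the budget in five years, while a location written a million times per second would exhaust it more than 150 times over. The argument therefore hinges on whether such hot writes actually reach the memory cells or stay in cache.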

If imminently consumable memory becomes a thing, we'll need AMD, Intel and friends to implement system-managed "L4$" memory pools for the OS to use as a target for high-traffic OS/driver structures, DMA buffers, high-frequency and transient data.

2T0C IGZO is another potentially interesting DRAM technology: it stretches the refresh interval from 16 ms to between 100 and 1,000 s, practically eliminating self-refresh, and it could easily scale in a multi-layer manner. One major drawback (at least for now) is that the material can fail in as little as 120 days when exposed to oxygen or moisture.
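To put the retention claim in perspective, the number of times each row must be refreshed scales inversely with the retention interval. A small sketch using the comment's figures (16 ms versus 100 s and 1,000 s); it ignores refresh granularity and timing details, and the function name is hypothetical.

```python
def refreshes_per_hour(retention_interval_s: float) -> float:
    """Times each row must be refreshed per hour for a given retention interval."""
    return 3600.0 / retention_interval_s


if __name__ == "__main__":
    baseline = refreshes_per_hour(0.016)  # 16 ms, the interval cited in the comment
    for interval in (100.0, 1000.0):  # 2T0C IGZO range cited in the comment
        ratio = baseline / refreshes_per_hour(interval)
        print(f"{interval:g} s retention: {refreshes_per_hour(interval):g} refreshes/hour, "
              f"{ratio:,.0f}x fewer than at 16 ms")
```

Under these figures, each row goes from about 225,000 refreshes per hour to 36 (at 100 s) or 3.6 (at 1,000 s).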

bit_user

> InvalidError said: Ever heard of DRAM or SRAM failing?

DRAM, for sure. I've had about a dozen DRAM DIMMs that once tested fine later develop bad cells. It's not hard to find other accounts of this happening.

As for SRAM, I'm not even sure how you'd know. In CPUs with ECC-protected caches, I guess you might get a machine check exception and, if you happen to check the system logs, see it mentioned there. In general, I'd expect CPUs to be built with more generous margins for SRAM than your typical DRAM chip, because getting it wrong is a lot more costly for them.

> InvalidError said: CPU registers are made the same way as SRAM cells, they get read and written trillions of times per active hour

Nothing says that registers need to be built from cells of the same size as those in the L3 cache, for instance. I'd almost be surprised if they were exactly the same.

Anyway, I just asked if you had stats. It'd suffice to simply say "no", or just not respond to this point.

> InvalidError said: Your NIC's driver has a stats, status and buffer blocks that get written to every time a packet comes in or out, that can be millions of writes per second if you are transferring large files at high speeds and AFAIK, peripherals cannot directly DMA in/out of CPU caches to avoid transient memory writes.

This used to be true, but once memory controllers and PCIe switches both moved on die, it became cheap enough for memory accesses by PCIe devices to snoop the CPU cache. Intel calls this Data Direct I/O (DDIO), and it was first introduced during the Sandy Bridge generation.

https://www.intel.com/content/www/us/en/io/data-direct-i-o-technology.html

> InvalidError said: Last time I looked, my system was reporting 30k interrupts/s while mostly idle, which I suspect translates to thousands of memory writes/s to mostly static locations too.

While your system is idling, those writes probably won't tend to get past the L3 cache.

> InvalidError said: Burning through 1T writes to xRAM within a desktop's useful life isn't too far-fetched

Okay, maybe 1T is a bit on the low side.

> InvalidError said: If imminently consumable memory becomes a thing, we'll need AMD, Intel and friends to implement system-managed "L4$" memory pools for the OS to use as a target for high-traffic OS/driver structures, DMA buffers, high-frequency and transient data.

A more likely scenario is that they expose the on-die ECC errors of DDR5 (or later) DRAM chips to the OS. Once the error rate of a memory page crosses some threshold, the kernel can remap the page and exclude that address range from further use (which it can already do).
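The threshold-based page retirement bit_user describes can be sketched as a per-page counter of corrected errors. This is a toy model under stated assumptions: 4 KiB pages, a fixed threshold, and hypothetical class and method names; it is not how any particular kernel or RAS daemon implements the feature.

```python
from collections import defaultdict
from typing import DefaultDict, Set

PAGE_SIZE = 4096  # assumed 4 KiB pages


class PageRetirementPolicy:
    """Hypothetical policy: retire a page once its corrected-error count hits a threshold."""

    def __init__(self, threshold: int) -> None:
        self.threshold = threshold
        self.error_counts: DefaultDict[int, int] = defaultdict(int)
        self.retired: Set[int] = set()

    def report_corrected_error(self, phys_addr: int) -> bool:
        """Record one corrected error; return True if this report retires the page."""
        page = phys_addr // PAGE_SIZE
        if page in self.retired:
            return False  # already excluded from further use
        self.error_counts[page] += 1
        if self.error_counts[page] >= self.threshold:
            self.retired.add(page)
            return True
        return False

    def is_usable(self, phys_addr: int) -> bool:
        """True if the page containing phys_addr has not been retired."""
        return phys_addr // PAGE_SIZE not in self.retired


if __name__ == "__main__":
    policy = PageRetirementPolicy(threshold=3)
    for addr in (0x1000, 0x1800, 0x1FFC):  # three errors in the same 4 KiB page
        retired_now = policy.report_corrected_error(addr)
    print(retired_now, policy.is_usable(0x1234), policy.is_usable(0x2000))
```

A fixed count is the simplest possible rule; a real implementation would more likely track error rates over a time window so that a page with a few errors spread over years is not retired needlessly.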

InvalidError

> bit_user said: This used to be true, but once memory controllers and PCIe switches both moved on die, it became cheap enough for memory accesses by PCIe devices to snoop the CPU cache. Intel calls this Data Direct I/O, and it was first introduced during the Sandybridge generation.

The article says DDIO is only available on Xeon E5 and E7 CPUs, though. Another page adds the Xeon Scalable (1st, 2nd, and 3rd generation) lineup and the W-2200 and W-3200 families. Unless that has changed since, it looks like there is still no DDIO on consumer CPUs and entry-level Xeons. Also, DDIO was responsible for the NetCAT security flaw exposed in 2019, which means no DDIO for you if your data needs privacy.

Diogene7

> InvalidError said: According to your link, even the most advanced MRAM variant still has a write endurance of only a trillion cycles. In a PC-style environment or anything with comparably regular use, you may burn through the endurance as DRAM within weeks, possibly down to hours as SRAM.
>
> I wouldn't want that in any of my devices until endurance is up by at least another +e3.

It seems that the European research center IMEC initially intends VG-SOT-MRAM as a replacement for SRAM caches (Level 1, 2 and 3).

Although 10^12-cycle endurance is, I think, lower than that of current-generation SRAM/DRAM, it may be good enough for many (IoT) applications.

And I would think that, with further R&D, better endurance may become achievable over time.

So yes, it may not yet be a good fit for all applications, but it could certainly already be used for workloads that are not too read/write-intensive.

For example, NXP has announced that in 2025 it intends to use TSMC's 16 nm FinFET automotive process with MRAM, and I think its endurance is only 10^6 read/write cycles…

Diogene7

> bit_user said: Do you have comparable figures for DDR5 DRAM and ~7 nm SRAM cells? Just curious.
>
> BTW, as memory layout randomization seems to be gaining favor, for security reasons, I think it will have the effect of leveling-out wear. Most DRAM content should be rather low turnover. Therefore, I'm a little skeptical that ~1T cycles is inadequate for a client PC or other device.

The feedback from InvalidError may be valid, but to get a better idea, I agree that it would help to have some "hard" statistical numbers on real-world usage from research papers to give some context.

I have no idea, but I would think that 10^12 (1 trillion) read/write cycles could be enough for edge IoT devices/sensors, as long as they are not triggered too intensively.

But I have no idea about mobile devices (smartphones), desktops and servers…

My dream is that low-power MRAM endurance becomes high enough to replace at least SRAM and DRAM in mobile phones and edge IoT devices, so that they could inherently be built around the "Normally-Off Computing" concept.

It would provide an opportunity to re-architect computing in those devices.

Desktops and servers are not a priority for me…
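Diogene7's question of whether 10^12 cycles is enough for edge IoT devices can be framed the other way around: given an endurance rating and a target service life, what is the highest sustained write rate a single cell can tolerate? A minimal sketch, assuming constant writes to one location, no wear leveling, and a hypothetical 10-year service life; the 10^6 figure is the one Diogene7 cites for NXP's automotive MRAM.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600  # ignores leap years


def max_sustained_write_rate(endurance_cycles: float, lifetime_years: float) -> float:
    """Highest constant writes/s to one cell that stays within its endurance rating."""
    return endurance_cycles / (lifetime_years * SECONDS_PER_YEAR)


if __name__ == "__main__":
    LIFETIME_YEARS = 10  # hypothetical service life
    for endurance in (1e12, 1e6):
        rate = max_sustained_write_rate(endurance, LIFETIME_YEARS)
        print(f"{endurance:.0e} cycles over {LIFETIME_YEARS} years: "
              f"{rate:.4g} writes/s (one write every {1 / rate:.4g} s)")
```

Under these assumptions, 10^12 cycles allow about 3,170 writes per second to the same cell for a decade, ample for a sensor that wakes occasionally, whereas 10^6 cycles allow only about one write every five minutes, which points to a flash-replacement role rather than working memory.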
