Tesla details how it finds punishing defective cores on its million-core Dojo supercomputers — a single error can ruin a weeks-long AI training run

Tesla's FSD training goes on.

Detecting malfunctioning cores and disabling them on a massive processor is challenging, but Tesla has developed its Stress tool, which can detect cores prone to silent data corruption across not only Dojo processors but also across Dojo clusters with millions of cores, all without taking them offline. This is an incredibly important capability, as Tesla says a single silent data error can destroy an entire training run that takes weeks to complete.

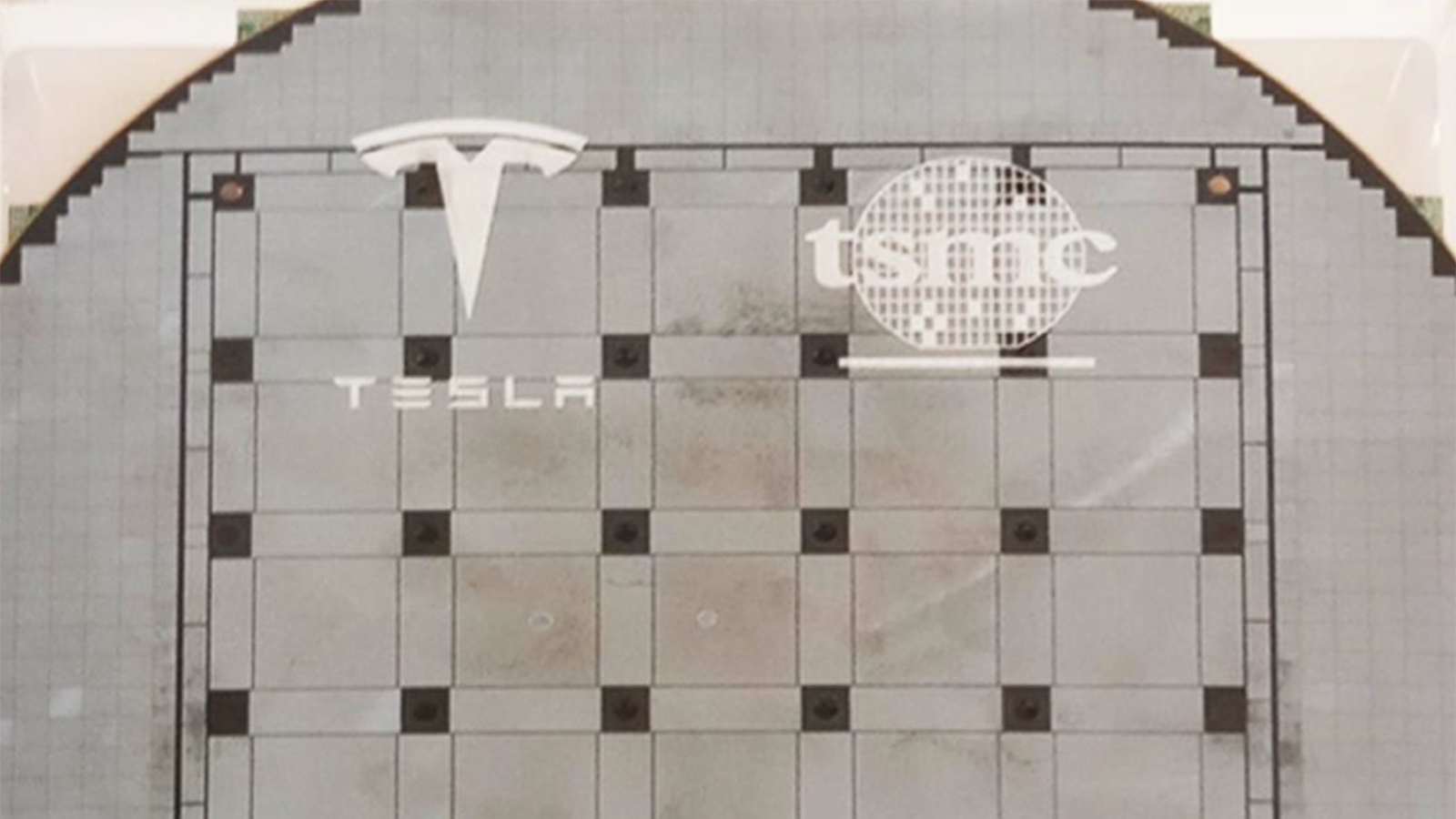

Tesla's Dojo is one of the two largest processors that currently exist on the planet. These massive wafer-scale chips use a whole 300-mm wafer, meaning it simply isn't possible to create a larger chunk of computing power in one go. Each Dojo wafer-scale processor packs up to 8,850 cores, but some of them may induce silent data corruptions (SDCs) after deployment, corrupting the results of extensive training runs.

A big processor

Given the extreme complexity of the Dojo Training Tile (the large wafer-size chip), it isn't easy to detect defective dies even during the manufacturing process, but when it comes to silent data corruption (SDC), things become even more complex.

Keep in mind that SDCs are inevitable on all types of hardware, and a Dojo processor draws 18,000 amps and dissipates 15,000W of power, operating conditions that are unlikely to make the problem any easier. Even so, every core has to perform as intended, because a single error caused by data corruption can render weeks of AI training useless.

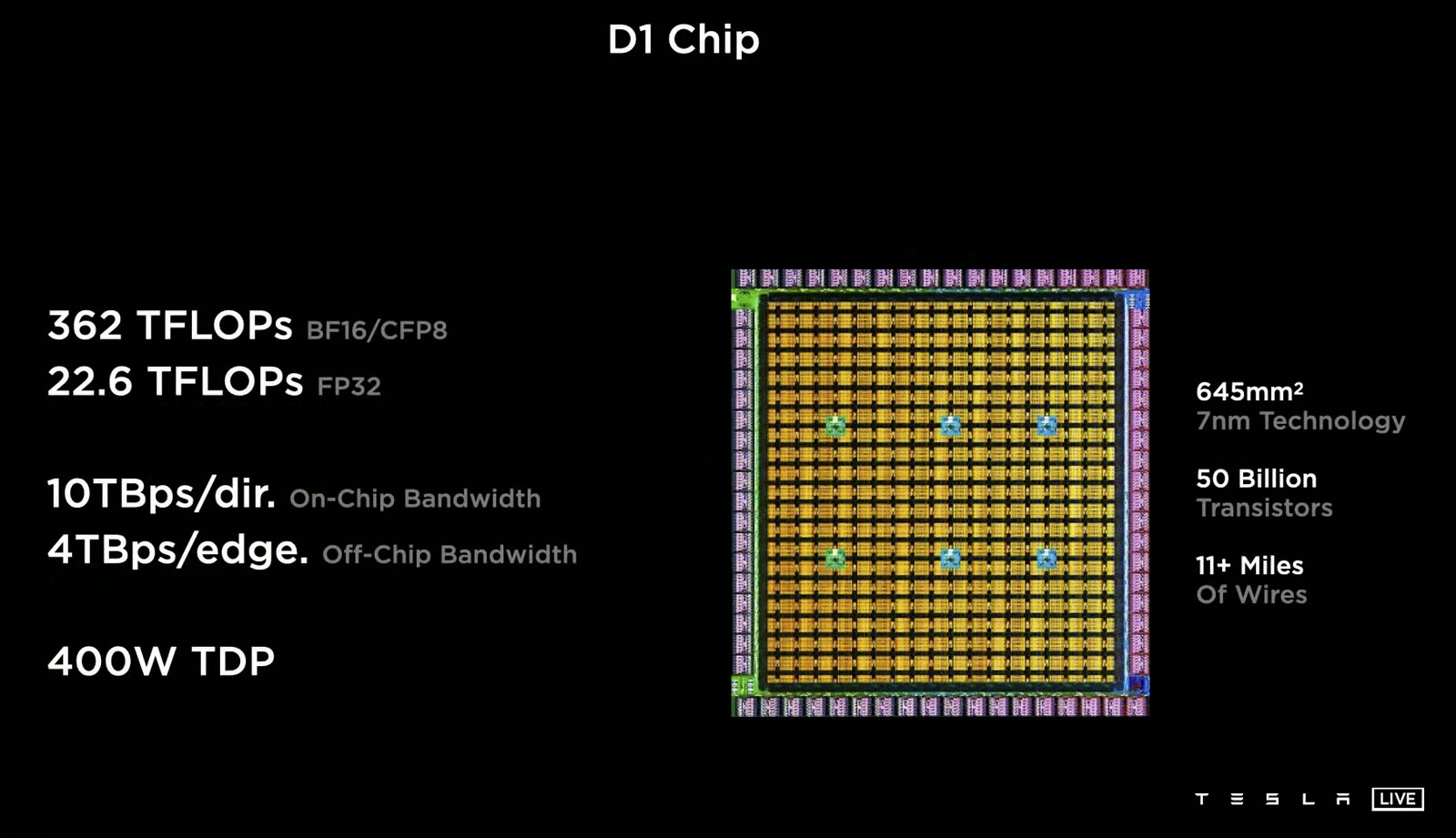

Tesla refers to each wafer-scale Dojo processor as a 'Training Tile.' Each Training Tile packs twenty-five 645 mm^2 D1 'chips' organized in a 5×5 cluster and interconnected using a mesh network with 10 TB/s of directional bandwidth. Each D1 has 354 custom 64-bit RISC-V cores (which Tesla calls nodes, but we will call them cores for easier understanding), each featuring 1.25 MB of SRAM for data and instructions.

Each D1 also supports 4 TB/s off-chip bandwidth. Each 'Training Tile' therefore packs 8,850 cores, supporting 8-, 16-, 32-, or 64-bit integers, as well as multiple data formats. Tesla uses TSMC's InFO_SoW technology to package its wafer-scale Dojo processors.
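The per-tile totals follow directly from the figures above. Here is a quick arithmetic check in Python, assuming the 1.25 MB of SRAM is a per-core figure; the variable names are ours, not Tesla's.

```python
# Figures quoted in the article; variable names are illustrative.
D1_DIES_PER_TILE = 25        # 5x5 arrangement of D1 dies
CORES_PER_D1 = 354
SRAM_PER_CORE_MB = 1.25      # assumption: the SRAM figure is per core

cores_per_tile = D1_DIES_PER_TILE * CORES_PER_D1
sram_per_tile_mb = cores_per_tile * SRAM_PER_CORE_MB

print(cores_per_tile)      # 8850
print(sram_per_tile_mb)    # 11062.5
```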

Needs proper maintenance

To address the risk of core faults, Tesla first deployed a differential fuzzing technique. This initial version involved generating a random set of instructions and sending the same sequence to all cores. After execution, the outputs were compared to find mismatches. However, the process took too long due to the significant communication overhead between the host and the Dojo training tile.
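To make the idea concrete, here is a minimal Python sketch of differential fuzzing on simulated cores. It is not Tesla's code: the three-instruction toy ISA, the `run_core` model, the injected multiplier defect, and the majority-vote comparison are all assumptions made for illustration.

```python
import random
from collections import Counter

MASK = (1 << 64) - 1  # toy 64-bit accumulator

def make_program(rng, length=64):
    """Generate a random sequence of (opcode, operand) pairs."""
    ops = ("add", "xor", "mul")
    return [(rng.choice(ops), rng.getrandbits(32)) for _ in range(length)]

def run_core(program, faulty=False):
    """Run the program on a simulated core and return the trace of results."""
    acc = 1
    trace = []
    for op, operand in program:
        if op == "add":
            acc = (acc + operand) & MASK
        elif op == "xor":
            acc ^= operand
        else:  # mul
            acc = (acc * (operand | 1)) & MASK
            if faulty:
                acc ^= 1  # hypothetical defect: multiplier flips the lowest bit
        trace.append(acc)
    return tuple(trace)

def find_mismatches(outputs):
    """Return indices of cores whose output differs from the majority result."""
    majority, _ = Counter(outputs).most_common(1)[0]
    return [i for i, out in enumerate(outputs) if out != majority]

rng = random.Random(0)
program = make_program(rng)          # the same sequence goes to every core
faulty_cores = {3, 17}               # hypothetical defective cores
outputs = [run_core(program, faulty=(i in faulty_cores)) for i in range(32)]
print(find_mismatches(outputs))
```

In this toy version every output travels back to one place for comparison, which mirrors the host communication overhead that, according to Tesla, made the first version too slow.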

In a bid to boost efficiency, Tesla refined the method by assigning each core a unique payload consisting of 0.5 MB of random instructions. Instead of communicating with the host, cores retrieved payloads from each other within the Dojo training tile and executed them in turn. This internal data exchange utilized the Dojo training tile's high-bandwidth communication, enabling Tesla to test approximately 4.4 GB of instructions in a significantly shorter timeframe.
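The 4.4 GB figure is consistent with 8,850 cores each holding a 0.5 MB payload. The Python sketch below checks that arithmetic and shows one way cores could take turns executing each other's payloads. The ring-style rotation in `payload_schedule` is our assumption; the article does not say in what order cores fetch payloads.

```python
CORES_PER_TILE = 8_850   # from the article
PAYLOAD_MB = 0.5         # from the article

total_gb = CORES_PER_TILE * PAYLOAD_MB / 1000
print(f"{total_gb:.3f} GB of instructions per tile")   # 4.425 GB

def payload_schedule(num_cores):
    """Yield, for each step, the payload owner that every core reads from.

    Hypothetical ring order: at step s, core c runs the payload of core (c + s) % n.
    """
    for step in range(num_cores):
        yield [(core + step) % num_cores for core in range(num_cores)]

# Small example with 4 cores: after 4 steps every core has run every payload.
for step, owners in enumerate(payload_schedule(4)):
    print(step, owners)
```

With a rotation like this, no two cores read the same payload in the same step, and all traffic stays inside the tile.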


Then, Tesla further enhanced the method by enabling cores to run each payload multiple times without resetting their state between runs. This technique introduced additional randomness into the execution environment, enabling the exposure of subtle errors that might otherwise go undetected. Despite the increased number of executions, the slowdown was minimal compared to the gains in detection reliability, according to the company.
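The effect of skipping the reset can be illustrated with a toy model. In the Python sketch below (an illustration under our own assumptions, not Tesla's implementation), a payload is a short list of operations on a single accumulator. With a reset, every run sees identical inputs; without one, each run starts from the state the previous run left behind, so the same instructions are exercised with different operands.

```python
MASK = (1 << 64) - 1  # toy 64-bit accumulator

def run_payload(payload, state):
    """Apply each (opcode, operand) pair to the accumulator and return the new state."""
    for op, operand in payload:
        if op == "add":
            state = (state + operand) & MASK
        elif op == "xor":
            state ^= operand
        else:  # mul
            state = (state * (operand | 1)) & MASK
    return state

payload = [("add", 7), ("mul", 3), ("xor", 0x55)]  # hypothetical payload

# With a reset before each run, the core repeats exactly the same computation.
reset_results = [run_payload(payload, 1) for _ in range(3)]

# Without a reset, each run inherits the previous run's final state.
state = 1
chained_results = []
for _ in range(3):
    state = run_payload(payload, state)
    chained_results.append(state)

print(reset_results)    # [77, 77, 77]
print(chained_results)  # [77, 169, 581]
```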

Another improvement resulted from periodically integrating register values into a designated SRAM area using XOR operations, which increased the probability of identifying defective computational units by a factor of 10 (as tested in known defective cores) without substantial performance degradation.
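One plausible reason this helps, and this is our reading rather than something Tesla spelled out, is that a corrupted intermediate value can be overwritten before the end of a run and never show up in the final state. Folding register contents into a running XOR signature preserves evidence of it. The Python sketch below uses a single toy register; `execute`, `fold_every`, and the injected bit flip are hypothetical.

```python
def execute(values, fold_every=None, corrupt_at=None):
    """Write each value into a register, optionally XOR-folding it into a signature.

    Returns (final register value, signature).
    """
    register = 0
    signature = 0  # stands in for the designated SRAM area
    for step, value in enumerate(values):
        register = value
        if step == corrupt_at:
            register ^= 0x10  # hypothetical transient bit flip
        if fold_every and (step + 1) % fold_every == 0:
            signature ^= register
    return register, signature

values = [3, 9, 12, 5]

good = execute(values, fold_every=1)
bad = execute(values, fold_every=1, corrupt_at=1)

print(good)  # (5, 3)
print(bad)   # (5, 19)
```

Both runs end with the same register value, so comparing final states alone would miss the fault, while the signatures differ.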

Not only on the processor level

Tesla's method works not only at the Dojo training tile level and the Dojo Cabinet level (a cabinet packs 12 Dojo training tiles), but also at the Dojo Cluster level, enabling the company to identify a faulty core out of millions of active cores.
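For a sense of scale, the core counts at each level can be worked out from the article's figures. The Python snippet below does the arithmetic; the one-million-core target is only an example of what 'millions of cores' implies, not a disclosed cluster size.

```python
import math

CORES_PER_TILE = 8_850      # from the article
TILES_PER_CABINET = 12      # from the article

cores_per_cabinet = CORES_PER_TILE * TILES_PER_CABINET
# Example target only: how many cabinets are needed to reach one million cores?
cabinets_for_million = math.ceil(1_000_000 / cores_per_cabinet)

print(cores_per_cabinet)      # 106200
print(cabinets_for_million)   # 10
```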

Once tuned properly, the Stress monitoring system revealed numerous defective cores across Dojo clusters, according to the report. The distribution of detection times varied widely, though. Most defects are found after executing 1 GB to 100 GB of payload instructions per core, corresponding to seconds to minutes of runtime. Harder-to-detect defects may require 1000+ GB of instructions, meaning several hours of execution.

It should be noted that Tesla's Stress tool testing runs are lightweight and self-contained within the cores, allowing it to perform background testing without requiring cores to be offline. Obviously, only the cores identified as faulty are disabled afterward, and even then, each D1 die can tolerate a few disabled cores without affecting the overall functionality.

Identifying design flaws

Tesla also mentioned that in addition to detecting failed cores, the Stress tool has uncovered a rare design-level flaw, which engineers managed to address through software adjustments. Several issues within low-level software layers were also found and corrected during the broader deployment of the monitoring system.

By now, the Stress tool has been fully integrated into operational Dojo clusters for in-field monitoring of hardware health during active AI training. The company states that the defect rates observed through this monitoring are comparable to those published by Google and Meta, indicating that the monitoring tool and hardware are on par with those used by others.

In post-silicon and pre-silicon phases

Tesla now plans to use data obtained with its Stress tool to study the long-term degradation of hardware due to aging. In addition, the company intends to extend the method to pre-silicon testing phases and early validation workflows to catch the aforementioned faults even before production, although it is challenging to envision exactly how this might be achieved, as SDCs can occur due to aging.

Thoughts

Developing and building a wafer-scale processor is an extremely complex task, and only two companies in the industry — Cerebras and Tesla — have accomplished it. Like other processors, these devices are prone to defects and degradation; however, Tesla has developed its own method of identifying faulty processing cores without taking them offline, which highlights significant progress.

TSMC, which builds these gargantuan processors for Cerebras and Tesla, states that more companies will adopt wafer-scale designs using its SoIC-SoW technology in the coming years. Apparently, the industry is preparing and gaining experience for this. Little by little.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


usertests Reply

The article said: Developing and building a wafer-scale processor is an extremely complex task, and only two companies in the industry, Cerebras and Tesla, have accomplished it. Like other processors, these devices are prone to defects and degradation; however, Tesla has developed its own method of identifying faulty processing cores without taking them offline, which highlights significant progress.

Every hyperscaler is going to have wafer scale chips soon. Facebook, Apple, Google, Microsoft, Amazon, and Netflix next.

The article said: TSMC, which builds these gargantuan processors for Cerebras and Tesla, states that more companies will adopt wafer-scale designs using its SoIC-SoW technology in the coming years. Apparently, the industry is preparing and gaining experience for this. Little by little.

emike09 Reply

Rented a Cyberbeast for a 4000 mile road trip to the redwoods. FSD is mind blowing. In that 4000 miles, I only needed to take control 3 times where I didn't like how close to the inner line it was on super curvy mountain roads. Absolutely incredible.

abufrejoval Reply

usertests said: Every hyperscaler is going to have wafer scale chips soon. Facebook, Apple, Google, Microsoft, Amazon, and Netflix next.

It just might become the point where some roads will split and one or another current hyperscaler may no longer be able to keep up and stay in the race.

Doing custom ARM cores and fabric ASICs may be one thing (and a lot of that seems to be semi-custom with Broadcom), but doing your own wafer-scale AI custom design at the order of a Cerebras is quite a different matter.

And I wouldn't bet on Tesla/Dojo actually having succeeded and earning its money just yet or ever: Tesla is buying a surprisingly high number of Nvidia GPUs and Mr. Musk's track record on truth may be one of the ever fewer things he still shares with his ex boss.

bit_user Reply

The article said: Tesla says a single silent data error can destroy an entire training run that takes weeks to complete.

First, I thought AI models were generally more resilient to errors than that.

Second, this is not a new problem. In the field of HPC, they rely on check-pointing and revert to an earlier snapshot of the state, when an error occurs or is detected.

The article said: Given the extreme complexity of the Dojo Training Tile (the large wafer-size chip), it isn't easy to detect defective dies even during the manufacturing process

Why not? I really don't understand this claim, even if it were like Cerebras' design (which it isn't). IIRC, Tesla attaches their compute dies to a carrier, which means they should be able to test each of the compute dies first, and only mount the good ones. Of course, you can have errors introduced during the mounting phase, or which occur later, but test-before-mounting should significantly cut down on the rate of hardware defects that make it into the finished assembly.

The article said: the Stress tool has also uncovered a rare design-level flaw ... In addition, the company intends to extend the method to pre-silicon testing phases and early validation workflows to catch the aforementioned faults even before production, although it is challenging to envision exactly how this might be achieved, as SDCs can occur due to aging.

They previously said it could be used to find design flaws. That's what you'd use it for, in a pre-silicon validation scenario.