A Complete History Of Mainframe Computing

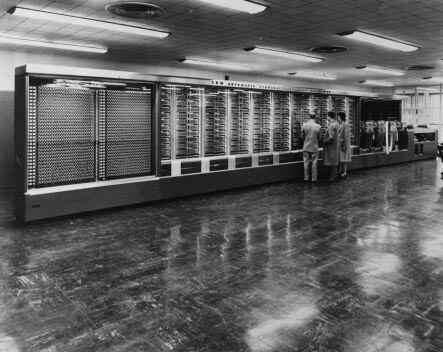

The Harvard Mark I

Our trip down mainframe lane starts and ends, not so surprisingly, with IBM. Back in the 1930s, when a computer was actually a fellow with a slide rule who did computations for you, IBM was mainly known for its punched-card machines. However, the transformation of IBM from one of the many sellers of business machines to the company that later became a computer monopoly was due in large part to forward-looking leadership, at that time going by the name of Thomas Watson, Sr.

The Harvard machine was a manifestation of his vision, although in practical terms, it was not a technological starting point for what followed. Still, it is worth looking at, just so we can see how far things have come.

It all began in 1936, when Howard Aiken, a Harvard researcher, was trying to work through a problem relating to the design of vacuum tubes (a little ironic, as you will see). In order to make progress, he needed to solve a set of non-linear equations, and there was nothing available that could do it for him. Aiken proposed to his Harvard colleagues that they build a large-scale calculator that could solve these problems. His request was not well-received.

Aiken then approached Monroe Calculating Company, which declined the proposal. So Aiken took it to IBM. Aiken's proposal was essentially a requirement document, not a true design, and it was up to IBM to figure out how to fulfill these requirements. The initial cost was estimated at $15,000, but that quickly ballooned up to $100,000 by the time the proposal was formally accepted in 1939. It eventually cost IBM roughly $200,000 to make.

It was not until 1943 that the five-ton, 51-ft. long, mechanical beast ran its first calculation. Because the computer needed mechanical synchronization between its different calculating units, there was a shaft driven by a five-horsepower motor running its entire length. The computer "program" was created by inserting wire links into a plug board. The data was read from punched cards and the results were output on punched cards or by electric typewriters. Even by the standards of the day, it was slow. It was only capable of doing three additions or subtractions per second and the machine took a rather ponderous six seconds to do a single multiplication. Logarithms and trigonometric calculations took over a minute each.

As mentioned, the Harvard Mark I was a technological dead-end, and did not do much important work during the 15 years it was used. Still, it represented the first fully-automated computing machine ever made. While it was very slow, mechanical, and lacked necessities like conditional branches, it was a computer, and represented a tiny glimpse at what was yet to come.

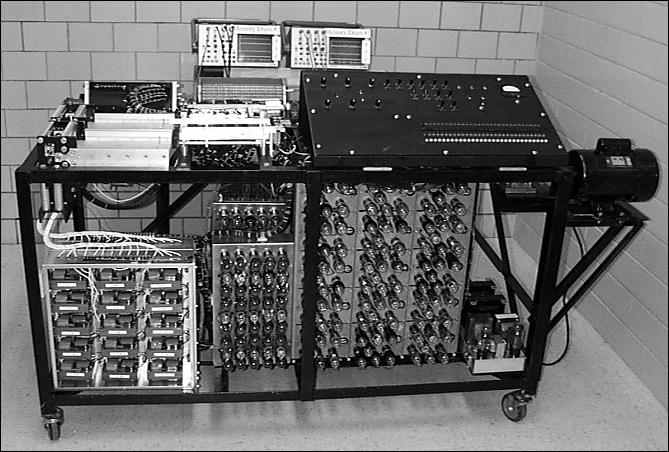

The ABC (Atanasoff-Berry Computer)

Although only recognized as such many years later, the ABC (Atanasoff-Berry Computer) was really the first electronic computer. You might think "electronic computer" is redundant, but as we just saw with the Harvard Mark I, there really were computers that had no electronic components, and instead used mechanical switches, variable toothed gears, relays, and hand cranks. The ABC, by contrast, did all of its computing using electronics, and thus represents a very important milestone for computing.


Although it was electronic, the computer's parts were very different from what is used today. Today's basic building blocks, transistors and integrated circuits, did not exist in 1939 when John Atanasoff received funding to build a prototype, so he used what was available at the time: vacuum tubes. Vacuum tubes could amplify signals and act as switches, so they could be used to create logic circuits. However, they used a lot of power, got very hot, and were very unreliable. These were tradeoffs that he and others had to live with, and they were unfortunate characteristics of the computers built from them.

The logic circuits he created with the vacuum tubes were fast, and could do addition and subtraction at 30 operations per second. The machine also worked in binary. While that is normal today, it was rare for a computer at that time, since binary was not a number system with which many were familiar. Another important technology was the use of capacitors for memory, and "jogging" them with electricity to keep their contents (similar to a DRAM refresh used today). Memory was not truly random-access though, as it was actually contained in a spinning drum that rotated fully once per second. Specific memory locations could only be read when the section of the drum they were in was over the reader. This obviously caused serious latency issues. Later, he added a punched-card machine (punched cards were very extensively used by businesses at that time to store records and perform computations on them) to hold data that could not fit in the drum memory.
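To put the drum's latency in rough numbers, here is a minimal sketch. It assumes a simplified model (not taken from the ABC's actual design) in which the requested location is equally likely to be anywhere on the drum, so the wait ranges from zero to one full rotation. The function name `rotational_latency` is made up for illustration.

```python
def rotational_latency(revs_per_second: float) -> tuple[float, float]:
    """Return (average, worst-case) wait in seconds for a location to reach the reader.

    Simplified model (an assumption): the target is uniformly distributed
    around the drum, so the average wait is half a rotation and the worst
    case is one full rotation.
    """
    if revs_per_second <= 0:
        raise ValueError("revs_per_second must be positive")
    full_rotation = 1.0 / revs_per_second
    return full_rotation / 2, full_rotation


# The article states that the ABC's drum rotated fully once per second.
average, worst = rotational_latency(1.0)
print(f"average wait: {average:.2f} s, worst case: {worst:.2f} s")
```

Under this model, a machine whose logic can manage 30 additions per second may still spend about half a second, on average, just waiting for an operand to come around.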

In retrospect, this computer wasn't terribly useful. It wasn't even programmable. But it was, at least on a conceptual level, a very important milestone for computers, and a progenitor to computers of the future. While working on this machine, Mr. Atanasoff invited a man named John W. Mauchly to view his creation. Let's find out why that was significant.

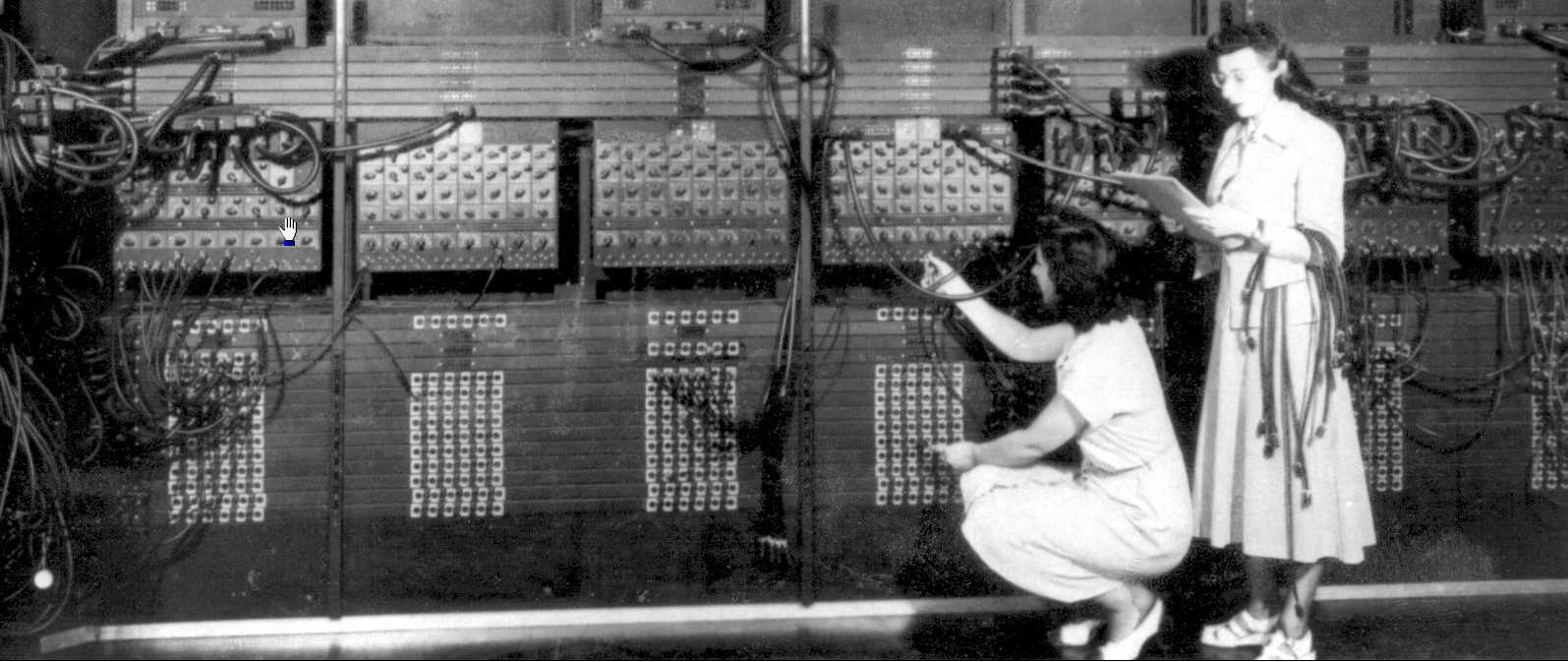

The ENIAC

On December 7, 1941, Japan attacked Pearl Harbor, drawing the United States into the conflagration known as World War II. One problem every country at war had was creating artillery ballistic tables for each type of artillery they produced. This was a huge undertaking, being both a very slow and tedious process. So, the U.S. Army granted funds to the Moore School of Electrical Engineering at the University of Pennsylvania to build an electronic computer to facilitate this work. You might have guessed from the previous section that our friend John Mauchly just happened to be there, and he then took on this project with a gifted graduate student named J. Presper Eckert.

However, World War II ended before the machine was completed. When finished in 1946, this 30-ton monstrosity consisted of forty 9-ft. high cabinets, 18,000 vacuum tubes, 1,500 relays, 70,000 resistors, 10,000 capacitors, and 6,000 manual switches, and it consumed 200 kilowatts. Although finished after the war, it hardly proved useless. Capable of 5,000 additions, 357 multiplications, or 38 divisions per second, the performance of this machine was incredible. Problems that took a human mathematician 20 hours to solve took the ENIAC only 30 seconds.

The main problem with the machine, aside from the unreliability inherent in all vacuum tube machines, was that it was not programmable in the conventional sense. "Programs" were entered by the "ENIAC girls" working on plug boards and banks of switches. This generally took from a few hours to a few days. Also, in a backward step from the ABC, the ENIAC worked with decimal and not binary numbers.

Nevertheless, the ENIAC was an extremely useful machine for the U.S., particularly with the enhancements that were later added on, until it was retired in 1955. During its lifetime, it worked on problems ranging from weather forecasts, random-number studies, thermal ignition, and wind-tunnel design to artillery trajectory calculations and even development of the hydrogen bomb. In fact, by the time of its retirement, it was estimated that the ENIAC by itself had done more calculations than all of humankind had done up to 1945.

While the story of the ENIAC trails off in 1955, our two heroes, Mauchly and Eckert, still have much to accomplish before their stories end.

The EDVAC

Even before the ENIAC ran its first test, Mauchly and Eckert were very aware of its shortcomings. So was John von Neumann, whom many of you have heard about from the expression "Von Neumann Architecture" (although he received too much personal credit for what was a group effort). At any rate, the EDVAC was the first expression of this architecture, although Mauchly and Eckert left the University of Pennsylvania, where it was being built, in 1946, before the computer was finished.

At that time, there were several major issues with the ENIAC. Sure, it was fast. But it had very little storage. More than that, it had to be reprogrammed by re-wiring it, which could take hours or even days, and it was inherently unreliable because the computer used so many vacuum tubes. In addition to being unreliable, vacuum tubes also used a lot of power, required a lot of space, and generated a lot of heat. Clearly, minimizing their use would have multiple advantages.

There were two important conceptual changes (one of which was revolutionary) on the EDVAC that seem very obvious today. For one, unlike the decimal ENIAC, it was binary, and this was much more efficient. Also, rather than rewiring the machine every time you wanted to change the "program," the EDVAC introduced the idea of storing the program in memory, just as if it were data. This is what we do today. We do not, after all, have separate RAM areas for applications and for their data (although L1 caches typically operate this way). The processor knows, based on the context in which the memory was accessed, whether it is data or an executable instruction.
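The stored-program idea can be illustrated with a toy machine. The sketch below is not the EDVAC's instruction set; the four opcodes, the single accumulator, and the `run` function are all hypothetical, chosen only to show instructions and data sitting in the same memory.

```python
def run(memory: list) -> list:
    """Execute a toy stored program; instructions and data share one memory list.

    The opcodes (LOAD, ADD, STORE, HALT) are invented for this illustration.
    """
    pc = 0    # program counter: address of the next instruction
    acc = 0   # single accumulator register
    while True:
        op, addr = memory[pc]  # fetched via the program counter, so treated as an instruction
        pc += 1
        if op == "LOAD":
            acc = memory[addr]   # fetched via an operand address, so treated as data
        elif op == "ADD":
            acc += memory[addr]
        elif op == "STORE":
            memory[addr] = acc
        elif op == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {op!r}")


memory = [
    ("LOAD", 4),   # address 0
    ("ADD", 5),    # address 1
    ("STORE", 6),  # address 2
    ("HALT", 0),   # address 3
    2,             # address 4: data
    3,             # address 5: data
    0,             # address 6: result goes here
]
print(run(memory)[6])  # 5
```

Changing the "program" here means writing different values into the first few memory cells, with no rewiring involved. Whether a cell is an instruction or data depends only on how it is reached: through the program counter or through an operand address.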

In addition, memory no longer consisted of vacuum tubes, but was stored as acoustic pulses traveling through mercury. The mercury delay line was 100 times more efficient in terms of the electronics necessary to store data and made much larger amounts of memory feasible and more reliable.

The EDVAC was a major advance, and proved very useful until it was retired in 1960. It was a binary stored-program computer, which could be programmed much more quickly than the ENIAC could. It was also much smaller, weighing less than nine tons, and consumed "only" 56 kilowatts of power. Even still, our two heroes were not done yet.

The UNIVAC

As mentioned, Eckert and Mauchly left the University of Pennsylvania in 1946 to form the Electronic Control Co. They incorporated their company in 1947, calling it the Eckert-Mauchly Computer Corp., or EMCC. Their departure delayed the completion of the EDVAC to the extent that the EDSAC, based on the EDVAC design, was actually completed before it. The dynamic duo, however, wanted to explore the commercial opportunities that this new technology offered, which was not possible with university-sponsored research, so they developed a computer that was based on their ideas for the EDVAC and even went beyond them. Along the way, they created the BINAC for financial purposes, but the Universal Automatic Computer (UNIVAC) is really the more interesting machine.

The UNIVAC was the first-ever commercial computer, 46 units of which were sold to businesses and government after its 1951 introduction. All machines before it were unique, meaning only one of each was ever made. The difference for the UNIVAC was that there were multiple UNIVACs, all built to the same design. Eckert and Mauchly correctly concluded that a computer could be used not only for computations, but also for data processing, while many of their contemporaries found the idea of using the same machine for solving differential equations and paying bills to be absurd. At any rate, this observation was critical in the design and success of the UNIVAC.

On a lower level, the UNIVAC consisted of 5,200 vacuum tubes (almost all in the processor), weighed 29,000 pounds, consumed 125 kW, and ran at a whopping 2.25 MHz clock speed. It was capable of doing 455 multiplications per second and could hold 1,000 words in its mercury delay-line memory. Each word of memory could contain two instructions, an 11-digit number and sign, or 12 alphabetical characters. Its processing speed was roughly equivalent to the ENIAC's on the tasks that the ENIAC could perform. But in virtually every other way, it was better.

Perhaps most importantly, the UNIVAC was much more reliable than the ENIAC (mainly due to its use of far fewer vacuum tubes). On top of this, the "Automatic" in its name alluded to how it required no human effort to run. All the data was stored on and read from a metal tape drive (as opposed to having to manually load the programs each time they were to be run with paper tapes or punched cards). Using tapes made actual processing much faster than on the ENIAC, since the I/O bottleneck was mitigated. And of course, the setup time spent re-wiring the ENIAC for the next "program" was eliminated. There were other niceties that made their appearance on the UNIVAC as well, like buffers (similar to a cache) between the relatively fast delay lines and relatively slow tape drives, extra bits for data checking, and the aforementioned ability to operate on both numbers and alphabetical characters.

The UNIVAC gained additional fame by correctly predicting the landslide presidential victory of Dwight Eisenhower in 1952 on national TV. This and the fact it was the first commercially available computer gave Remington Rand (which had bought EMCC) a very strong position in the burgeoning electronic computer industry. They had thrown down the gauntlet with UNIVAC. But what was IBM doing at this time?

The IBM 701

While most of our esteemed readers have a good idea of IBM's dominance in the world of computing from the mid to late 20th century, what may be less well known is where it started, how and why it happened, and how it progressed. Let's start with one of the two computers it developed at the same time as the UNIVAC.

We'll begin with the IBM 701, which was a direct competitor to the esteemed UNIVAC. Announced in 1952, the 701 had many similarities to the UNIVAC, but many differences as well. Memory was not stored in a mercury delay line, but in 3" vacuum tubes referred to as "Williams tubes," in deference to their inventor. Although they were more reliable than normal vacuum tubes, they still proved to be the greatest source of unreliability for the computer. However, one benefit was that all bits of a word could be retrieved at once, as opposed to the UNIVAC's mercury delay lines, where memory was read bit by bit. The 701's CPU was also considerably faster than the UNIVAC's: it could perform almost 2,200 multiplications per second, compared to 455 for the UNIVAC. It could also execute almost 17,000 additions and subtractions, as well as most other instructions, per second. This was remarkable for that time. IBM's eight million byte tape drive was also very good; it could stop and start much faster than the UNIVAC's and was capable of reading or writing 12,500 digits per second. However, unlike the UNIVAC with its elegant buffers, the 701's processor had to handle all I/O operations, which could severely impact performance on heavily I/O-based applications.

In 1956, IBM introduced a technology known as RAMAC, which was the first magnetic disk system for computers. It allowed data to be quickly read from anywhere on the disk and could be attached not just to the 701, but to IBM's other computers, including the 650, which we will look at next. As most of you no doubt realize, this technology is the progenitor to the hard disks that are very much with us today.

IBM produced 19 701 units, which were fewer than the number of UNIVACs made, but still enough to prevent Remington Rand from dominating the field. The cost was a serious inhibitor to more widespread use, setting the user back over $16,000 a month. Also, as mentioned, the 701 was only part of IBM's response. The 650 was the other.

The 650 Magnetic Drum Data Processing Machine

While IBM's more direct response to the UNIVAC was the 701 (and later the 702), it also was working on a lower-end machine known as the 650 Magnetic Drum Data Processing Machine (so named because it employed a rotating drum that spun at 12,500 revolutions per minute and could store 2,500 10-digit numbers). It was positioned somewhere between the big mainframes like the 701 and UNIVAC and the punched-card machines used at the time, the latter of which were still dominating the market.

While the 701 generated most of the excitement, the 650 earned most of the money and did much more to establish IBM as a player in the electronic computer industry. Costing $3,250 per month (IBM didn't sell computers at that time, but only leased them), it was much less expensive than the 701 and UNIVAC, but was still considerably more expensive than the punched-card machines so prevalent at that time. In total, over 2,000 of these machines were built and leased. While this greatly exceeded the 701's and UNIVAC's deployment, it was paltry compared to the number of punched-card accounting machines that IBM sold during the same period. Although very reliable by computer standards, it still used vacuum tubes and thus was inherently less reliable than IBM's electromechanical accounting machines. On top of this, it was considerably more expensive. Finally, the peripherals for the machine were mediocre at best. So, right up to the end of the 1950s, IBM's dominant machine was the punched-card Accounting Machine 407.

To be able to usurp the IBM Accounting Machine 407, a whirlwind of changes was needed. The computer would need better peripherals and had to become more reliable and faster, while costing less. Our next machine is not the computer that finally banished the 407 into obsolescence--at least not directly--but many of the technologies that were developed for it did.

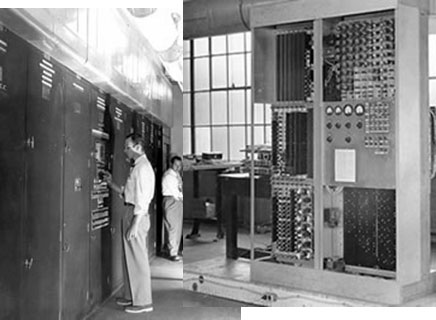

The Whirlwind Project

The Whirlwind project was ironic. It went way over budget, took much longer than intended, and was never used in its intended role, but was arguably one of the most important technological achievements in the computer field.

In 1943, when the U.S. Navy gave MIT's Jay Forrester the Whirlwind project, he was told to create a simulator to train aircraft pilots rather than have them learn by actually being in a plane. This intended use was very significant in that it required what we now call a "real-time system," as the simulator had to react quickly enough to simulate reality. While other engineers were developing machines that could process 1,000 to 10,000 instructions per second, Forrester had to create a machine capable of a minimum of 100,000 instructions per second. On top of this, because it was a real-time system, reliability had to be significantly higher than that of other systems of the time.

The project dragged on for many years, long after World War II had ended. By that time, the idea of using it for a flight simulator disappeared, and for a while, they weren't quite sure what this machine was being developed to do. That is, until the Soviets detonated their first nuclear bomb and the U.S. government decided to upgrade its antiquated and ineffective existing air defense system. One part of this was to develop computer-based command-and-control centers. The Whirlwind had a new life, and with so much at stake, funding would never be a problem.

Memory, however, was a problem. The mercury-delay line that others were using was far too slow, so Forrester decided to try a promising technology: electrostatic storage tubes. One problem he faced was that they did not yet exist, so a lot of development work had to be put into this before he would have a working product. But once it was completed, electrostatic storage tubes were deemed unreliable and their storage capacity was very disappointing. Consequently, Forrester, who was always looking for better technology, started work on what would later be called "core memory." He passed his work on to a graduate student also working on the project, called Bill Papian, who had a prototype ready by 1951 and a working product that replaced the electrostatic memory in 1953. It was very fast, very reliable, and did not even require electrical refreshes to hold its values. We'll talk more about core memory later, but suffice it to say, it was an extremely important breakthrough that quickly became the standard for well over a decade.

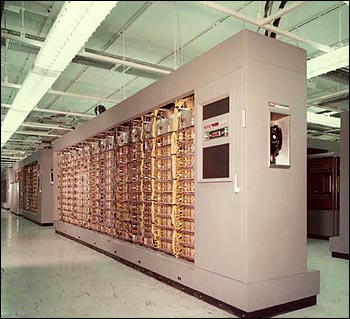

Core memory was the final piece of the puzzle. The computer was effectively complete in 1953 and first deployed in Cape Cod. Although it failed to reach the intended performance level, it was still capable of 75,000 instructions per second. This far exceeded anything available back then. The technology was transferred by MIT to IBM, where the production version was re-christened the IBM AN/FSQ-7 and saw production in 1956. These monsters had over 50,000 vacuum tubes, and weighed over 250 tons, which made them the largest computers ever built. Each also consumed over a megawatt of power, not including the necessary air conditioning.

SAGE (Semi-Automatic Ground Environment), the bomber-tracking application for which the Whirlwind was now intended, became fully operational by 1963. Ironically, this was past the time when the Whirlwind was truly useful, since it was designed to track bombers, and ICBMs had made their appearance a few years earlier. Nonetheless, while the actual uses for the Whirlwind were dubious, the technologies either created or accelerated by it were extremely important. These include not only the aforementioned core memory, but the development of printed circuits, mass-storage devices, computer graphics systems (for plotting the aircraft), CRTs, and even the light pen. Connecting these computers together gave the United States a big advantage in networking expertise and digital communications technologies. It even had a feature we lack in modern computers: a built-in cigarette lighter and ashtray. Clearly, it was worth the $8 billion that it cost to fully install SAGE, even though SAGE never helped intercept a single bomber.

The IBM 704

Announced in 1954, the IBM 704 was the first large-scale commercially-available computer system to employ fully automatic floating-point arithmetic commands and the first to use the magnetic core memory developed for the Whirlwind.

Core memory consisted of tiny doughnut-shaped metal pieces that were roughly the size of a pin-head with wires running through them, which could be magnetized in either direction, giving a logical value of zero or one. Core memory had a lot of important advantages, not the least of which was that it did not need power to maintain its contents (an advantage it holds over modern memory). It also allowed truly random access, where any memory location was accessed as quickly as any other (except when interleaving was used, of course). This was not the case with prior forms of memory. It was considerably faster than other memory technologies of the time, having an access time of 12 microseconds. Perhaps most important, however, was the much greater reliability that it gave the IBM 704.

For longer-term storage, the 704 used a magnetic drum storage unit. For additional storage, tapes capable of holding five million characters each were used.

The 704 was quite fast, being able to perform 4,000 integer multiplications or divides per second. However, as mentioned, it was also capable of doing floating point arithmetic natively and could perform almost 12,000 floating-point additions or subtractions per second. More than this, the 704 added index registers, which not only dramatically sped up branches, but also reduced program development time (since this was handled in hardware now).
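As a rough illustration of what an index register buys the programmer, the sketch below walks through a block of memory by combining a fixed base address with a changing index, rather than rewriting the address inside the instruction on every pass. It is a simplified model with hypothetical names; the 704's real addressing rules differ in detail.

```python
def sum_with_index_register(memory: list[int], base: int, count: int) -> int:
    """Sum `count` words starting at address `base`.

    `index` plays the role of a hypothetical index register: the hardware
    combines it with the fixed base address on every access, so the program
    itself never has to be modified while the loop runs.
    """
    index = 0   # index register
    total = 0   # accumulator
    while index < count:
        effective_address = base + index  # simplified address calculation
        total += memory[effective_address]
        index += 1
    return total


memory = [0, 0, 10, 20, 30, 40]
print(sum_with_index_register(memory, base=2, count=4))  # 100
```

Without such a register, a loop like this typically had to alter its own instructions in memory to step through successive addresses, which was slower and more error-prone to write.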

The 704 pioneered two major technologies we still have today: index registers and floating-point arithmetic. Magnetic core memory was also extremely useful, offering far greater speed and reliability, but it was a transient technology.

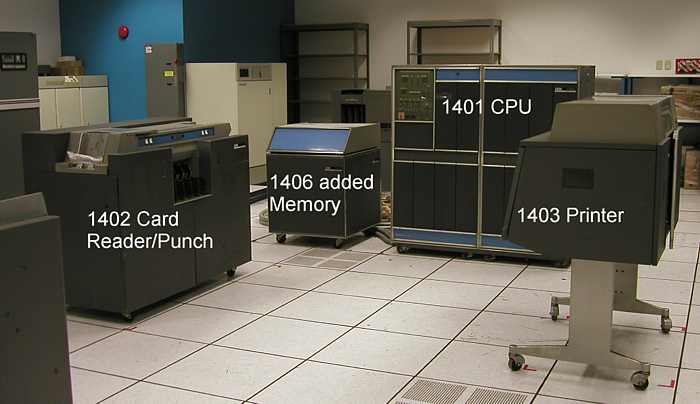

The IBM 1401 Data Processing System

While the 650 put IBM on the map, its replacement, the IBM 1401 Data Processing System, was the computer that made punched-card machines obsolete. It is considered to be the "Model T" of the computer industry, since its combination of functionality and relatively low cost allowed many businesses to start using computer technology. Its popularity helped IBM become the dominant computer company of the era. Ironically, its success was not entirely good for IBM, and this machine was surely not its biggest or most profitable. In fact, in some cases, it was just used as an adjunct to its bigger brothers to transfer data on punched cards to tape and to print.

However, for the first time, the cost, reliability, and functionality made computers very attractive to many customers. Compared to the 650 it replaced, the 1401 was roughly seven times faster, more reliable, and better-supported. Perhaps most importantly, it had better I/O. IBM had the perspicacity to develop a machine that actually did what its customers really needed and at a cost that made sense to them. In some ways it was too good, as it was problematic when customer after customer returned their rented accounting machines to IBM for these new wonders. This caused a lot of short-term problems for IBM, but it was farsighted enough to bear the pain. And history has recorded how well the new computing business model later paid off.

So, what made this computer resonate so well with customers? Core memory, transistors, software, and a printer were all tremendous advances, any one of which would have made the computer a big advance over the 650. Put them all together, and the machine outsold IBM's expectations by over 12 to one.

We have already been introduced to core memory in the description of the 704. Its virtues of speed, reliability, high capacity, and lower power use made this a very important technology. The 704 was a very expensive machine, however, and was not affordable for many businesses. The 1401 moved this technology to a much larger market.

By now we all know what transistors are, but the improvement over existing technologies at the time included reliability, power use, heat dissipation, and cost.

The holistic approach IBM took also included software. For the first time, free of charge, IBM included software packages for most of the needs of its customers rather than make its customers develop their own. This was critically important, since it saved considerable time and money on in-house development and allowed businesses that did not have programmers to finally derive the benefits of computers.

And strangely, one of the biggest advantages of the 1401 was its printer. The 1403 "chain" printer had a rated speed of 600 lines per minute, which was four times the speed of the 407 accounting machine. It was also very reliable. In fact, for many, the 1403 was a salient characteristic of the system and often sold the computer that went with it.

All of these contributed to a machine that transformed the computer industry. It was extremely successful not only thanks to its excellent technical characteristics, but also due to its low starting price of only $2,500 per month. In fact, after the release of the 1401, the computer industry became known as IBM and the seven dwarfs. The 1401 was that good.

The IBM 7090

IBM announced the 7090 in late 1958 as the replacement for the aging 709 (the last of the 700 line we saw earlier). In many ways, the 7090 was essentially a 709 made with 50,000 transistors rather than vacuum tubes. However, the switch brought many benefits, including both speed and reliability.

The 7090 and its later upgraded form, the 7094, were classic, powerful, and very large mainframe computers--and they were very expensive. The 7090 cost around $63,500 a month to rent in a typical configuration, and that did not include electricity.

Despite its cost, the speed of this machine could still make it very appealing. It was roughly five to six times faster than the 709 it had replaced, and was capable of 229,000 additions or subtractions, 39,500 multiplications, or 32,700 divisions in one second. The 7094, announced in 1962, was capable of 250,000 additions or subtractions, 100,000 multiplications, and 62,500 divisions per second. It could use 32,768 36-bit words of core storage.

However, outside of implementing the newest technologies (core memory, RAMAC, transistors, etc.) and the consequent improvement in speed, power use, and reliability, it was not functionally very different from its predecessor. Jobs were executed by collecting them on reels of tape and were run in batches, and the results were given back to the programmer when done.

While the performance, capacity, and reliability of these machines were impressive (mainly due to the move to transistors and other new technologies), it would be a stretch to call this a groundbreaking machine that pushed the boundaries of computing.

Ramar: Wonderful article, thanks Tom's. =]

Killed a good hour of my day, and I very much enjoyed it.

1ce: Really cool. One observation, on page 7 I think the magnetic drum is rotating 12,500 revolutions per minute, not per second... If my hard drive could spin at 12,500 revolutions per second I'm sure it could do all sorts of amazing things like flying or running Crysis.

pugwash: Good article, although not quite "Complete". There is no mention of Colossus (which was used to break Enigma codes from 1944) or the Manchester Small-Scale Experimental Machine (SSEM), nicknamed Baby, which was the world's first stored-program computer and ran its first program in June 1948.

neiroatopelcc: So the ABC was in fact the first mobile computer? The picture does show wheels under the table at least :) But I guess netbooks are easier to handle, and have batteries.

dunnody: I am with pugwash - it's a good article but why does it seem like it is a bit US centric, no mention of Alan Turing or "Baby" and the Enigma code cracking machines of Bletchley Park.

candide08: I agree with others, in that I am surprised that there was not even a mention of a Turing machine or other very early "computers".

Surely they qualified as Mainframes of their times?

It's a shame that multiplication, addition and division benchmarks are not persistently noted throughout the article.

I know that nowadays it's very much dependent on software design, but it would still be nice to follow the progression in terms of calculation power of the machines.