A Complete History Of Mainframe Computing

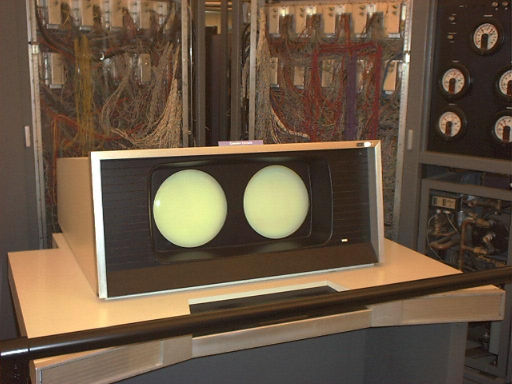

The IBM 7030 (The Stretch)

IBM's 7030, or Stretch, is something of a paradox. It introduced new technologies, many of which are still in use today, and it remained the fastest computer in the world for three years after its introduction. Yet it was considered such a failure that IBM cut its price and then quickly discontinued it, losing around $20 million. How could this be?

In 1956, Los Alamos Scientific Laboratory awarded IBM a contract to build a supercomputer. The goal was a hundred-fold improvement over the IBM 704's performance, a very ambitious target indeed. In the end, when it was released in 1961, the 7030 outperformed the 704 by a factor of up to 38. Because of this "disappointing" performance, IBM was forced to lower the price of the machine from $13.7 million to a paltry $7.78 million, which meant IBM lost money on every machine. As a result, once it had met its contractual obligations, IBM withdrew the 7030 from the market, a major disappointment and failure. Or was it?

Not only was the performance of this machine far ahead of its time (0.5 MIPS), but the technologies it introduced read like a who's who of modern computing. Does instruction prefetching sound familiar? Operand prefetching? How about parallel arithmetic processing? There was also a 7619 unit that channeled data from core memory to external units like magnetic tapes, console printers, card punches, and card readers. This was an expensive forerunner of the DMA functionality we use today, although mainframe channels were actual processors in their own right and far more capable than DMA. Stretch also added interrupts, memory protection, memory interleaving, memory write buffers, result forwarding, and even speculative execution. It even offered a limited form of out-of-order execution called instruction pre-execution. You probably already surmised that the processor was pipelined.

The applications are almost equally impressive. The 7030 was used for nuclear weapons development, meteorology, national security, and the development of the Apollo missions. This work became feasible only with Stretch, thanks to its enormous memory (256,000 64-bit words) and incredible processing speed: it could perform over 650,000 floating-point additions and over 350,000 multiplications per second. Up to six instructions could be in flight within the indexing unit and up to five within the look-ahead and parallel arithmetic unit, so up to 11 instructions could be in some stage of execution within Stretch at any one time. Even compared to the excellent 7090 released at that time, the 7030 was anywhere from 0.8 to 10 times as fast, depending on the instruction stream.

So, while the 7030 had a short but very useful life, its technology is still with us today, and it had a very important impact on the legendary System/360 family. This could easily be the most important computer in the history of mainframes. Yet it was a failure. Who says life makes sense?

The B 5000

By now, at least a few of you would probably like to remind me that IBM was not the only company to make a computer since the UNIVAC. Your point is well taken, so let us take a look at a machine from Burroughs, the B 5000. This is a really interesting machine, especially considering that it was announced in 1961. In fact, to this day, UNISYS still supports the software.

The B 5000 was designed for high-level languages, namely COBOL and ALGOL: its machine language was created mainly for easy translation from those languages. It had a hardware stack and segmentation, and it used descriptors to control data access.

The descriptors had many uses, including bounds checking in hardware, distinguishing between character strings and arrays of words, easing dynamic array allocation, indicating the size of characters, and even recording whether a piece of data was in core memory or not. Why would we need that? In two words, virtual memory. The B 5000 was the first commercial computer with this technology. It also supported multiprocessing and multiprogramming, even with ALGOL and COBOL. In fact, the Master Control Program (MCP), as the operating system was called, handled memory and input/output unit assignments, segmentation of programs, subroutine linkages, and scheduling, freeing the programmer from all these tedious and time-consuming tasks.
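
To make the descriptor idea concrete, here is a minimal Python sketch, not the real B 5000 word format. It assumes a descriptor holds just a presence bit, a base address, and a length; every read is bounds-checked, and reading an absent segment triggers a simulated "presence fault" that copies it into core. The names `Descriptor`, `Machine`, `load_segment`, and `backing_key` are hypothetical, and the bump allocator is a deliberate simplification of what the MCP actually did.

```python
from dataclasses import dataclass


class BoundsError(Exception):
    """Raised when an index falls outside its segment (the hardware bounds check)."""


@dataclass
class Descriptor:
    # Hypothetical fields for illustration; not the real B 5000 bit layout.
    present: bool     # is the segment currently in core memory?
    base: int         # core address of the segment (meaningful only when present)
    length: int       # number of words in the segment
    backing_key: str  # where the segment lives when it is not in core


class Machine:
    def __init__(self, core_size: int) -> None:
        self.core = [0] * core_size
        self.next_free = 0                       # trivial bump allocator for core
        self.backing: dict[str, list[int]] = {}  # stand-in for drum or disk storage

    def load_segment(self, d: Descriptor) -> None:
        """Simulated presence fault: copy the segment from backing store into core."""
        data = self.backing[d.backing_key]
        if len(data) != d.length or self.next_free + d.length > len(self.core):
            raise MemoryError("segment does not fit")
        d.base = self.next_free
        self.core[d.base:d.base + d.length] = data
        self.next_free += d.length
        d.present = True

    def read(self, d: Descriptor, index: int) -> int:
        """Read one word through a descriptor, checking bounds and presence first."""
        if not 0 <= index < d.length:
            raise BoundsError(f"index {index} outside segment of length {d.length}")
        if not d.present:
            self.load_segment(d)  # the MCP handled this without the programmer's help
        return self.core[d.base + index]


m = Machine(core_size=64)
m.backing["ARRAY_A"] = [10, 20, 30]
a = Descriptor(present=False, base=0, length=3, backing_key="ARRAY_A")
print(m.read(a, 2), a.present)  # 30 True: the first access triggered a presence fault
```

The point of the design is that the program only ever names the descriptor; whether the data is in core, and whether the index is legal, is checked on every access rather than trusted.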

Another aspect Burroughs was proud of was the modular nature of the computer. It could be expanded or reduced without costly "reprogramming" of the entire machine.

The B 5000 was not the commercial success IBM mainframes were. In fact, it was sometimes referred to as the machine everyone loves but no one buys. However, its design was nothing less than elegant and efficient. It focused on solving problems within the context of how humans interacted with and related to computers, as opposed to speed for the sake of speed. Perhaps more importantly, some of the technologies it introduced, like virtual memory and multiprocessing, are necessities in present computers, some of which still support this magnificent architecture 48 years after it was introduced.

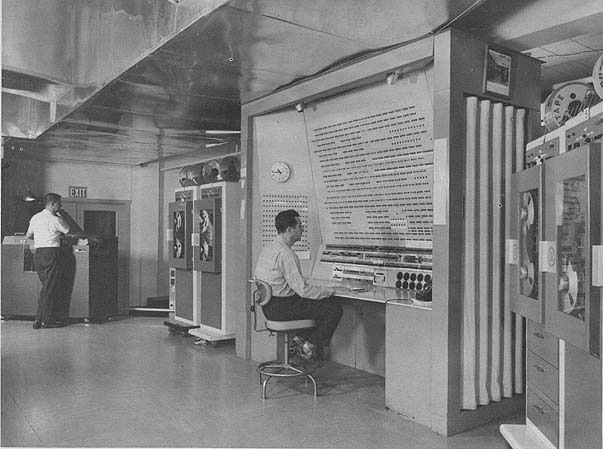

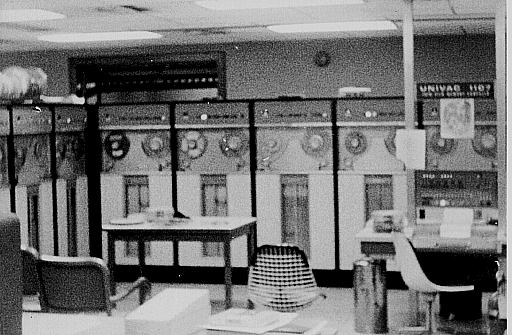

The UNIVAC 1107

While IBM deserves much praise for the innovations first expressed in Stretch, Sperry Rand (formed by the 1955 merger of Sperry and Remington Rand), the number-two computer company in the world at the time, was busy conjuring up some of its own magic with the UNIVAC 1107 Thin Film Memory Computer.

As you no doubt guessed from its name, the main technological accomplishment was the use of thin-film memory. It had an access time of 300 nanoseconds and a complete cycle time of 600 nanoseconds, making it extremely fast for 1962, when the machine was released. However, it did not replace core memory, which had a cycle time of roughly two microseconds; instead, it provided multiple accumulators, multiple index registers, and multiple input/output control registers. This allowed for greater parallelism and, in the end, greater speed. In total, there were 128 36-bit words of thin-film memory (alternatively called "control memory" because of its function). By today's standards, this would not be considered memory at all, but part of the processor, much like registers, though in both cases we are really talking about very fast internal memory. One difference is that the control-memory registers were accessed by memory address rather than by register name, but only when using special instruction designators or when referred to by an execution address. Otherwise, the same addresses mapped to core memory. So, rather strangely, the memory map for the first 128 words differed depending upon the context.
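
The context-dependent mapping of the low addresses is easier to see in a toy model. In this Python sketch, the boolean `via_control_designator` is a hypothetical stand-in for "accessed through a special instruction designator or as an execution address"; the core size default is an arbitrary assumption, and no timing is modeled.

```python
CONTROL_MEMORY_WORDS = 128   # thin-film "control memory" size, from the text
WORD_MASK = (1 << 36) - 1    # 36-bit words


class Univac1107Memory:
    """Toy model of the 1107's context-dependent low addresses (not cycle-accurate)."""

    def __init__(self, core_words: int = 65536) -> None:  # core size is an assumption
        self.control = [0] * CONTROL_MEMORY_WORDS
        self.core = [0] * core_words

    def _target(self, address: int, via_control_designator: bool) -> tuple[list[int], int]:
        # Low addresses reach thin-film memory only in the special contexts;
        # otherwise the very same address falls through to core memory.
        if via_control_designator and address < CONTROL_MEMORY_WORDS:
            return self.control, address
        return self.core, address

    def store(self, address: int, value: int, via_control_designator: bool = False) -> None:
        bank, offset = self._target(address, via_control_designator)
        bank[offset] = value & WORD_MASK

    def load(self, address: int, via_control_designator: bool = False) -> int:
        bank, offset = self._target(address, via_control_designator)
        return bank[offset]


mem = Univac1107Memory()
mem.store(5, 111, via_control_designator=True)  # lands in thin-film memory
mem.store(5, 222)                               # same address, lands in core
print(mem.load(5, via_control_designator=True), mem.load(5))  # 111 222
```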

While the thin-film memory certainly made the biggest splash, there were other interesting features of this enduring line worth mentioning. For one, it used a 36-bit word, with characters expressed in six bits. Memory banks were interleaved, so if successive reads came from different banks, the access time was only 1.8 microseconds; if they came from the same bank, it was four microseconds. As mentioned, this averaged out to about two microseconds, since successive reads were more likely to fall in different banks. The 1107 also contained 16 input and 16 output channels, all of which could be used concurrently to support a maximum of 250,000 words per second.
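
The 1.8 and four microsecond figures allow a quick sanity check of the two-microsecond average. Assuming only two cases (the next read switches banks or it does not), this Python sketch solves for the fraction of bank-switching reads the average implies. The two-case model is an assumption; real timing depended on more than bank switching.

```python
DIFFERENT_BANK_US = 1.8  # successive read from a different bank (from the text)
SAME_BANK_US = 4.0       # successive read from the same bank (from the text)


def average_access_us(p_different: float) -> float:
    """Expected access time when a fraction p_different of reads switch banks."""
    return p_different * DIFFERENT_BANK_US + (1 - p_different) * SAME_BANK_US


def fraction_needed(target_us: float) -> float:
    """Solve average_access_us(p) == target_us for p."""
    return (SAME_BANK_US - target_us) / (SAME_BANK_US - DIFFERENT_BANK_US)


p = fraction_needed(2.0)
print(f"{p:.3f}")                        # 0.909
print(f"{average_access_us(p):.2f} us")  # 2.00 us
```

In other words, a two-microsecond average implies that roughly 10 out of every 11 successive reads went to a different bank.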

The main storage of the machine consisted of one to eight magnetic drums, each capable of storing from 262,144 to 6,291,456 words, giving this machine an enormous capacity of over 94 million 36-bit words (or over half a billion characters of storage).

Although the UNIVAC 1107 was without question a fine machine in its own right, its more important significance was the family of computers it started. While never approaching the sales of a series of computers that IBM would soon introduce, UNIVAC's 1100-series made the company the second-largest in the world for many years and is still supported by UNISYS today. But enough of the horse that placed. Let's head back to Big Blue.

IBM's System/360 Series

When most people think of a mainframe, they think of the System/360 family of computers from IBM, arguably the most important computer architecture ever created. In many ways, it is similar to the 8086 in that it created the standard for an industry and spawned a long line of descendants that are still alive and thriving to this day. One big difference is that IBM actually intended the System/360 to be important, unlike the 8086, which gained an importance its creator could never have foreseen. In fact, as many of you know, Intel even tried to kill off that instruction set with the Itanium.

But let's get back to the matter at hand. Prior to the System/360, IBM had something of a mess on its hands, having created many systems that were incompatible with each other. Not only did this make it more difficult for its customers to upgrade, but it was also a logistical nightmare for IBM to support all these different operating systems on different hardware. So, IBM decided to create what we almost take for granted today: a compatible line of computers, with differing speeds and capacities, but all capable of running the same software. In April 1964, IBM announced six computers in the line, with performance varying by a factor of 50 between the highest- and lowest-end machines. This was double the design goal of 25, which in itself posed many problems for IBM. Even the famed and brilliant Gene Amdahl had said that scalability of this magnitude was impossible. It was never simply a matter of making the largest machine 25 times "bigger" than the smallest; each model really had to be implemented from scratch.

Today, it is common to disable parts of a processor, or underclock it, to create lower-performance models. But back then, it was not economically feasible to build a high-end processor and artificially lower its performance for marketing purposes. So, IBM decided to add "microprogramming" to the System/360, so that all members of the family used the same instruction set (except the lowest-end Model 20, which could execute only a subset). Each instruction was then broken down into a series of "micro-operations" specific to that system's implementation. By doing this, the underlying processors could be very different, which allowed scalability of the magnitude IBM wanted and, as mentioned, even exceeded that goal by a factor of two.
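
As a rough illustration of the idea, the Python sketch below dispatches one architected instruction to model-specific micro-routines. `AR` is the System/360 Add Register mnemonic, but the "small" and "large" models, the 8-bit data path assumed for the small one, and the step counts are hypothetical, and the real instruction's condition code and overflow handling are ignored.

```python
MASK32 = 0xFFFFFFFF


def add_wide(regs: list[int], r1: int, r2: int) -> int:
    """'Large' model: one 32-bit micro-operation does the whole add. Returns micro-op count."""
    regs[r1] = (regs[r1] + regs[r2]) & MASK32
    return 1


def add_narrow(regs: list[int], r1: int, r2: int) -> int:
    """'Small' model: four 8-bit micro-operations with a carry passed between them."""
    a, b = regs[r1], regs[r2]
    result, carry, steps = 0, 0, 0
    for byte in range(4):  # least significant byte first
        shift = 8 * byte
        s = ((a >> shift) & 0xFF) + ((b >> shift) & 0xFF) + carry
        result |= (s & 0xFF) << shift
        carry = s >> 8
        steps += 1
    regs[r1] = result  # a final carry out of the top byte is discarded
    return steps


# Same architected instruction, different microcode per model.
MICROCODE = {"small": {"AR": add_narrow}, "large": {"AR": add_wide}}


def execute(model: str, opcode: str, regs: list[int], r1: int, r2: int) -> int:
    """Dispatch an architected instruction to this model's micro-routine."""
    return MICROCODE[model][opcode](regs, r1, r2)


for model in ("small", "large"):
    regs = [0] * 16  # 16 general-purpose registers
    regs[1], regs[2] = 0x00FFFFFF, 0x00000001
    steps = execute(model, "AR", regs, 1, 2)
    print(model, hex(regs[1]), steps)
# small 0x1000000 4
# large 0x1000000 1
```

Software sees the same result on both models; only the number of internal steps, and therefore the speed and the cost of the hardware, differs.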

This probably sounds familiar, since something similar has been done on x86 processors since the Pentium Pro (or really, the NexGen Nx586). As mentioned, however, IBM planned this. The x86 designers did it because the instruction set was so poor that it could not be executed efficiently in hardware directly. There was also one very important advantage of this microprogramming that could not easily be implemented on a microprocessor. By creating new microprogram modules, the System/360 could be made compatible with the very popular 1401 on the lower-end machines, and even the 7070 and 7090 on the higher-end System/360s. Since this was done in hardware, it was much faster than any software emulation, and it generally ran older software much faster on the System/360 than on the native machine, because the newer machine was more advanced.

Some of these advances are still with us today. For one, the System/360 standardized the byte at eight bits and used a 32-bit word, both of which simplified the design since they were powers of two. All but the lowest-end Model 20 had 16 general-purpose registers (the same number as x86-64), whereas most previous computers had an accumulator, possibly an index register, and perhaps other special-function registers. The System/360 could also address an enormous 16 MB of memory, although that much memory was not available at the time. The highest-end processor ran at a very respectable 5 MHz (recall that this is the speed at which the 8086 was introduced 14 years later), while the low-end processors ran at 1 MHz. Models introduced later, in 1966, also had pipelined processors.

While the System/360 did break a lot of new ground, in other ways it failed to implement important technologies. The most glaring deficiency was the lack of dynamic address translation (except in the later Model 67). This not only made virtual memory impossible, but also made the machine poorly suited for proper time-sharing, which was becoming a possibility with the increasing performance and resources of computers. Also, IBM eschewed the integrated circuit and instead used "solid-logic technology," which could roughly be considered somewhere between the integrated circuit and simple transistor technology. Conversely, on the software side of things, IBM was perhaps a bit too ambitious with OS/360, one of the operating systems designed for the System/360. It was late, used a lot of memory, lacked some promised features, and, worse, remained buggy long after it was released. It was a well-known, high-visibility, and dramatic failure, although IBM eventually did get it right and it spawned very important descendants.

Despite these issues, the System/360 was incredibly well-received and over 1,100 units were ordered in the first month, far exceeding even IBM's goals and capacity. Not only was it initially successful, but it proved enduring and spawned a large clone market. Clones were even made in what was then the Soviet Union. It was designed to be a very flexible and adaptable line, and was used extensively in all types of endeavors, perhaps most famously the Apollo program.

More importantly, the System/360 started a line that has been the backbone of computing for almost 50 years, and represents one of the most commercially important and enduring designs in the history of computing.

The CDC 6600

While IBM was busy focusing on a wide swath of compatible systems with its System/360 line, a company called CDC had a different design goal for its next computer: fast and really fast.

Unshackled by any other considerations, such as compatibility or cost, Seymour Cray was free to use his legendary talents to focus on raw speed. He succeeded, as the roughly $7 million machine was the fastest computer from 1964 to 1969 by employing a unique design that relied on what would now be called an asymmetric multiprocessor design.

The main CPU ran at a blazing 10 MHz, but it could perform only a limited set of instructions, since it was in a very real sense a RISC processor long before the term was coined. It handled only simple ALU functions, but it was complemented by 10 logical peripheral processors that did what the CPU could not, keeping it fed with data and moving its results out. Making the CPU more specialized, and gaining parallelism through the 10 "barrel" peripheral processors, were key to the exceptional performance of this machine. With an enormous amount of memory (128 K words), this 60-bit computer could trade larger executable size for the additional performance that a simple instruction set offered.
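
The "barrel" arrangement meant the 10 logical peripheral processors shared one set of execution hardware, each getting a turn in strict rotation. This Python sketch shows only that rotation: one step per slot, with an idle context still consuming its slot. The step names are hypothetical and no 6600 timing is modeled.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class PPContext:
    """State of one logical peripheral processor: a name and a queue of pending steps."""
    name: str
    steps: deque = field(default_factory=deque)


def run_barrel(contexts: list[PPContext], max_slots: int) -> list[str]:
    """Give the single shared execution unit to each context in strict rotation.

    Each slot executes at most one step for the context whose turn it is;
    idle contexts still consume their slot, as in a fixed-rotation barrel.
    """
    trace = []
    for slot in range(max_slots):
        ctx = contexts[slot % len(contexts)]
        if ctx.steps:
            trace.append(f"{ctx.name}:{ctx.steps.popleft()}")
        else:
            trace.append(f"{ctx.name}:idle")
    return trace


pps = [PPContext(f"PP{i}") for i in range(10)]
pps[0].steps.extend(["read card", "move to central memory"])
pps[3].steps.extend(["start tape", "check status"])
for entry in run_barrel(pps, max_slots=20):
    print(entry)
```

Because the rotation is fixed, each logical processor sees one-tenth of the shared hardware's speed, but the hardware itself is never left waiting on any single slow I/O task.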

Although the CDC 6600 was a profitable machine, it was never a threat to the System/360’s market share–nor was it ever intended to be. Like our next machine, it showed that it was sometimes better to compete where IBM was not, rather than where it had targeted. The 6600 targeted a market above what even the System/360 Model 75 could reach, while the next computer we look at targeted a market below where the System/360 Model 20 could.

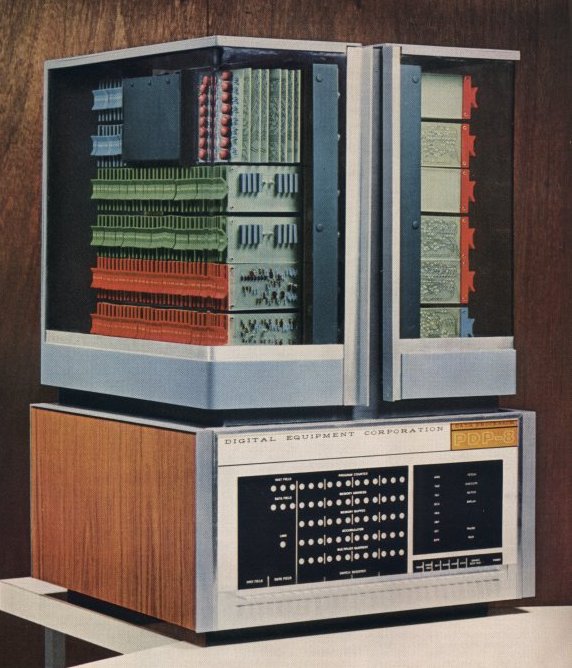

DEC's PDP-8

While IBM was busy releasing its magnificent System/360 line, Digital Equipment Corp. (DEC) was about to release a computer that would have a major impact on the future of computing as well, the PDP-8. Although the different computers in System/360 had an enormous range of performance characteristics and capacities, they were still mainframes, and even the lowest-end models were still too expensive for many businesses. This opportunity was not lost on DEC's founder, Ken Olsen.

Although DEC had released computers as early as 1960, these models were only modestly successful and had little impact on the industry. However, the steady advance of technology, in the form of cheaper transistors packed onto compact logic modules (integrated circuits arrived with later models such as the PDP-8/I), allowed DEC to sell a much smaller and much less expensive computer than its predecessors. The new technology also meant much lower power use and, consequently, much less heat dissipation. This freed computers from purpose-built air-conditioned rooms. When released in 1965, the PDP-8 sold for an astonishingly low price of $18,000, which, together with its modest housing requirements, put computers within the reach of many companies that had previously found them prohibitively expensive.

One unique feature of the PDP-1, DEC's first product, was the use of true direct memory access (DMA), which was much cheaper and less complicated than the channels mainframes used, with little negative impact on processor performance. In fact, a single mainframe channel cost more than an entire PDP-1. DMA was used on every successive computer DEC made, including the PDP-8. However, not all the cost-cutting compromises made for the PDP-8 were so benign. The 12-bit word length dramatically limited the amount of directly addressable memory, and only 7 bits of the instruction word formed the address field, allowing only 128 words to be addressed directly. There were ways around this drawback. One was indirect addressing, where the 7 bits pointed to a memory location that held the actual address you wanted to access; this was slower but allowed a full 12-bit address. The other was to divide memory into pages of 128 words and change pages when necessary (and people thought the 64 K segments of 16-bit x86 processors were bad). Neither solution was desirable, and they severely limited the usefulness of the PDP-8 with high-level languages. The PDP-8 was also no speed demon, capable of only 35,000 additions per second.
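
Here is how those workarounds combine when the machine forms an address. On the PDP-8, a memory-reference instruction packs a 3-bit opcode, an indirect bit, a page bit (page zero or the page holding the instruction), and the 7-bit offset into one 12-bit word. This Python sketch is simplified: it ignores the auto-index locations (0o10 to 0o17) and extended memory.

```python
MEMORY_WORDS = 4096    # 12-bit addresses: 2**12 words
PAGE_MASK = 0o7600     # top 5 bits of an address select one of 32 pages
OFFSET_MASK = 0o0177   # 7-bit offset within a 128-word page
INDIRECT_BIT = 0o0400
CURRENT_PAGE_BIT = 0o0200


def effective_address(instr: int, instr_addr: int, memory: list[int]) -> int:
    """Compute the effective address of a PDP-8 memory-reference instruction.

    Simplified: ignores auto-indexing (locations 0o10-0o17) and extended memory.
    """
    offset = instr & OFFSET_MASK
    if instr & CURRENT_PAGE_BIT:
        addr = (instr_addr & PAGE_MASK) | offset  # same page as the instruction
    else:
        addr = offset                             # page zero
    if instr & INDIRECT_BIT:
        addr = memory[addr] & 0o7777              # one extra memory cycle
    return addr


memory = [0] * MEMORY_WORDS
memory[0o20] = 0o5432  # a pointer stored on page zero
print(oct(effective_address(0o1205, 0o2340, memory)))  # TAD, current page: 0o2205
print(oct(effective_address(0o1420, 0o2340, memory)))  # TAD I, via page zero: 0o5432
```

Direct references reach only page zero or the current page; anything else costs an indirect hop through a stored pointer, which is exactly the slowdown described above.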

Despite these compromises, the PDP-8 was remarkably successful, selling over 50,000 machines before it was discontinued. The low purchase price, low continuing costs, and ease of housing it all were more compelling than its deficits were damning. In fact, this modest machine sparked a whole new type of computer, called the mini-computer, which became very successful for over two decades and made DEC the second-largest computer company in the world. Perhaps sadly, the mini-computer did not survive the march of the micro-computer, and is now an extinct species, and thus is more applicably called a dinosaur than the normal recipient of that unflattering term, the mainframe. The mainframe still sits on top of the food chain, capable of things far beyond desktop computers.

The System/370

Although the System/360 was very successful, and in some ways revolutionary and innovative, it also eschewed leading-edge technologies, leaving opportunities for other companies to exploit. To its credit, however, it was still selling well six years after its announcement, and it laid a foundation for the generations that followed, the first of which was the System/370.

The initial launch of the System/370 in 1970 consisted of just two machines, the charismatically named 155 (running at almost 8.7 MHz) and 165 (running at 12.5 MHz). Naturally, both machines were compatible with programs written for the System/360 and could even use the same peripherals. Performance was also greatly improved, with the System/370 Model 165 offering close to five times the performance of the System/360 Model 65, which had been the fastest machine available in that line when it was released in November 1965.

There were also several new technologies in the System/370 compared to the System/360. IBM finally moved to the integrated circuit, a change many people thought long overdue. Most models in the line used semiconductor memory rather than core memory. The System/370 also finally supported dynamic address translation (on all but the initial two models), an important technology for time sharing and virtual memory. There was also a very high-speed memory cache (80 ns on the 165), which IBM called a buffer. The processor used it to mitigate the relatively slow main memory access time (two microseconds, or 2,000 ns). Another important consideration was that the System/370 was built from the beginning with dual processors and multiprogramming in mind.
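
Why a small buffer helps so much follows from a simple weighted average of the 80 ns and 2,000 ns figures. This Python sketch assumes hits cost the buffer time and misses cost the full main-memory time; the hit ratios in the loop are hypothetical, not measured Model 165 data, and the extra cost of filling the buffer on a miss is ignored.

```python
CACHE_NS = 80      # System/370 Model 165 buffer (cache) access time, from the text
MEMORY_NS = 2000   # main memory access time, from the text


def effective_access_ns(hit_ratio: float) -> float:
    """Average access time if hits cost CACHE_NS and misses cost MEMORY_NS.

    Simplification: a miss is charged only the main-memory time, with no extra
    cost for the failed buffer lookup or for filling the buffer.
    """
    return hit_ratio * CACHE_NS + (1 - hit_ratio) * MEMORY_NS


for h in (0.0, 0.90, 0.95, 0.99):
    print(f"hit ratio {h:.2f}: {effective_access_ns(h):7.1f} ns")
```

Under these assumptions, a 95% hit ratio brings the average down to about 176 ns, more than an elevenfold improvement over going to main memory every time.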

So, while the System/370 was not a spectacular announcement, it did plug up some glaring holes in the System/360, improved speed considerably, expanded the instruction set, and maintained a high degree of compatibility. It was a solid step forward and maintained IBM's dominance in the mainframe world.

The 3033: The Big One

While the System/370 line dominated mainframe computing for many years by introducing new models with new features and performance characteristics, IBM announced in March 1977 the successor to this very successful family of computers, the 3033, or "The Big One."

Although IBM mainly stressed the additional speed (1.6 to 1.8 times that of the System/370 168-3) and its much smaller size (ironic for "The Big One"), this machine's technical features would not look out of place in a modern computer. Running at 17.24 MHz, the processor sported an eight-stage pipeline, branch prediction, and even speculative execution. It contained several logical units and 12 channels. The units of the 3033 processor were the instruction preprocessing function (IPPF), execution function (E-function), processor storage control function (PSCF), maintenance and retry function, and the channels indigenous to all IBM mainframes. The IPPF fetched instructions, prepared them for execution by the E-function, determined priority, and made fetch requests for operands. It not only used branch prediction, but could also buffer three instruction streams at once, so if it "guessed" wrong, it was likely to have the other instruction sequence ready and preprocessed for the E-function. The E-function, not surprisingly, was the execution engine of the processor, and for the first time it boasted a very large 64 KB cache (with 64-byte lines) to speed up memory accesses. Memory itself was eight-way interleaved: while the bank that had just been read was recovering, the other seven banks remained available, so reads were faster whenever the next access fell in a different bank (a DRAM read disturbs the stored data, so a bank must restore it before it can be read again).
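
The benefit of eight-way interleaving shows up clearly in a small simulation. This Python sketch assumes low-order interleaving on 8-byte doublewords and a four-cycle bank recovery time; the text gives neither figure for the 3033, so both are placeholders. One read issues per cycle unless its bank is still busy.

```python
BANKS = 8
INTERLEAVE_BYTES = 8   # assumption: consecutive doublewords go to consecutive banks
BUSY_CYCLES = 4        # assumption: cycles a bank needs to recover after a read


def bank_of(address: int) -> int:
    """Low-order interleaving: which bank holds this byte address."""
    return (address // INTERLEAVE_BYTES) % BANKS


def cycles_for(addresses: list[int]) -> int:
    """Issue one read per cycle, stalling whenever the target bank is still busy."""
    ready_at = [0] * BANKS  # first cycle at which each bank can accept a read
    cycle = 0
    for addr in addresses:
        bank = bank_of(addr)
        cycle = max(cycle, ready_at[bank])  # wait for the bank if needed
        ready_at[bank] = cycle + BUSY_CYCLES
        cycle += 1                          # next read can issue next cycle
    return cycle


sequential = [i * 8 for i in range(64)]  # walks through all eight banks in turn
same_bank = [i * 64 for i in range(64)]  # a 64-byte stride hits bank 0 every time
print(cycles_for(sequential), cycles_for(same_bank))  # 64 253
```

Sequential reads never wait, because each bank has seven other reads' worth of time to recover; a stride that keeps hitting one bank takes roughly four times as long.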

The processor storage control function handled all requests to store or fetch data in processor storage, and it translated virtual addresses into absolute storage addresses using dynamic address translation, the technology we mentioned earlier. Like modern processors, it used a translation lookaside buffer (TLB) to speed this up. A TLB is essentially a cache of addresses already translated from virtual to absolute, so if the processor finds an address there, no conversion is necessary. On the 3033, if an address was found, resolving it took one clock cycle; if not, it could take anywhere from 10 to 40 cycles, which is quite a difference.
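
A TLB can be sketched in a few lines. This Python version is a simplification under stated assumptions: a flat dictionary stands in for the System/370's segment and page tables, the page size is assumed to be 4 KB, the TLB is tiny and fully associative with FIFO replacement, and a miss is charged a placeholder 25 cycles from within the 10 to 40 cycle range given above. The 3033's real TLB organization is not described in the text.

```python
PAGE_SIZE = 4096       # assumption: 4 KB pages
TLB_HIT_CYCLES = 1     # from the text
TLB_MISS_CYCLES = 25   # placeholder inside the text's 10-40 cycle range


class TLB:
    """Tiny fully associative TLB with FIFO replacement (a simplification)."""

    def __init__(self, page_table: dict[int, int], entries: int = 4) -> None:
        self.page_table = page_table     # virtual page number -> real frame number
        self.entries = entries
        self.cache: dict[int, int] = {}  # insertion order doubles as FIFO order
        self.cycles = 0

    def translate(self, vaddr: int) -> int:
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn in self.cache:
            self.cycles += TLB_HIT_CYCLES
        else:
            self.cycles += TLB_MISS_CYCLES  # stands in for a full table walk
            if len(self.cache) >= self.entries:
                self.cache.pop(next(iter(self.cache)))  # evict the oldest entry
            self.cache[vpn] = self.page_table[vpn]
        return self.cache[vpn] * PAGE_SIZE + offset


tlb = TLB(page_table={0: 7, 1: 3, 2: 9})
for addr in (0x0010, 0x0020, 0x1004, 0x0030, 0x2000):
    print(hex(addr), "->", hex(tlb.translate(addr)))
print("total cycles:", tlb.cycles)  # 77: three misses at 25 plus two hits at 1
```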

The maintenance and retry function provided the data path between the operator console and the 3033 processor for manual and service operations.

So, while ostensibly the 3033 was just a very fast successor to the powerful System/370 168-3, when we look closer we see it has almost all the technologies of a modern processor, and even some that a portion of today's CPUs lack. However, it was still a scalar design, and despite its impressive characteristics, it was replaced relatively quickly by the 3081. While I know you are just brimming with curiosity about the 3081 (who could blame you?), and I can assure you we will get very familiar with it, let us first take a short interlude to look at what DEC, the second-largest computer company in the world at that time, had to offer.

The VAX-11/780

While most of our readers know that the x86 instruction set originated in 1978 with the 8086, perhaps a more important development happened a year earlier, when Digital released the famous VAX-11/780. But how could anything possibly be more important than the x86 instruction set?

When most people think of DEC, they remember a large mini-computer maker that failed and was bought by Compaq when the micro-computer usurped DEC’s key market. But what happened in 1977 that was so important? DEC’s VAX and its very comely wife, VMS, the latter of which still has much relevance today.

The VAX-11/780 was ostensibly released to address the shortcomings of the highly successful and very well liked PDP-11. DEC downplayed many of the changes and instead focused on the ability to finally break the 16-bit (64 K) addressable memory limitation of the PDP-11 with the VAX-11/780’s 32-bit address. However, there was much more to it than that.

The VAX is considered by most to be the finest of all CISC instruction sets, rivaled only by those influenced by it. It was a highly orthogonal instruction set, with 243 instructions on several basic data types and 16 different addressing modes. This elegant architecture was a strong influence on the Motorola 68000 family, which became the platform for Apple's Lisa and Macintosh until it was replaced by the PowerPC in the 1990s. Incidentally, the VAX-11/780's performance became a standard yardstick, as “VAX MIPS,” or later just “one MIPS,” became a measure of computer performance.

However, perhaps the most important contribution of the VAX was VMS. Windows NT was developed under none other than Dave Cutler, the lead designer of VMS, who was one of many VMS developers who went over to Microsoft to work on Windows NT. Despite the controversy surrounding Windows, Windows NT is still the dominant operating system in use today, and will remain so for the foreseeable future, particularly since Windows 7 is being far better received than Vista. This is not to suggest that VMS mattered only for its impact on Windows NT; it was a much-respected design in its own right, and especially user friendly.

Many showered accolades on this easy-to-use operating system, which was very much ahead of its time. In fact, although the VAX is dead, OpenVMS is clearly not, and is currently running on Intel's Itanium processors and HP's archaic Alpha processors, with a new release due out later this year. Thus, since its release 32 years ago, the operating system is still going strong.

As delightful as the VAX and VMS were, and the latter still is, they never challenged the Big Blue beast in any real economic way, and instead probably helped IBM in its fight with the government, which was not too fond of what it considered IBM's monopoly. In January 1982, the Reagan administration dropped the antitrust suit against IBM; the year before, Big Blue had released the 3081, which, incidentally, was the first mainframe I worked with. And what an experience it was.

The 3081

I still remember it like it was yesterday, that spring day in 1988 when I got a call from IBM telling me to come in to interview for a computer operator position. I was ecstatic that what was considered the greatest company in the world, the only one I wished to work for, was going to be my employer. It was a different time then, when IBM represented everything good about American business and stood for the pinnacle of success.

During my first day of work, I was introduced to a machine released in 1981, the 3081. I had some experience with an old Univac 1100/63 in college, but until then I was more familiar with micro-computers, best represented by the still fairly new 80386 and 68030. My first impression of the mainframes was unfavorable. Even by the micro-computer standards of the day, the interface was primitive and far less intuitive than that of PCs. I was not impressed.

The scale of things was shocking. The air-conditioned, raised-floor room was hundreds of feet wide and long, with almost a dozen 3081s and an enormous number of DASDs. We had six printers, which were enormous and almost the same size as the mainframe. There were three sets of consoles, one for the print area and one for the tape area, while the computers were monitored closely in the main console area.

We had three interfaces to MVS, as the operating system was called (it stood for multiple virtual storages, but we derisively referred to it as “man versus system”): the system consoles, which were essentially available only in operations; time sharing option (TSO); and operations planning and control (OPC). TSO was what many people used to do their work, while OPC was mainly for scheduling the batch jobs that were going to run on the system. Many programmers preferred to work on VM, another operating system IBM offered for the 3081, before transferring their work to the MVS machine.

Our site was responsible for the customer master record (CMR), which was used by many applications and sometimes directly by people. It ran on an IBM internal application called AAS, which was never sold. There were also some applications on CICS, the product IBM did sell, which is still widely used today by many companies. Many of these applications were critical, and any downtime during normal working hours was extremely expensive. In fact, we were told that it cost IBM a million dollars every minute the system was down, which I never believed and still do not. Anyway, once these online applications came down (usually at around 8:00 PM), batch processing would begin. Jobs were scheduled in OPC and submitted in a kind of "wrapper" called job control language (JCL). A JCL job could run several executables, and it specified their order and the resources they needed. The executables themselves did not say which DASD to access; the JCL defined where the input and output were. As mentioned, jobs were tracked in OPC and released based on time and/or dependencies. They were then sent to the job entry subsystem (JES) and executed from there.
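
OPC's own definitions and JCL are not reproduced here; instead, this Python sketch only illustrates the release rule described above: a job is released once all of its predecessors have finished and its earliest start time has arrived. The job names, dependencies, and hours are hypothetical, and every released job is assumed to finish within the same hour. It relies on the standard library's `graphlib.TopologicalSorter` (Python 3.9 or later).

```python
from graphlib import TopologicalSorter

# Hypothetical nightly batch: job -> jobs that must finish first.
DEPENDENCIES = {
    "EXTRACT": set(),
    "SORT": {"EXTRACT"},
    "UPDATE": {"SORT"},
    "REPORT": {"UPDATE"},
    "BACKUP": {"EXTRACT"},
}
# Hypothetical earliest start hours; jobs not listed have no time condition.
EARLIEST_START = {"EXTRACT": 20, "BACKUP": 23}


def release_order(start_hour: int = 20, last_hour: int = 30) -> list[tuple[int, str]]:
    """Release each job once its predecessors are done and its start hour has arrived.

    Hours past 24 simply mean after midnight. Simplification: every released
    job finishes within the same hour, so its successors become ready next hour.
    """
    sorter = TopologicalSorter(DEPENDENCIES)
    sorter.prepare()
    released: list[tuple[int, str]] = []
    waiting: set[str] = set()
    for hour in range(start_hour, last_hour):
        waiting.update(sorter.get_ready())
        due = sorted(j for j in waiting if EARLIEST_START.get(j, 0) <= hour)
        for job in due:
            released.append((hour, job))  # in MVS, this is where the job would go to JES
        waiting.difference_update(due)
        sorter.done(*due)
        if not sorter.is_active():
            break
    return released


for hour, job in release_order():
    print(f"{hour:02d}:00 release {job}")
```

Note how BACKUP becomes eligible by dependency at 21:00 but is held until its 23:00 time condition, while the SORT, UPDATE, REPORT chain proceeds on dependencies alone.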

As mentioned, the interfaces were poor compared to PCs of that time, but the reliability of the operating system was far greater than that of the Windows NT derivatives we use today. It's something I learned to appreciate over time, and I still marvel at it. The 3081 was a "dyadic" design, meaning it had two processors that even shared the same cache. They could not be split into two computers, as they were inseparable. However, the operating system was so sophisticated that even if one of the processors died, the system would stay up and continue to execute. The application that was using the failed processor would crash, but fairly gracefully, as the operating system would recognize the failure and route it to the proper place for crashed applications (we'd track them in OPC, and either fix them ourselves or get their support team to take care of the problem). This is not to imply the 3081 CPUs were always crashing; failures were rare.

DASDs did fail fairly often, although most of the time failures happened when we had to power them down and then back up periodically. This was expected behavior and we always had CEs there for scheduled downtimes to repair those that were especially problematic. Normally, only one or two would fail out of the few hundred on the floor during each power down.

Each 3081 processor ran at a blistering clock speed of almost 38.5 MHz. By IBM's somewhat optimistic measurements, the base 3081 (Model D) was up to 2.1 times as fast as the 3033UP, while the higher-end Model K was almost 3.0 times as fast. Some of this, of course, came from the extra processor, although sharing the cache did create some overhead. The uniprocessor 3083, for example, actually ran 15% faster than the 3081 when the workload could not engage the 3081’s second processor. The 3084 was another extension of this line, and actually had a pair of dyadic processors. Unlike the 3081, it could be divided into two separate machines. Another improvement of the 3081 was 31-bit addressing, rather than the 3033’s 24-bit addressing, which let it address more than 16 MB. All in all, considering it was released only four years after the 3033, it was a substantially improved machine. The hardware was good and the stability of the software was just incredible.
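
The jump from 24-bit to 31-bit addressing is easiest to appreciate as plain arithmetic. This short Python sketch assumes byte-addressed memory and simply computes 2 to the power of the address width.

```python
def addressable_bytes(address_bits: int) -> int:
    """Bytes reachable with a given number of address bits (byte-addressed memory)."""
    return 2 ** address_bits


for label, bits in (("24-bit (System/360, 3033)", 24), ("31-bit (3081)", 31)):
    size = addressable_bytes(bits)
    print(f"{label}: {size:,} bytes = {size // 2**20:,} MB")
# 24-bit (System/360, 3033): 16,777,216 bytes = 16 MB
# 31-bit (3081): 2,147,483,648 bytes = 2,048 MB
```

Seven extra address bits multiply the reachable memory by 128, from 16 MB to 2 GB. The 32nd bit of the address word was used to flag the addressing mode, which let old 24-bit programs keep running alongside new 31-bit ones.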

I hope the personal tone of this entry does not bore or irritate the reader, but I wanted to give a view of what working with these machines was really like. In many ways they were amazing machines. Still, as amazing as the 3081, 3083, and 3084 were, we were envious of sites that had the 3090, and we had heard the stories of how incredible the performance was.
