Incredible High-Resolution Die Imagery Of Nvidia GPUs

Nvidia's "Fermi" GF100 GPU is just over the horizon. With it comes new technology, as well as a new host of cards all vying for your dollar. But before that happens, we take an amazing photographic look at Nvidia's GPUs, present and past.

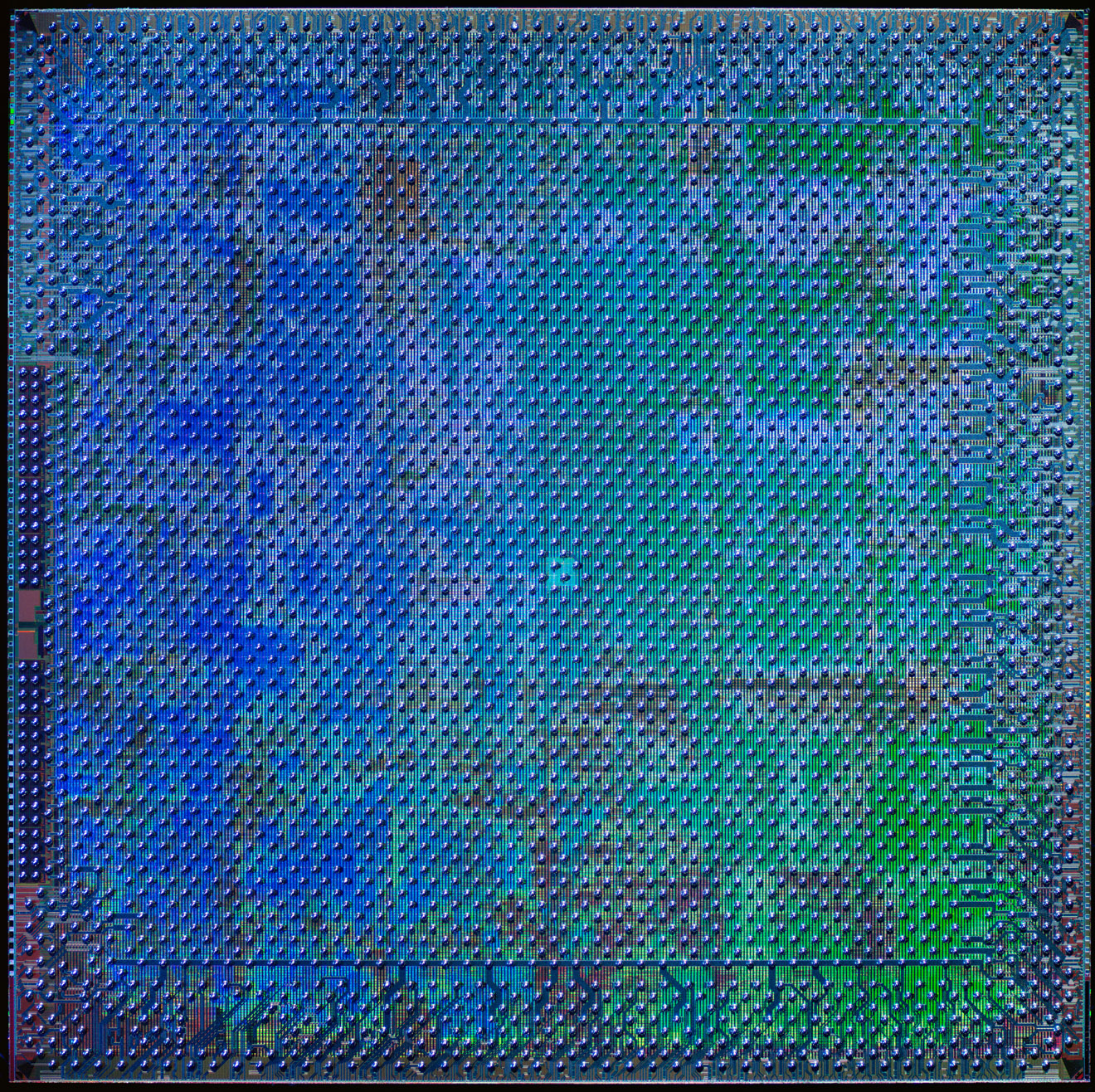

G70 As GeForce 7800 GTX, June 2005

GeForce 7800 GTX.

* 256MB of memory

* 256-bit Memory Interface Width

* 430 MHz Core Clock

* 1,296 MHz Processor Clock

* 1.2 GHz Memory Clock

* Texture Fill Rate 10.32 Gigatexel/s

* Memory Bandwidth 38.4 GB/s

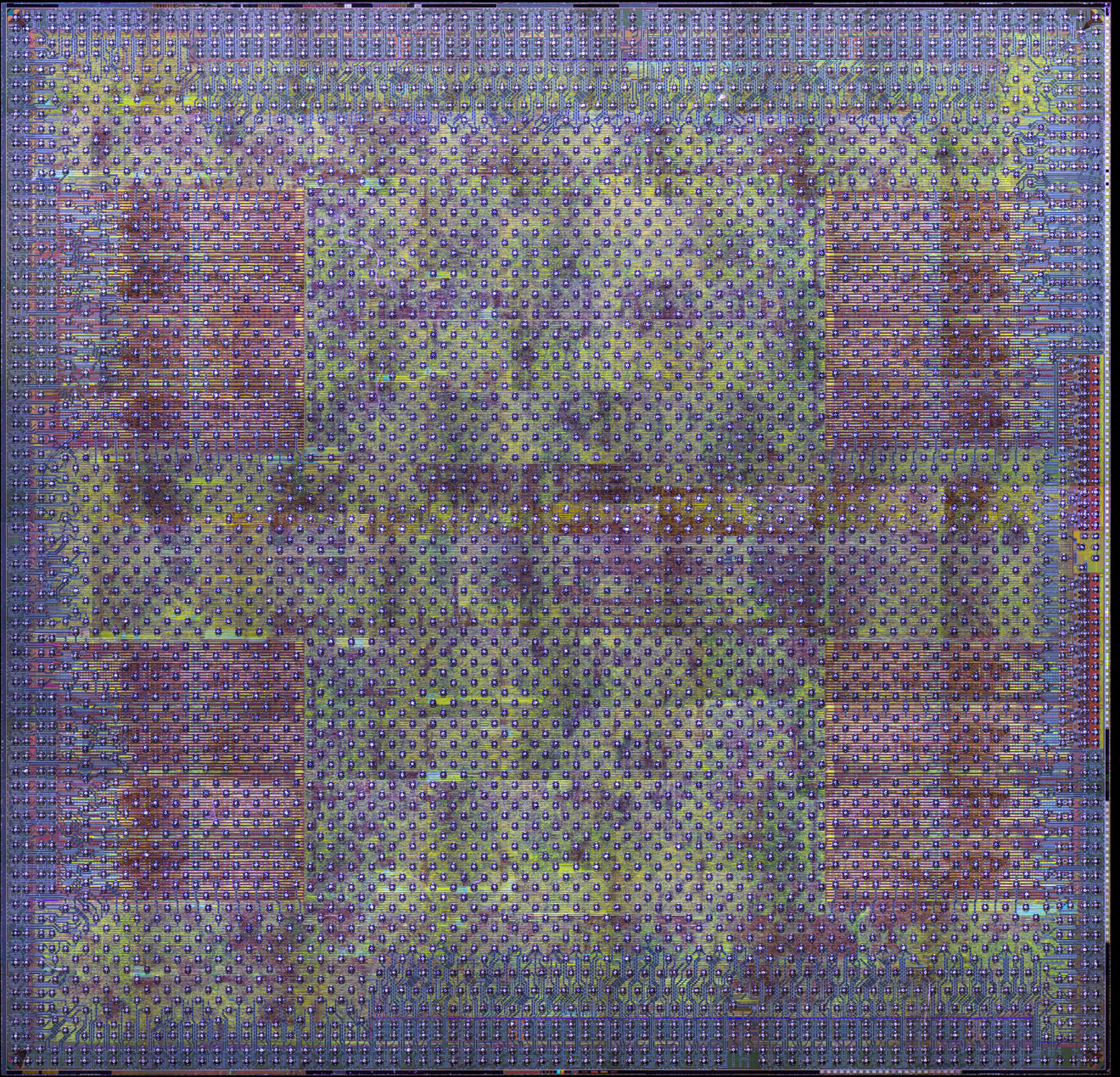

G80 As GeForce 8800 GTX, 2006

GeForce 8800 GTX.

For many gamers and industry analysts alike, the 8800 series is still considered one of the best GPU lines Nvidia ever released, and it is still widely used today. Although the 8800 GTX is several years old now, it still performs very well with today's games. Do you still have an 8800 GTX in use?

* 768MB of memory

* 384-bit Memory Interface Width

* 575 MHz Core Clock

* 1,350 MHz Shader Clock

* 900 MHz Memory Clock

* Texture Fill Rate 36.8 Gigatexel/s

* Memory Bandwidth 86.4 GB/s

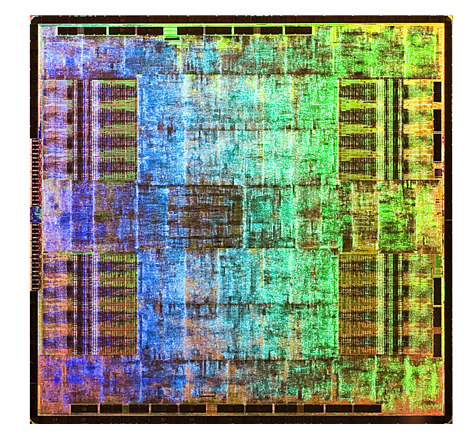

G92 As GeForce 8800 GT, October 2007

GeForce 8800 GT.

The G92 is essentially a 65nm version of the G80 GPU. Despite its lower power usage and smaller die size, the G92 was slightly gimped by its 256-bit memory interface, which is narrower than the G80's 384-bit interface. Consequently, performance wasn't as good. The G92 GPU was used on more entry-level and mainstream cards.


* 112 Stream Processors

* 512-1,024MB of GDDR3 memory

* 256-bit Memory Interface Width

* 600 MHz Graphics Clock

* 1,500 MHz Processor Clock

* 900 MHz Memory Clock

* Texture Fill Rate 33.6 Gigatexel/s

* Memory Bandwidth 57.6 GB/s
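
The bandwidth gap between the G80 and the G92 follows directly from the bus widths. As a rough sketch (not Nvidia's official method), peak memory bandwidth can be estimated as bus width in bytes times the effective transfer rate. The function name and the `transfers_per_clock` parameter below are our own for illustration. We also assume the 900 MHz figures are base clocks for double-data-rate memory, while the 7800 GTX's 1.2 GHz figure is already the effective rate.

```python
def memory_bandwidth_gbs(bus_width_bits, memory_clock_mhz, transfers_per_clock=2):
    """Estimate peak memory bandwidth in GB/s (hypothetical helper).

    transfers_per_clock=2 assumes double-data-rate memory; pass 1 when the
    listed clock is already the effective (doubled) rate.
    """
    bytes_per_transfer = bus_width_bits / 8
    transfers_per_second = memory_clock_mhz * 1e6 * transfers_per_clock
    return bytes_per_transfer * transfers_per_second / 1e9


# Figures taken from the spec lists in this article.
g80_8800_gtx = memory_bandwidth_gbs(384, 900)
g92_8800_gt = memory_bandwidth_gbs(256, 900)
# Assumption: the 7800 GTX's 1.2 GHz is an effective rate, so no doubling.
g70_7800_gtx = memory_bandwidth_gbs(256, 1200, transfers_per_clock=1)

print(f"8800 GTX: {g80_8800_gtx:.1f} GB/s")
print(f"8800 GT:  {g92_8800_gt:.1f} GB/s")
print(f"7800 GTX: {g70_7800_gtx:.1f} GB/s")
```

At the same 900 MHz memory clock, the narrower 256-bit bus leaves the 8800 GT with two-thirds of the 8800 GTX's peak bandwidth.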

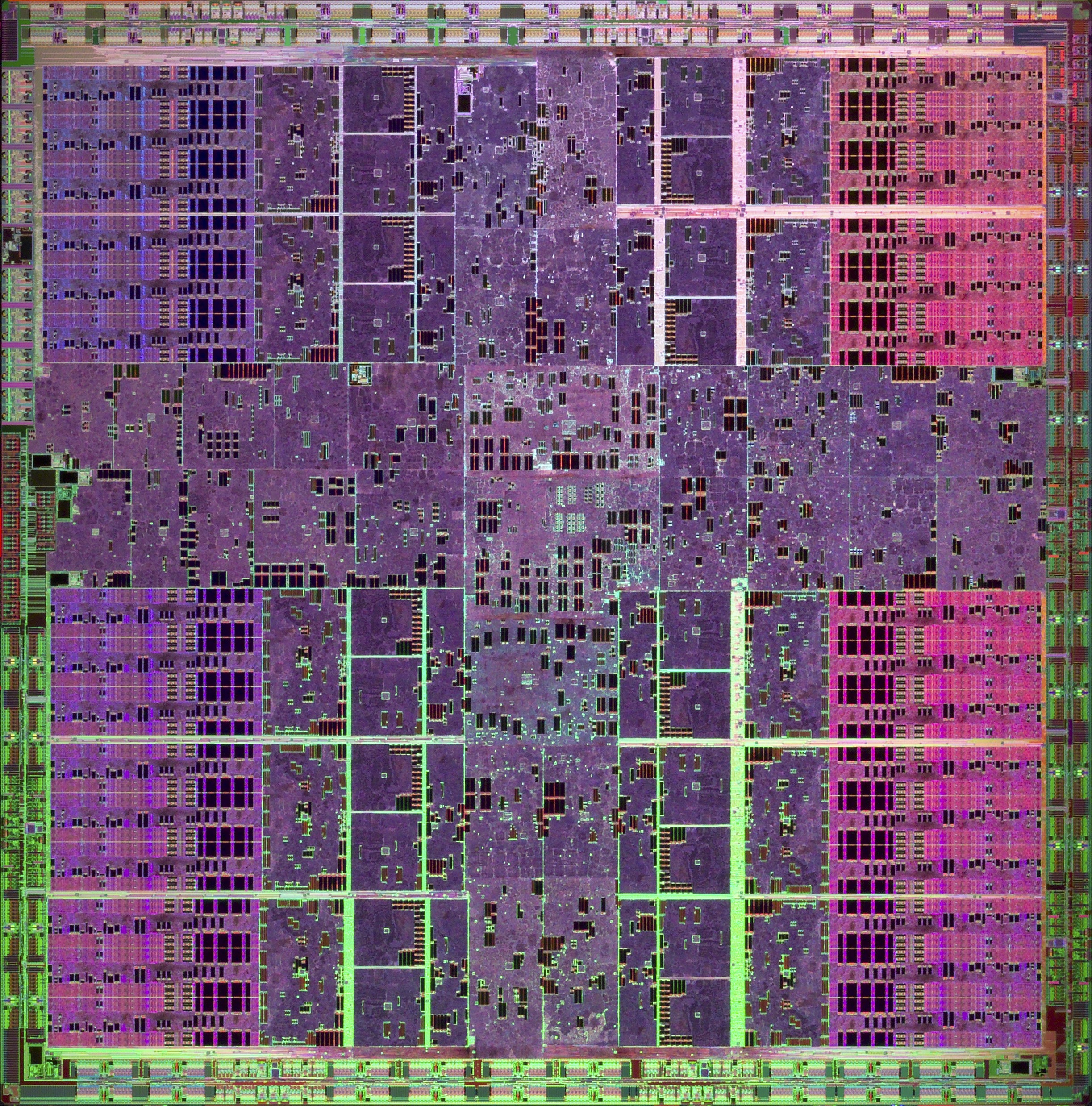

GT200 As GeForce GTX 280, June 2008

GeForce GTX 200-series.

Currently Nvidia's top offering. Cards based on the GT200 GPU rule the roost among Nvidia cards, that is, until Fermi (GF100) arrives.

Cards based on the GT200 and its variants make up the GeForce 2XX series, ranging from the GeForce 210 all the way up to the GeForce GTX 295, which sports two GPUs on one board.

* 240 CUDA Cores

* 1GB of memory

* 512-bit Memory Interface Width

* 602 MHz Graphics Clock

* 1,296 MHz Processor Clock

* 1,107 MHz Memory Clock

* Texture Fill Rate 48.2 Gigatexel/s

* Memory Bandwidth 141.7 GB/s
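
The texture fill rates quoted for these cards can be approximated as the number of texture units times the core clock. The sketch below is a back-of-the-envelope check, not an official formula. The texture unit counts are not part of the specs Nvidia supplied with these photos; they are our assumption (24 for the 7800 GTX, 64 texture filtering units for the 8800 GTX, 56 for the 8800 GT, 80 for the GTX 280), and the helper name is hypothetical.

```python
def texture_fill_rate_gtexels(texture_units, core_clock_mhz):
    """Estimate peak texture fill rate in Gigatexels/s (hypothetical helper)."""
    return texture_units * core_clock_mhz / 1000


# Core clocks come from the spec lists in this article; the texture unit
# counts are assumptions that do not appear in those lists.
cards = {
    "GeForce 7800 GTX": (24, 430),
    "GeForce 8800 GTX": (64, 575),
    "GeForce 8800 GT": (56, 600),
    "GeForce GTX 280": (80, 602),
}

fill_rates = {
    name: texture_fill_rate_gtexels(units, clock)
    for name, (units, clock) in cards.items()
}

for name, rate in fill_rates.items():
    print(f"{name}: {rate:.2f} Gigatexel/s")
```

Under these assumptions, the estimates line up with the listed fill rates once rounded to one decimal place.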

We're missing a few GPU variants because these are the only photos Nvidia gave us, but as more trickle in, we'll update the article!


razor512 I had a geforce fx 5900xt a long time ago, then the fan dies and since it had a lifetime warranty, I sent it in for replacement and BFG sent me back a geforce 7300GT instead (card now does a great job displaying the windows desktop on my data server)

pcxt21 Yep I'm still kicking 2 8800GTX's in SLI. Have the pants OC'd out of them now though...

tuannguyen, quoting rambo117: "lol, I like how the GeForce 5 series was skipped"

No, it's because Nvidia couldn't dig up photos of it for us.

nforce4max Hey you left out the dustbuster and a few others but ok posting.

How about the NV1.

http://commons.wikimedia.org/wiki/File:NV1_GPU.jpg

Parsian Aww i saw my old beloved GeForce MX440 64mb and GeForce TI 4600 series :P

never was a fan of 5000 series. ATi had much better card. I bought the ATi 9800 XT over the GeForce 5000

rambo117, quoting tuannguyen: "No, it's because Nvidia couldn't dig up photos of it for us."

or they were too embarrassed...