Vertex Shaders and Pixel Shaders

Making The Most Of The Vertex Shader Architecture Within A Game

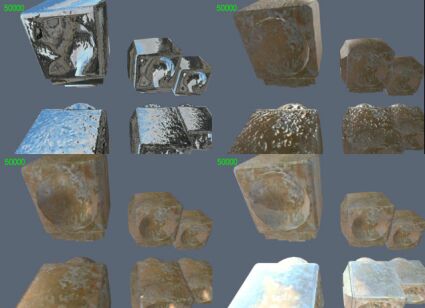

A variety of material models.

To demonstrate the limitations of vertex shaders, I'll start from the premise that we want to write a graphics engine that takes full advantage of the vertex shader architecture. Rather than using vertex shaders for just a few special effects, I'll show you some methods that allow us to use vertex shaders exclusively.

There are a number of reasons why we shouldn't try to do this in PC game engines; I'll go through them at the end of the section, when you'll be better placed to understand them.

To begin with, we need to classify all of the geometry processing that we may wish to perform using the vertex shader architecture. The main classes of geometry processing are: the type of transformation we wish to apply to the vertex data; the lighting environment used for the vertices; and the material models we will use to render the surfaces.
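One way to make this three-way classification concrete is to treat each vertex shader as identified by a key with one field per class. The sketch below is illustrative only: the names `Transform`, `Light`, `ShaderKey` and the material string are hypothetical, not part of any real engine or API. It sorts the lights so that the same mix of light types always produces the same key.

```python
from dataclasses import dataclass
from enum import Enum


class Transform(Enum):
    """The three kinds of vertex position processing discussed in this section."""
    SKINNED = "skinned"
    TRANSFORMED = "transformed"
    UNTRANSFORMED = "untransformed"


class Light(Enum):
    """The three vertex light types."""
    DIRECTIONAL = "directional"
    POSITIONAL = "positional"
    SPOT = "spot"


@dataclass(frozen=True)
class ShaderKey:
    """One vertex shader permutation: transformation x lighting x material."""
    transform: Transform
    lights: tuple   # canonical (sorted) tuple of Light values, at most four
    material: str   # hypothetical material name, e.g. "dot3_bump"

    @staticmethod
    def make(transform, lights, material):
        if len(lights) > 4:
            raise ValueError("at most four vertex lights are supported")
        # Sort the lights so that [SPOT, DIRECTIONAL] and [DIRECTIONAL, SPOT]
        # produce the same key: only the mix of light types matters.
        canonical = tuple(sorted(lights, key=lambda light: light.value))
        return ShaderKey(transform, canonical, material)


# Usage: both orderings select the same shader.
a = ShaderKey.make(Transform.SKINNED, [Light.SPOT, Light.DIRECTIONAL], "dot3_bump")
b = ShaderKey.make(Transform.SKINNED, [Light.DIRECTIONAL, Light.SPOT], "dot3_bump")
assert a == b and hash(a) == hash(b)
```

Because the key is frozen and hashable, it can index a dictionary of compiled shaders directly.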

Materials are an interesting aspect of modern game engines. While it's possible to keep the material model relatively simple, the most impressive effects need far more complex material models. For example, Dot3 bump-mapping requires that part of the lighting calculation is moved from the vertex processor into the pixel pipeline. Although bump-mapping is mainly classed as a material property, it also means that the lighting block of the vertex processor is no longer used.
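The split that Dot3 bump-mapping forces can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the article's implementation: the vertex stage rotates a light direction (pointing from the surface toward the light) into tangent space using an assumed orthonormal tangent, bitangent and normal, then packs it into a [0, 1] color. The pixel stage expands both that color and a normal-map texel back to [-1, 1] and takes N·L, which mirrors how fixed-function Dot3 texture stages treat their inputs. The function names are hypothetical.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)


def vertex_stage(light_dir, tangent, bitangent, normal):
    """Per-vertex work: rotate the light direction into tangent space and
    pack it into a [0, 1] color so the rasterizer can interpolate it."""
    l_ts = normalize((dot(light_dir, tangent),
                      dot(light_dir, bitangent),
                      dot(light_dir, normal)))
    return tuple(0.5 * c + 0.5 for c in l_ts)


def pixel_stage(normal_map_texel, packed_light):
    """Per-pixel work: expand both inputs from [0, 1] to [-1, 1], take N.L
    and clamp at zero so surfaces facing away from the light stay dark."""
    n = tuple(2.0 * c - 1.0 for c in normal_map_texel)
    l = tuple(2.0 * c - 1.0 for c in packed_light)
    return max(0.0, dot(n, l))


# A flat texel (0.5, 0.5, 1.0) decodes to the normal (0, 0, 1).
packed = vertex_stage((0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
print(pixel_stage((0.5, 0.5, 1.0), packed))  # 1.0
```

Note that no diffuse lighting term is computed per vertex here; the vertex stage only prepares a vector, which is why the lighting block goes unused.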

In fact, another common form of lighting requires no processing at all from the lighting block. "Static lighting," using light maps, places all of the lighting information for the world into textures. These textures are then used to modulate the diffuse color of surfaces. The advantage of this approach is that it can capture very complex lighting effects, ones that may take days of precomputation before a level can be viewed in the engine. Unfortunately, modifying the light maps at runtime is too expensive, so any dynamic lighting must be added using per-vertex processing, much as before.
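The per-texel arithmetic of light mapping is simple enough to show directly. In this minimal sketch, which assumes one plausible arrangement rather than anything the article specifies, the precomputed light-map value and any dynamic per-vertex light are added together, saturated at 1.0, and then used to modulate the surface's diffuse color. The function name `shade_texel` is hypothetical.

```python
def shade_texel(diffuse, lightmap, dynamic_vertex_light):
    """All arguments are RGB tuples in [0, 1].

    Assumption: static (light map) and dynamic (per-vertex) light are summed
    before modulating the diffuse color; other engines combine them differently.
    """
    result = []
    for d, static, dynamic in zip(diffuse, lightmap, dynamic_vertex_light):
        # Saturate the combined light, as 8-bit color hardware would.
        light = min(1.0, static + dynamic)
        result.append(d * light)
    return tuple(result)


# Light map alone: the diffuse color is halved.
print(shade_texel((1.0, 0.5, 0.0), (0.5, 0.5, 0.5), (0.0, 0.0, 0.0)))
# A dynamic light pushes the total to full brightness.
print(shade_texel((1.0, 0.5, 0.0), (0.5, 0.5, 0.5), (0.75, 0.75, 0.75)))
```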

The vertex lighting that we carry out in a vertex shader will typically consist of up to four lights, each of type "positional," "directional" or "spotlight." Spotlights are less useful for vertex processing because a spotlight evaluated only at the vertices doesn't give the soft edges that people want to see with complex lighting effects. However, there are still cases where you may want a relatively diffuse light that shines in a single direction.
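To show what a vertex shader's lighting block has to evaluate, here is a minimal per-vertex diffuse lighting sketch for up to four lights of the three types. It is written in Python for readability rather than as shader code, and it makes simplifying assumptions: no distance attenuation, and a linear spotlight falloff between hypothetical inner and outer cone cosines (Direct3D's fixed-function spotlights use a falloff exponent instead). `VertexLight` and `light_vertex` are illustrative names.

```python
import math
from dataclasses import dataclass


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(c / length for c in v)


def negate(v):
    return tuple(-c for c in v)


@dataclass
class VertexLight:
    kind: str                              # "directional", "positional" or "spot"
    color: tuple                           # RGB intensity
    position: tuple = (0.0, 0.0, 0.0)      # used by positional and spot lights
    direction: tuple = (0.0, 0.0, -1.0)    # direction the light shines (directional, spot)
    cos_inner: float = 0.95                # spot: full intensity inside this cone (hypothetical default)
    cos_outer: float = 0.80                # spot: no light outside this cone (hypothetical default)


def light_vertex(position, normal, lights):
    """Sum the diffuse contribution of up to four lights at one vertex.
    Distance attenuation is left out to keep the sketch short."""
    if len(lights) > 4:
        raise ValueError("this sketch supports at most four lights")
    total = [0.0, 0.0, 0.0]
    for light in lights:
        spot = 1.0
        if light.kind == "directional":
            to_light = negate(normalize(light.direction))
        else:
            to_light = normalize(tuple(p - q for p, q in zip(light.position, position)))
            if light.kind == "spot":
                # Cosine of the angle between the spot axis and the light->vertex ray,
                # mapped linearly from the outer cone (0) to the inner cone (1).
                cos_angle = dot(normalize(light.direction), negate(to_light))
                t = (cos_angle - light.cos_outer) / (light.cos_inner - light.cos_outer)
                spot = min(1.0, max(0.0, t))
        n_dot_l = max(0.0, dot(normal, to_light))
        for i in range(3):
            total[i] += light.color[i] * n_dot_l * spot
    # Saturate, as 8-bit vertex colors would.
    return tuple(min(1.0, c) for c in total)
```

Because the spotlight term is computed only at vertices and then interpolated, a cone edge that falls between two vertices is smeared across the whole triangle, which is the weakness the text describes.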


All the combinations of lighting, materials and vertex processing can result in a very large number of vertex shaders.

A lighting engine that allows up to four lights (each of which can be directional, positional or a spotlight) leads to 34 different lighting environments: counting each unordered mix of one to four lights drawn from the three types gives 3 + 6 + 10 + 15 = 34. There are also a few different types of vertex position processing (skinned, transformed and untransformed vertices), which give us three different kinds of transformation. We'll also have a number of different materials, probably between ten and one hundred different models in a modern game engine. For argument's sake, we'll say we have twenty materials.

This means that we need to program a grand total of 2040 different vertex shaders (34 × 3 × 20) if we want to be able to process every combination of vertex type, light state and material model.
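The arithmetic can be checked with a short enumeration. This sketch assumes, as the 34 figure implies, that a lighting environment is an unordered mix of one to four lights (including the zero-light case would give 35), and it uses the article's illustrative figure of twenty materials.

```python
from itertools import combinations_with_replacement

LIGHT_TYPES = ("directional", "positional", "spot")
TRANSFORMS = ("skinned", "transformed", "untransformed")
NUM_MATERIALS = 20  # the "for argument's sake" figure from the text


def lighting_environments(max_lights=4):
    """Every unordered mix of 1..max_lights lights drawn from LIGHT_TYPES."""
    envs = []
    for count in range(1, max_lights + 1):
        envs.extend(combinations_with_replacement(LIGHT_TYPES, count))
    return envs


envs = lighting_environments()
print(len(envs))                                    # 34
print(len(envs) * len(TRANSFORMS) * NUM_MATERIALS)  # 2040
```

Adding a single extra light type or a fifth light grows the count quickly, which is why hand-coding every permutation does not scale.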

Obviously, very few companies will have the resources to hand-code this many vertex shaders, so we need a way to reduce the amount of vertex shader code we have to write. There are two ways of doing this: either limit the number of combinations to a manageable number using a variety of techniques, or build a comprehensive, "fragment"-based vertex processing engine that generates each combination automatically. I'll go through these two approaches next.
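The fragment-based idea can be outlined as follows. This is a hypothetical sketch, not a real engine's design: each fragment here is a placeholder comment string standing in for actual vertex shader code, the fragment names are invented, and "transformed" is assumed to carry its Direct3D meaning of vertices already in screen space. Shaders are assembled on first request and cached, so only the combinations a level actually uses are ever built.

```python
# Hypothetical fragment library. Each string is a placeholder comment standing
# in for a real snippet of vertex shader code.
TRANSFORM_FRAGMENTS = {
    "skinned": "; transform: blend bone matrices, then project",
    "transformed": "; transform: already in screen space, pass position through",
    "untransformed": "; transform: project with the world-view-projection matrix",
}
LIGHT_FRAGMENTS = {
    "directional": "; light {i}: directional N.L",
    "positional": "; light {i}: positional N.L",
    "spot": "; light {i}: spot N.L with cone falloff",
}
MATERIAL_FRAGMENTS = {
    "diffuse": "; material: modulate lighting by the diffuse color",
    "lightmapped": "; material: emit light map texture coordinates",
}

_shader_cache = {}


def build_vertex_shader(transform, lights, material):
    """Assemble (once) and return the shader text for one combination."""
    if len(lights) > 4:
        raise ValueError("at most four vertex lights are supported")
    # Sorting makes the key independent of the order the lights were listed in.
    key = (transform, tuple(sorted(lights)), material)
    if key not in _shader_cache:
        parts = [TRANSFORM_FRAGMENTS[transform]]
        for i, kind in enumerate(key[1]):
            parts.append(LIGHT_FRAGMENTS[kind].format(i=i))
        parts.append(MATERIAL_FRAGMENTS[material])
        _shader_cache[key] = "\n".join(parts)
    return _shader_cache[key]


print(build_vertex_shader("skinned", ["spot", "directional"], "diffuse"))
```

With twenty materials, this sketch would need 3 + 3 + 20 = 26 hand-written fragments instead of 2040 complete shaders; the cost moves into the assembly step, which a real engine would also have to make handle register usage between fragments.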
