Linux dev delivers 6% file system performance increase – says ‘it was literally a five minute job’

Jens Axboe, developer of IO_uring, says he’s been thinking about implementing this update for years.


A Linux developer has made a couple of small changes to how the kernel's block layer queries the time, and claims they deliver a 6% improvement in I/O performance. IO_uring creator and self-confessed Linux kernel IO dabbler Jens Axboe decided to implement the changes after putting them off for years, but admits they were "literally a 5 min job" (h/t Phoronix).

Something I've had in the back of my mind for years, and finally did it today. Which is kind of sad, since it was literally a 5 min job, yielding a more than 6% improvement. Would likely be even larger on a full scale distro style kernel config. https://t.co/f4nPBCc6iF (January 15, 2024)

Axboe's patches appear to deliver their performance gains by reducing how often the kernel queries the current time while issuing I/O. In his RFC patch notes, Axboe writes that lots of code is "quite trigger happy with querying time." Some code already exists to reduce this, but the new patchset, which Axboe describes as trivial, "simply caches the current time in struct blk_plug, on the premise that any issue side time querying can get adequate granularity through that." The developer reasons that "Nobody really needs nsec granularity on the timestamp." Here we have another case of ingenious thinking delivering measurable benefits in a long-established piece of technology.
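To make the caching idea concrete, here is a minimal user-space sketch in Python. It is a hypothetical illustration, not Axboe's kernel code: `CountingClock`, `Plug`, and `submit_batch` are made-up names, and `stamps_per_request` is an assumed stand-in for however many stats or accounting hooks query the time on the issue side. The sketch only counts clock reads with and without a per-batch cached timestamp; it does not measure real-world speed.

```python
import time
from typing import Callable, List, Optional


class CountingClock:
    """Wraps a nanosecond clock source and counts how often it is read."""

    def __init__(self, source: Callable[[], int] = time.monotonic_ns) -> None:
        self.source = source
        self.calls = 0

    def now_ns(self) -> int:
        self.calls += 1
        return self.source()


class Plug:
    """Hypothetical per-batch submission context, loosely inspired by the
    idea of caching the time in struct blk_plug (not the kernel's code)."""

    def __init__(self, clock: CountingClock) -> None:
        self.clock = clock
        self.cached_ns: Optional[int] = None

    def time_ns(self) -> int:
        # Read the real clock once per batch; later callers reuse the value.
        if self.cached_ns is None:
            self.cached_ns = self.clock.now_ns()
        return self.cached_ns

    def flush(self) -> None:
        # Ending the batch drops the cached timestamp, so the next batch
        # starts with a fresh clock read.
        self.cached_ns = None


def submit_batch(clock: CountingClock, n_requests: int,
                 stamps_per_request: int,
                 plug: Optional[Plug] = None) -> List[int]:
    """Simulate issuing n_requests, each taking stamps_per_request
    timestamps (hypothetical stand-ins for stats/accounting hooks)."""
    stamps: List[int] = []
    for _ in range(n_requests):
        for _ in range(stamps_per_request):
            if plug is not None:
                stamps.append(plug.time_ns())
            else:
                stamps.append(clock.now_ns())
    if plug is not None:
        plug.flush()
    return stamps


if __name__ == "__main__":
    uncached = CountingClock()
    submit_batch(uncached, n_requests=32, stamps_per_request=3)

    cached = CountingClock()
    submit_batch(cached, n_requests=32, stamps_per_request=3, plug=Plug(cached))

    print(uncached.calls, cached.calls)  # prints: 96 1
```

Raising `stamps_per_request`, a rough proxy for a kernel with more time-querying features enabled, grows the uncached count linearly while the cached version still reads the clock once per batch. The trade-off is granularity: every request in a batch sees the same timestamp, which is exactly the precision Axboe argues nobody needs.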

Five minutes' work for one person delivers 6% I/O benefits for all (Linux users)

In Axboe's tests, IOPS readings improved by 6% after patching compared with before. Interestingly, the asynchronous I/O interface developer hints that Linux users might see even greater benefits in the real world. This is because, on his test system, Axboe "doesn't even enable most of the costly block layer items that you'd typically find in a distro and which would further increase the number of issue side time calls." In other words, those using more bloated Linux vendor kernels could get more mileage out of Axboe's new patches.

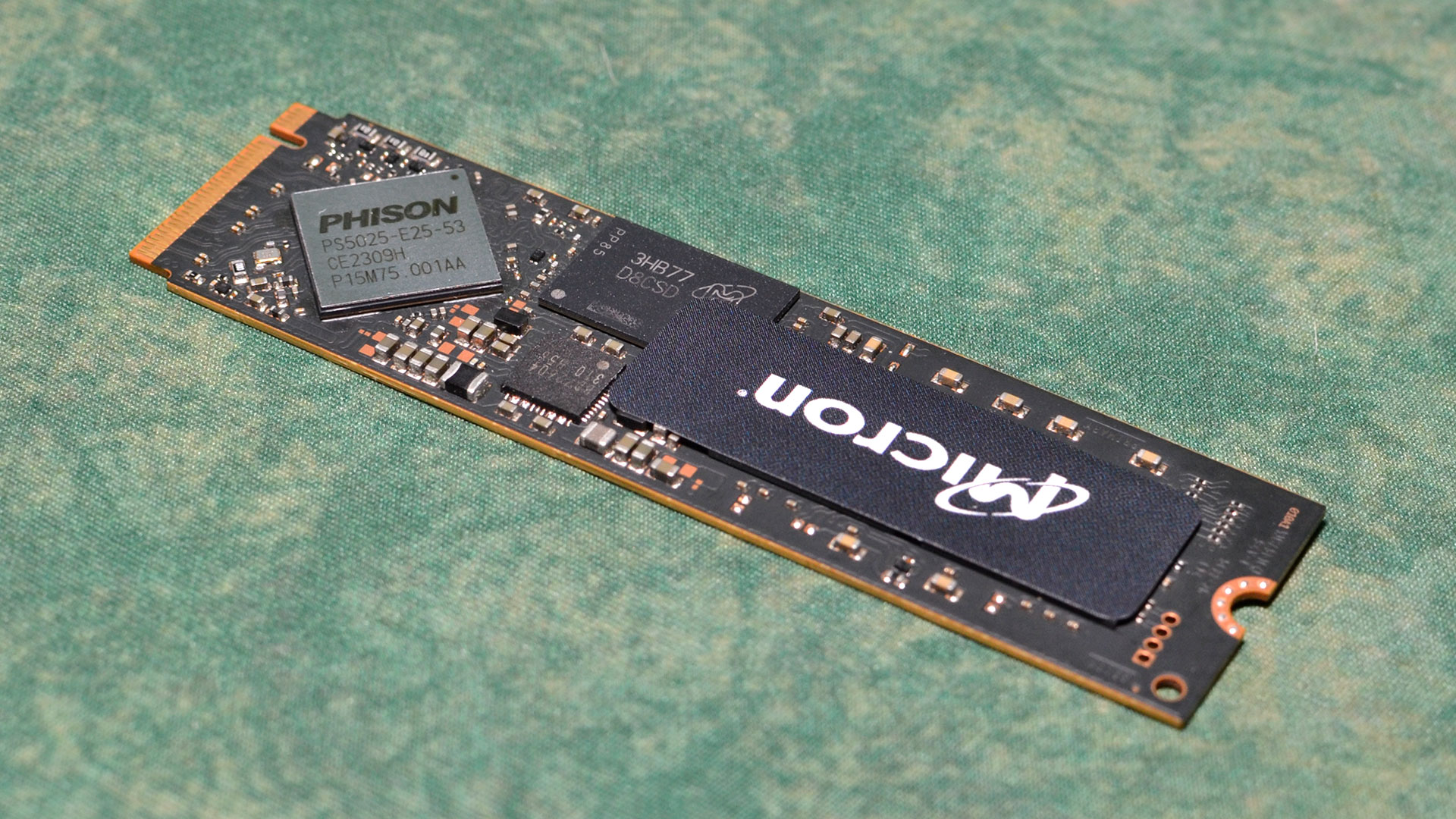

Phoronix reckons there is a good chance the RFC patches could be ready for upstreaming with Linux 6.9, later this year. Whenever it arrives, it is great to get extra performance for free, especially as storage can be a common system bottleneck. Meanwhile, if you are in the market for faster or more capacious storage, it might be a good idea to check out our best SSD storage guide which covers everything from budget SATA drives to the latest M.2 PCIe SSDs.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.


coolitic: I mean, 90% of the work you put into coding is either planning or bug-fixing, so describing it as a "5 minute job" when spending a long time thinking about it is somewhat misrepresentative.

usertests:

> coolitic said: I mean, 90% of the work you put into coding is either planning or bug-fixing, so describing it as a "5 minute job" when spending a long time thinking about it is somewhat misrepresentative.

Which is why I'm now dubbing this code change a "multi-year job".

DougMcC: 'Nobody really needs' is a recipe for finding the people who need it. Who wants to bet on this being rolled back when they find out whose need they are ignoring?

bit_user:

> DougMcC said: 'Nobody really needs' is a recipe for finding the people who need it. Who wants to bet on this being rolled back when they find out whose need they are ignoring?

When syscalls take at least several hundred ns, it's hard to see a case where someone is going to nitpick over a few ns. It's below the noise floor. They really could've just truncated it to microsecond precision and it would've been fine.

Theodore Ts'o: "File system performance" is a little misleading. What Jens has done is to optimize the overhead for I/O submission. So it helps if you have a workload which is issuing a large number of I/Os, and if the storage device is fast enough that the overhead in recording time information for iostats dominates. For example, on a hard drive or a USB thumb drive, this change is probably not going to be really noticeable. Even for a consumer-grade, SATA-attached SSD, it's probably not going to be that great of an improvement. Furthermore, it's only going to matter for a high-IOPS workload, such as a 4k random read or random write workload. If your workload is a streaming read or streaming write workload, again, it's not going to be that big of a deal.

bit_user:

> Theodore Ts'o said: "File system performance" is a little misleading. What Jens has done is to optimize the overhead for I/O submission. So if you have a workload which is issuing a large number of I/O's, and if the storage device is fast enough that the overhead in recording time information for iostats dominates.

Thanks for the clarification.

I do wonder what the purpose of sampling the clock is. Is it just for updating the file metadata?

Also, it sounds as though it applies to all file I/O in Linux, not just io_uring. Can you confirm?

JJM_NY:

> DougMcC said: 'Nobody really needs' is a recipe for finding the people who need it. Who wants to bet on this being rolled back when they find out whose need they are ignoring?

High frequency trading firms do need nanosecond-level logging and optimize performance around that information.

bit_user:

> JJM_NY said: High frequency trading firms do need nanosecond level logging and optimize performance around that information.

They're not talking about globally caching the high resolution timer. This is just for file I/O, as far as I understand.

I'm totally with you on the general need for nanosecond-resolution precision (or, at least sub-microsecond). It's definitely useful for synchronizing entries from different logfiles and for detailed performance analysis.

If we go back and look at specific filesystems, I'm not sure if they all even support nanosecond resolution. I believe XFS only added this somewhat recently.