AI-generated videos now possible with gaming GPUs with just 6GB of VRAM

Bringing video diffusion to the masses.

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.

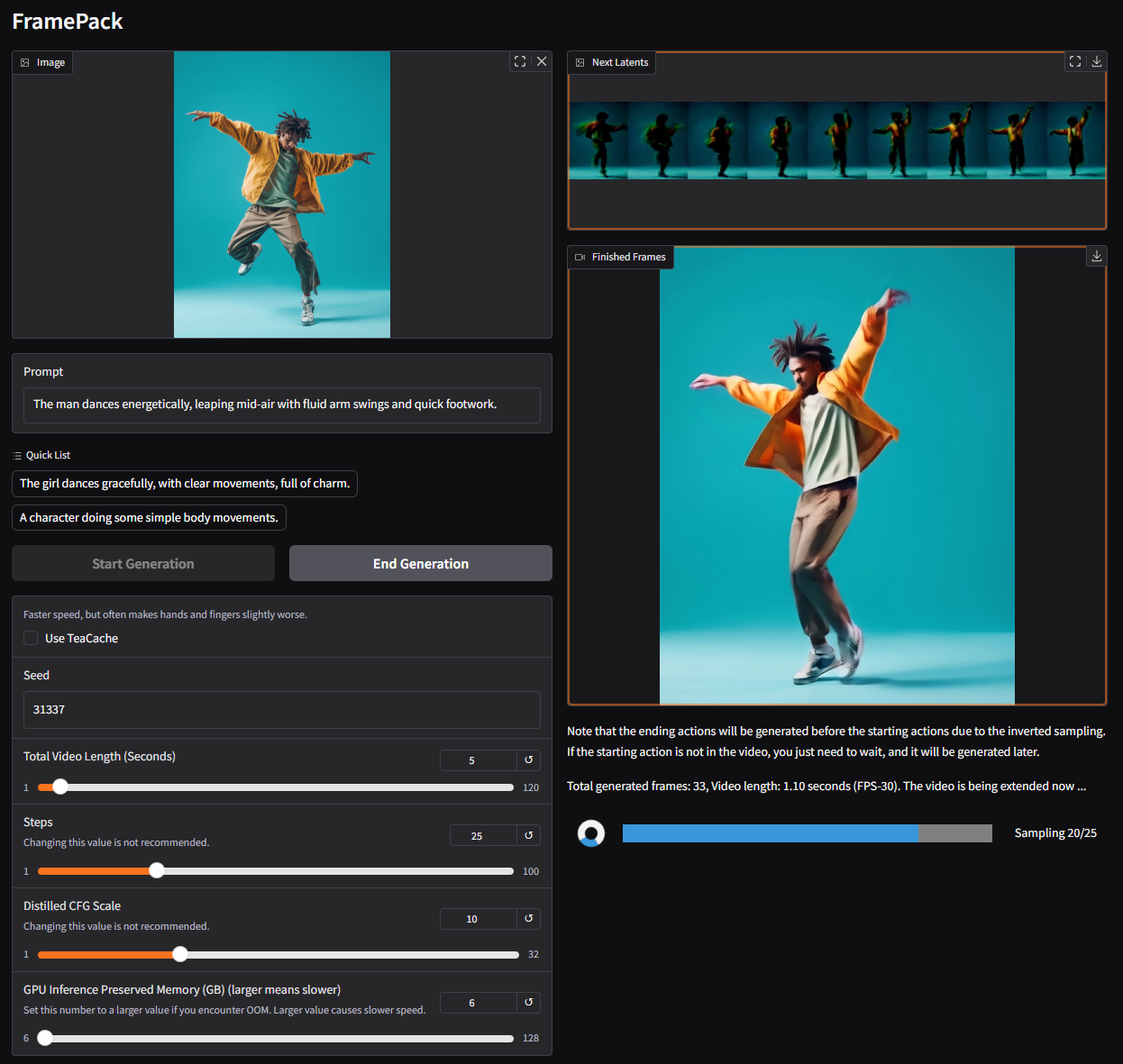

Lvmin Zhang, in collaboration with Maneesh Agrawala at Stanford University, introduced FramePack on GitHub this week. FramePack offers a practical implementation of video diffusion that uses a fixed-length temporal context for more efficient processing, enabling longer, higher-quality videos. A 13-billion-parameter model built on the FramePack architecture can generate a 60-second clip with just 6GB of video memory.

FramePack is a neural network architecture that uses multi-stage optimization techniques to enable local AI video generation. At the time of writing, the FramePack GUI is said to run a custom Hunyuan-based model under the hood, though the research paper mentions that existing pre-trained models can be fine-tuned using FramePack.

Typical video diffusion models that generate frame by frame use previously generated frames as context when denoising the next one. The number of input frames considered for each prediction is called the temporal context length, and it grows as the video gets longer. Standard video diffusion models demand a large VRAM pool, with 12GB being a common starting point. Sure, you can get away with less memory, but that comes at the cost of shorter clips, lower quality, and longer processing times.

Enter FramePack: a new architecture that compresses input frames according to their importance into a fixed-size context, drastically reducing GPU memory overhead. Frames further back in time are compressed more heavily, so the total context length converges to a fixed upper bound no matter how long the video gets. The authors describe the computational cost as similar to that of image diffusion.
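To make the "fixed upper bound" idea concrete, here is a minimal Python sketch. It assumes a simplified scheme rather than FramePack's actual code: the newest frame keeps a hypothetical budget of 1,536 tokens, each older frame gets half the tokens of the frame after it, and frames that round down to zero tokens are dropped. The function names and the token count are illustrative only.

```python
def naive_context_length(num_frames: int, tokens_per_frame: int = 1536) -> int:
    """Every past frame is kept at full size, so the context grows linearly."""
    return num_frames * tokens_per_frame


def packed_context_length(num_frames: int, tokens_per_frame: int = 1536, ratio: int = 2) -> int:
    """Hypothetical geometric packing (an assumption, not FramePack's exact kernels).

    The frame that is `age` steps old keeps tokens_per_frame // ratio**age tokens,
    where age 0 is the newest frame. Frames compressed to zero tokens are dropped.
    """
    total = 0
    for age in range(num_frames):
        tokens = tokens_per_frame // ratio**age
        if tokens == 0:
            break  # every older frame would also round down to zero
        total += tokens
    return total


if __name__ == "__main__":
    for n in (1, 10, 100, 1800):
        print(f"{n:>5} frames: naive={naive_context_length(n):>9,}  packed={packed_context_length(n):,}")
```

With halving, the packed total can never exceed twice the newest frame's budget (in this sketch it tops out at 3,070 tokens), while the naive context keeps growing with every frame, reaching 2,764,800 tokens at 1,800 frames.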

Coupled with techniques to mitigate "drifting," where quality degrades as the video gets longer, FramePack enables longer video generation without significantly compromising fidelity. As it stands, FramePack requires an RTX 30/40/50 series GPU with support for the FP16 and BF16 data formats. Support for Turing and older architectures has not been verified, and there is no mention of AMD or Intel hardware. Linux is also among the supported operating systems.
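The hardware requirement boils down to an NVIDIA GPU with native BF16 support and enough VRAM. A rough pre-flight check can be sketched as a hypothetical helper, `meets_framepack_requirements`. It assumes that compute capability 8.0 or newer (Ampere, i.e. the RTX 30 series, and later) is a reasonable proxy for "RTX 30/40/50 with FP16 and BF16," and it uses the article's 6GB figure as the VRAM floor. It is not an official check from the FramePack project.

```python
MIN_COMPUTE_CAPABILITY = (8, 0)  # Ampere and newer: assumed proxy for BF16 support
MIN_VRAM_GB = 6.0  # VRAM figure quoted in the article


def meets_framepack_requirements(compute_capability: tuple[int, int], vram_gb: float) -> bool:
    """Hypothetical pre-flight check, not part of FramePack.

    compute_capability is (major, minor), e.g. (8, 6) for an RTX 30 series card.
    With PyTorch installed, torch.cuda.get_device_capability() returns this tuple,
    and torch.cuda.get_device_properties(0).total_memory gives VRAM in bytes.
    """
    # Tuples compare element by element, so (12, 0) >= (8, 0) and (7, 5) < (8, 0).
    return compute_capability >= MIN_COMPUTE_CAPABILITY and vram_gb >= MIN_VRAM_GB


if __name__ == "__main__":
    cards = {
        "RTX 3060 12GB": ((8, 6), 12),
        "RTX 3050 4GB": ((8, 6), 4),
        "RTX 2080 Ti (Turing)": ((7, 5), 11),
        "RTX 5090": ((12, 0), 32),
    }
    for name, (cc, vram) in cards.items():
        print(f"{name}: {meets_framepack_requirements(cc, vram)}")
```

Note that the Turing card fails only because its support is unverified, not because it is known not to work.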

Aside from the RTX 3050 4GB, most modern RTX GPUs meet or exceed the 6GB requirement. In terms of speed, an RTX 4090 can produce up to roughly 0.6 frames per second (optimized with TeaCache), so your mileage will vary depending on your graphics card. Either way, each frame is displayed as soon as it is generated, providing immediate visual feedback.
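At 0.6 frames per second, the arithmetic for a full-length clip adds up quickly. The sketch below assumes the 30 FPS output rate the article cites for the model and treats generation throughput as constant, which real runs will only approximate; the function name is hypothetical.

```python
def estimated_generation_minutes(clip_seconds: float, output_fps: float, gen_fps: float) -> float:
    """Wall-clock minutes to render a clip, assuming constant generation throughput."""
    total_frames = clip_seconds * output_fps
    return total_frames / gen_fps / 60


if __name__ == "__main__":
    # RTX 4090 with TeaCache at about 0.6 generated frames per second (figure from the article)
    print(f"60 s clip: {estimated_generation_minutes(60, 30, 0.6):.0f} min")  # 1,800 frames
    print(f"5 s clip:  {estimated_generation_minutes(5, 30, 0.6):.1f} min")   # 150 frames
```

So even on an RTX 4090, a 60-second clip takes roughly 50 minutes, and slower cards stretch that time proportionally.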

The employed model likely has a 30 FPS cap, which might be limiting for many users. That said, by offering an alternative to costly third-party services, FramePack is paving the way to make AI video generation more accessible for the average consumer. Even if you're not a content creator, this is an entertaining tool for making GIFs, memes, and whatnot. I know I'll be giving it a go in my free time.

Hassam Nasir is a die-hard hardware enthusiast with years of experience as a tech editor and writer, focusing on detailed CPU comparisons and general hardware news. When he’s not working, you’ll find him bending tubes for his ever-evolving custom water-loop gaming rig or benchmarking the latest CPUs and GPUs just for fun.

Firestone

If social media wasn't dead before, it certainly is now. You can guarantee Instagram, TikTok, Meta, and Twitter will all be flooded with this before the year is out.

circadia

Firestone said: If social media wasn't dead before, it certainly is now. You can guarantee Instagram, TikTok, Meta, and Twitter will all be flooded with this before the year is out.

They're all already flooded. Worse, normal people think this sort of thing is awesome. Just look at how many people sent positive messages to the dude who started the trend of generating Studio Ghibli-style pictures with AI, telling him how happy it made them and whatnot.

usertests

circadia said: Worse, normal people think this sort of thing is awesome.

It is awesome.

closs.sebastien said: we will have even more 'realistic' fake news...

Good.

tamalero

Firestone said: If social media wasn't dead before, it certainly is now. You can guarantee Instagram, TikTok, Meta, and Twitter will all be flooded with this before the year is out.

The dead internet theory will become real.

qwertymac93

This uses the Hunyuan architecture under the hood, by the way. If anyone is curious enough to try this one out, I highly suggest you check out the "issues" tab in the GitHub repo and pick up the one-click SageAttention install script; you could see a 30% speed improvement if you do.

Even so, the speed is significantly behind regular Hunyuan text-to-video or Wan image-to-video, and the resolution is limited to below 640x640. That said, the prospect of "unlimited length" video generation on the horizon is huge. This technique could become the standard for future video generation models, similar to how DeepSeek-R1-style "reasoning" has become the standard for state-of-the-art text generation models.

CelicaGT

Just try searching the short-form video platforms; they're all full of AI drivel. Quality varies, but the vast, vast majority of it is incomprehensibly bad, and it exists solely as a vehicle for ad revenue. The general internet is just as bad: zombie sites with stolen, AI-regurgitated content fill search results, somehow promoted above actual content. I cannot, for fear of moderation, use the words that best describe my thoughts on this matter.

Amdlova

Working on the 4060 :) Made some videos... my 4060 and 32GB of RAM were tied up for 1 hour to make a 5-second video.

LolaGT

A quick look at Facebook (if you have it) shows that the reels, or whatever the short videos are called there, are chock-full of AI shorts, and hundreds if not thousands of people are dumb enough to think they are real.

We're pretty much doomed as the majority are easily duped.