Geekbench AI gets renamed, hits version 1.0

Geekbench ML no more.

Geekbench is moving its artificial intelligence benchmark to prime time. Developer Primate Labs is pushing the newly renamed Geekbench AI (previously called 'Geekbench ML' while in preview) to version 1.0. The benchmark is available for Windows, Linux, and macOS, as well as for Android in the Google Play Store and for iPhones and iPads in Apple's App Store.

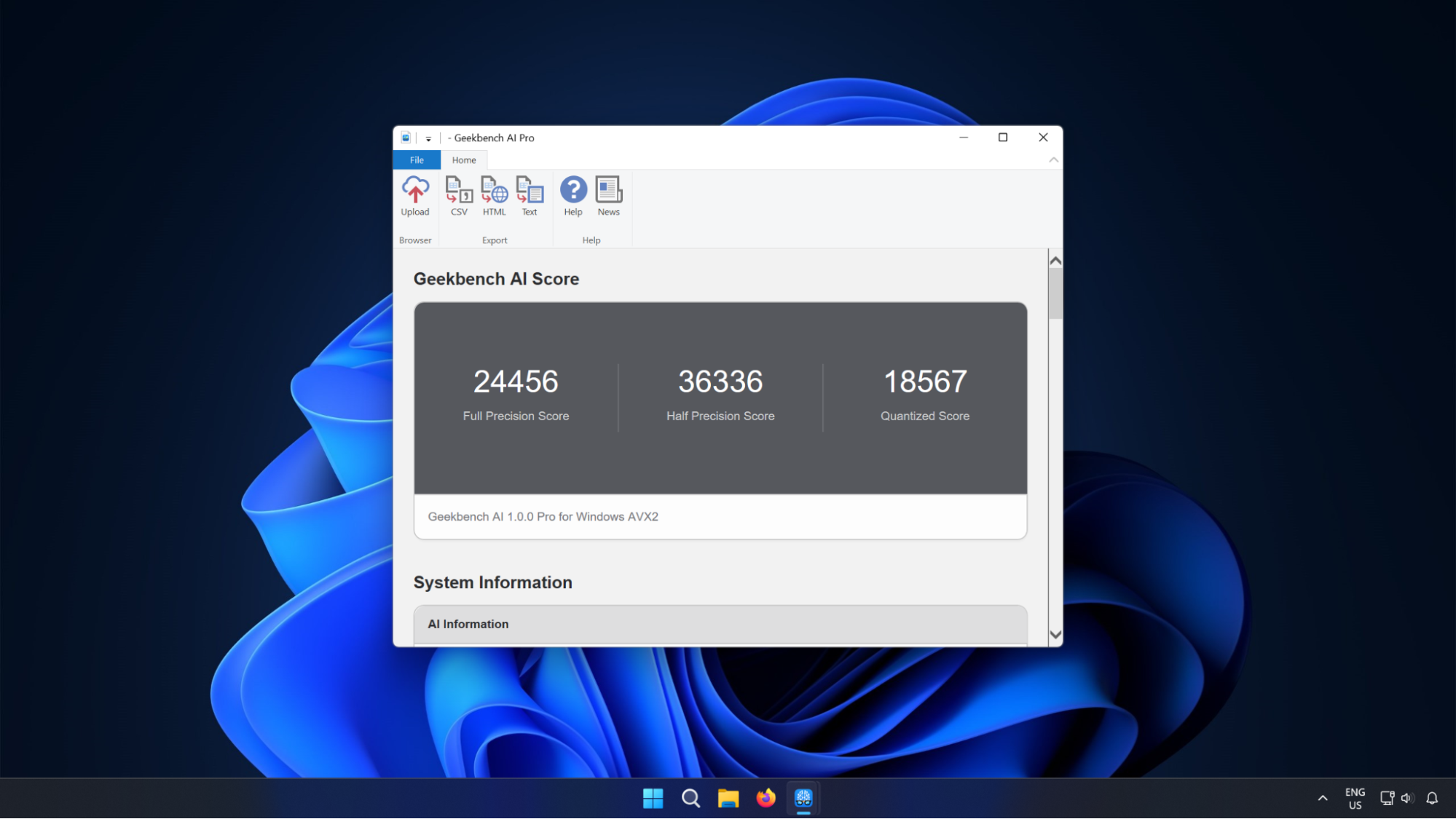

Like regular Geekbench, which provides two CPU scores (single-core and multi-core), Geekbench AI reports multiple scores. In this case there are three, one for each level of precision: single-precision data, half-precision data, and quantized data. Primate Labs claims that this is "to better describe the multidimensionality of AI performance and the impact of different hardware designs."
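Primate Labs doesn't detail here how Geekbench AI stores or quantizes its models, so the following is only a rough sketch, in standard-library Python, of why the three precisions can behave differently. It stores the same illustrative values as single-precision (32-bit) and half-precision (16-bit) floats and as symmetric per-tensor int8 integers, then reports the worst-case error each format introduces. The helper names, the sample values, and the quantization scheme are assumptions chosen for illustration, not Primate Labs' implementation.

```python
import struct

def round_trip(x, fmt):
    """Store x in an IEEE 754 format ('f' = binary32, 'e' = binary16) and read it back."""
    return struct.unpack("<" + fmt, struct.pack("<" + fmt, x))[0]

def quantize_int8(values):
    """Hypothetical symmetric per-tensor int8 quantization: map [-max|x|, max|x|] onto [-127, 127]."""
    scale = max(abs(v) for v in values) / 127 or 1.0  # fall back to 1.0 for all-zero input
    return [max(-127, min(127, round(v / scale))) for v in values], scale

def max_abs_error(values):
    """Worst-case absolute error each storage format introduces for these values."""
    q, scale = quantize_int8(values)
    return {
        "single": max(abs(v - round_trip(v, "f")) for v in values),
        "half": max(abs(v - round_trip(v, "e")) for v in values),
        "int8": max(abs(v - qi * scale) for v, qi in zip(values, q)),
    }

weights = [0.1234567, -1.5, 0.0003, 2.71828]  # illustrative values, not real model weights
for name, err in max_abs_error(weights).items():
    print(f"{name:>6}: max abs error {err:.2e}")
```

On these sample values the error grows from single precision to half precision to int8. Smaller types can run faster and more efficiently on hardware built for them, but they give up some accuracy, which is the kind of trade-off separate per-precision scores can expose.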

But there's another component: correctness. Each test also measures how closely the workload's output matches what's expected. Primate Labs illustrated this with the example of a model that detects hotdogs:

"Your hotdog object detection model might be able to run very, very quickly, but if it can only accurately detect a hotdog 0.2% of the time one is actually present, it’s not very good," it explained. "This accuracy measurement can also help developers see the benefits and drawbacks of smaller data types — which can increase performance and efficiency at the expense of (potentially!) lower accuracy. Comparing accuracy as part of performance through the use of our database can also help developers estimate relative efficiency."

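The article doesn't say exactly which accuracy metric Geekbench AI computes for each workload. As a hedged illustration of the hotdog example only, the sketch below uses recall (the share of images that actually contain a hotdog in which the model found one), a standard detection metric swapped in here as an assumption; `recall` and the synthetic test set are hypothetical.

```python
def recall(predictions, labels):
    """Share of positive examples (label True) that the model also predicted as positive."""
    true_positives = sum(1 for p, y in zip(predictions, labels) if p and y)
    actual_positives = sum(1 for y in labels if y)
    # Avoid dividing by zero when the test set contains no positive examples.
    return true_positives / actual_positives if actual_positives else 0.0

# Hypothetical test set: 1,000 images, each of which really contains a hotdog.
labels = [True] * 1000
# A hypothetical fast-but-poor model that flags a hotdog in only 2 of those images.
predictions = [i < 2 for i in range(1000)]

print(f"recall: {recall(predictions, labels):.1%}")  # prints "recall: 0.2%"
```

However quickly such a model runs, a 0.2% recall makes it useless, which is why a speed figure means little without an accuracy figure beside it.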
Several new frameworks are supported with the 1.0 launch. Geekbench AI now supports OpenVINO, ONNX, and Qualcomm QNN on Windows, OpenVINO on Linux, and "vendor-specific TensorFlow Lite Delegates," including Samsung ENN, ArmNN, and Qualcomm QNN, on Android. The company says this release also adds more datasets.

Scoring and comparing

Primate Labs wants to prevent any gaming of the system, or, more politely stated: "vendor and manufacturer-specific performance tuning on scores[.]" All workloads run for at least one second, which should ensure devices have the time to reach peak performance levels during testing.

Primate Labs suggests this will also better reflect the performance gap between phones and more powerful hardware such as desktops or AI GPUs. If a test finishes too quickly, the company says, the device will underreport its performance.
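Primate Labs' test harness isn't published in the article, but a minimum-runtime rule like the one described is commonly implemented by repeating a workload until a wall-clock threshold has passed and then dividing the iteration count by the elapsed time. The Python sketch below assumes that approach; `run_for_at_least` and the toy workload are hypothetical stand-ins, not Geekbench AI code.

```python
import time

def run_for_at_least(workload, min_seconds=1.0):
    """Call workload() repeatedly until at least min_seconds of wall-clock time pass.

    Returns (iterations per second, iteration count, elapsed seconds).
    """
    iterations = 0
    start = time.perf_counter()
    while True:
        workload()
        iterations += 1
        elapsed = time.perf_counter() - start
        if elapsed >= min_seconds:
            return iterations / elapsed, iterations, elapsed

# Toy stand-in for an inference call: sum a range of integers.
rate, count, elapsed = run_for_at_least(lambda: sum(range(10_000)))
print(f"{count} iterations in {elapsed:.2f} s -> {rate:,.0f} per second")
```

Timing over a minimum duration rather than a fixed number of iterations means a fast device simply completes more iterations, instead of finishing so quickly that start-up costs and clock ramp-up dominate the measurement.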

Like regular Geekbench, Geekbench AI will upload results to the Geekbench Browser (and, who knows, potentially provide fodder for upcoming hardware leaks). The browser lists both the highest-performing devices and the most recent results.

Currently, there are few ways to measure AI performance that don't come from the big hardware manufacturers, so this is a welcome addition. Primate Labs said in a blog post that Samsung and Nvidia, among others, are using the software, and the company expects it to evolve quickly, with many future releases.

But it's difficult right now to evaluate real-world AI performance, as there are limited use cases. So there's a lot of weight riding on just a few benchmarks to tell us what's what.


Andrew E. Freedman is a senior editor at Tom's Hardware focusing on laptops, desktops and gaming. He also keeps up with the latest news. A lover of all things gaming and tech, his previous work has shown up in Tom's Guide, Laptop Mag, Kotaku, PCMag and Complex, among others. Follow him on Threads @FreedmanAE and BlueSky @andrewfreedman.net. You can send him tips on Signal: andrewfreedman.01
