Silver Nanowire Networks to Overdrive AI Acceleration, Reservoir Computing

Further exploring the possible futures of AI performance.

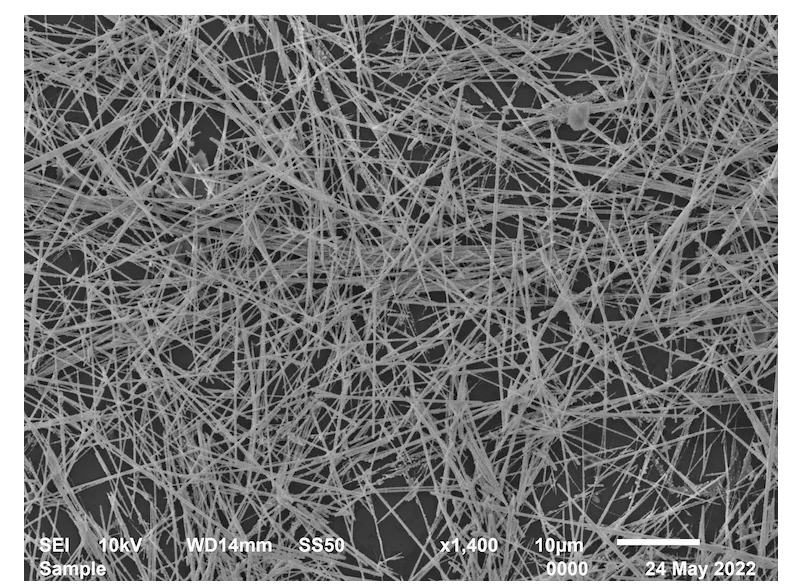

A team of researchers with the Universities of California and Sydney has sought to sidestep the enormous power consumption of artificial neural networks through the creation of a new, silver nanowire-based approach. Thanks to the properties of silver nanowire - nanostructures around one-thousandth the width of a human hair - and the similarity of its networks with those present in biological CPUs (brains), the research team was able to build a neuromorphic accelerator that results in much lower energy consumption in AI processing tasks. The work has been published in the journal Nature Communications.

Nanowire Networks (NWNs) explore the emergent properties of nanostructured materials – think graphene, XMenes, and other, mostly still under-development technologies – due to the way their atomic shapes naturally possess a neural network-like physical structure that’s significantly interconnected and possesses memristive elements. Memristive in the sense that it possesses structures that can both change their pattern in response to a stimulus (in this case, electricity) and maintain that pattern when that stimulus is gone (such as when you press the Off button).

The paper also explains how these nanowire networks (NWNs) “also exhibit brain-like collective dynamics (e.g., phase transitions, switch synchronisation, avalanche criticality), resulting from the interplay between memristive switching and their recurrent network structure”. What this means is that these NWNs can be used as computing devices, since inputs deterministically provoke changes in their organization and electro-chemical bond circuitry (much like an instruction being sent towards an x86 CPU would result in a cascade of predictable operations).

Learning in Real-Time

Nanowire Networks and other RC-aligned solutions also unlock a fundamentally important capability for AI: that of continuous, dynamic training. While AI systems of today require lengthy periods of data validation, parametrization, training, and alignment between different “versions”, or batches (such as Chat GPT’s v3.5 and 4, Anthropic’s Claude and Claude II, Llama, and Llama II), RC-focused computing approaches such as the researcher’s silver NWN unlock the ability to both do away with hyper-parametrization, and to unlock adaptive, gradual change of their knowledge space.

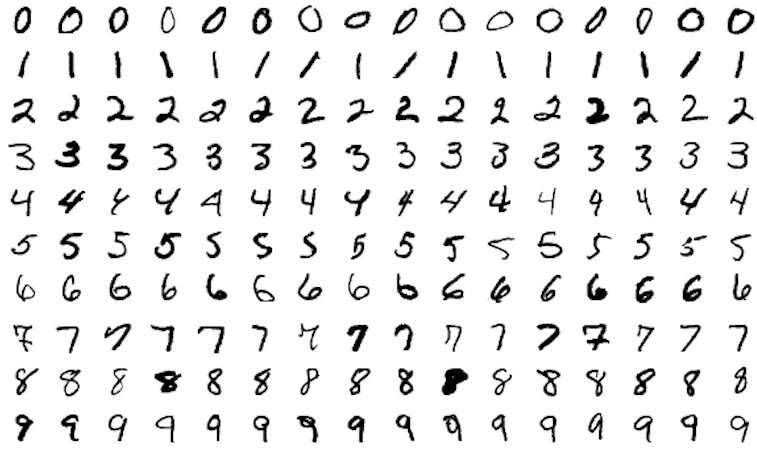

This means that with each new piece of data, the overall system weights adapt: the network learns without having to be trained and retrained on the same data, over and over again, each time we want to steer it towards usefulness. Through the online learning, dynamic stream-of-data approach, the silver NWN was able to teach itself to recognize handwritten digits, and to recall the previously-recognized handwritten digits from a given pattern.

Once again, accuracy is a requirement as much as speed is – results must be provable and deterministic. According to the researchers, their silver-based NWN demonstrated the ability to sequence memory recall tasks against a benchmark image recognition task using the MNIST dataset of handwritten digits, hitting an overall accuracy of 93.4%. Researchers attribute the “relatively high classifications accuracy” measured through their online learning technique to the iterative algorithm, based on recursive least squares (RLS).

The Biological Big Move

If there’s one area where biological processing units still are miles ahead of their artificial (synthetic) counterparts, it is energy efficiency. As you read these words and navigate the web and make life-changing decisions, you are consuming far fewer watts (around 20 W) to process and manipulate, to operate, on those concepts than even the world’s most power-efficient supercomputers.

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.

One reason for this is that while fixed-function hardware can be integrated into our existing AI acceleration solutions (read, Nvidia’s all-powerful market dominance with its A100 and H100 product families), we’re still adding that fixed-function hardware onto a fundamental class of chips (highly-parallel but centrally-controlled GPUs).

Perhaps it’s useful to think of it this way: any problem has a number of solutions, and these solutions all exist within the equivalent of a computational balloon. The solution space itself shrinks or increases according to the size and quality of the balloon that holds it.

Current AI processing essentially emulates the crisscrossing, 3D map of possible solutions (through fused memory and processing clusters) that are our neurons onto a 2D Turing machine that must waste incredible amounts of energy simply to spatially represent the workloads we need to fix – the solutions we need to find. Those requirements naturally increase when it comes to navigating and operating on that solution space efficiently and accurately.

This fundamental energy efficiency limitation – one that can’t be corrected merely through manufacturing process improvements and clever power-saving technologies – is the reason why alternative AI processing designs (such as the analog-and-optical ACCEL, from China) have been showing orders of magnitude improved performance and – most importantly – energy efficiency than the current, on-the-shelves hardware.

One of the benefits of using neuromorphic nanowire networks is that they are naturally adept at running Reservoir Computing (RC) – the same technique used by the Nvidia A100 and H100s of today. But while those cards must simulate an environment (they are capable of running an algorithmic emulation of the 3D solution space), purpose-built NWNs can run those three-dimensional computing environments natively - a technique that immensely reduces the workload for AI-processing tasks. Reservoir Computing makes it so that training doesn’t have to deal with integrating any newly added information – it's automatically processed in a learning environment.

The Future Arrives Slow

This is the first reported instance of a Nanowire Network being experimentally run against an established machine learning benchmark – the space for discovery and optimization is therefore still large. At this point, the results are extremely encouraging and point towards a varied approach future towards unlocking Reservoir Computing capabilities in other mediums. The paper itself describes the possibility that aspects of the online learning ability (the ability to integrate new data as it is received without the costly requirement of retraining) could be implemented in a fully analog system through a cross-point array of resistors, instead of applying a digitally-bound algorithm. So both theoretical and materials design space still covers a number of potential, future exploration venues.

The world is thirsty for AI acceleration, for Nvidia A100s, and for AMD’s ROCm comeback, and Intel’s step onto the fray. The requirements for AI systems to be deployed in the manner we are currently navigating towards – across High-Performance Computing (HPC), cloud, personal computing (and personalized game development), edge computing, and individually-free, barge-like nation states will only increase. It’s unlikely these needs can be sustained by the 8x AI inference performance improvements Nvidia touted while jumping from its A100 accelerators towards its understocked and sanctioned H100 present. Considering that ACCEL promised 3.7 times the A100’s performance at much better efficiency, it sounds exactly the right time to start looking towards the next big performance breakthrough – how many years into the future that may be.

Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.

-

bit_user Wow, this sounds pretty impressive! I wonder how temperature-sensitive it is.Reply

If there’s one area where biological processing units still are miles ahead of their artificial (synthetic) counterparts, it is energy efficiency.

I thought that had a lot to do with analog vs. digital and the fact that biology uses spiking neural networks, rather than the systolic approach currently dominating the AI industry. -

P.Amini This is interesting, we started in analog domain then we went digital and finally it surpassed analog in almost every area. Then that digital technology advancements led to AI, then advancements in AI is leading us to go back to analog again! I never would have thought of that!Reply -

bit_user Reply

Throughout the history of Neuromorphic Computing, analog has always been lurking in the background.P.Amini said:This is interesting, we started in analog domain then we went digital and finally it surpassed analog in almost every area. Then that digital technology advancements led to AI, then advancements in AI is leading us to go back to analog again! I never would have thought of that!

Back in the early/mid-90's, Intel partnered with another company to build an analog IC for accelerating "multi-layer perceptrons". Wikipedia says the Ni1000 is the second generation chip - and a digital one - but references a previous generation that was analog:

https://en.wikipedia.org/wiki/Ni1000

Also, a family member once told me about a 512-synapse analog perceptron he worked on, in the research division of the USAF, back in the early 1960s.

I remember when I first learned about analog computers and how much faster they were than digital computers of the time. However, you have to be aware of their drawbacks and limitations. There are good reasons why the computing revolution very quickly went digital.