Researchers Create Photonic Microprocessor

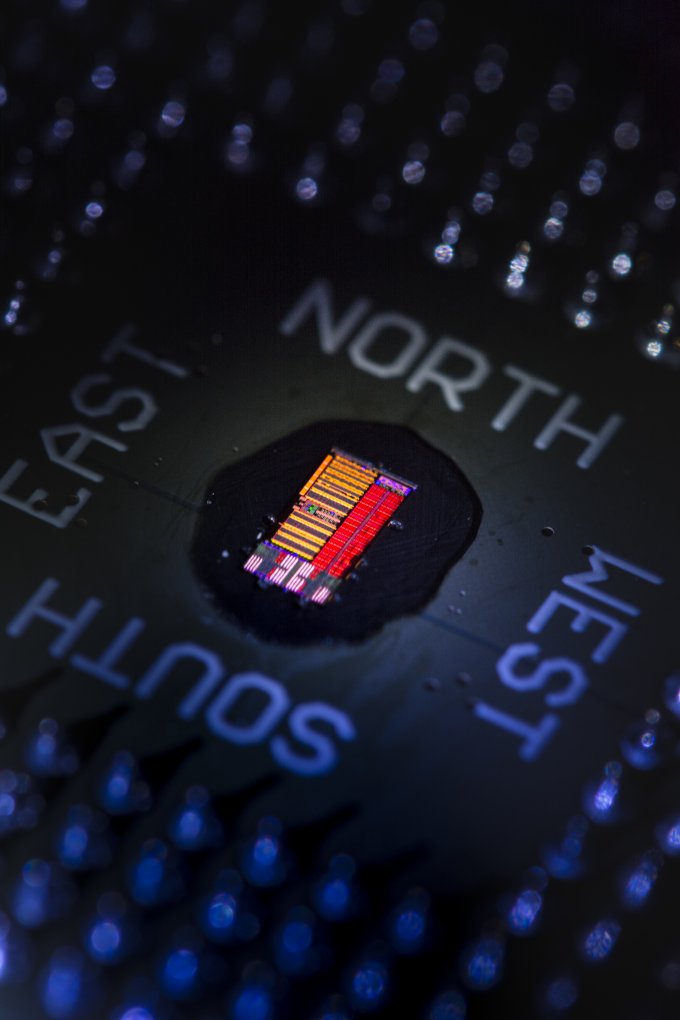

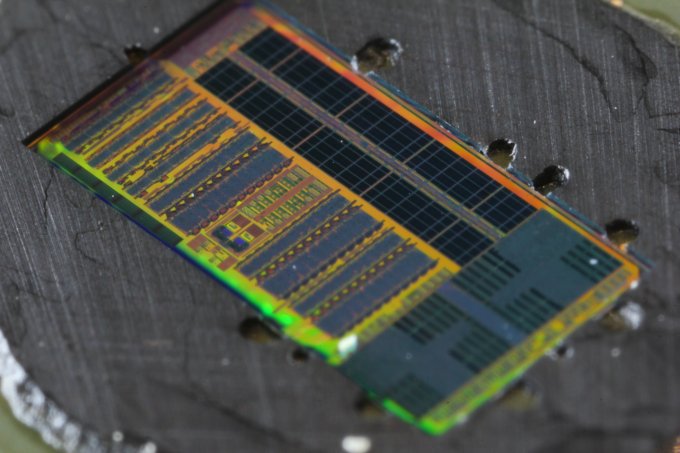

The University of Colorado Boulder, in collaboration with the University of California, Berkeley, and MIT, announced that they have developed the first photonic microprocessor.

According to Miloš Popović, an assistant professor at Boulder who led the team in the photonic processor's development, the processor uses infrared light with a physical wavelength of less than 1 micron to transmit data between nodes. “This enables very dense packing of light communication ports on a chip, enabling huge total bandwidth,” said Popović.

The microprocessor uses 850 optical input/output components and has a bandwidth density rated at 300 Gb/s per square millimeter, which is roughly 10 to 50 times greater than that of electronic processors currently available. The microprocessor also consumes significantly less power than a modern processor because the energy required to generate and transmit a light signal over distance is significantly lower than in an electronic processor.
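As a rough check on those figures, the quoted 300 Gb/s per square millimeter and the "10 to 50 times" ratio together imply a bandwidth density range for current electronic processors. The short Python sketch below only divides the numbers given in the article. The function and variable names are illustrative, and the result is an inferred range, not a figure reported by the researchers.

```python
# Figures quoted in the article.
PHOTONIC_DENSITY_GBPS_PER_MM2 = 300.0   # bandwidth density of the photonic chip
ADVANTAGE_LOW, ADVANTAGE_HIGH = 10, 50  # "roughly 10 to 50 times greater"


def implied_electronic_density(photonic, ratio_low, ratio_high):
    """Return the (min, max) electronic bandwidth density implied by the ratio range.

    This is plain arithmetic on the article's numbers, not measured data.
    """
    return photonic / ratio_high, photonic / ratio_low


density_min, density_max = implied_electronic_density(
    PHOTONIC_DENSITY_GBPS_PER_MM2, ADVANTAGE_LOW, ADVANTAGE_HIGH
)
print(f"Implied electronic density: {density_min:g} to {density_max:g} Gb/s per mm^2")
```

In other words, the article's comparison assumes electronic processors manage somewhere around 6 to 30 Gb/s per square millimeter.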

“One advantage of light based communication is that multiple parallel data streams encoded on different colors of light can be sent over one and the same medium – in this case, an optical wire waveguide on a chip, or an off-chip optical fiber of the same kind as those that form the Internet backbone,” said Popović.

Although the optical components are responsible for most of the work inside the processor, the 3 x 6 mm prototype is a hybrid design, with electronic cache and compute cores.

The photonic processor is still in its early days of development, but if the testing of these prototypes goes well, in a few years we could see a new wave of devices powered by them, ranging from IoT devices to supercomputers.

Follow Michael Justin Allen Sexton @EmperorSunLao.

-

kenjitamura

> in a few years we could see a new wave of devices

In the tech sphere "a few years" usually ends up being 10+. Wouldn't get my hopes up too high.

-

turkey3_scratch

This is incredible, and definitely a start for what will have to come to the computing world. Once silicon limits are finally hit (5-10 years), quantum computing or now photonic computing are the next big things.

I would like more information from them on what tests have been done with this CPU and the reliability.

-

techguy911

> This is incredible, and definitely a start for what will have to come to the computing world. Once silicon limits are finally hit (5-10 years), quantum computing or now photonic computing are the next big things.
>
> I would like more information from them on what tests have been done with this CPU and the reliability.

Quantum computers are the next big thing. Google already has one that, according to tests, is 100 million times faster than a single-core CPU.

Next year they plan to buy a much larger one with NASA, a 1000-qubit model.

Also, in Australia a group of scientists is working on a quantum computer printed on silicon.

D-Wave is the leading quantum computer mfg in the world.

I would say in about 10 years we will have hybrid computers with a quantum co-processor as they are for math and physics calculations only.

-

turkey3_scratch

Yeah, but the computer only does a specific thing; it's not like it can run Windows.

-

IInuyasha74

We also don't know about the cost of production yet. It might end up being that quantum computers are faster but far too expensive for everyday devices like smartphones and PCs. I have no idea, as neither is in production now, but the photonic processors seem to be targeted at lower-end devices.

-

alextheblue

> Quantum computers are the next big thing. Google already has one.

1) Not for consumers. They require extremely low temps near absolute zero.

2) Extremely expensive.

3) Extremely limited use - they're actually next to useless right now and yes that includes the ones Google has purchased to tinker with.

4) Unknown reliability/accuracy.

D-Wave and MS are both working on some new designs, but anything remotely useful for real work is a ways off. But even then the other issues will remain extremely difficult to solve, in particular the operating temperature range.

-

anbello262

I don't think quantum PCs are going to be a consumer reality anytime soon (20+ years), because of the type of calculation they are built for. But having photonic PCs in 15 years will really be a big step up for consumers. I sure hope this kind of technology is used for GPUs shortly after.

-

Darkbreeze

I'd be surprised if it didn't find its way into some kind of APU-type configuration that handled both CPU and graphics functions. Virtual reality and holographic potential from this kind of tech is probably phenomenal. If you think those holograms they are using on stage now are something, think ACTUAL holosuites.

-

Joe Black

> Quantum computers are the next big thing. Google already has one that, according to tests, is 100 million times faster than a single-core CPU.
>
> Next year they plan to buy a much larger one with NASA, a 1000-qubit model.
>
> Also, in Australia a group of scientists is working on a quantum computer printed on silicon.
>
> D-Wave is the leading quantum computer mfg in the world.
>
> I would say in about 10 years we will have hybrid computers with a quantum co-processor as they are for math and physics calculations only.

1000 qubits will be enough to process more "bits" of data than there are atoms in the observable universe if some docs I watched were telling the truth...

That said, I am not certain that q-computers will be ideal for all purposes. Precision is a big thing, the way I understand it. They excel at certain types of computational tasks that conventional computers are abysmally bad at in comparison: problems where brute iteration from step 0 to n to find an optimal solution would take too long, and problems that involve probability algorithms. It's a bit like how graphics processors excel at massively parallel tasks.

I am no expert on the matter, but it may very well be that the ideal chip of the future will have photonic GPU, CPU as well as QCPU cores all in one package. And it could be that QCPUs excel at graphics computations as well. I think it's very possible.