Deep Learning To Soon Bring Pro-Level Photography To Smartphones

Google researchers have been working on a project in which deep learning is used to create “professional-level photographs.” The project suggests that in the near future, any photo could look as if it were taken by a professional, and that pictures taken with smartphones could benefit the most from this technology over the coming years.

Deep Learning Photography

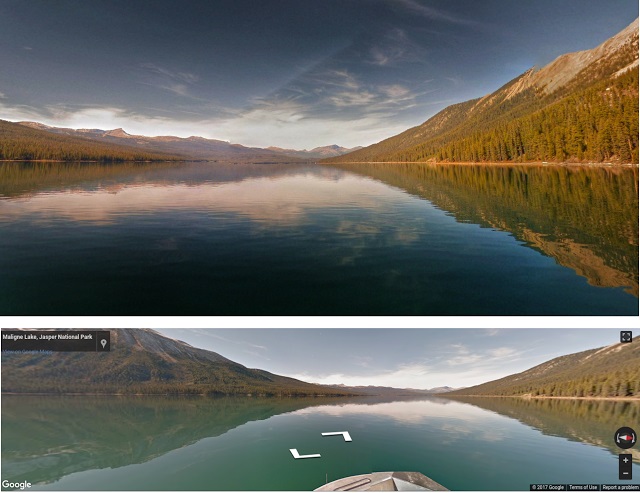

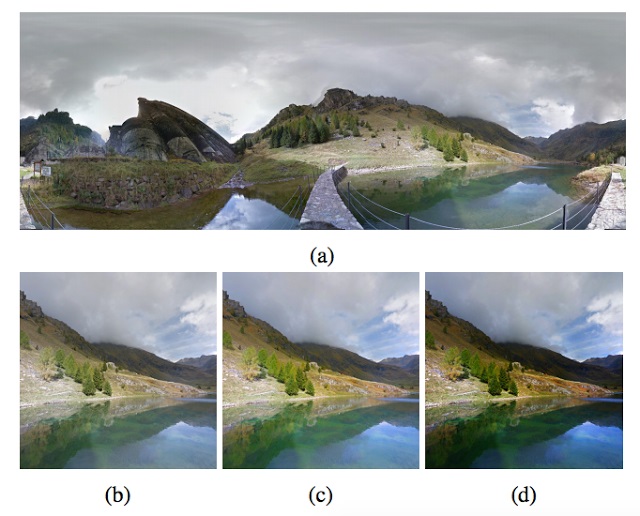

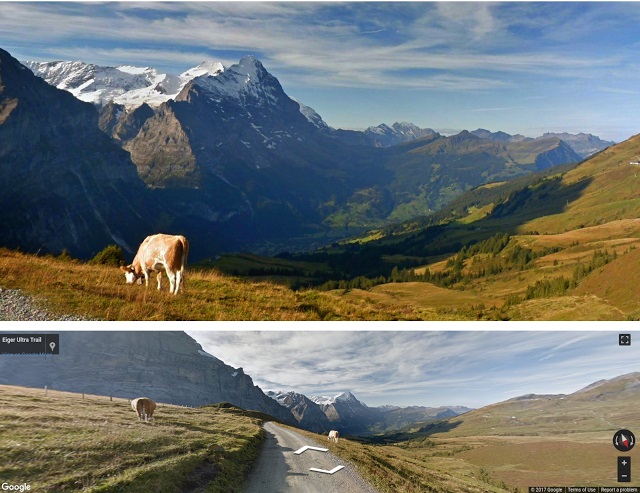

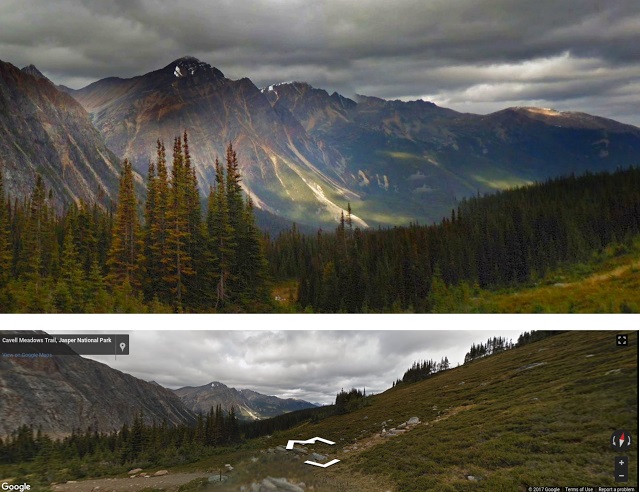

Google’s researchers have created a deep learning system that mimics the workflow of a professional photographer in order to achieve similar results with automatically edited photos. The system browsed panoramas from Google Street View and searched them for the best composition. Afterward, it applied various post-processing techniques to create an “aesthetically pleasing image.”
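The two-stage workflow described above (find a good composition, then post-process) can be pictured as a search over candidate crops. The Python sketch below is a simplified illustration under stated assumptions, not Google's method: the panorama is a small grayscale grid, crops have a fixed size, and `contrast_score` is a hypothetical placeholder for the learned aesthetic model, which this article does not describe.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]  # grayscale pixel values in [0, 1], indexed [row][col]


def crop(img: Image, top: int, left: int, h: int, w: int) -> Image:
    """Return the h x w sub-image whose top-left corner is (top, left)."""
    return [row[left:left + w] for row in img[top:top + h]]


def contrast_score(img: Image) -> float:
    """Hypothetical stand-in for a learned aesthetic model.

    Uses pixel variance as a crude contrast proxy: higher means "more interesting".
    """
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return sum((p - mean) ** 2 for p in pixels) / len(pixels)


def best_crop(
    panorama: Image,
    h: int,
    w: int,
    stride: int,
    score: Callable[[Image], float] = contrast_score,
) -> Tuple[int, int, float]:
    """Slide an h x w window across the panorama and keep the highest-scoring crop.

    Returns (top, left, score) of the best window found.
    """
    rows, cols = len(panorama), len(panorama[0])
    best = (0, 0, float("-inf"))
    for top in range(0, rows - h + 1, stride):
        for left in range(0, cols - w + 1, stride):
            s = score(crop(panorama, top, left, h, w))
            if s > best[2]:
                best = (top, left, s)
    return best
```

A real system would swap `contrast_score` for a trained model and would also vary crop size and aspect ratio; the exhaustive grid search is used here only because it is easy to follow.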

Google said that manually labeling the many features of aesthetically pleasing images for supervised learning would have been an intractable task. Instead, the company used a collection of professional-quality photos to automatically break down aesthetics into multiple aspects.

The researchers used traditional image filters to generate negative training examples for saturation, HDR detail, and composition. They randomly applied image filters and brightness changes to professional photos to degrade their appearance, and the system was then trained to recover the original “good” image from those degraded negatives. This training approach is related to a technique called a “generative adversarial network” (GAN).
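The degrade-then-restore idea can be sketched as follows. This is a hypothetical Python illustration, not Google's pipeline: it assumes an image is a flat list of RGB tuples and uses made-up brightness and saturation ranges to produce a (degraded, original) training pair, where a model would learn to map the first back to the second. It covers only the data-generation step and does not implement the adversarial part of a GAN.

```python
import random
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # RGB, each channel in [0, 1]


def clamp(x: float) -> float:
    """Keep a channel value inside the valid [0, 1] range."""
    return max(0.0, min(1.0, x))


def adjust(pixel: Pixel, brightness: float, saturation: float) -> Pixel:
    """Scale brightness, then push channels toward (s < 1) or away from (s > 1) gray."""
    r, g, b = (c * brightness for c in pixel)
    gray = (r + g + b) / 3  # simple unweighted luminance proxy (an assumption)
    return tuple(clamp(gray + (c - gray) * saturation) for c in (r, g, b))


def make_negative_pair(
    image: List[Pixel], rng: random.Random
) -> Tuple[List[Pixel], List[Pixel]]:
    """Return (degraded, original).

    The randomly filtered copy is the "negative" input; the untouched
    professional photo is the target the model should learn to reproduce.
    The parameter ranges below are illustrative assumptions.
    """
    brightness = rng.uniform(0.5, 1.5)
    saturation = rng.uniform(0.0, 2.0)
    degraded = [adjust(p, brightness, saturation) for p in image]
    return degraded, image
```

Passing an explicit `random.Random` instance keeps the degradations reproducible, which is convenient when regenerating a training set.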

Turing Test For Aesthetics

The researchers couldn’t develop an “objective” arbiter to evaluate the photographs’ beauty because, as they say, beauty is in the eye of the beholder. As far as we know, Google’s AI also doesn’t yet have feelings and emotions to be able to see "beauty" with its own "eyes."

This is why the company used the next best thing: a panel of photographers who were shown a mix of photos, some edited by humans and some by the deep learning system, without being told which were which. The judges were instructed to assign one of these four levels to each photograph they saw:

1. Point-and-shoot, without consideration for composition, lighting, etc.
2. Good photos from the general population, taken by people without a background in photography. Nothing artistic stands out.
3. Semi-pro. Great photos showing clear artistic aspects. The photographer is on the right track to becoming a professional.
4. Pro.


Google said that 40% of the photos that were automatically edited by its deep learning system received either a “semi-pro” or a “pro” rating.
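The 40% figure is the share of machine-edited photos whose rating landed on the top two levels of the judges' four-level scale. The Python sketch below computes that share; the enum names are our own labels for the four levels, and any ratings fed to it are up to the caller (none of Google's raw data is reproduced here).

```python
from collections import Counter
from enum import IntEnum
from typing import Iterable


class Rating(IntEnum):
    """The four quality levels the judges could assign, lowest to highest."""
    POINT_AND_SHOOT = 1
    GOOD = 2
    SEMI_PRO = 3
    PRO = 4


def share_semi_pro_or_better(ratings: Iterable[Rating]) -> float:
    """Fraction of ratings that are SEMI_PRO or PRO."""
    counts = Counter(ratings)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no ratings supplied")
    top = counts[Rating.SEMI_PRO] + counts[Rating.PRO]
    return top / total
```

For example, ten invented ratings made up of two point-and-shoot, four good, three semi-pro, and one pro give a share of 0.4.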

From Street View To Smartphones?

The researchers working on this project said that for now, they used only Street View panoramas to test their project, but that the technology could one day be applied to take better photos in the real world.

Most people take photos with their smartphones these days, and Google already has a horse in the race, with Android running on the vast majority of those handsets. Although we’ve seen impressive improvements in smartphone photo quality over the past few years, most people would argue that we’re still not close to taking photos that look as good as those taken with thousand-dollar-plus professional DSLRs (and commensurately talented photographers).

Based on physical qualities alone, smartphone cameras may never catch up to DSLRs. For one, smartphone lenses are unlikely to match the quality of DSLR lenses anytime soon. Second, DSLRs use significantly larger sensors that capture much more light, and those sensors couldn’t fit in a smartphone without making the phone look like a point-and-shoot camera.
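The sensor-size argument can be made concrete with a little arithmetic. The Python sketch below assumes that total light collected scales with sensor area (same f-number, shutter speed, and framing) and compares a standard 36 x 24 mm full-frame sensor with a hypothetical 5.6 x 4.2 mm smartphone sensor; the phone dimensions are an illustrative assumption, not a specific handset.

```python
import math
from typing import Tuple

Size = Tuple[float, float]  # (width_mm, height_mm)

FULL_FRAME: Size = (36.0, 24.0)  # 35 mm "full-frame" sensor used in many pro DSLRs
PHONE: Size = (5.6, 4.2)         # assumed, illustrative smartphone sensor size


def area_mm2(size: Size) -> float:
    """Sensor area in square millimetres."""
    width, height = size
    return width * height


def light_advantage(big: Size, small: Size) -> Tuple[float, float]:
    """Return (area ratio, difference in photographic stops).

    Assumes total light collected scales with sensor area, i.e. the same
    f-number, shutter speed and framing on both cameras. One stop is a
    doubling of light, hence log2 of the ratio.
    """
    ratio = area_mm2(big) / area_mm2(small)
    return ratio, math.log2(ratio)
```

With these assumed sizes, the full-frame sensor has roughly 37 times the area of the phone sensor, a difference of a little over five stops.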

Deep Learning Accelerators For Mobile

Deep learning photography systems, such as the ones being developed by Google right now, could optimize photos to the extreme and turn even mediocre-looking pictures into professional-quality photography.

To achieve those kinds of complex edits, smartphones are going to need specialized chips that can do all of those operations on a tight power budget. We’ve already seen interest from Qualcomm, Samsung, and MediaTek in designing such machine learning accelerators for mobile, but the first generation of such chips may not be completely optimized or adequate for such tasks.

We may first need software capable of automatic professional editing before chips optimized for that software can arrive. Similarly, Nvidia’s GPUs have evolved over the past few years to deliver ever-higher deep learning performance with each new architecture, after machine learning researchers had already settled on a handful of frameworks. Mobile deep learning chips will need to follow a similar path over the next few years to produce the best computational photography results.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.


Novell SysOp fire phasers 5 time Wrong. You need a Quadro card to edit 10-bit color, and a true 10-bit IPS monitor, not some BS scam 8+FRC, and it better be 100% Adobe RGB. Try finding one. Pros take pics in RAW 16-bit format. No damn stupidphone can do that.

I'll edit this to give you a hint. SONY X300 V2 4K OLED. $45,000, and only 10-bit panel. Dolby has a 12-bit panel. You want the best? You only have 2 choices, and you can't afford either of them.

Novell SysOp fire phasers 5 time There are only a handful of pro apps that let you edit 10-bit color, and Adobe is the only one the pros use and care about. And you need a Quadro card to do it. Geforce is not allowed. google are full of idiots who dabble in things they know nothing about. google fiber is dead and gone and moved on to Wi-Fi because larry page is a moron and knows nothing about the expense of rolling out fiber. google videos is dead and gone too. Never trust corps or anyone with your data. Pay cloud services have folded overnight and all your info is gone.

Just who are these "experts" google used to examine these pics? I doubt any of them are pros and none of them have access to or use the $40,000 equipment I listed above. And I can guarantee NONE of you on this forum knew of it either.

Krazie_Ivan i'm just going to downvote Novell till his smugness balances out with his knowledge. knowing stuff is great, nice, but nobody wants to learn from a personality like that.

CobraMatte Does it do selfies?

If beauty is the only goal, it's ok, I suppose. It seems strange that the edited pictures no longer accurately depict what you took a picture of.

hannibal Heh! Take a picture of a ghetto and dying people and the AI changes that to "Bold and Beautiful" with luxury cars!

Photo manipulation in a big way ;)

msroadkill612 Big hit with: estate agents, used cars, dating sites, tourism in war zones, the Midwest & Texas, investment brochures, ...

derekullo, replying to Novell SysOp:

I have been able to edit 10-bit color per channel in GIMP for free for about 2 years.

https://www.gimp.org/news/2015/11/27/gimp-2-9-2-released/


Crystalizer, replying to Novell SysOp:

You are making a lot of assumptions.

1. You think google cannot afford the best professionals?

2. Because one of Google's many features is not successful, suddenly it all becomes crap?

3. You also think Google has no cash for professional gear.

4. You think no one on this forum has any knowledge of what you are talking about.

And the funniest thing is, I think all of those are wrong. That, I think, makes you either a troll, an idiot, or a guy who is desperately fighting against this so as not to lose his job or something, by throwing out "Impossible, I'm a pro" comments.

Here is something to think about(you could have asked nicely too you know).

Even an intermediate programmer is not limited to bits in image processing. An AI programmer involved in deep learning is most often far beyond intermediate. Access to pro equipment can be arranged using a company's dedicated credit card with approved funding for the project. Google seems to want the very best, so that should not be a problem. In this case, it's easier to just hire a professional photographer with pro equipment.

5. You should never trust anyone with your personal information! But that would be boring? Why not just lie down and die?

I think using artificial intelligence to enhance images beyond their original scope is a great idea. AI is great at analyzing and processing, so it shouldn't be a problem to use shapes and some color information to make even better images. Humans have a powerful imagination, and teaching a deep learning AI to make images beyond what humans can do is like winning another chess match against humans. If you have tons of 10-bit HDR images to get data from, why do you need an expensive camera when you can just fake it until you can't tell the difference? Scary, isn't it? Or is it? Not for me, at least.

Have a nice day. It seems like you need it!

peterf28 we live in virtual reality, in a simulation; humans were created by some more advanced civilisation