Nvidia JetPack 2.3 Doubles Deep Learning And Compilation Performance

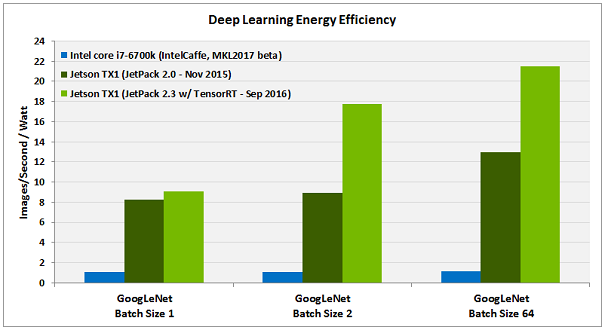

JetPack, the Jetson TX1 suite of development tools and libraries, has reached version 2.3, which doubles performance for deep learning tasks.

Nvidia’s Jetson TX1 is the company’s highest-performance embedded chip for deep learning. The chip can run intelligent algorithms that can solve problems associated with public safety, smart cities, manufacturing, disaster relief, agriculture, transportation and infrastructure inspection.

TensorRT

The new JetPack 2.3 includes TensorRT, previously known as the GPU Inference Engine. The TensorRT deep learning inference engine doubles the performance of applications such as image classification, segmentation and object detection (compared to Nvidia's previous implementation of cuDNN). Nvidia said that developers could now deploy Jetson TX1-powered real-time neural networks.

cuDNN 5.1

The new software suite includes the cuDNN 5.1 CUDA-accelerated library for deep learning that offers developers highly optimized implementations for standard routines such as convolutions, activation functions, and tensor transformations. The latest JetPack release also includes support for LSTM (long short-term memory), and other types of recurrent neural networks.
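To give a sense of what two of those "standard routines" compute, here is a minimal plain-Python sketch of a one-dimensional convolution followed by a ReLU activation. It is an illustration only and does not use the cuDNN API; the function names `conv1d_valid` and `relu` and the sample values are assumptions made up for this example. As in most deep learning frameworks, the "convolution" here slides the kernel without flipping it.

```python
def conv1d_valid(signal, kernel):
    """Slide `kernel` over `signal` (no padding) and return one dot product per position."""
    positions = len(signal) - len(kernel) + 1
    return [
        sum(signal[i + j] * kernel[j] for j in range(len(kernel)))
        for i in range(positions)
    ]


def relu(values):
    """Rectified linear unit: negative values are clamped to zero."""
    return [max(0.0, v) for v in values]


# Hypothetical input and a simple difference kernel.
signal = [1.0, 2.0, 0.0, -1.0, 3.0]
kernel = [1.0, 0.0, -1.0]

features = relu(conv1d_valid(signal, kernel))
print(features)  # [1.0, 3.0, 0.0]
```

A library such as cuDNN runs the same kind of arithmetic over multi-dimensional tensors on the GPU, which is where the optimized implementations matter.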

Nvidia seems focused on not only improving performance for the most common types of neural networks right now, but also adding support for others. We’re still in the early days of the deep learning field, and it may be worth experimenting with various types of neural networks to achieve the best results for different kinds of applications.

Multimedia API

Performance is important for machine learning, and because computer vision applications are poised to become some of the most popular deep learning applications, Nvidia also added a low-level multimedia API package for Jetson. The package includes a camera API and a V4L2 (Video4Linux2) API.

The camera API gives developers per-frame control over the camera parameters and EGL stream outputs that allow efficient interoperation with GStreamer and V4L2 pipelines. The V4L2 API includes support for decode, encode, format conversion and scaling functionality. V4L2 encoding gives developers low-level access to features such as bit-rate control, quality presets, low-latency encode, temporal trade-off and motion-vector maps.


CUDA 8

The new JetPack 2.3 includes support for CUDA 8, the latest version of Nvidia’s GPU programming platform. The company also optimized the NVCC CUDA compiler, which now achieves a 2x faster compilation time. CUDA 8 supports Nvidia’s accelerated library for graph analytics (nvGRAPH) and new APIs for half-precision floating-point computation in CUDA kernels. Nvidia also added cuBLAS and cuFFT libraries.
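Half precision trades accuracy and range for speed and memory. The sketch below is not CUDA code and does not use the new CUDA 8 APIs; it assumes only Python's standard `struct` module, whose `"e"` format packs a value as an IEEE 754 16-bit float, to show what rounding to half precision does to a few numbers. The helper name `to_half` is made up for this example.

```python
import struct


def to_half(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    # Pack into 16 bits, then unpack back into a regular Python float.
    return struct.unpack("<e", struct.pack("<e", x))[0]


print(to_half(0.1))      # 0.0999755859375 (only about 3 decimal digits survive)
print(to_half(2049.0))   # 2048.0 (above 2048, odd integers are not representable)
print(to_half(65504.0))  # 65504.0 (the largest finite half-precision value)
```

Neural network inference often tolerates this loss of precision, which is one reason half-precision arithmetic is attractive on an embedded GPU.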

Leopard Imaging Partnership

Nvidia has partnered with Leopard Imaging Inc. to create camera solutions, such as stereo depth mapping for embedded machine vision applications, as well as for other consumer applications. Developers can use Leopard’s solutions to integrate RAW image sensors with Nvidia’s internal or external image signal processors via CSI or USB interfaces.

The JetPack 2.3 software suite can be downloaded from Nvidia’s website.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.


Jake Hall I didn't understand any of that... I feel like such a piece of <Language, Watch it please.>

bit_user Impressive that software optimizations accounted for such an improvement. I wonder how much of it is down to simply improving data access patterns.

For those who don't know, this has 4x A57 cores and 256 Maxwell cores. Memory is dual-channel LPDDR4-1600 w/ 2 MB L2 Cache. 20 nm process node. 512 GFLOPS, single-precision; 1024 GFLOPS @ fp16. ~10 W.

They call it an "embedded supercomputer". But, in terms of raw performance, a low-end gaming desktop with a GTX 950 is faster.