Google Shows Off Project Tango Area Learning, Lenovo Phone (Still) Coming This Year

Project Tango seems to be making strides, but it's still just a project. That will change this year: in a session at Google I/O 2016, Lenovo said it will launch the first consumer-facing Project Tango-enabled phone at some point in 2016, presumably this summer. (No word from Intel yet on a release date for its own Project Tango device.) In the session, we also saw a Project Tango demo about area learning.

Project Tango is a technology that enables mobile devices to learn from and understand their surrounding geometry and position in the world using computer vision. To do so, it employs three main technologies: motion tracking, depth perception and area learning.

Motion tracking allows a device to understand how it moves through the world and its position relative to its starting point. Depth perception is handled by 3D cameras present in all Project Tango-enabled devices that sense the geometry of the surrounding environment. Finally, area learning, the topic demonstrated today, gives Project Tango-enabled devices memory.

Understanding Area Learning

To understand how devices memorize through area learning, imagine walking into a room, spending some time in it and making mental notes of the space, such as its size and points of interest. Now leave the room; you'll have roughly memorized how it looked. When you walk back in, you should recognize it at once. Project Tango works in a similar way. A Project Tango-enabled device memorizes by taking and storing mathematical descriptions of what the environment looks like. If you leave with the device and return, the device will recognize the area based on the descriptions it has stored.
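As a rough illustration of the idea (not Project Tango's actual algorithm or API), the sketch below treats each stored "mathematical description" as a small numeric vector and declares an area recognized when enough of the descriptions in the current view lie close to a stored one. The function names, the Euclidean distance measure and both thresholds are assumptions made for this example.

```python
import math

def distance(a, b):
    """Euclidean distance between two equal-length descriptor vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def count_matches(stored, observed, max_dist=0.5):
    """Count observed descriptors that lie within max_dist of any stored one."""
    return sum(
        1 for obs in observed
        if any(distance(obs, s) <= max_dist for s in stored)
    )

def recognizes(stored, observed, min_fraction=0.6, max_dist=0.5):
    """Hypothetical rule: the area is 'recognized' when at least
    min_fraction of the observed descriptors match the stored memory."""
    if not observed:
        return False
    return count_matches(stored, observed, max_dist) / len(observed) >= min_fraction
```

A real system would use far richer descriptors and a more robust matching scheme; the point here is only that recognition compares compact descriptions rather than whole images.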

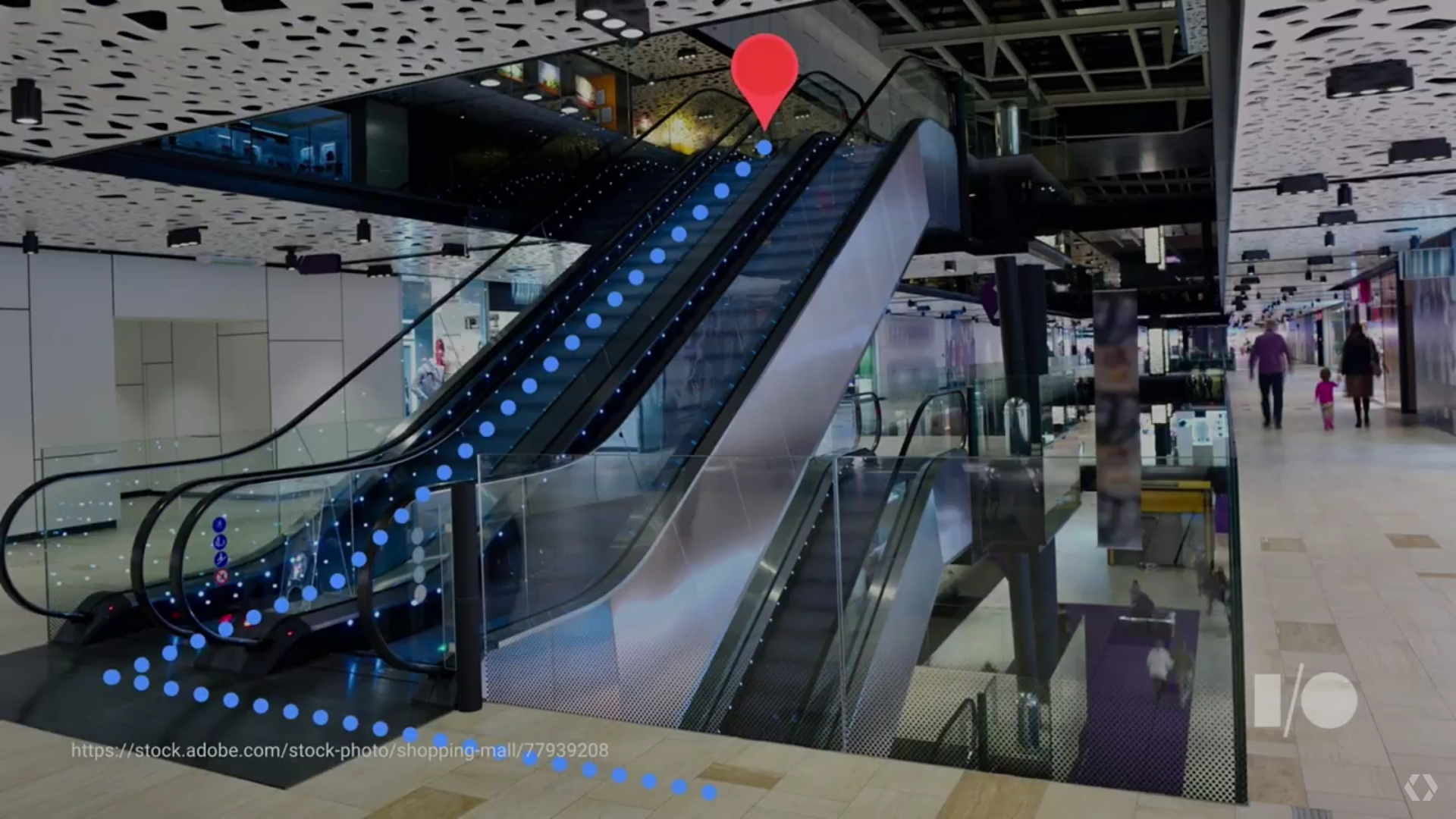

To illustrate this in a more practical sense, consider visiting a mall with your Project Tango device. Opening an application using Project Tango enables the device’s wide-angle camera, which looks for key landmarks in its field of view. When you move, the landmarks’ relative positions will change. Project Tango memorizes where it saw the landmarks as well as their descriptions. Project Tango doesn’t have to memorize the entire image, though--just the key landmarks.

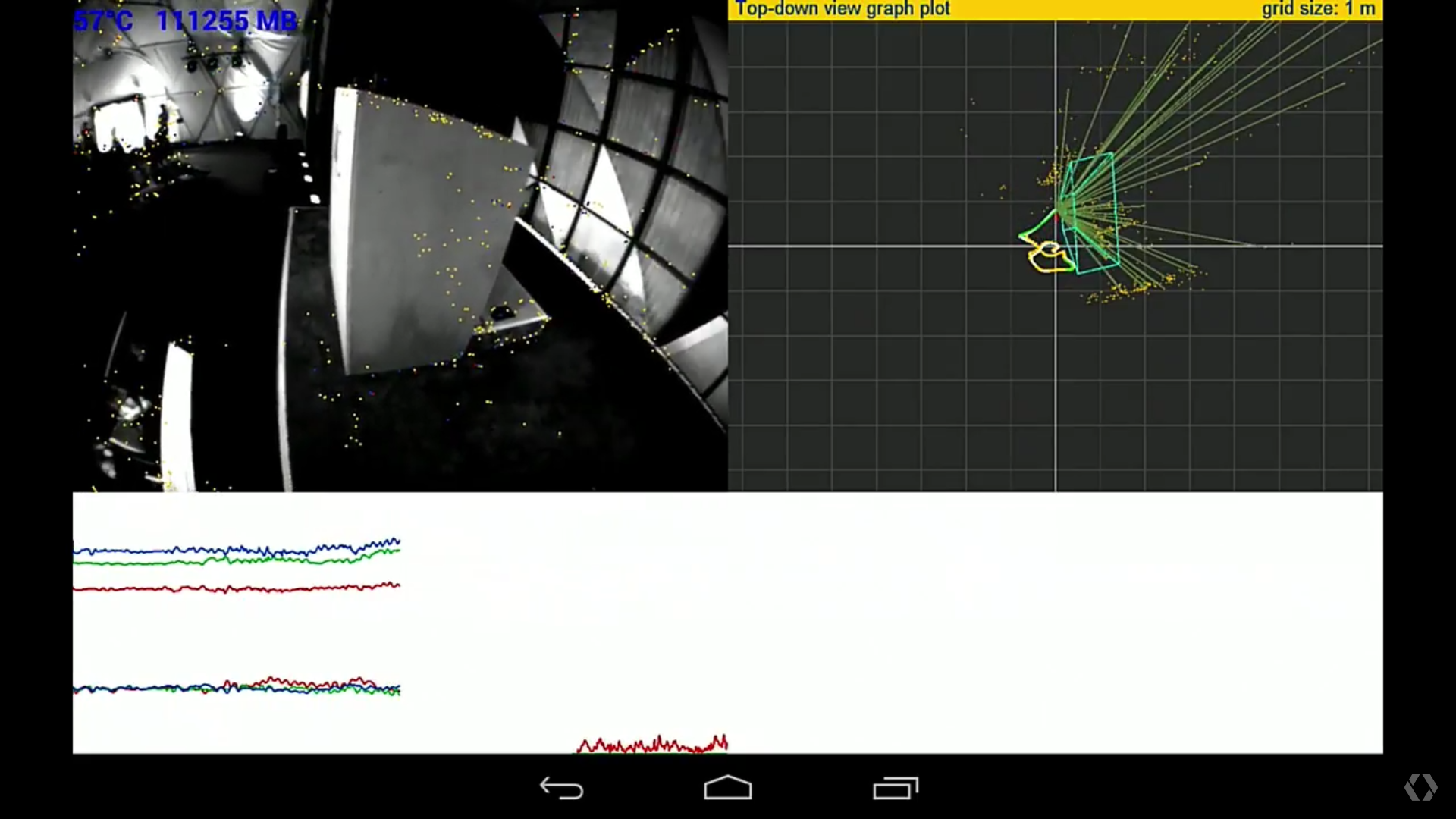

In practice, Project Tango can track at least 100 points at a time. As the device moves, Project Tango keeps track of how each landmark moves within the image. Once it memorizes a landmark, the dot that marks it stays fixed in place and can be viewed from different angles.

During the demo, the device’s camera was turned away, shaken and even covered, all of which momentarily disoriented Project Tango. Once the camera was given a glimpse of the target area again, it recognized the area and illustrated the landmark points.


Area learning makes it possible for augmented reality application developers to place virtual objects with consistency. For example, if you were shopping for dining room chairs, you could use an AR app to see how well a chair would fit in your dining room. Traditional AR is easily disrupted by motion tracking errors, which make virtual objects, in this case a chair, look out of place. Area learning memorizes the object’s place in the world, and when Project Tango recognizes the surrounding landmarks, the object is placed where it was meant to be.
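To make the chair example concrete, here is a minimal sketch of landmark-relative placement. It is not how Project Tango implements it: the sketch assumes the device only shifts between sessions (no rotation), works in 2D, and uses hypothetical helper names. The object's position is saved as an offset from the centroid of the memorized landmarks and restored once those landmarks are seen again.

```python
def centroid(points):
    """Mean position of a non-empty list of equal-length coordinate tuples."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(len(points[0])))

def save_anchor(landmarks, object_pos):
    """Store the object's position as an offset from the landmark centroid."""
    c = centroid(landmarks)
    return tuple(o - ci for o, ci in zip(object_pos, c))

def restore_object(landmarks_now, offset):
    """Re-place the object from the landmarks as currently observed.
    Assumption: the same landmarks are visible and the frame is only translated."""
    c = centroid(landmarks_now)
    return tuple(ci + o for ci, o in zip(c, offset))
```

Because the chair is tied to the landmarks rather than to the device's accumulated motion estimate, it lands in the same spot even if the device's own coordinate origin has shifted between sessions.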

Area learning isn’t perfect. In particular, Project Tango has trouble recognizing an area when too many of its landmarks have changed between views. The examples given were a messy room versus a clean one, a brightly lit image versus a dark one, and a leafy tree versus a bare one. Similarly, Project Tango needs discernible landmarks in order to function; an empty white room would be hard to recognize. Google’s solution for the time being is for app developers to focus on short-term experiences within areas whose landmarks are relatively static, such as a living room.

Multiple Devices, Multiple Users

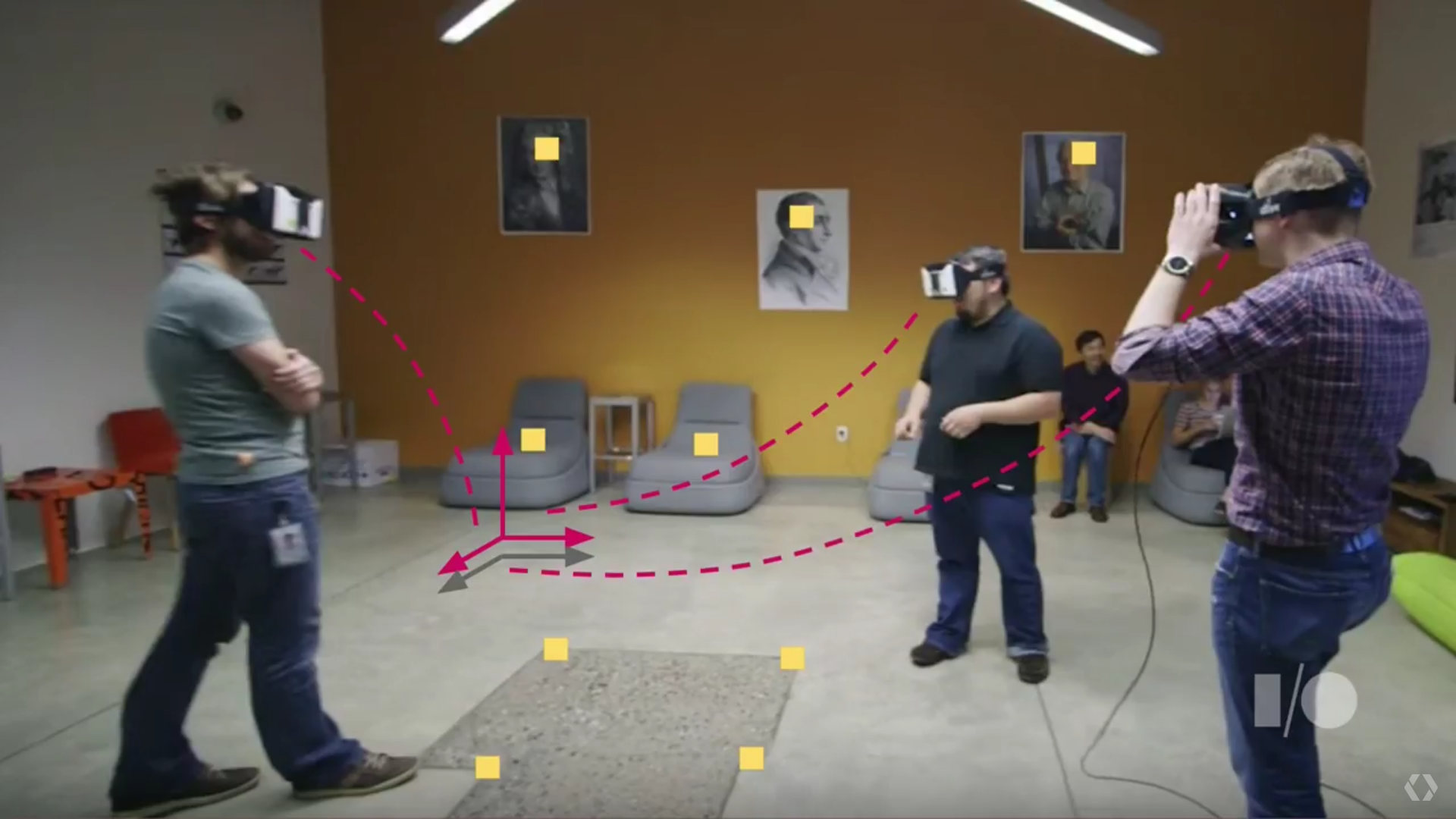

Multiple Project Tango-enabled devices open up the possibility of interactive VR multiplayer games. For example, one device may survey and memorize an area. That memory can then be shared with multiple nearby devices, which track their own location in relation to the shared landmarks. Participating devices can then engage in the same VR playspace.
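The shared-memory idea can be sketched in a few lines. This is an assumption-laden toy, not the Project Tango API: the shared area memory is a dictionary of named landmarks with map coordinates, each device reports where it sees those landmarks in its own local frame, and the frames are assumed to differ only by a translation.

```python
def estimate_offset(shared_map, seen_locally):
    """Average translation from a device's local frame to the shared map frame,
    computed over the landmarks the device currently sees (hypothetical helper)."""
    ids = [i for i in seen_locally if i in shared_map]
    if not ids:
        raise ValueError("no shared landmarks in view")
    dims = len(shared_map[ids[0]])
    return tuple(
        sum(shared_map[i][d] - seen_locally[i][d] for i in ids) / len(ids)
        for d in range(dims)
    )

def to_shared(point, offset):
    """Convert a point from a device's local frame into the shared frame."""
    return tuple(p + o for p, o in zip(point, offset))
```

Once every device can express its own position in the shared frame, a multiplayer app can reason about where players are relative to one another and to the same virtual objects.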

Developers are still becoming accustomed to Project Tango, but its functionality could lead to a wide range of practical applications in the future. Back to our mall example, suppose you were using Google Maps for directions to a particular store. Currently, Google Maps shows a blue circle that approximates your location. By recognizing landmarks, Project Tango can pinpoint your exact location. Combined with augmented reality, that could even let the Project Tango device draw a 3D path to your destination, as we saw with the GuidiGO app in a Project Tango demo at Mobile World Congress.

The availability of this technology might seem far off, but it’s a lot closer than you’d expect. In fact, everything shown during today’s demo will be available for developers next month.

Alexander Quejado is an Associate Contributing Writer for Tom’s Hardware and Tom’s IT Pro.


bit_user Intel stopped taking pre-orders, for their phone. No idea whether they cancelled existing preorders. Since they're discontinuing their entire phone platform, the RealSense/Tango phone might never see the light of day.

If it ever does ship, the old preorder price of $399 makes it very attractive for developers.