Quantum Computing Benchmarks Begin to Take Shape

Quantum computing is developing at a breakneck pace, with multiple global players (from research institutions to corporations) racing toward so-called "quantum supremacy": the point at which a quantum computer solves a problem that classical computers cannot solve in any practical amount of time. However, the industry has lacked a proper way to measure how well these competing approaches actually perform. Now, a team from the Quantum Economic Development Consortium (QED-C) has taken a first, tentative step towards an industry-wide performance gauge, which it has named Application-Oriented Performance Benchmarks for Quantum Computing.

There is a multitude of approaches to quantum computing currently being explored, and we've covered several of them: silicon quantum dots, topological superconductors, and trapped ions, among others.

In a nascent field, several approaches will be pursued until one (or several) proves the most efficient. Without the means to benchmark actual performance, however, quantum computing has been left with loosely defined and debatable performance metrics, such as qubit count (how many qubits a system contains) and quantum volume (roughly, how many of those qubits are actually useful once error rates are taken into account).

These metrics have so far served as a way to track the evolution of different quantum systems. Still, as we in the PC world know, a theoretical resource advantage doesn't always translate into performance: just think of the architectural differences between AMD and Nvidia GPUs, where chip area, for example, is far from a perfect predictor of performance. With the release of this benchmark, the conversation around quantum computing performance is shifting from qubit count to qubit quality.

And not all qubits are the same. As Dominik Andrzejczuk of Atmos Ventures (a seed-stage venture fund) puts it in The Quantum Quantity Hype, "The lower the error rates, the fewer physical qubits you actually need. [...] a device with 100 physical qubits and a 0.1% error rate can solve more problems than a device with one million physical qubits and a 1% error rate." The main performance limitation for quantum computing doesn't lie in scaling qubit counts, but in how extremely sensitive these systems are to external and internal disturbances, which produce calculation errors that force researchers to throw results (and expensive computing time) out the proverbial window.
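To get a feel for why a tenfold difference in error rate matters so much, consider a deliberately simplified model (our assumption, not the QED-C methodology or Andrzejczuk's own analysis): every operation fails independently with probability p, and there is no error correction, so a computation of n operations succeeds outright with probability (1 - p)^n. The short Python sketch below, using hypothetical helper names, computes how many operations each error rate allows before that success probability drops below 50%.

```python
import math


def error_free_probability(error_rate: float, num_operations: int) -> float:
    """Chance that every operation succeeds, assuming independent errors and no error correction."""
    return (1.0 - error_rate) ** num_operations


def max_operations(error_rate: float, target_success: float = 0.5) -> int:
    """Largest operation count whose error-free probability is still >= target_success.

    Solves (1 - p)^n >= target for n, i.e. n <= ln(target) / ln(1 - p).
    """
    return math.floor(math.log(target_success) / math.log(1.0 - error_rate))


# Compare the two error rates from the quote (the qubit counts are ignored in this toy model).
for p in (0.001, 0.01):
    n = max_operations(p)
    print(f"error rate {p:.1%}: about {n} operations before success falls below 50%")
```

Under these assumptions, the 0.1% device can run roughly ten times as many operations as the 1% device before a coin flip becomes a better bet. Real devices add error correction, correlated noise, and connectivity constraints that this toy model leaves out.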

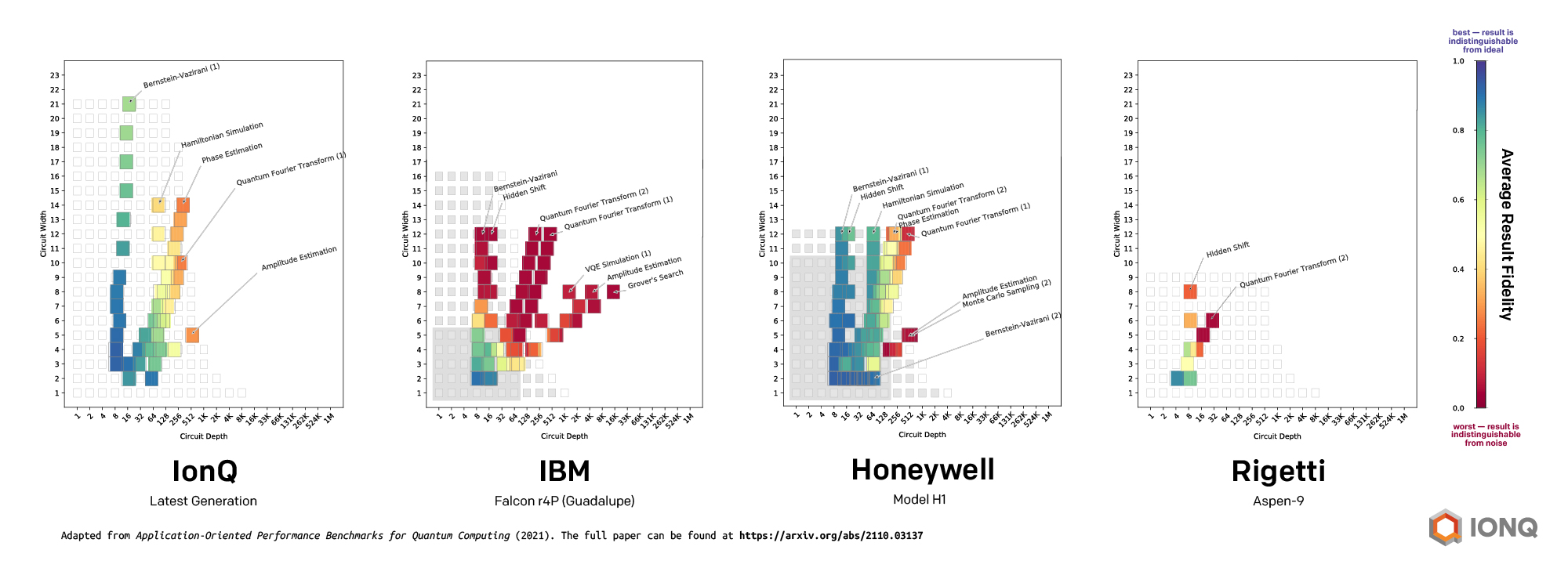

This qubit quality issue is front and center in the QED-C benchmark, which tested quantum computing systems from several companies, including IonQ, IBM, Rigetti, and Honeywell. While the published results don't yet include data for every benchmark in the suite, those that are included already paint an interesting picture: IonQ's trapped-ion approach to quantum computing seemingly delivers the most reliable (and thus highest-quality) results of the benchmarked systems.

This benchmarking approach currently has several limitations, which is only natural considering how young both quantum computing and its benchmarking are. Nevertheless, it's interesting to see how closely the development of quantum computing follows the historic, tried-and-true path of classical computing. Differences in system design and benchmarking philosophy will surely remain open questions for a while.


Francisco Pires is a freelance news writer for Tom's Hardware with a soft spot for quantum computing.