VR And AR Go To Mars: Interview With NASA Scientists Jeff Norris And Alex Menzies

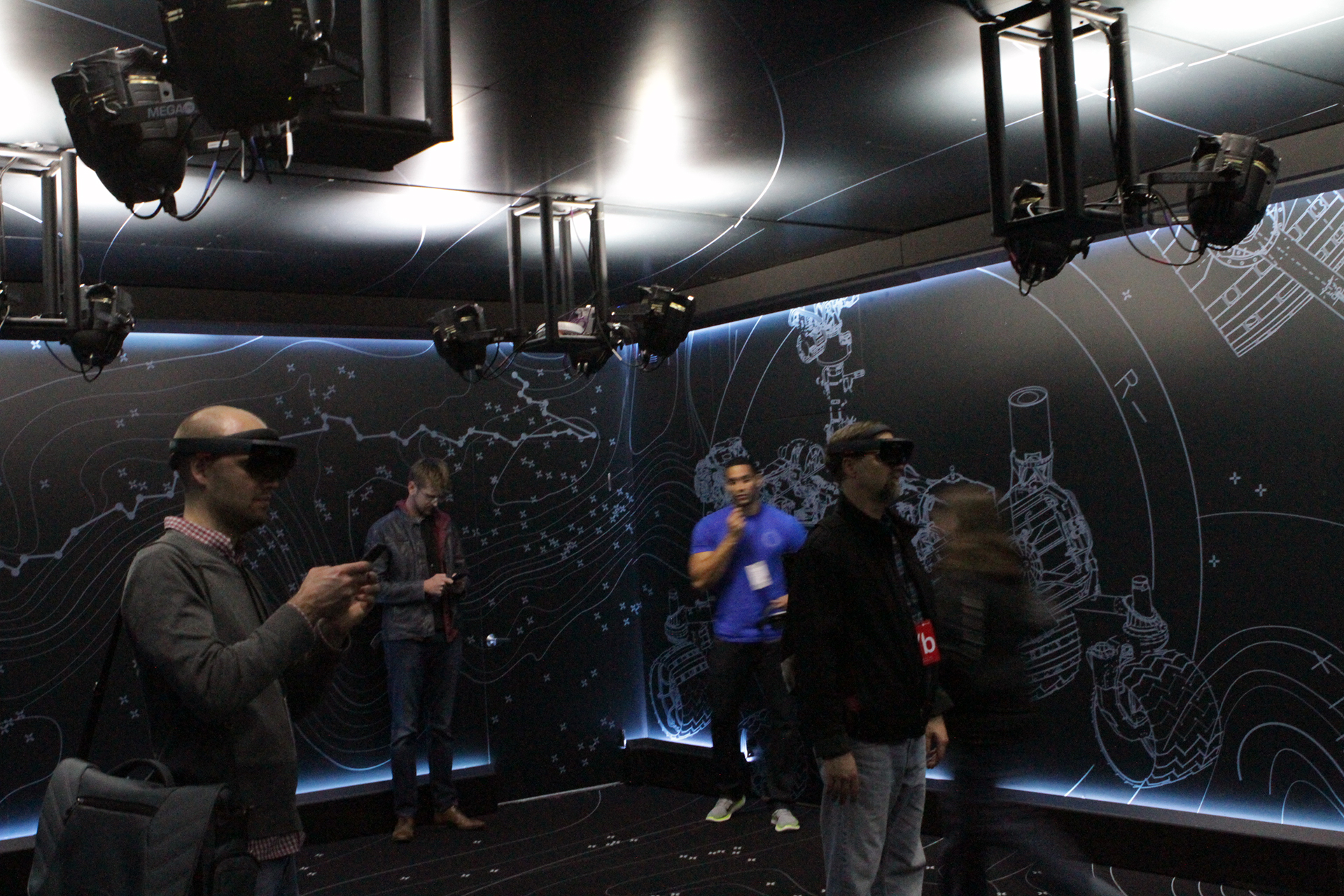

At Microsoft Build 2016, we enjoyed a pair of HoloLens demos. In one, we were transported to the surface of Mars. Once I realized that there were actual NASA scientists present around the demo, I tracked a couple of them down and probed them for more information on what it’s like to create VR and AR experiences like this one, the technology underpinning the demo, and how they solved problems such as image rendering on a mobile chip-powered device like the HoloLens.

I spoke with Jeff Norris, Mission Operations Innovation lead for the NASA Jet Propulsion Laboratory (JPL), and Alex Menzies, software lead for JPL’s augmented and virtual reality projects. Menzies also led the team that built the terrain for OnSight and Destination: Mars.

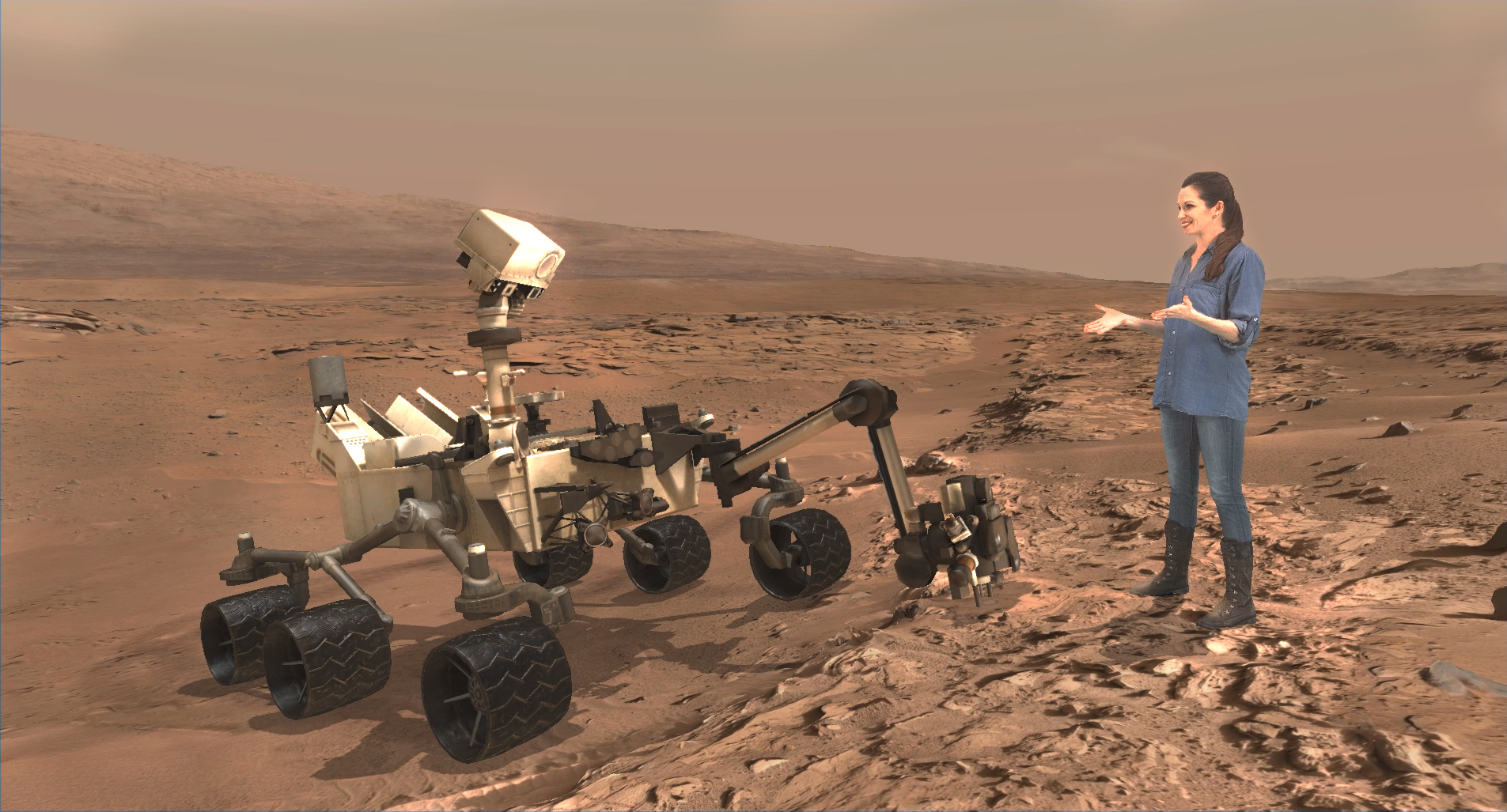

“OnSight” is the name of the Curiosity Rover mission operations tool that Microsoft and JPL created together. It is the technology used in the Destination: Mars demo and is an actual tool that NASA scientists use in the Curiosity Mars mission.

Norris told me that although the group is showing the HoloLens demo, they’re experimenting with numerous VR and AR HMDs, as well.

(I spoke with Norris and Menzies individually and combined their answers to my questions below for clarity and length.)

On Working With AR Versus VR

Tom’s Hardware: In terms of the actual building of the experience, how much of that were you actually involved in?

Jeff Norris: A great deal. First of all, OnSight, the core of the system, is a [NASA JPL] tool. Microsoft contributed to the development of it, and we continue to develop it at JPL. Specifically [regarding] Destination: Mars, we contributed the terrain that you see in there. We were largely in control of the script and directed the performances of the actors, and Microsoft contributed a great deal and the interactive elements that you see. It was a true collaboration.

TH: But Microsoft programmed it? They built it in Unity?

JN: No, they did not write all of the code. We have an engineering team at JPL that was in my lab, [and] a bunch of Unity experts that programmed things for virtual [and] augmented reality.

TH: Can you speak to how easy or difficult it is to build these experiences? Microsoft would like us to think that it’s super easy. In terms of building from scratch, how difficult was it to put [Destination: Mars] together?

JN: I’m fond of saying that as a field, virtual and mixed reality is challenging. We all have a great deal to learn. I’ve found that while developing applications for [HoloLens] and other similar devices, a lot of my initial intuitions about what would be usable or would be pleasant were wrong, because they were intuitions that were born of building web applications, phone applications or desktop applications. What’s really fun [is that] everyone that’s working in this field together is figuring those questions out together.

TH: I’m curious about the processing power required. All the devices [VR and AR HMDs] are totally different in what they require and demand. How was it different to build for something self-contained like HoloLens, compared to something like the [Oculus] Rift or [HTC] Vive that is essentially a peripheral connected to a PC?

JN: You’ve hit it on the head. These are devices that emphasize certain qualities, and they can’t emphasize everything at once. That’s an unworkable design, unfortunately. I think it’s been interesting to watch [HoloLens] compress everything down so it can fit on your head, and you know how big workstations are – you can’t wear a workstation. At least not comfortably. So that demands a level of creativity from my team and the team that works with me. But the rewards for that trade-off – it allows us to do other things.

So, yeah, I would say every platform that we work with, it’s a whole new set of challenges to choose to emphasize certain things.

Imaging With Curiosity

TH: How quickly can the Curiosity Rover get you images?

JN: Pretty much every day. We command it to do a whole day, or between one and three days, of things at a time. And the rover quickly communicates with us every day, and we get a large number of images from it every day. Every image that arrives, we automatically generate a completely new 3D model that you’re walking around in.

Alex Menzies: What we’ll do is we’ll drive [the rover] to a location and it will take a panorama. It’s really high-resolution up close, but as we get into the distance, it will drop off dramatically. A lot of times we’ll use orbital data there because it’s actually [a] higher resolution than the surface data.

It’s like a cone that is expanding, resolution is dropping off exponentially, whereas with orbital data, it’s constant everywhere. [However] the orbital image will oftentimes be very crisp, so we weigh these sorts of decisions: Which gives people the best sort of imagery?
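A rough way to picture the trade-off Menzies describes: the ground area covered by one rover-camera pixel grows with distance, while an orbital image has roughly the same ground resolution everywhere. The Python sketch below is only an illustration under stated assumptions. The function names, the per-pixel viewing angle (`ifov_rad`), and the orbital resolution are hypothetical, not figures from JPL, and the small-angle model ignores the extra loss from viewing flat ground at a grazing angle.

```python
def surface_footprint_m(range_m: float, ifov_rad: float) -> float:
    """Approximate ground width covered by one rover-camera pixel.

    Small-angle model: footprint = range * angle subtended by one pixel.
    """
    return range_m * ifov_rad


def best_source(range_m: float, ifov_rad: float, orbital_m_per_px: float) -> str:
    """Pick whichever data source has the finer ground resolution at this range."""
    surface = surface_footprint_m(range_m, ifov_rad)
    return "surface" if surface <= orbital_m_per_px else "orbital"


def crossover_range_m(ifov_rad: float, orbital_m_per_px: float) -> float:
    """Range beyond which the (constant) orbital resolution wins."""
    return orbital_m_per_px / ifov_rad


# Hypothetical values chosen only for illustration.
IFOV_RAD = 3e-4           # angle seen by one rover-camera pixel
ORBITAL_M_PER_PX = 0.3    # ground resolution of the orbital image

if __name__ == "__main__":
    for r in (10.0, 500.0, 5000.0):
        print(r, best_source(r, IFOV_RAD, ORBITAL_M_PER_PX))
```

With these made-up numbers, surface imagery is preferred near the rover and orbital imagery takes over in the far distance, which matches the kind of decision Menzies says the team weighs.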

In that way it’s also sort of interesting, because we can give the scientist a better sort of view than just the still images that we’re taking. [We can] really pull in the very best data from everywhere. And we can do really interesting things: If I click on a picture of a rock or I drop a marker inside OnSight on a rock, we can pull up every other image of that rock that was ever taken from any angle, including the orbital spacecraft, so it’s a really powerful tool for scientists.

TH: Does Curiosity constantly scan the surface with sensors, then?

JN: No, in our case we are taking pictures, and then we derive range data using a process called stereo correlation – similar to how our eyes work. We look at two images, find the differences between the two images, and using a model of the camera, we figure out how far away every point is. So the range maps are derived from the pictures.

AM: Right, as soon as those images hit the ground, they go through the normal image processing pipelines, stereo vision and things like that, and then they get uploaded to our cloud, where we do the intensive processing: we take all of the shapes detected from the stereo images and build a surface out of them, and then we basically paint that with the images, and it’s all real data.
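The idea behind stereo correlation can be sketched in a few lines of Python. This is a toy example, not JPL's pipeline: it assumes an idealized, rectified pair of image rows, matches a small window by sum of absolute differences, and converts the resulting pixel shift (disparity) to range with the standard pinhole relation range = focal length × baseline / disparity. The function names and the focal length and baseline values are hypothetical.

```python
def match_disparity(left_row, right_row, x, half_window=2, max_disparity=16):
    """Find the pixel shift that best aligns a window around x in the left
    row with the right row (lowest sum of absolute differences)."""
    lo, hi = x - half_window, x + half_window + 1
    patch = left_row[lo:hi]
    best_d, best_cost = 0, float("inf")
    for d in range(max_disparity + 1):
        if lo - d < 0:          # window would fall off the image
            break
        candidate = right_row[lo - d:hi - d]
        cost = sum(abs(a - b) for a, b in zip(patch, candidate))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def range_from_disparity(disparity_px, focal_px, baseline_m):
    """Pinhole stereo: range = focal length * baseline / disparity."""
    if disparity_px <= 0:
        return None             # no measurable shift, range is undefined
    return focal_px * baseline_m / disparity_px


# Toy data: the same bright feature appears 4 pixels further left
# in the right-hand image than in the left-hand image.
LEFT_ROW = [0] * 10 + [5, 9, 5] + [0] * 10
RIGHT_ROW = [0] * 6 + [5, 9, 5] + [0] * 14

if __name__ == "__main__":
    d = match_disparity(LEFT_ROW, RIGHT_ROW, x=11)
    # focal_px and baseline_m are hypothetical camera parameters
    print(d, range_from_disparity(d, focal_px=1000.0, baseline_m=0.5))
```

Repeating this for every pixel gives the kind of range map Norris mentions; nearby points shift a lot between the two images, and distant points shift very little.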

TH: So you wireframe it?

AM: Right, but the thing is that these are all automatic algorithms, it’s not a human that’s going in and matching these things. We’re building a mathematically, statistically accurate model of the surface.

TH: What kind of hardware does that require? Is there image processing on board [the Rover]?

JN: It does do those kind of computations in some cases. [But] the Rover sends us the images – just images – and then we perform the process of stereo correlation on the ground.

TH: How much data is that? That seems like a lot.

AM: It’s not really that much! One of the things that constrains us is that every image you get from Mars has to be sent from the Rover through an orbiter, to Earth, to a giant 80-meter antenna (of which there are three). So really, the bandwidth is very limited – think of dial-up (speeds) or something.

Earth science missions have a much harder problem because they have colossal bandwidth and huge images – they really have huge data volume problems. Ours is really just one of computation.

TH: One of the animations in the Destination: Mars demo showed wind erosion under a rock overhang that was, in my view, just a few inches off the ground. How can the rover get the image from basically underneath a rock?

JN: Keep in mind that the scenes that you are looking at in there are observed from several different positions. In the case of that first site, we don’t even show you where the Rover was. Some people think that’s odd – why isn’t there a hole in this data where the Rover couldn’t see underneath itself?

The reason is that because we image that scene from several locations, we fuse everything together. So that rock with the overhang, there was a place where the Rover – from a distance – was able to view the rock and see that.

Playing Rendering Games

TH: Virtual reality sometimes uses foveated rendering. Did you use anything like that in the demo?

AM: I think the thing to remember with any sort of display is that you only need to render as many pixels as are visible on the screen. We use a lot of tech tricks to manage that level of detail.

One of the tricks we use is we slice up the terrain into “tiles” of different sizes. This is something called a quadtree. We have various levels of detail, so we build the terrain at a very high level of detail – at the highest level of detail that our image sensors and stereo data products allow us to do – but then we produce lower and lower and lower resolution versions going up. For the tiles that are nearby, we can provide you [with the] highest level terrain that is possible for the display to handle – by which I mean to saturate the pixels on the display, so we can give you the maximum resolution available close, and then for things that are further away, the angular resolution drops off as it goes into the distance.

What’s cool about this is that even though we’re rendering a lower-quality mesh that is actually further away, it appears just as crisp. And if you move toward it, then we’re able to swap in the higher-resolution textures.
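The quadtree level-of-detail scheme Menzies outlines can be sketched as follows. This is a minimal Python illustration under assumptions, not the OnSight implementation: the `Tile` class, the function names, the coarsest texel size, and the angular size of one display pixel are all hypothetical. The rule is simply to keep subdividing a tile until one of its texels would look no bigger than one display pixel from where the viewer stands.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Tile:
    x: float            # lower-left corner
    y: float
    size: float         # edge length in meters
    level: int          # 0 = coarsest; each level halves the texel size
    children: list = field(default_factory=list)


def build(x, y, size, level, max_level):
    """Build a full quadtree down to max_level."""
    tile = Tile(x, y, size, level)
    if level < max_level:
        half = size / 2
        tile.children = [build(x + dx * half, y + dy * half, half, level + 1, max_level)
                         for dy in (0, 1) for dx in (0, 1)]
    return tile


def distance_to_tile(tile, viewer):
    """Distance from the viewer to the nearest point of the tile (0 if inside)."""
    dx = max(tile.x - viewer[0], 0.0, viewer[0] - (tile.x + tile.size))
    dy = max(tile.y - viewer[1], 0.0, viewer[1] - (tile.y + tile.size))
    return math.hypot(dx, dy)


def select_tiles(tile, viewer, base_texel_m, pixel_angle_rad):
    """Return the coarsest set of tiles whose texels still look no larger
    than one display pixel from the viewer's position."""
    distance = max(distance_to_tile(tile, viewer), 1e-6)
    texel_m = base_texel_m / (2 ** tile.level)
    if texel_m / distance <= pixel_angle_rad or not tile.children:
        return [tile]
    selected = []
    for child in tile.children:
        selected.extend(select_tiles(child, viewer, base_texel_m, pixel_angle_rad))
    return selected


if __name__ == "__main__":
    root = build(0.0, 0.0, 1024.0, 0, max_level=4)
    # base_texel_m and pixel_angle_rad are hypothetical values
    tiles = select_tiles(root, (1.0, 1.0), base_texel_m=8.0, pixel_angle_rad=0.001)
    print(len(tiles), sorted({t.level for t in tiles}))
```

Tiles near the viewer end up at the finest level, while distant ones stay coarser yet still cover the same number of screen pixels, which is why the far terrain "appears just as crisp."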

TH: Is there any latency? Like, if I sprinted toward the wall [in the demo], would I be able to catch the tiles at lower resolutions?

AM: Probably not. It will keep up with you. The latency is on the order of the frame rate, so currently 60 fps, and we selectively swap the tiles when they’re far enough away [so] that you can’t really distinguish the difference between the high quality tile and the low quality tile. It’s already changed by the time you get close enough to notice it. It’s slight little pops, but minor.

TH: And this is all at 60 fps?

AM: I believe so. Certainly the tile updates, we can do at that frame rate.

TH: So for whatever is behind me, is it the same process? Is it being rendered at all, or is it only activated when I turned my head around?

AM: We are being very clever. The other nice thing about those tiles is that we are being selective; they are appropriate sizes, so anything that is not visible in your field of view, we can remove.

The other thing we do is pre-compute what areas are visible, so that also means that when you have a nearby hill, we don’t have to render all the things that are behind that hill, so you can get a really long way by being smart and only trying to draw the things that are immediately visible.

There’s a thing called a frustum. It’s basically like a pyramid coming out, and it’s everything the display sees, so you can imagine every single tile has a box around it, and we just look for the geometric intersection between that vision cone coming out and the boxes that are around.
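The box-versus-frustum check Menzies describes is a common graphics technique, and a minimal version looks like the Python sketch below. It is an illustration under assumptions rather than the demo's code: the frustum is hard-coded for a hypothetical camera at the origin looking down +z with a 90-degree field of view, and the function names are made up. Each frustum face is a plane; a tile's bounding box is discarded as soon as it lies entirely behind any one plane.

```python
def make_frustum(near=0.1, far=100.0):
    """Planes as ((nx, ny, nz), d); a point p is inside when n.p + d >= 0.

    Hypothetical camera: at the origin, looking down +z, 90-degree field
    of view horizontally and vertically, so the side faces are |x| <= z
    and |y| <= z.
    """
    return [
        ((0.0, 0.0, 1.0), -near),   # near:   z >= near
        ((0.0, 0.0, -1.0), far),    # far:    z <= far
        ((1.0, 0.0, 1.0), 0.0),     # left:   x >= -z
        ((-1.0, 0.0, 1.0), 0.0),    # right:  x <= z
        ((0.0, 1.0, 1.0), 0.0),     # bottom: y >= -z
        ((0.0, -1.0, 1.0), 0.0),    # top:    y <= z
    ]


def box_intersects_frustum(box_min, box_max, planes):
    """Conservative test for an axis-aligned box against the frustum.

    For each plane, check the box corner that lies furthest along the
    plane normal. If even that corner is behind the plane, the whole box
    is outside. Boxes near frustum corners may occasionally be kept even
    though they are not visible, which is harmless for culling.
    """
    for (nx, ny, nz), d in planes:
        px = box_max[0] if nx >= 0 else box_min[0]
        py = box_max[1] if ny >= 0 else box_min[1]
        pz = box_max[2] if nz >= 0 else box_min[2]
        if nx * px + ny * py + nz * pz + d < 0:
            return False
    return True


if __name__ == "__main__":
    planes = make_frustum()
    print(box_intersects_frustum((-1, -1, 5), (1, 1, 6), planes))    # in front of the camera
    print(box_intersects_frustum((-1, -1, -6), (1, 1, -5), planes))  # behind the camera
```

Only tiles whose boxes pass this test need to be submitted for drawing on a given frame.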

TH: That renders at frame rate too, then?

AM: Yes.

TH: Very clever. I love that.

AM: And part of that, too, is when we pre-compute all of the things that are visible from a particular location. We can immediately remove all the things that we know are not visible, and then that reduces the number of things that we have to check against the cone. That’s a trick we use to make that go faster.
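The interview does not say how the visibility pre-computation is done, so the following Python sketch is purely an assumption-laden illustration of the general idea. It uses a one-dimensional terrain profile and a simple horizon scan: walking away from the viewer, a terrain sample is visible only if it rises above the steepest sight line seen so far, so anything hidden behind a hill drops out. All names and the eye height are hypothetical.

```python
def visible_columns(heights, viewer_index, eye_height=1.5):
    """Indices of terrain samples visible from the viewer along a 1-D profile."""
    eye = heights[viewer_index] + eye_height
    visible = {viewer_index}
    for step in (1, -1):                 # scan right, then left
        max_slope = float("-inf")
        i = viewer_index + step
        while 0 <= i < len(heights):
            slope = (heights[i] - eye) / abs(i - viewer_index)
            if slope > max_slope:        # rises above the current horizon
                visible.add(i)
                max_slope = slope
            i += step
    return visible


def precompute_visibility(heights, eye_height=1.5):
    """Offline step: the visible set for every possible viewer position."""
    return {i: visible_columns(heights, i, eye_height) for i in range(len(heights))}


def tiles_to_test(pvs, viewer_index, in_view):
    """Per-frame step: run the view test only on precomputed candidates.

    `in_view` stands in for a frustum check and is supplied by the caller.
    """
    return sorted(i for i in pvs[viewer_index] if in_view(i))


# Toy profile: a 10 m hill at index 3 hides the flat ground behind it,
# but a taller 20 m peak at index 6 still pokes above the horizon.
HEIGHTS = [0, 0, 0, 10, 0, 0, 20]

if __name__ == "__main__":
    pvs = precompute_visibility(HEIGHTS)
    print(sorted(pvs[0]))
    print(tiles_to_test(pvs, 0, in_view=lambda i: i >= 2))
```

Because the visible sets are built ahead of time, the per-frame work shrinks to a table lookup followed by the cheaper view test on a much shorter list.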

TH: If I took off sprinting around another ridge, it’s possible the model doesn’t want me to see that...I would just walk off into space?

AM: No, it will still render it before you get there.

VR And AR Experiences As “Books”

AM: We were able to land on this really exciting place on Mars, and when you look around at the rocks and realize how jagged and different [the landscape is] and you see all [of] these giant dunes and these huge mountains, Mars stops being a thing and starts being a place. And [it’s a] place you can go to, hang out there.

I think with VR and AR, places and times are like books. You can take them off the shelf any time you want and go visit them, and what you do there is sort of up to you.

TH: It’s so cool that looking at Mars is your job!

AM: Yeah, for the last while here, the first thing I do [in the morning] is grab my coffee, check my email, and live on Mars for a little while, and write a little code and come back in time for dinner with my lovely wife and two cats.

Earth is a pretty nice place. But what’s brilliant is that you can go to Mars today, and tomorrow go to any place we’ve sent spacecraft to scan that location. So I think it is a real democratization of space exploration where everyone gets to go.

Seth Colaner is the News Director for Tom's Hardware. Follow him on Twitter @SethColaner. Follow us on Facebook, Google+, RSS, Twitter and YouTube.

Seth Colaner previously served as News Director at Tom's Hardware. He covered technology news, focusing on keyboards, virtual reality, and wearables.
