Google's Big Chip Unveil For Machine Learning: Tensor Processing Unit With 10x Better Efficiency (Updated)

While other companies are arguing about whether GPUs, FPGAs, or VPUs are better suited for machine learning, Google came out with the news that it has been using its own custom-built Tensor Processing Unit (TPU) for over a year, achieving a claimed 10x increase in efficiency. The comparison is most likely against GPUs, which are currently the industry-standard chips for machine learning.

Tensor analysis is an extension of vector calculus, and it underlies Google's TensorFlow framework for machine learning, which was recently released as open source.
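To make "tensor calculations" concrete, here is a minimal Python sketch of a fully connected neural-network layer. It is a tensor contraction (a sum of products over a shared index) followed by an activation function. This is a generic illustration that assumes a ReLU activation; `dense_layer` is a hypothetical helper, not TensorFlow's API and not a description of the TPU's internals.

```python
# Hypothetical example: a generic dense layer, not Google's or TensorFlow's code.
# It shows the kind of tensor operation (a contraction over a shared index)
# that dominates neural-network workloads.

def dense_layer(weights, inputs, bias):
    """Compute y[i] = relu(sum_j weights[i][j] * inputs[j] + bias[i])."""
    outputs = []
    for row, b in zip(weights, bias):
        # Multiply-accumulate over index j: the kind of operation an
        # ML accelerator is built to repeat as fast as possible
        acc = sum(w * x for w, x in zip(row, inputs)) + b
        outputs.append(max(0.0, acc))  # ReLU activation
    return outputs

W = [[0.5, -1.0, 2.0],
     [1.5, 0.0, -0.5]]
x = [1.0, 2.0, 3.0]
b = [0.1, -0.2]
print(dense_layer(W, x, b))
```

A chip that only has to do this well can skip most of the control logic a general-purpose processor needs, which is the trade-off the article describes.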

The new Tensor Processing Units, as you might expect, are designed specifically to do only tensor calculations, which means the company can dedicate more of the chip's transistors to doing one thing well, achieving higher efficiency than other types of chips.

This class of chips is called ASICs (application-specific integrated circuits). They have been used, for instance, in wireless modems such as Nvidia's Icera modem and in Bitcoin mining rigs, where they deliver orders of magnitude higher efficiency than GPUs and FPGAs.

Movidius said that Google's TPU philosophy is more in line with what it has been trying to achieve with its Myriad 2 vision processing unit (VPU): squeezing out more operations per watt (ops/W) than is possible with GPUs.

"The TPU used lower precision of 8 bit and possibly lower to get similar performance to what Myriad 2 delivers today. Similar to us they optimized for use with TensorFlow," said Dr. David Moloney, Movidius' CTO. "We see this announcement as highly complementary to our own efforts at Movidius. It appears Google has built a ground-up processor for running efficient neural networks in the server center, while Myriad 2 is positioned to do all the heavy lifting when machine intelligence needs to be delivered at the device level, rather than the cloud," he added.
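Dr. Moloney's point about 8-bit precision can be illustrated with a minimal Python sketch of symmetric linear quantization: floats are scaled into the signed 8-bit range, multiplied and accumulated as integers, then scaled back. This is a generic textbook scheme chosen for illustration; the article does not describe the TPU's actual number format, and `quantize` and `int8_dot` are hypothetical names.

```python
# Hypothetical sketch of symmetric 8-bit quantization. It illustrates the
# general idea of "lower precision of 8 bit"; it is NOT the TPU's or
# Movidius' actual scheme, which the article does not describe.

def quantize(values, num_bits=8):
    """Map floats to signed ints in [-(2**(b-1) - 1), 2**(b-1) - 1] with one shared scale."""
    qmax = 2 ** (num_bits - 1) - 1                  # 127 for 8 bits
    max_abs = max(abs(v) for v in values) or 1.0    # avoid division by zero on all-zero input
    scale = max_abs / qmax
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale

def int8_dot(a, b):
    """Dot product computed with integer multiply-accumulate, then rescaled to float."""
    qa, sa = quantize(a)
    qb, sb = quantize(b)
    # Integer MACs; hardware typically accumulates into a wider (e.g. 32-bit) register
    acc = sum(x * y for x, y in zip(qa, qb))
    return acc * sa * sb

a = [0.12, -0.53, 0.98, 0.07]
b = [0.44, 0.31, -0.25, 0.90]
exact = sum(x * y for x, y in zip(a, b))
approx = int8_dot(a, b)
print(f"float: {exact:.4f}  int8: {approx:.4f}  error: {abs(exact - approx):.4f}")
```

The small error relative to the full-precision result is the trade-off: 8-bit integer multipliers are far smaller and use much less energy than 32-bit floating-point units, so many more of them fit on a chip.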

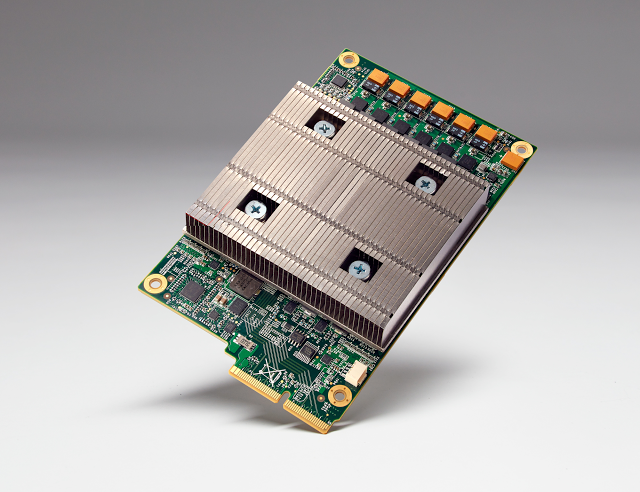

Google claimed in a blog post that its TPU could achieve an order of magnitude better efficiency for machine learning, which would be equivalent to about seven years of progress following Moore’s Law. Google said that a TPU board could fit inside a hard disk drive slot in its data centers.
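The "seven years" figure is consistent with simple arithmetic, assuming Moore's Law doubles transistor density (and, loosely, efficiency) about every two years. The doubling period $T$ is our assumption; the article does not state which period Google used.

$$10 = 2^{t/T} \;\Rightarrow\; t = T \log_2 10 \approx 3.32\,T \approx 6.6 \text{ years for } T = 2 \text{ years}$$

That rounds to roughly seven years, or a little over three doublings.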


Google also revealed for the first time that TPUs powered not only its Street View product but also AlphaGo in its Go matches against Lee Sedol. When the company previously talked about AlphaGo, it mentioned using only CPUs and GPUs, although that was months before the Go matches happened. Google said the TPUs let AlphaGo “think” much faster and look further ahead between moves.

Google can now use TPUs not just to improve its own products but also to offer that jump in performance to machine learning customers. The company may now be able to best the competition in this market, because rivals may still offer only GPU-based or FPGA-based machine learning services.

ASICs, unlike FPGAs, are hard-coded and cannot be reprogrammed in the field. This inflexibility deters many companies from deploying the specialized processors at large scale. However, Google indicated that it went from first-tested silicon to a production environment in a mere 22 days. This rapid ramp suggests that Google can develop and deploy other optimized ASICs on an accelerated timeline in the future.

Google’s TPU could now change the landscape for machine learning, as more companies may be interested in following the same path to achieve the same kind of performance and efficiency gains. Google has also been dabbling with quantum computers, as well as the OpenPower and RISC-V chip architectures.

The Intel Xeon family powers 99 percent of the world's datacenters, and there are long-running rumors that Google is developing its own CPU to break the Intel stranglehold. The Google TPU may be a precursor of yet more to come. Google's well-publicized exploratory work with other compute platforms spurred Intel to begin offering customized Xeons for specific use cases. It will be interesting to watch the company's future efforts in designing its own chips, and the impact on the broader market.

Updated, 5/19/2016, 10:58am PT: The post was updated to add Movidius' comments on Google's TPU announcement.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security. You can follow him at @lucian_armasu.


clonazepam

Do you want Skynet? Because that's how you get Skynet!

Ooh I've got one.

But can it learn to play Crysis? And now we've gone full circle.

That'd be pretty cool to see Google, which was at one time nothing more than a search engine... develop everything (I was going to do a list, but laziness, and 'everything' seems plausible at this point).

ZolaIII

Anyone with at least a little brains would see how ASICs aren't the way to go in the future. A couple of reasons, to begin with: fixed logic (not programmable) combined with the absence of perfected algorithms; and as much as the days of specialized accelerators are coming, it would still be very inefficient (in power, cost, and die size) to have one of those for each and every task. Compared to the latest generation of ADSPs, this is really only a little more efficient (cost- or performance-wise). On the other hand, ASICs will always be two steps behind, lagging a generation or two in implementing the latest algorithms, which is not a very good solution because they can't be upgraded. ASICs are OK, but only for simple fixed-function logic that won't need any future development. They certainly aren't for something that should be as flexible as it can be and that should be able to evolve on its own. Current so-called deep machine learning AI is at the level of reflex behavior, and that certainly isn't intelligence. In order for it to evolve, it needs fully open pathways. The only suitable hardware platform for it, therefore, is a large-area FPGA (of programmable gates) of modern design that actually integrates an ADSP (with a SIMD block) and that is fully reprogrammable and capable of executing multiple tasks simultaneously. I won't even waste words on how unsuitable GPUs are for this task.

Google's fat bottom girls are getting really lazy, and if they continue like this they won't last much longer.

wifiburger

This seems viable for next-gen gaming. With a bit of modification, we could have some interesting learning AI without wasting CPU time.

Sony should integrate something similar in their future PlayStation. I know I would buy it :-)

kenjitamura

> and there are long-running rumors that Google is developing its own CPU to break the Intel stranglehold.

Putting that in there is just plain cruel. We all know that Google will not be making CPUs to challenge AMD or Intel in the foreseeable future.

bit_user

> ZolaIII said:
> Anyone with at least a little brains would see how ASICs aren't the way to go in the future.

Do you really think Google doesn't know what they're doing? Their blog post said they have over 100 teams that are using machine learning. This is a problem they understand very well, and I'm sure they calculated the economic benefit of having such an ASIC before they invested the millions of dollars needed to design, manufacture, and deploy it.

And because they're Google, they can make one ASIC that's designed to accelerate today's workloads. As soon as that's debugged and starting to roll out, they can begin designing another ASIC to tackle the next generation of machine learning problems. That's how it "evolves" to meet future needs.


jamiefearon

Is it possible to turn this into a PCI Express expansion card that will work with current PCs?

bit_user

> kenjitamura said:
> and there are long-running rumors that Google is developing its own CPU to break the Intel stranglehold.
>
> Putting that in there is just plain cruel. We all know that Google will not be making CPUs to challenge AMD or Intel in the foreseeable future.

Definitely agree. Lucian is extrapolating unreasonably here. Designing an ASIC to calculate with tensors is far simpler than developing a leading-edge CPU. And I think it'd be really hard to do it with sufficiently better perf/Watt to justify the investment over just partnering with Intel/AMD/Qualcomm/etc. I mean, we're talking hundreds of millions of dollars to design a single CPU. Are the margins on existing CPUs that high for a huge customer like Google?

And the article is potentially off the mark on another point: when Xeon D was launched, I read a detailed article (on another site) about how Intel worked with Facebook to create the Xeon D. The problem they had was that the perf/Watt of the E5 Xeon series wasn't improving at the same rates as Facebook's workload was increasing. And the Silvermont Atom-based server CPUs were slow enough that they exceeded FB's latency requirements (ARM: are you listening?). So, they basically power-optimized a multi-core Broadwell.

I'm sure Intel also talked to Google about it, but I don't know if it was the same level of collaboration as they had with FB.


bit_user

> jamiefearon said:
> Is it possible to turn this into a PCI Express expansion card that will work with current PCs?

I think Google would rather sell you time on its TPU-equipped servers in the cloud than get into the business of selling hardware to end users.

For hardware in your PC, GPUs aren't bad. This year, Intel is supposed to introduce a line of Xeons with built-in FPGAs that could be configured to do something comparable to (though maybe smaller than) Google's ASIC. I'm hoping that capability will trickle down to consumer CPUs in coming generations.

I'd love to know the size of that ASIC. I wouldn't be surprised if it were really big and low-clocked, because they can afford a higher up-front manufacturing cost to offset lower lifetime operating costs.
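The intuition behind a big, low-clocked chip can be sketched with the textbook approximation for CMOS dynamic power. This is a general relation, not anything Google disclosed about the TPU's die size or clock speed; the symbols are ours: $\alpha$ (activity factor), $N$ (number of parallel compute units), $C_u$ (switched capacitance per unit), $V$ (supply voltage), and $f$ (clock frequency).

$$P_{\text{dyn}} \approx \alpha\, N C_u\, V^2 f, \qquad \text{throughput} \propto N f, \qquad \frac{\text{energy}}{\text{operation}} \propto \frac{P_{\text{dyn}}}{N f} \approx \alpha\, C_u\, V^2$$

Energy per operation does not depend on $f$ directly, but a lower clock lets the chip run at a lower supply voltage, and energy per operation falls with $V^2$. Keeping throughput $N f$ constant while lowering $f$ means raising $N$, i.e. more silicon: a higher up-front manufacturing cost traded for lower energy use over the chip's lifetime.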