Primer: The Principles Of 3D Video And Blu-ray 3D

Today we're partnering up with the experts at CyberLink to introduce the principles underlying 3D video, how it is created, and how it's displayed. Our main interest is Blu-ray 3D, so we'll be exploring the tech your 3D-enabled home theater might include.

Encoding And Delivering 3D Video Content

The highest-quality way to encode and deliver a 3D video program is as a dual-stream, synchronized video program, with one full-quality video stream for each eye. This is how Blu-ray 3D works, storing the video for each eye as a full “Blu-ray quality” video program.

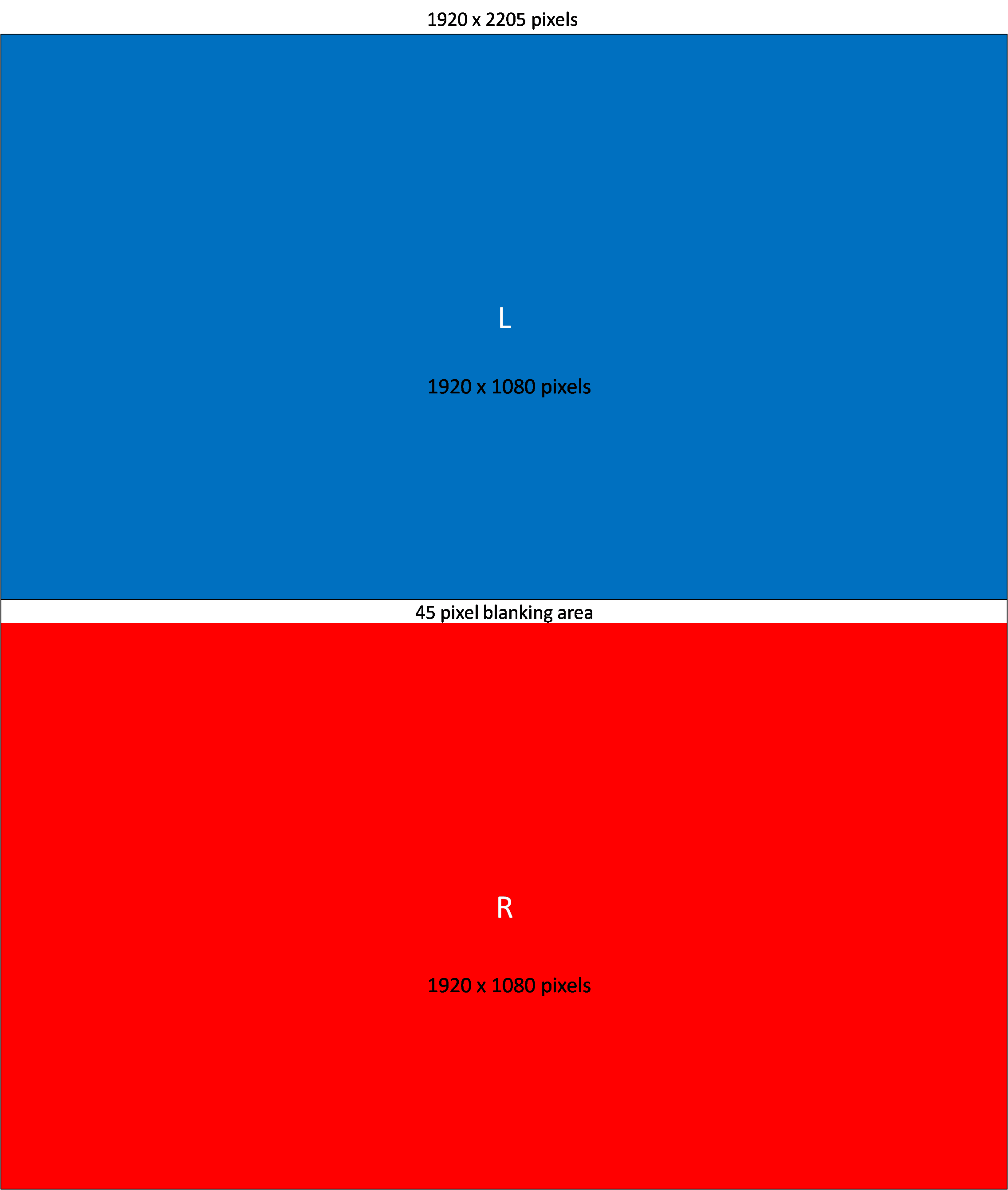

The HDMI 1.4 specification provides for 3D stereoscopic video to be delivered in several different ways, including over/under-formatted frames that are 1920 pixels wide and 2205 pixels high. The frames for the left eye and right eye are delivered together to ensure that synchronization is maintained, even if the signal is momentarily lost and then restored.
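The 2205-line height can be checked with a little arithmetic. The sketch below assumes the over/under frame holds two full 1080-line pictures separated by a 45-line gap; the gap size is an assumption that is not stated in this article, and `frame_packed_height` is a hypothetical helper name.

```python
def frame_packed_height(lines_per_eye: int, gap_lines: int) -> int:
    """Total height of a frame that stacks two full-height pictures with a gap between them."""
    return lines_per_eye + gap_lines + lines_per_eye


# Assumption: a 45-line gap separates the two 1080-line pictures.
total = frame_packed_height(1080, 45)
print(total)  # 2205
```

Under that assumption, each eye keeps all 1080 lines, which is why this format carries full-quality video for both eyes.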

Compressed 3D Encoding

For compatibility with existing equipment and video standards, 3D video content can be compressed to fit in a standard video signal. There are several ways that this can be done.

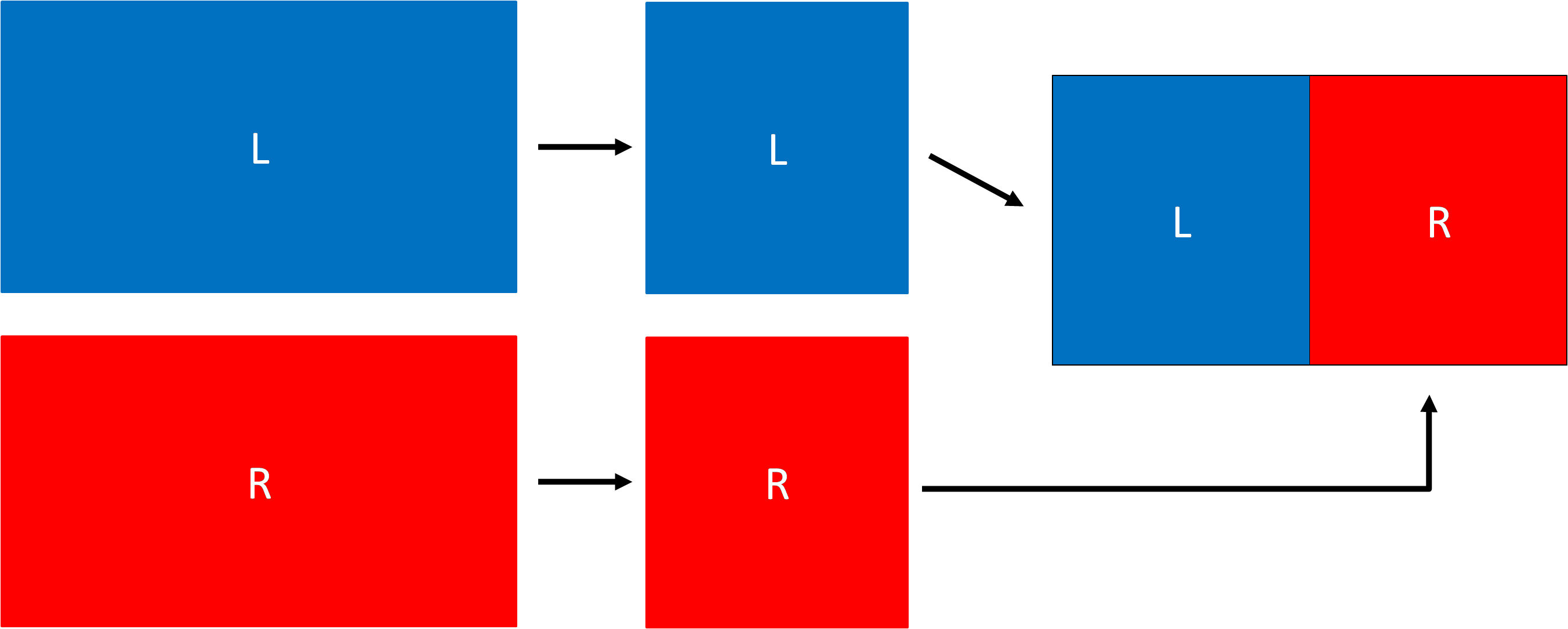

Side by Side encodes the video for each eye in half of a standard video frame (with the right eye picture on the right side of the frame). Thus, the video for each eye is stored with half of the horizontal resolution (960x1080 pixels in a standard 1080p video frame).
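As a rough illustration of Side by Side packing, the sketch below treats a frame as a list of pixel rows and keeps every other column of each picture. Dropping columns outright is a simplifying assumption (a real encoder would normally filter before downscaling), and the function names are hypothetical.

```python
def pack_side_by_side(left, right):
    """Pack two equal-size frames (lists of pixel rows) into one frame of the same size.

    Each picture keeps every other column, so each eye ends up with
    half of the horizontal resolution.
    """
    if len(left) != len(right):
        raise ValueError("frames must have the same height")
    packed = []
    for l_row, r_row in zip(left, right):
        if len(l_row) != len(r_row) or len(l_row) % 2 != 0:
            raise ValueError("rows must have the same, even width")
        # Left-eye picture on the left half, right-eye picture on the right half.
        packed.append(l_row[::2] + r_row[::2])
    return packed


def unpack_side_by_side(frame):
    """Split a packed frame back into half-width left and right pictures."""
    half = len(frame[0]) // 2
    left = [row[:half] for row in frame]
    right = [row[half:] for row in frame]
    return left, right
```

For a 1920x1080 input pair, the packed frame is still 1920x1080, and each unpacked picture is 960x1080.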

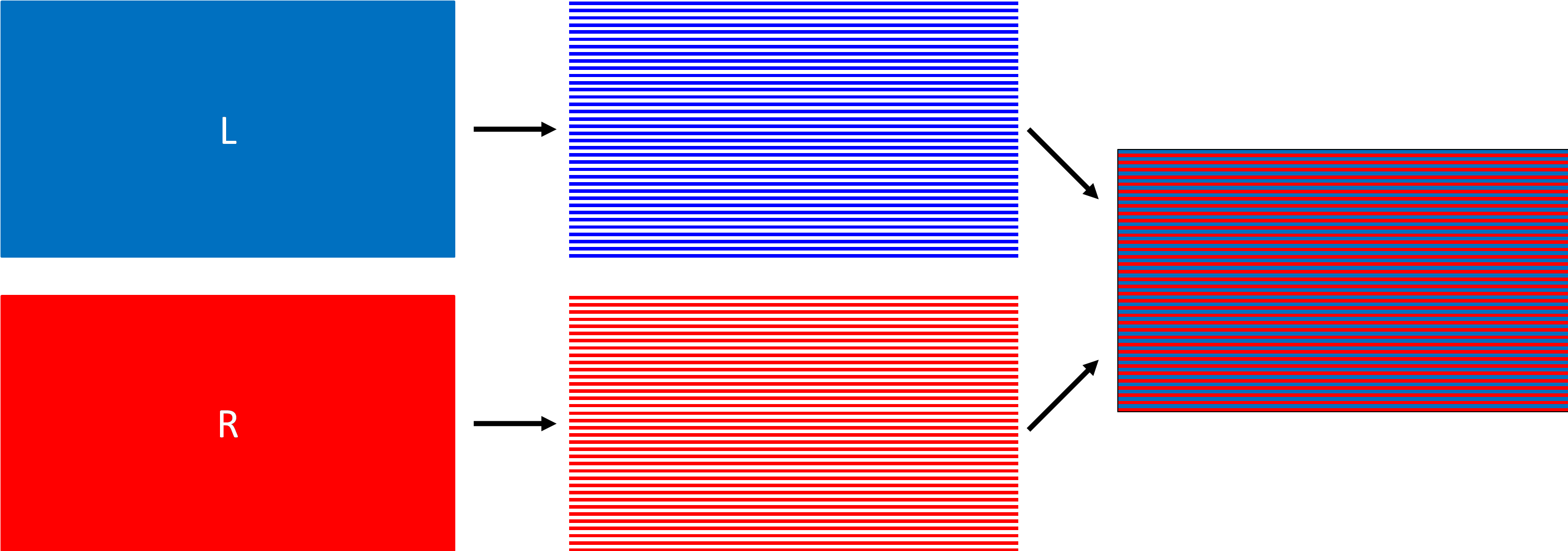

Interlaced stores the video for each eye in alternate horizontal lines. The odd lines store the picture for one eye, while the even lines store the picture for the other eye. The picture for each eye has full horizontal resolution, but half of the normal vertical resolution (1920x540 in a 1080p video frame).
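A minimal sketch of the Interlaced layout follows, again treating a frame as a list of pixel rows. The text does not say which eye gets which set of lines, so assigning the left eye to the first, third, fifth (and so on) lines is an assumption, as are the function names.

```python
def pack_row_interleaved(left, right):
    """Build one frame whose rows alternate between the two pictures.

    Rows at index 0, 2, 4, ... come from the left picture (an assumption),
    rows at index 1, 3, 5, ... come from the right picture.
    """
    if len(left) != len(right):
        raise ValueError("frames must have the same height")
    return [left[y] if y % 2 == 0 else right[y] for y in range(len(left))]


def unpack_row_interleaved(frame):
    """Recover the half-height left and right pictures from an interleaved frame."""
    return frame[0::2], frame[1::2]
```

Each row keeps its full width, so a 1080-line frame yields two pictures of 1920x540.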

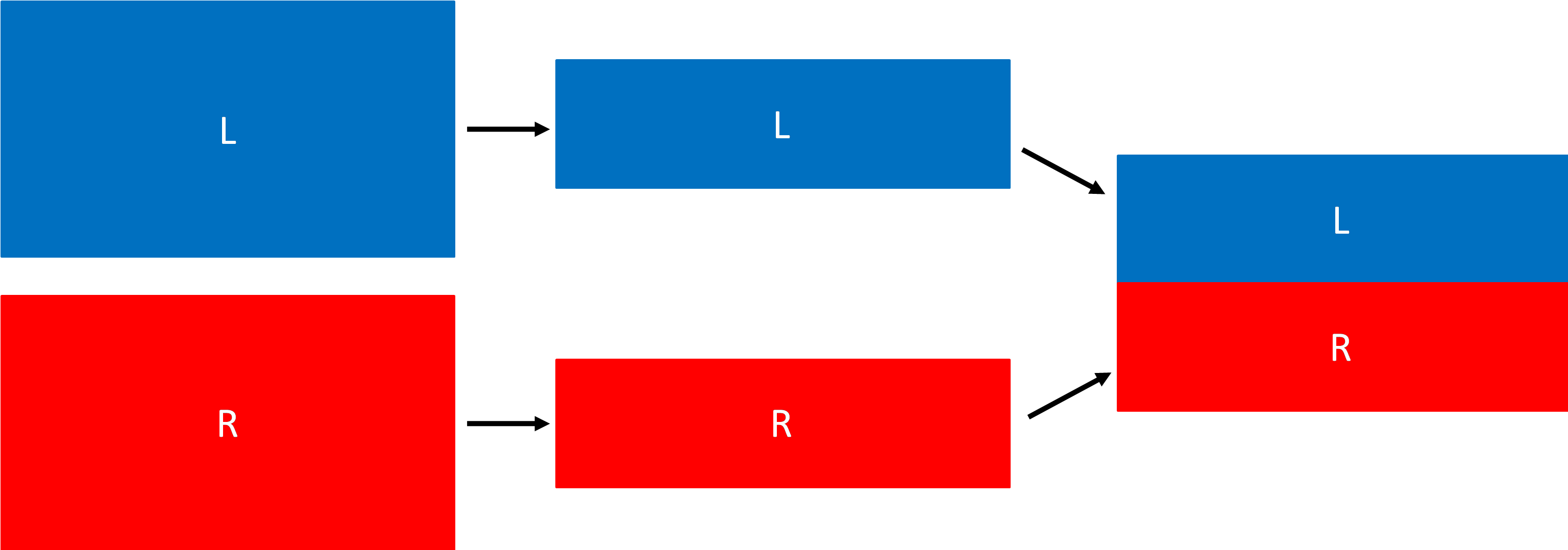

Over/Under encodes the picture for each eye at half the vertical resolution and stacks the two pictures in a single video frame. The picture for the left eye is stored in the upper half of the frame, and the picture for the right eye is stored in the lower half. As with the Interlaced format, the picture for each eye has full horizontal resolution, but half of the normal vertical resolution (1920x540 pixels for a 1080p video frame).
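The Over/Under layout can be sketched in the same spirit. Frames are lists of pixel rows; keeping every other row is a simplifying assumption standing in for proper vertical downscaling, and the function names are hypothetical.

```python
def pack_over_under(left, right):
    """Stack half-height versions of two pictures: left on top, right below."""
    if len(left) != len(right) or len(left) % 2 != 0:
        raise ValueError("frames must have the same, even height")
    # Keep every other row of each picture, then stack the two halves.
    return left[::2] + right[::2]


def unpack_over_under(frame):
    """Split a stacked frame into its upper (left-eye) and lower (right-eye) halves."""
    half = len(frame) // 2
    return frame[:half], frame[half:]
```

Unlike the line-alternating layout, each picture here occupies one contiguous block of rows within the frame.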

Displaying 3D Video

A stereoscopic 3D video contains two time-aligned video channels (one for each eye). To view 3D video, the display technology and the 3D glasses must ensure that the left eye sees only the video meant for the left eye, and the right eye sees only the video meant for the right eye. A number of different technologies are designed to accomplish this, and each has its own benefits, drawbacks, and costs.

Anaglyphic 3D

Mention 3D video and the image that comes to mind for many people is that of the familiar 3D glasses, with one red and one blue lens. These glasses use the anaglyphic method of displaying a 3D image.

Anaglyph images are created by using color filters to remove a portion of the visible color spectrum from the image meant for each eye. When viewed through the color filters in the 3D glasses, each eye sees only the image that contains the portion of the color spectrum not filtered out by its lens. The benefit of the anaglyphic method is that no special display is needed; any standard 2D display or TV can display an anaglyphic 3D image. The drawback is that overall image quality suffers, because a large portion of the color spectrum is filtered out of the image for each eye.
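The color filtering can be illustrated with a short sketch. It assumes pixels are `(red, green, blue)` tuples and that the left-eye image keeps only its red channel while the right-eye image keeps green and blue; that channel assignment is one common choice and is an assumption here, as is the name `make_anaglyph`.

```python
def make_anaglyph(left, right):
    """Combine two equal-size RGB frames into a single anaglyph frame.

    Assumption: red comes from the left-eye picture, green and blue
    come from the right-eye picture.
    """
    if len(left) != len(right):
        raise ValueError("frames must have the same height")
    return [
        [(l_px[0], r_px[1], r_px[2]) for l_px, r_px in zip(l_row, r_row)]
        for l_row, r_row in zip(left, right)
    ]
```

The quality loss described above is visible in the code: the left picture's green and blue values and the right picture's red values are simply discarded.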

JohnnyLucky Very informative primer. Lots of information that was easy to understand.

Unfortunately I am one of those who recently purchased a new TV. It will be quite some time before I upgrade.

TheGreatGrapeApe Nice article, but I think there's a few issues with regards to the overall balance of the information being put forth.

I understand the author's preference for shutter glasses (especially since it's a certain product's preferred method of choice) even if I don't share it, the major limitation is having to buy a pair for all your friends coming over, which gets impractical until they are more commonplace.

Also polarized solutions are not limited in resolution if they are set-up beyond just the example provided in this article (like they do in the theatre with dual projectors ) and may have an improving single source future with 2K and 4K displays on the horizon. It's a question of preference, but it seems like the full story wasn't explored on that subject.

Now on to a pet peeve: I love the part about "While set-top Blu-ray players will need to be replaced, PC-based Blu-ray player software can be upgraded." as a subtle product benefit plug.

Unless it's a free upgrade, you are still replacing the software, not upgrading it (it's not a plug-in), and you're likely forking out nearly the same amount of money for the 1/100th of the cost to produce that software update, so it's not like it's a major advantage. Especially when upgrading requires a FULL upgrade to the most expensive model Power DVD (version #) Ultra 3D, and I can't simply add it to my existing PowerDVD bundles thus potentially changing my backwards compatibility (Ultra 9 already removed my HD-DVD support from Ultra 7 that I upgraded on my LG HD-DVD/BR burner

, until then it's $99 (or $94.95 for loyal saps) vs $150-200, plus with the set-top route now I have a second BR-/DVD player for another room or to give to a friend (the BR software on its own is useless to give to someone else without a drive), and that's not even compared to the free PS3 upgrade.

Also can someone explain this statement;

"Blu-ray 3D video decoding solutions can be expected for ATI Radeon 5000-series graphics in the future."

Didn't Cyberlink already show their BR-3D solution on ATi hardware last year? So what's the issue?

Also why is it limited to "GeForce 300M-series mobile graphics" when often the core is the same as a previous-generation 200M series (example GTS 350M / 250M)?

And this section "Full-quality 120 Hz frame-sequential 3D video (such as Blu-ray 3D) is only supported through a High Speed HDMI cable to a HDMI 1.4-compliant TV." seems to miss the DVI dual-link to monitor option currently being used for 3D on PCs, and also the dual 1.3 input monitors/TVs.

A nice little article for people unfamiliar with 3D, but there's a subtle under-current of product preference/placement in it, and far too many generalities with little supporting information.

hixbot well done. I would have liked more detail on the HDMI 1.4 spec, specifically frame packing and the mandatory standards (no mandatory standard for 1080p60 frame packing).

also some info on AVRs and how a 1.3 HDMI AVR might pass on 3D video and still decode bitstream audio, or not - do we need 1.4 HDMI AVRs to decode audio from a 1.4 source? we shouldn't need 1.4 receivers since the audio standards haven't changed, but I'm understanding that in fact we do need new receivers. :/

ArgleBargle Unfortunately for people with heavy vision impairment (astigmatism, etc.) which require corrective lenses, such 3D technology is out of their reach for the time being, or at least next to useless. Until some enterprising company comes out with 3D "goggles", people who wear corrective lenses might as well save their money.

boletus 3D is cool, and high definition video is cool. But Sony's moving target of a BD standard is not cool, and Cyberlink's bait and switch tactics are not cool (unless you have bundles of money you can throw at them every 6-12 months). I sent back my BD disk drive (retail, with Cyberlink software) for a refund after finding out that I would have to shell out another $60-100 just so I could watch a two-year old movie. As far as I'm concerned, high definition DVD video is dead until some more open standards and reliable software emerge.

cangelini Great,

This piece is a prelude to tomorrow's coverage, by Don, of Blu-ray 3D on a notebook and a desktop. Perhaps that one will answer any of the questions you were left with here?

As for AMD, Tom and I went back and forth on this piece, and we agreed that it was critical to get AMD's feedback on Blu-ray 3D readiness. The fact of the matter is that it isn't ready to discuss the technology. It's behind.

The mention of dual-link DVI was in the first revision of this piece and removed in a subsequent iteration. I've asked the author for additional clarification there and should have an answer shortly.

cangelini So it turns out there were two sections on this and one was cut accidentally. Should be good to go now, though--dual-link DVI is discussed with PC displays!

cleeve TheGreatGrapeApe: Also can someone explain this statement; "Blu-ray 3D video decoding solutions can be expected for ATI Radeon 5000-series graphics in the future." Didn't Cyberlink already show their BR-3D solution on ATi hardware last year? So what's the issue?

It turns out the demo (I think it was at CES?) only used CPU decoding over an ATI graphics card; the Radeon did none of the decoding itself.

The Cyberlink rep tells me that Blu-ray 3D software decoding is extremely CPU-dependent and might even require a quad-core CPU. He said all four threads were being stressed under software decoding; not sure what quad-core CPU they were using, though.

Definitely something I'd like to test out in the future...

Alvin Smith This was a very informative and well written article BUT, I chose to skip to the last two pages ... Because ...

These implementations, while ever more impressive, are still being threshed out. Because of possible physiological side effects, I think I will NOT be a first adopter, with this (particular) tech (3D).

Anyone ever watch that movie "THE JERK", with STEVE MARTIN ??

= Opti-Grab =

... I can see all these class-action suits by parents of cross-eyed gamers ... hope not, tho ... I *AM* very much looking forward to the fully refined "end game", for 3D ...

Additionally, the very best desktop workstations are only just now catching up to standard (uncompressed) HD resolution ingest and edit/render ... since that bandwidth IS shared, between both eyes, this may be a non-issue.

I will let the kiddies and 1st adopters take-on all those risks and costs.

Please let me know when it is all "fully baked" and field tested!

= Alvin = (not to mention "affordable").