Nvidia Intros Cloud-Based Light Rendering Tech

Nvidia has introduced a new system for computing indirect lighting in the cloud to support real-time rendering on a user's local device. The system is called CloudLight; its abstract was posted to the research section of Nvidia's website sometime in July and is credited to Cyril Crassin, David Luebke, Michael Mara and five other Nvidia employees.

"CloudLight maps the traditional graphics pipeline onto a distributed system," the company states. "That differs from a single-machine renderer in three fundamental ways. First, the mapping introduces potential asymmetry between computational resources available at the Cloud and local device sides of the pipeline. Second, compared to a hardware memory bus, the network introduces relatively large latency and low bandwidth between certain pipeline stages. Third, for multi-user virtual environments, a Cloud solution can amortize expensive global illumination costs across users."

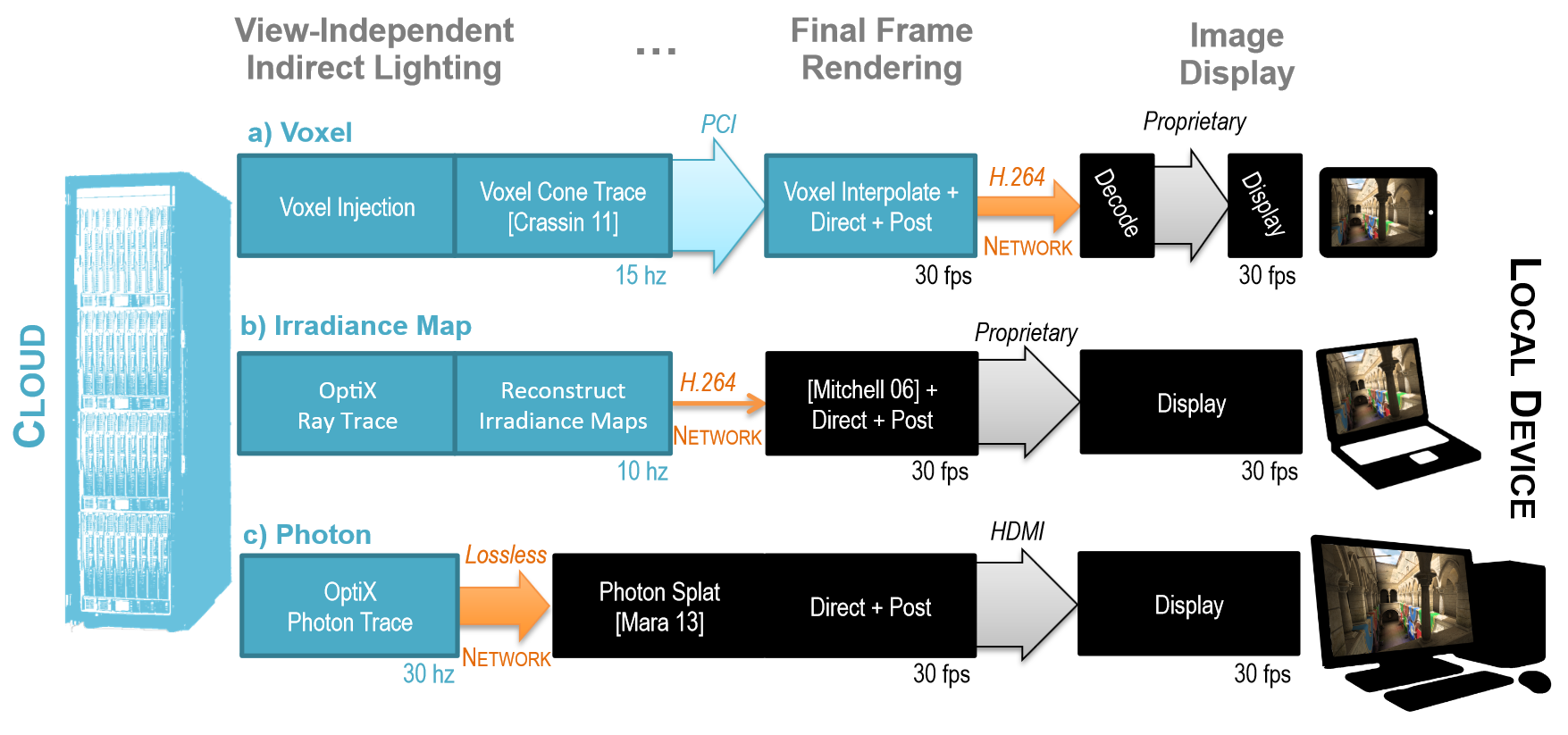

Nvidia said the new framework explores tradeoffs in different partitions of the global illumination workload between cloud and local devices, and how available network and computational power influence design decisions and image quality. The ten-page technical report, released in PDF form, describes the tradeoffs and characteristics of mapping three known lighting algorithms (voxels, irradiance maps and photons) to Nvidia's system. It also demonstrates scaling up to 50 simultaneous CloudLight users.

For the voxel approach, the system voxelizes scene geometry offline or dynamically, injects light into and filters the sparse voxel grid, traces cones through the grid to propagate lighting, and uses the cone-traced results to generate fully illuminated frames. It then encodes each frame with H.264 and sends it to the appropriate client, which decodes the H.264 stream and displays the frame. Thus, everything is rendered in the cloud, encoded with H.264 to save bandwidth, and decoded on a tablet or something smaller.
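The split between cloud and client in the voxel pipeline can be written down as a small table. This is only an illustrative sketch: the stage names paraphrase the article, and `VOXEL_PIPELINE`, `stages_on` and `network_boundary` are hypothetical names, not part of CloudLight.

```python
# Hypothetical stage table for the voxel pipeline described in the article.
# Each entry is (stage description, where it runs).
VOXEL_PIPELINE = [
    ("voxelize scene geometry", "cloud"),
    ("inject light into and filter the sparse voxel grid", "cloud"),
    ("trace cones through the grid", "cloud"),
    ("render fully illuminated frame", "cloud"),
    ("encode frame as H.264", "cloud"),
    ("decode H.264 and display", "client"),
]


def stages_on(side):
    """Return the stage descriptions that run on the given side."""
    return [name for name, where in VOXEL_PIPELINE if where == side]


def network_boundary():
    """Return the index of the first client stage, i.e. where data crosses the network."""
    for index, (_, where) in enumerate(VOXEL_PIPELINE):
        if where == "client":
            return index
    return len(VOXEL_PIPELINE)


if __name__ == "__main__":
    print("cloud stages:", len(stages_on("cloud")))
    print("client stages:", stages_on("client"))
```

With this partition the only thing that crosses the network is a compressed video frame, which is why a thin client such as a tablet is enough.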

The irradiance map system generates a globally unique texture parameterization offline and clusters texels into basis functions, also offline. The system then gathers indirect light at each basis function (or texel), reconstructs per-texel irradiance from the basis functions, encodes the irradiance maps as H.264, and transmits the maps to the client. The client decodes the data and renders direct light itself; indirect light comes from the irradiance maps. Nvidia used a notebook in this scenario, with the final frame rendering taking place on the device.
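The step of reconstructing per-texel irradiance from basis functions can be sketched as a weighted sum. The article does not say how texels are weighted against their basis functions, so the `(basis_index, weight)` pairs below are an assumption made purely for illustration, as is the function name `reconstruct_irradiance`.

```python
def reconstruct_irradiance(basis_values, texel_weights):
    """Rebuild per-texel RGB irradiance from a smaller set of basis functions.

    basis_values:  list of (r, g, b) tuples, one per basis function.
    texel_weights: for each texel, a list of (basis_index, weight) pairs.
                   This weighting scheme is an assumption, not Nvidia's method.
    """
    irradiance = []
    for weights in texel_weights:
        r = g = b = 0.0
        for basis_index, weight in weights:
            basis_r, basis_g, basis_b = basis_values[basis_index]
            r += weight * basis_r
            g += weight * basis_g
            b += weight * basis_b
        irradiance.append((r, g, b))
    return irradiance


if __name__ == "__main__":
    basis = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    weights = [[(0, 1.0)], [(0, 0.5), (1, 0.5)]]
    print(reconstruct_irradiance(basis, weights))
```

The point of the basis functions is that indirect light is gathered at far fewer locations than there are texels, and the cheap reconstruction fills in the rest.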

Finally, there are photons. The photon map implementation traces photons using a cloud-based ray tracer. It then transfers a bit-packed encoding of photons to clients; old photon packets on the client are expired and replaced with new ones. Photons are scattered into the client's view to accumulate indirect light, and the system sums indirect light with locally computed direct illumination. Photon reconstruction requires a powerful client, the company said, hence Nvidia's use of a desktop PC as an example for photon maps.
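The client side of the photon approach can be sketched roughly as follows. Everything concrete here is an assumption for illustration: the 9-byte photon layout in `PHOTON_FORMAT`, the keep-the-newest-N expiry policy, and the names `ClientPhotonCache` and `shade` do not come from Nvidia's report, which the article only summarizes as "bit-packed" photons with old packets expired.

```python
import struct
from collections import OrderedDict

# Assumed layout: quantized x, y, z (16 bits each) plus RGB power (8 bits each).
PHOTON_FORMAT = "<3H3B"


def pack_photons(photons):
    """Pack (x, y, z, r, g, b) integer tuples into one bytes payload."""
    return b"".join(struct.pack(PHOTON_FORMAT, *photon) for photon in photons)


def unpack_photons(payload):
    """Inverse of pack_photons."""
    return list(struct.iter_unpack(PHOTON_FORMAT, payload))


class ClientPhotonCache:
    """Keeps only the most recent packets; older ones are expired (assumed policy)."""

    def __init__(self, max_packets):
        self.max_packets = max_packets
        self.packets = OrderedDict()

    def receive(self, packet_id, payload):
        self.packets[packet_id] = unpack_photons(payload)
        while len(self.packets) > self.max_packets:
            self.packets.popitem(last=False)  # drop the oldest packet

    def live_photons(self):
        return [p for packet in self.packets.values() for p in packet]


def shade(direct, indirect):
    """Sum locally computed direct light with accumulated indirect light, per channel."""
    return tuple(d + i for d, i in zip(direct, indirect))


if __name__ == "__main__":
    cache = ClientPhotonCache(max_packets=2)
    for packet_id in (1, 2, 3):
        cache.receive(packet_id, pack_photons([(packet_id, 0, 0, 255, 255, 255)]))
    print(sorted(cache.packets))
```

Because the client has to scatter the live photons into its own view every frame, the work left on the device is much heavier than decoding video, which matches the article's note that a desktop PC was used for this mode.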

For more information about Nvidia's new CloudLight system, head here. To see CloudLight in action on all three form factors, check out the video below.


Kevin Parrish has over a decade of experience as a writer, editor, and product tester. His work focused on computer hardware, networking equipment, smartphones, tablets, gaming consoles, and other internet-connected devices. His work has appeared in Tom's Hardware, Tom's Guide, Maximum PC, Digital Trends, Android Authority, How-To Geek, Lifewire, and others.


hoofhearted I hate the term cloud. Why can't we just call it what it has been, distributed? Far more meaningful a term. Cloud sounds like something you leave behind after you fart.

joneb I'm not really sure exactly what all this information is getting at because it mentions incredibly high latency in some cases which is unacceptable as far as my knowledge goes for many games. The only demonstration of acceptable performance in the video is for 0 latency. I mean it all looked fine but latency still affects gameplay quality and response time. Or am I missing something?

SGTgimpy (quoting joneb): I'm not really sure exactly what all this information is getting at because it mentions incredibly high latency in some cases which is unacceptable as far as my knowledge goes for many games. The only demonstration of acceptable performance in the video is for 0 latency. I mean it all looked fine but latency still affects gameplay quality and response time. Or am I missing something?

You’re missing something. They were demonstrating the tech to show even at high latencies it still works.

Also this is nothing new and this type of distributed offloading has been around for years. It is how super-computers are used to process huge tasks and deliver the finished product back to your workstation. They are just using it to offload some of the graphics workload now.


Estix Based on the title, I was expecting to see a lighting engine that let clouds partially/fully obscure the sun. I was rather disappointed.

cats_Paw Latency seems to work fine when there is no user input (watching something not actually playing) but when you add human input, the latency will be doubled and the delay will be unbearable. We are too far away in internet hardware hardlines yet for this to be an effective option.