Intel's New 520 Series SSD Codenamed "Cherryville"

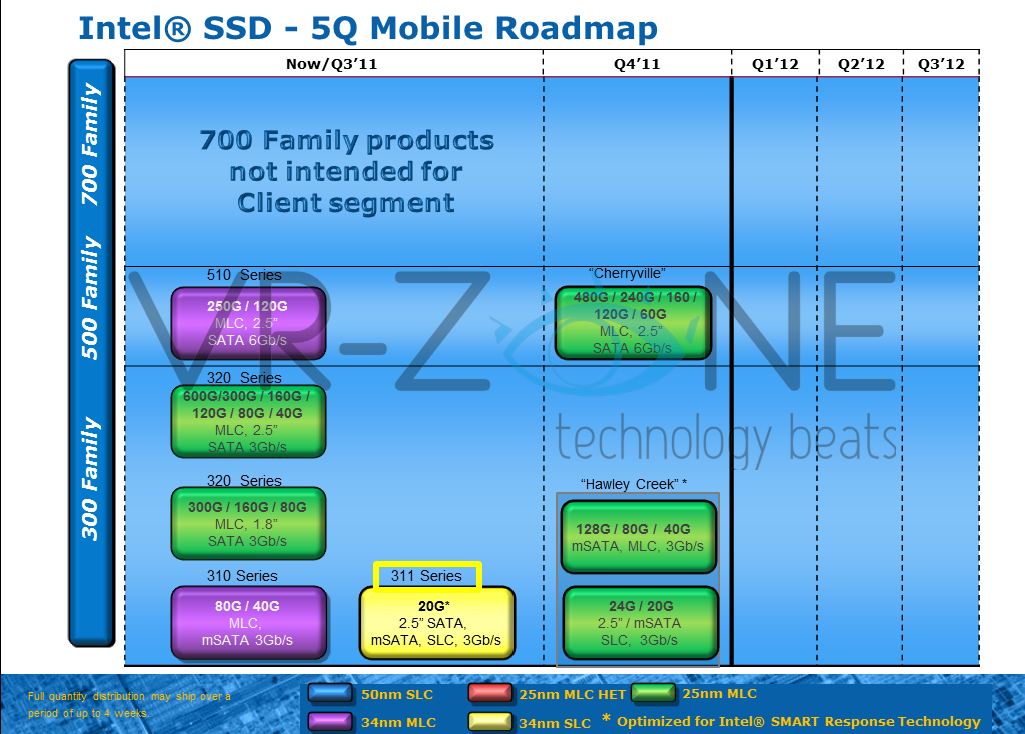

Intel continues to establish itself as a leader in the SSD market with its planned successor to the popular 510 Series SSD: the 520 Series SSD, codenamed "Cherryville".

"Cherryville", Intel's new high-end 520 Series SSD, is a 2.5-inch SATA 6 Gb/s drive that comes in 60 GB, 120 GB, 160 GB, 240 GB, and 480 GB capacities (versus just 120 GB and 240 GB with the 510 Series). The drive will use 25 nm multi-level cell (MLC) NAND flash memory made by Intel and supports TRIM, SMART, and NCQ, along with ACS-2 compliance. Intel appears to be preparing to take on the SandForce SF-22xx SSDs at each capacity level and price point.

Initial performance information on "Cherryville" points to sequential speeds of up to 530 MB/s read and 490 MB/s write, and random performance of up to 40,000 IOPS read and 45,000 IOPS write. As with SSDs from other manufacturers, performance is expected to vary between capacities, so we'll have to wait and see what the final release numbers are for each capacity. In addition, the drives are rated at 1.2 million hours MTBF, can operate between 0 and 70°C, and withstand up to 2.7 G (RMS) vibration.
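To put the quoted sequential figures in context, here is a rough Python sketch. It assumes SATA 6 Gb/s uses 8b/10b encoding (about 600 MB/s of payload before protocol overhead) and decimal units (1 GB = 1000 MB). The function names are made up for illustration, and the inputs are the "up to" ratings above, not measured results.

```python
# "Up to" ratings quoted in the article; real drives may differ by capacity.
SEQ_READ_MB_S = 530
SEQ_WRITE_MB_S = 490

# Assumption: 8b/10b encoding, so 10 line bits carry one byte of data.
SATA_LINE_RATE_BITS_S = 6_000_000_000
SATA_PAYLOAD_MB_S = SATA_LINE_RATE_BITS_S / 10 / 1_000_000  # 600.0 MB/s


def link_utilization(rate_mb_s: float) -> float:
    """Fraction of the theoretical SATA 6 Gb/s payload rate that is used."""
    return rate_mb_s / SATA_PAYLOAD_MB_S


def full_drive_seconds(capacity_gb: int, rate_mb_s: float) -> float:
    """Seconds to stream the whole drive at a constant rate (1 GB = 1000 MB)."""
    return capacity_gb * 1000 / rate_mb_s


if __name__ == "__main__":
    print(f"Read uses {link_utilization(SEQ_READ_MB_S):.0%} of the link")
    print(f"Write uses {link_utilization(SEQ_WRITE_MB_S):.0%} of the link")
    for capacity_gb in (60, 120, 160, 240, 480):
        minutes = full_drive_seconds(capacity_gb, SEQ_READ_MB_S) / 60
        print(f"{capacity_gb} GB: about {minutes:.1f} min to read end to end")
```

Under these assumptions, the rated read speed sits close to what the interface itself can carry, which is one reason to treat the per-capacity numbers with caution until final specifications appear.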

Intel is expected to begin production of the 520 Series SSD in the fourth quarter of 2011.


amdwilliam1985: I'm still waiting for their 310 series to fall in price.

Lately, I'm getting a little impatient, and I'm very tempted by the prices of the "new" Vertex Plus. Does anyone know if the Vertex Plus drives are any good?

I'm waiting for Tom's or Anandtech to do a review on them.

jacobdrj: Newegg reviews on the Vertex Plus have not been encouraging. However, OCZ usually does well with their customer service. The prices are good, but the Kingston V+100 series seems to have similar performance numbers and price, without the reliability issues...

icepick314: Can someone tell me how NCQ is implemented in an SSD?

I thought that was for HDDs with spinning platters, to read data and then sequence it in logical order...

scook9: Is this an Intel or a Marvell controller? That is the only useful piece of information I was hoping to get from this article :(

nordlead: One day I'll have an SSD, when the Newegg reviews don't look so dire for the drives that cost ~$1/GB. I don't want to spend $200-300 on a single drive.

cakster: "the SSD drives are rated with 1.2 million hours MTBF". Are they serious? That's like 130+ years before the device fails... yeah, right.

x Heavy: cakster, the old Raptor Enterprise 150s were also rated for a very long time before failure. I lost only one of four after almost 24/7 gaming. Back in the day they were truly monsters.

Intel is going to have to lighten the load and make these drives faster; other SSDs and similar storage are approaching one gig/second.

drwho1:

> cakster: "the SSD drives are rated with 1.2 million hours MTBF". Are they serious? That's like 130+ years before the device fails... yeah, right.

1200000 / 8 (hours a day) / 365 days = 410 years

Good luck with that.

Nobody is going to live that long to prove it.
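For anyone who wants to check the arithmetic in the comments above, here is a small Python sketch. The year conversions only reproduce the commenters' own sums. The annualized failure rate is an extra, and it assumes a constant failure rate (an exponential model), which is a common reliability-engineering reading of MTBF and not something the article or Intel states. Function names are made up for illustration.

```python
import math

# Rating quoted in the article.
MTBF_HOURS = 1_200_000


def mtbf_in_years(mtbf_hours: float, hours_per_day: float = 24.0) -> float:
    """Naive conversion of an MTBF rating into years of use for one drive."""
    return mtbf_hours / hours_per_day / 365


def annualized_failure_rate(mtbf_hours: float,
                            hours_per_year: float = 8760.0) -> float:
    """Chance that a drive fails within one year of powered-on time.

    Assumption: constant failure rate, so AFR = 1 - exp(-t / MTBF).
    """
    return 1 - math.exp(-hours_per_year / mtbf_hours)


if __name__ == "__main__":
    print(f"24 h/day: {mtbf_in_years(MTBF_HOURS):.0f} years")
    print(f" 8 h/day: {mtbf_in_years(MTBF_HOURS, 8):.0f} years")
    print(f"AFR (24/7 use): {annualized_failure_rate(MTBF_HOURS):.2%}")
```

Running continuously gives about 137 years and eight hours a day gives about 411, in line with the "130+" and "410" figures above. Under the stated assumption, the same rating works out to roughly 0.7% of drives failing per year of continuous operation, which is a statement about a large population of drives and not a promise that any single drive lasts a century.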