Amazon ‘Rekognition’ Falsely Identifies 28 Congress Members as Criminals (Updated)

Updated, 7/30/2018, 6:55am PT:

Amazon's Dr. Matt Wood, general manager of artificial intelligence at Amazon Web Services (AWS), published a post criticizing the ACLU for running the Rekognition service with the wrong settings. According to Wood, the default 80% confidence threshold that the ACLU used may be good enough for identifying celebrities on social media or people in family photos. For public safety use cases, however, the company recommends a 99% confidence threshold, because at lower settings a high number of false positives is to be expected. Wood also noted that the 99% setting is what Amazon's documentation recommends for law enforcement.

Wood said that he tried to recreate the ACLU's test with a 99% confidence threshold. In his results, the false positive rate dropped to zero, even though he compared against a database of faces 30 times larger than the one the ACLU used. According to him, a low confidence threshold combined with a database that is not appropriately representative can skew the results.

Amazon's AI chief also noted that the company recommends law enforcement use Rekognition's results only as one input among others, to narrow the field so that human agents can review and consider the options before making a judgment call. However, as machine learning improves, it is conceivable that the human element could eventually be removed from the process.

Original article, 7/26/2018, 8:15am PT:

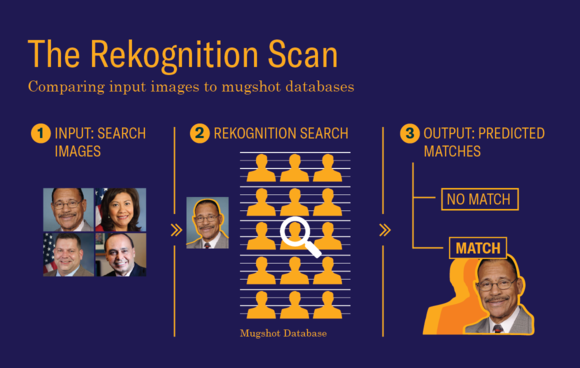

In a test the ACLU recently conducted with Amazon’s “Rekognition” facial recognition service, the nonprofit found that the software incorrectly matched the faces of 28 members of Congress with the mugshots of arrested criminals.

With this test, the ACLU set out to show that Amazon’s Rekognition software is not only dangerous from a human rights perspective, because law enforcement could abuse it, but also downright wrong when identifying police targets.

Amazon Rekognition Failure

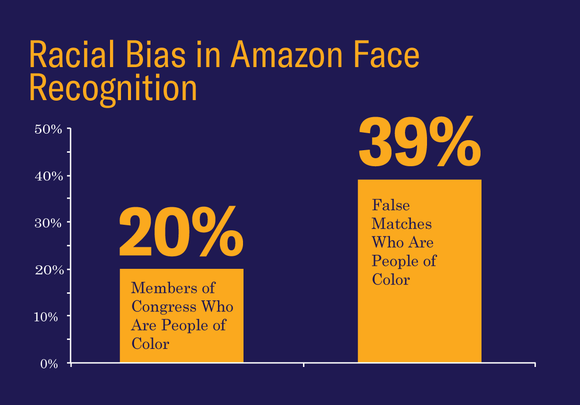

In its test, the ACLU included Republican and Democratic members of Congress, men and women of all ages, and people of color, from all across the country. The software identified 28 of them as arrested criminals.

Most of the incorrectly matched Congress members were people of color, including six members of the Congressional Black Caucus. One of them was civil rights legend Rep. John Lewis (D-Ga.), who the Amazon Rekognition system should have been able to properly identify, but didn’t.


The ACLU said that it used the same Rekognition service that is available to the public, and that the whole test cost the nonprofit only $12.33. The organization built a database of 25,000 publicly available arrest photos and searched it against public photos of every member of Congress.
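To make that setup concrete, here is a minimal Python sketch of one way such a test could be run. The ACLU did not publish its code, so this is an assumption: it supposes the arrest photos were already indexed into a Rekognition face collection and that each member's photo was searched with boto3's `search_faces_by_image` call. Its `FaceMatchThreshold` parameter is the confidence setting discussed in the update above; Rekognition defaults it to 80, while Amazon recommends 99 for law enforcement. The function `flag_members`, the collection name, and the offline `FakeRekognition` stand-in with its invented scores are all hypothetical.

```python
from typing import Any, Dict, List


def flag_members(client: Any, collection_id: str,
                 member_photos: Dict[str, bytes],
                 threshold: float = 80.0) -> List[str]:
    """Return the names of members whose photo matched any face in the collection.

    `client` is expected to behave like a boto3 Rekognition client, and the
    collection is assumed to already contain the indexed arrest photos.
    """
    flagged = []
    for name, photo in member_photos.items():
        response = client.search_faces_by_image(
            CollectionId=collection_id,
            Image={"Bytes": photo},
            MaxFaces=1,                    # only the best match matters here
            FaceMatchThreshold=threshold,  # matches below this similarity are dropped
        )
        if response["FaceMatches"]:
            flagged.append(name)
    return flagged


# Hypothetical offline stand-in for a Rekognition client so the sketch runs
# without AWS. Its similarity scores are invented for illustration only.
class FakeRekognition:
    def __init__(self, best_similarity: Dict[bytes, float]):
        self.best_similarity = best_similarity

    def search_faces_by_image(self, CollectionId, Image, MaxFaces, FaceMatchThreshold):
        score = self.best_similarity[Image["Bytes"]]
        matches = [{"Similarity": score}] if score >= FaceMatchThreshold else []
        return {"FaceMatches": matches[:MaxFaces]}


photos = {"member-a": b"a", "member-b": b"b", "member-c": b"c"}
fake = FakeRekognition({b"a": 85.0, b"b": 60.0, b"c": 99.5})
print(flag_members(fake, "arrest-photos", photos))                  # ['member-a', 'member-c']
print(flag_members(fake, "arrest-photos", photos, threshold=99.0))  # ['member-c']
```

With a real client, `boto3.client("rekognition")` would be passed in place of the fake. The only thing that changes between the ACLU's run and Wood's is the `threshold` argument, which is why the two sides can report such different false positive counts from the same service.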

Rekognition Controversy Continues

In a recent letter, the Congressional Black Caucus warned about the “profound negative unintended consequences” that Amazon’s Rekognition service could have when used by law enforcement against black people, immigrants, and protesters.

The ACLU believes that this test validated those concerns. If the software incorrectly identified members of Congress as criminals, regular people could just as easily be misidentified as criminals, too. Additionally, people of color were falsely matched with mugshots at twice the rate of white people.

The ACLU said that an error rate as high as Rekognition’s could exacerbate tensions between the police and people of color in the United States and cost people their freedom or even their lives. The nonprofit stressed that this is not just a theory but something that happens all the time, citing a recent case in San Francisco:

"A recent incident in San Francisco provides a disturbing illustration of that risk. Police stopped a car, handcuffed an elderly Black woman and forced her to kneel at gunpoint — all because an automatic license plate reader improperly identified her car as a stolen vehicle."

Amazon Not Acting As Neutral Party

The ACLU has previously accused Amazon of not simply making its facial recognition service available for anyone to use, but of actively pursuing law enforcement and government contracts and pitching Rekognition as a tool to help them catch criminals. Amazon hasn’t denied this, as it believes that law enforcement use of this technology will ultimately make society a better place.

The ACLU warned that abuse of facial recognition technology could chill free speech, threaten religious practice, and subject immigrants to further abuse by the government. The nonprofit urged Congress to enact a moratorium on law enforcement use of face recognition until all the dangers of the technology can be fully considered.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.

-

tnm2gen: FAKE OUTRAGE

If you do some research, you find the ACLU did these comparisons with the reliability set to 80% confidence when Amazon specifies 95% for facial recognition (Rekognition can also be used for objects and places, for which the default setting is 80%). Run the tests again at 95% and see if there is still a story.

arakisb: wow, did they get it wrong

99% are criminals and the rest are crooks

the percentage of politicians indicted for one thing or another is matched only by the NFL and NBA, and those are the professions in which felons can thrive

homeybaloney: Maybe have the test run in multiple locations to build a true value, then go from there.

rbritz: It's illegal in the US to take a bribe, yet politicians take PAC, SuperPAC, and other outside donations to vote specifically for issues that benefit these types of groups, and nobody says anything. The machine got it right, throw every single one of them into jail.

BryanFRitt: "reliability set to 80% confidence."

So Amazon ‘Rekognition’ is fairly confident that at least 4.1869% of the 535 members of Congress are arrested criminals.

$$\underbrace{\tfrac{28}{535}}_{\text{marked as arrested criminals}} \times \underbrace{\tfrac{8}{10}}_{\text{true positives}} = \tfrac{112}{2675} \approx 0.041869 \approx 4.1869\% \approx \tfrac{1}{25}$$

not including false negatives

four categories: false positives, true positives, false negatives, true negatives

positive/negative is how the test turned out

true/false is what actually is
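The four categories listed above can be sketched in a few lines of Python. This is an illustrative sketch, not part of the ACLU's or Amazon's methodology, and the helper names `outcome` and `tally` are hypothetical. The usage lines assume, as the ACLU's framing does, that no member of Congress actually appears in the arrest-photo set, so each of the 28 matches counts as a false positive and every other member of the 535 as a true negative.

```python
from collections import Counter
from typing import Iterable, Tuple


def outcome(flagged: bool, actually_in_set: bool) -> str:
    """Label one comparison.

    positive/negative: whether the test flagged the person as a match.
    true/false: whether that verdict agrees with reality.
    """
    if flagged:
        return "true positive" if actually_in_set else "false positive"
    return "false negative" if actually_in_set else "true negative"


def tally(results: Iterable[Tuple[bool, bool]]) -> Counter:
    """Count how many (flagged, actually_in_set) pairs fall into each category."""
    return Counter(outcome(flagged, actual) for flagged, actual in results)


# Assumption: no member of Congress is in the arrest-photo set,
# so every one of the 28 matches is wrong.
congress = [(True, False)] * 28 + [(False, False)] * (535 - 28)
counts = tally(congress)
print(counts["false positive"], counts["true negative"])  # 28 507
```

Under that assumption there are no true positives at all. That is why a confidence setting cannot be read as the share of flagged members who are actually criminals.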