AMD-Friendly AI LLM Developer Jokes About Nvidia GPU Shortages

Nvidia’s GPU oven is too slow, but there are fresh AMD GPUs on the grill.

The co-founder and CEO of Lamini, an artificial intelligence (AI) large language model (LLM) startup, posted a video to Twitter/X poking fun at the ongoing Nvidia GPU shortage. The Lamini boss is quite smug at the moment, and this seems to be largely because the firm’s LLM runs exclusively on readily available AMD GPU architectures. Moreover, the firm claims that AMD GPUs using ROCm have reached "software parity" with the previously dominant Nvidia CUDA platform.

Just grilling up some GPUs 💁🏻‍♀️ Kudos to Jensen for baking them first https://t.co/4448NNf2JP pic.twitter.com/IV4UqIS7OR (September 26, 2023)

The video shows Sharon Zhou, CEO of Lamini, checking an oven in search of some GPUs to accelerate AI LLMs. First she ventures into a kitchen, superficially similar to Jensen Huang’s famous Californian cocina, but upon checking the oven she notes that there is “52 weeks lead time – not ready.” Frustrated, Zhou checks the grill in the yard, and there is a freshly BBQed AMD Instinct GPU ready for the taking.

We don’t know the technical reasons why Nvidia GPUs require lengthy oven cooking while AMD GPUs can be prepared on a grill. Hopefully, our readers can shine some light on this semiconductor conundrum in the comments.

On a more serious note, if we look more closely at Lamini, the headlining LLM startup, we can see they are no joke. CRN provided some background coverage of the Palo Alto, Calif.-based startup on Tuesday. Some of the important things mentioned in the coverage include the fact that Lamini CEO Sharon Zhou is a machine learning expert, and CTO Greg Diamos is a former Nvidia CUDA software architect.

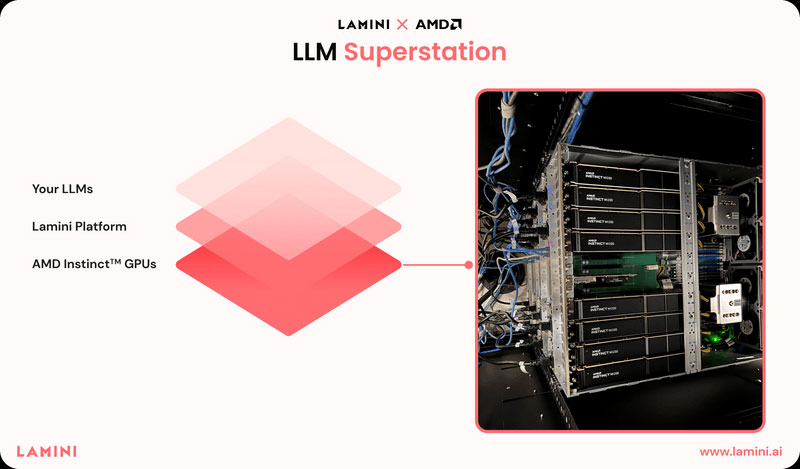

It turns out that Lamini has been “secretly” running LLMs on AMD Instinct GPUs for the past year, with a number of enterprises benefiting from private LLMs during the testing period. The most notable Lamini customer is probably AMD itself, which states: “[We] deployed Lamini in our internal Kubernetes cluster with AMD Instinct GPUs, and are using finetuning to create models that are trained on AMD code base across multiple components for specific developer tasks.”

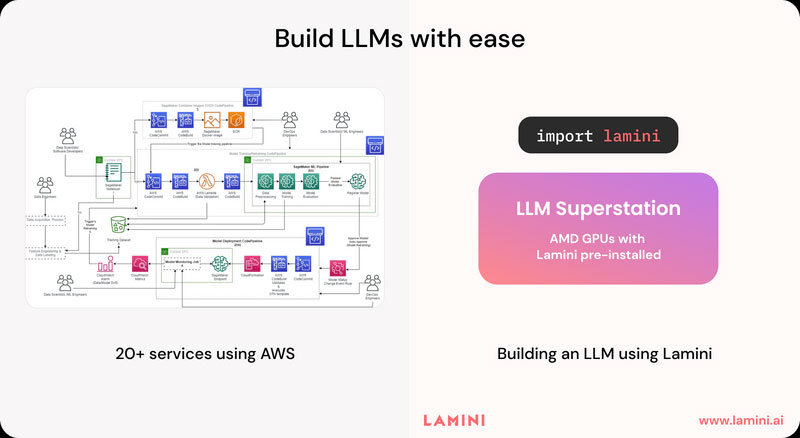

One particularly interesting claim from Lamini is that it needs only “3 lines of code” to run production-ready LLMs on AMD Instinct GPUs. Lamini is also said to have the key advantage of working on readily available AMD GPUs. Furthermore, CTO Diamos asserts that Lamini’s performance isn’t overshadowed by Nvidia solutions, because AMD ROCm has achieved “software parity” with Nvidia CUDA for LLMs.

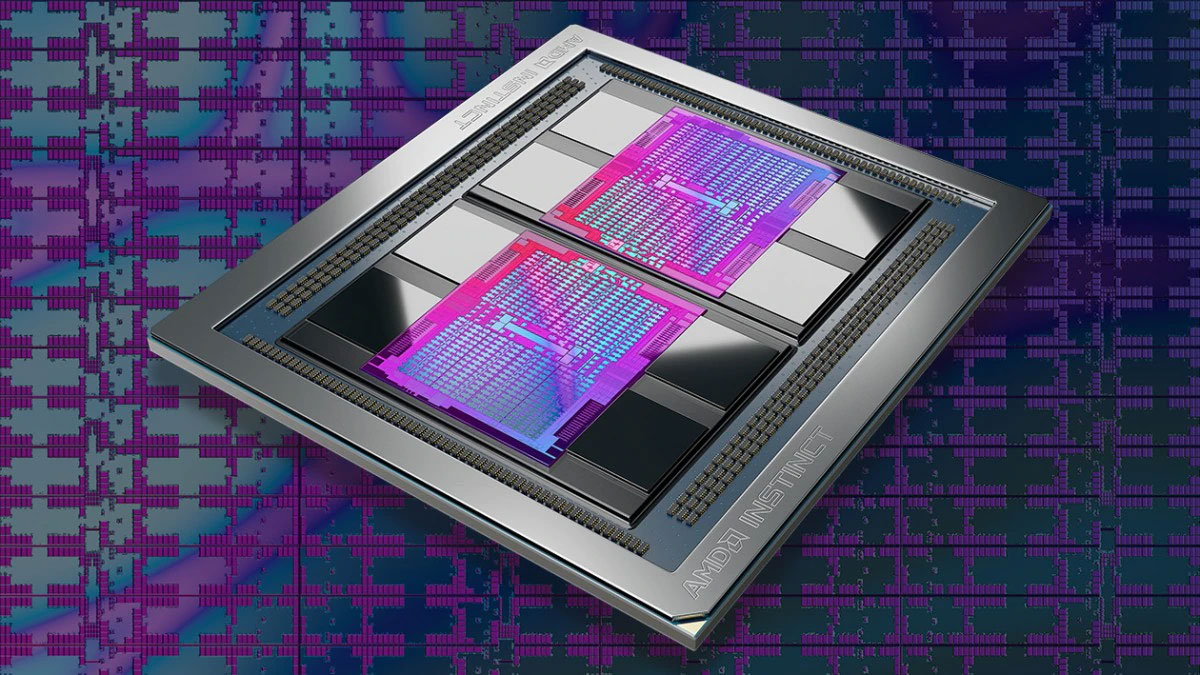

We'd expect as much from a company focused on providing LLM solutions using AMD hardware, though the claims aren't inherently wrong. AMD Instinct GPUs can be competitive with Nvidia A100 and H100 GPUs, particularly if you have enough of them. The Instinct MI250, for example, offers up to 362 teraflops of peak BF16/FP16 compute for AI workloads, and the MI250X pushes that to 383 teraflops. Both also have 128GB of HBM2e memory, which can be critical for running LLMs.

AMD's upcoming Instinct MI300X meanwhile bumps the memory capacity up to 192GB, double what you can get with Nvidia's Hopper H100. However, AMD hasn't officially revealed the compute performance of MI300 yet — it's a safe bet it will be higher than the MI250X, but how much higher isn't fully known.

By way of comparison, Nvidia's A100 offers up to 312 teraflops of BF16/FP16 compute, or 624 teraflops of peak compute with sparsity. Nvidia's sparsity feature is structured: when two of every four weights are zero, the tensor cores can skip those multiplications because the result is already known, potentially doubling throughput. The H100 has up to 1979 teraflops of BF16/FP16 compute with sparsity (and half that without sparsity). On paper, then, AMD can take on A100 but falls behind H100. But that assumes you can actually get H100 GPUs, which, as Lamini notes, currently means wait times of a year or more.
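To make the sparsity idea concrete, here is a toy Python sketch of 2:4 structured sparsity: the two smallest-magnitude weights in each group of four are zeroed, and a sparsity-aware dot product skips them. The function names are hypothetical, and the pruning rule (keep the two largest magnitudes) is a common heuristic chosen for illustration. Real hardware stores the surviving values in compressed form with small position indices rather than testing for zeros in a loop.

```python
# Toy illustration of 2:4 structured sparsity. Conceptual sketch only,
# not Nvidia's implementation; function names are hypothetical.

def prune_2_of_4(weights):
    """Zero the two smallest-magnitude values in each group of four."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned = []
    for i in range(0, len(weights), 4):
        group = weights[i:i + 4]
        # Keep the indices of the two largest-magnitude entries.
        keep = sorted(range(4), key=lambda j: abs(group[j]), reverse=True)[:2]
        pruned.extend(group[j] if j in keep else 0.0 for j in range(4))
    return pruned


def dense_dot(weights, activations):
    """Multiply every pair, zeros included. Returns (result, multiplies)."""
    total = sum(w * a for w, a in zip(weights, activations))
    return total, len(weights)


def sparse_dot(weights, activations):
    """Skip weights known to be zero. Returns (result, multiplies)."""
    total, mults = 0.0, 0
    for w, a in zip(weights, activations):
        if w != 0.0:
            total += w * a
            mults += 1
    return total, mults


if __name__ == "__main__":
    w = prune_2_of_4([0.9, -0.1, 0.05, -0.7, 0.3, 0.8, -0.2, 0.01])
    x = [1.0] * 8
    print(dense_dot(w, x), sparse_dot(w, x))
```

With eight weights, the dense version performs eight multiplications and the sparse version four, for the same result, which is where the “potentially doubling” figure comes from. Whether a pruned model keeps its accuracy is a separate question that this arithmetic does not answer.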

The alternative in the meantime is to run LLMs on AMD's Instinct GPUs. A single MI250X might not be a match for H100, but five of them, running optimized ROCm code, should prove competitive. There's also the question of how much memory LLMs require. As noted, the MI250X's 128GB is more than the 80GB or 94GB available on current H100 parts (the maximum, unless you include the dual-GPU H100 NVL). An LLM that needs 800GB of memory, like ChatGPT, would potentially need a cluster of ten or more H100 or A100 GPUs, or seven MI250X GPUs.
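The back-of-the-envelope numbers above can be reproduced with a few lines of Python. This sketch uses only figures quoted in this article. It assumes the 80GB variants of A100 and H100 and treats H100's dense figure as half its 1979-teraflop sparse figure. It also counts memory capacity alone, ignoring activations, KV caches, and software overhead. The names `GPUS`, `gpus_for_memory`, and `gpus_to_match` are illustrative.

```python
import math

# Peak BF16/FP16 teraflops (dense) and memory per GPU, as quoted in the article.
# Assumptions: 80GB A100/H100 variants; H100 dense = half of its 1979 sparse figure.
GPUS = {
    "MI250X": {"tflops_dense": 383.0, "memory_gb": 128},
    "A100 80GB": {"tflops_dense": 312.0, "memory_gb": 80},
    "H100 80GB": {"tflops_dense": 1979.0 / 2, "memory_gb": 80},
    "MI300X": {"tflops_dense": None, "memory_gb": 192},  # compute not yet disclosed
}


def gpus_for_memory(model_gb, gpu):
    """Minimum GPU count whose combined memory holds model_gb (capacity only)."""
    return math.ceil(model_gb / GPUS[gpu]["memory_gb"])


def gpus_to_match(target_tflops, gpu):
    """Number of `gpu` cards whose summed peak compute reaches target_tflops."""
    per_gpu = GPUS[gpu]["tflops_dense"]
    if per_gpu is None:
        raise ValueError(f"no published compute figure for {gpu}")
    return math.ceil(target_tflops / per_gpu)


if __name__ == "__main__":
    for name in GPUS:
        print(name, "cards for 800GB:", gpus_for_memory(800, name))
    print("MI250X cards to reach H100 sparse peak:", gpus_to_match(1979, "MI250X"))
    print("MI250X cards to reach H100 dense peak:", gpus_to_match(1979 / 2, "MI250X"))
```

Under these assumptions, `gpus_for_memory(800, "MI250X")` returns 7 and `gpus_for_memory(800, "H100 80GB")` returns 10, matching the figures above, while the 192GB MI300X would need 5. On compute, five MI250X cards add up to 1915 teraflops, about 97 percent of H100's sparse peak (`gpus_to_match(1979, "MI250X")` returns 6), while three would exceed its dense peak. Peak figures say nothing about achieved throughput, which depends heavily on the software stack.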

It's only natural that an AMD partner like Lamini is going to highlight the best of its solution and cherry-pick data and benchmarks to reinforce its stance. It cannot be denied, though, that the current ready availability of AMD GPUs and their non-scarcity pricing mean the red team’s chips may deliver the best price per teraflop, or the best price per GB of GPU memory.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.

-

scottslayer: Ah yes, a social media post by someone who has AMD as their customer.

Thanks for the quality "news" yet again Tom's.

The Hardcard (replying to scottslayer): Actually, statements from companies verifying the functionality of the ROCm software stack are important news. The biggest part of the AI battle is software.

A lot of companies have matrix math (tensor) units that are capable of doing everything Nvidia GPUs can do (minus the sparsity accelerators added in Ampere and the transformer hardware added in Hopper). The software stack and documentation are what is lacking for a number of companies, including AMD.

The post makes it more likely that AMD will get enough of this together long before Nvidia can satisfy demand for its hardware. This means that, one, AMD is going to get to jam its fists into the AI loot box and, two, consumers will have more access, given that AMD already has an established consumer side of this business.

Makaveli (replying to The Hardcard): The post above yours I would classify as "can't see the forest for the trees."

And your follow-up post was excellent!

scottslayer: Apologies to all organic posters. I did not scroll down to see the actual news in the last half or so of the article because I was severely unimpressed with the whatnot in the first half.

Consider me appropriately chastised.

ivan_vy (replying to The Hardcard): It also means Nvidia hardware is no longer essential. AMD will fill the needs of many consumers, and that leads to lower prices on hardware: a win for all, except for some leather jacket enthusiasts.

Steve Nord_: Would have liked to see more links to those cards/frames on sale. Oh well, I will just build out the solar panels and PowerWall-ish storage until my magic grill pans out.

Li Ken-un: Here’s to the end of Nvidia’s grip on the market. :beercheers:

Normally, hardware manufacturers use software to add value to (and thus sell more) hardware. Nvidia sells the hardware and then charges subscriptions on top of that for already-baked-in hardware functionality. LOL!

russell_john: The problem here is that Nvidia isn't using CUDA for LLMs; it's using tensor core neural networks for LLMs, and AMD basically has nothing that can compete in that area.

Nvidia having a shortage of GPUs is actually a good thing; it means they are selling them faster than they can make them. AMD having plenty on hand means poorer sales and overstock, the same overstock we have seen in the consumer markets that caused an 8-month delay in the release of the 7800 XT. Every discounted 6950 XT sold this year equals one less 7800 XT that will be sold this year.

Nice try at spin. I'm sure it fools a lot of people who don't really understand how market dynamics work. AMD's overstock of last-gen products has made them even less competitive because they were forced to cannibalize the sales of their current gen.

Blacksad999: Oh, nice!!

These companies can now buy FIVE mediocre GPUs to do the work of one!! What a score!! lol I'm sure they're just as "power efficient" as the rest of the AMD lineup. XD

The Hardcard (replying to russell_john):

Tensor cores are hardware matrix math units. CUDA is a software stack to do math on that hardware. CUDA is used for machine learning tasks, including LLMs. While tensor cores can be used without CUDA, no one has invested the tens of thousands of engineering man-hours it would take to write an alternative software stack to drive them. For now, CUDA is the only way the tensor cores are used.

AMD has had matrix math units in their data center GPUs for nearly three years, and RDNA 3 also has them.

You also don’t need tensor/matrix units to run neural networks. They run on anything that can do math: GPU shaders, and even CPUs. Without matrix units, they just require significant amounts of data movement, using up critical memory bandwidth, which is the main bottleneck in most neural network performance, LLMs in particular.
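The commenter's final point, that memory bandwidth rather than raw math throughput often limits neural network performance, LLMs in particular, can be illustrated with a simple roofline-style estimate. The sketch below computes arithmetic intensity (FLOPs per byte moved) for an FP16 matrix multiply, under the idealized assumption that each value is read or written exactly once. The 383-teraflop MI250X figure comes from the article. The roughly 3.2 TB/s memory bandwidth is an assumed value that does not appear in the article and should be checked against AMD's spec sheet.

```python
# Roofline-style sketch. Assumptions: FP16 values (2 bytes each), every
# operand read once and the result written once (ideal caching).
MI250X_PEAK_TFLOPS = 383.0   # peak BF16/FP16 figure quoted in the article
MI250X_BANDWIDTH_TB_S = 3.2  # ASSUMED memory bandwidth, not from the article


def arithmetic_intensity(m, k, n, bytes_per_value=2):
    """FLOPs per byte for C = A @ B with A (m x k) and B (k x n)."""
    flops = 2 * m * k * n  # one multiply plus one add per term
    bytes_moved = bytes_per_value * (m * k + k * n + m * n)  # read A and B, write C
    return flops / bytes_moved


def is_memory_bound(intensity, peak_tflops, bandwidth_tb_s):
    """True if memory bandwidth, not compute, caps throughput (roofline model)."""
    # TFLOP/s divided by TB/s gives FLOPs per byte: the machine balance point.
    machine_balance = peak_tflops / bandwidth_tb_s
    return intensity < machine_balance


if __name__ == "__main__":
    # A 4096 x 4096 weight matrix times a batch of 1 or 4096 input vectors.
    for batch in (1, 4096):
        ai = arithmetic_intensity(4096, 4096, batch)
        bound = is_memory_bound(ai, MI250X_PEAK_TFLOPS, MI250X_BANDWIDTH_TB_S)
        print(f"batch={batch}: {ai:.1f} FLOPs/byte, memory-bound={bound}")
```

Generating one token at a time (a batch of 1) yields roughly 1 FLOP per byte, far below the roughly 120 FLOPs per byte the assumed machine can sustain, so the GPU mostly waits on memory. Batching 4096 inputs raises the intensity to about 1365 FLOPs per byte, which makes the same multiply compute-bound.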