AMD Throws Down Gauntlet to Nvidia with Instinct MI250 Benchmarks

Winning across the board.

In an unexpected move, AMD this week published detailed performance numbers for its Instinct MI250X accelerator compared with Nvidia's A100 compute GPU. AMD's card predictably outperformed Nvidia's board in every case, by as much as three times. While it is not uncommon for hardware companies to demonstrate their advantages, detailed performance comparisons against the competition are rarely published on official websites. When a company does publish them, it usually signals one thing: very high confidence in its products.

Up to Three Times More Performance

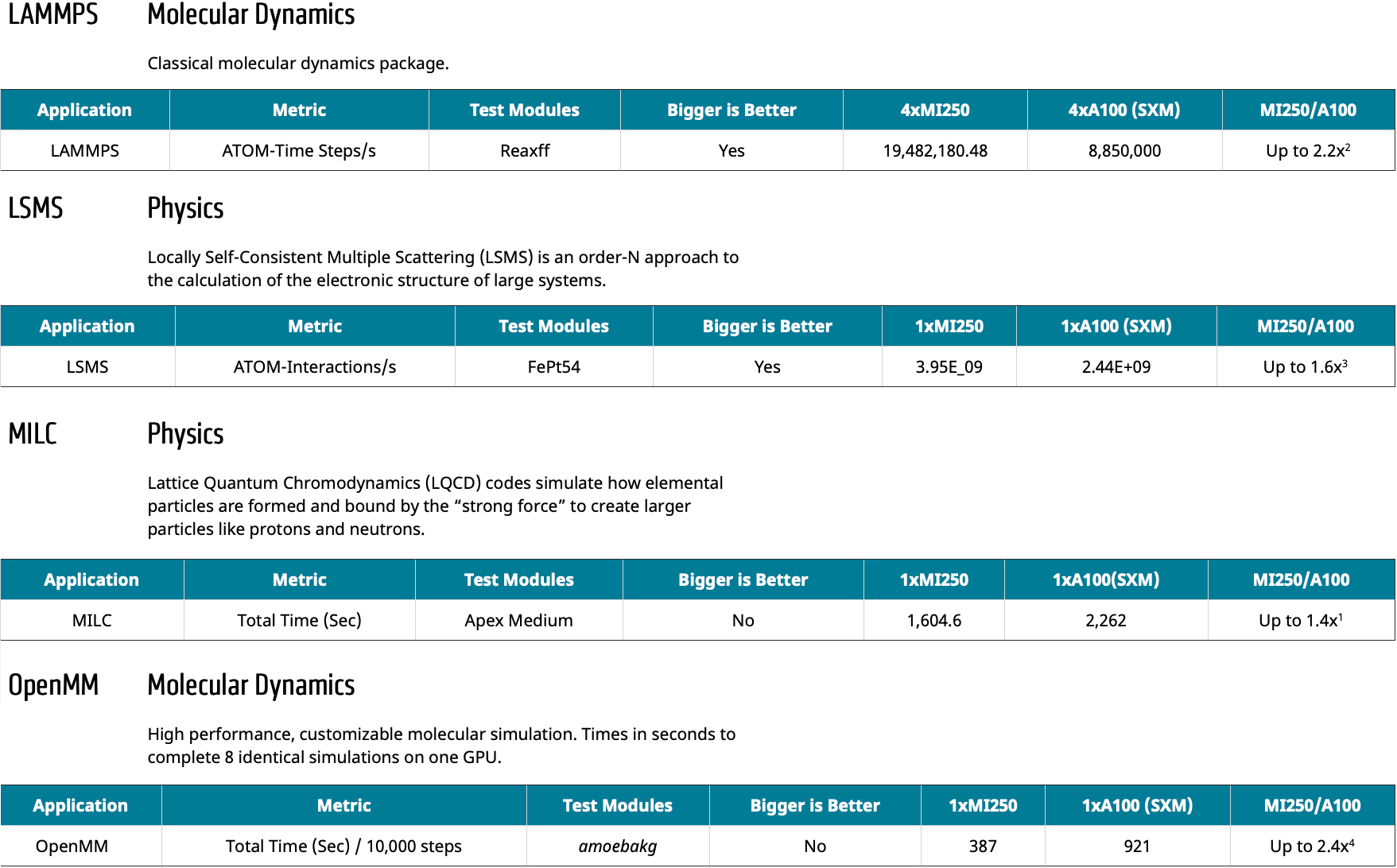

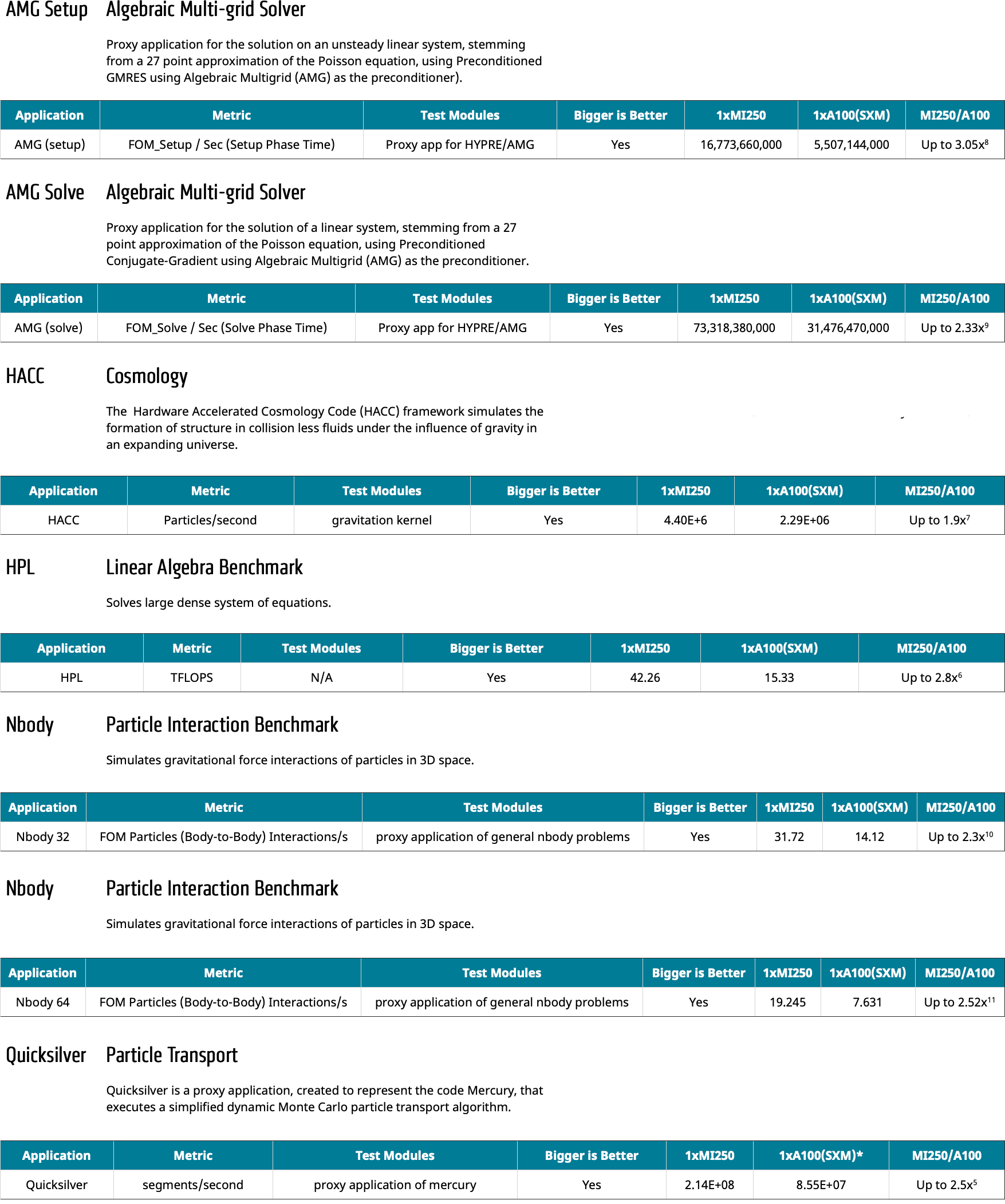

Since AMD's Instinct MI200 series is aimed primarily at HPC and AI workloads (and AMD clearly tailored CDNA 2 more toward HPC and supercomputers than toward AI), AMD tested the competing accelerators in various HPC applications and benchmarks covering algebra, physics, cosmology, molecular dynamics, and particle interaction.

There are a number of widely used physics and molecular dynamics HPC applications with industry-recognized tests, such as LAMMPS and OpenMM. These can be considered real-world workloads, and here AMD's MI250X can outperform Nvidia's A100 by 1.4 – 2.4 times.

There are also numerous HPC benchmarks that can mimic real-world algebraic, cosmology, and particle interaction workloads. In these cases, AMD's top-of-the-range compute accelerator is 1.9 – 3.05 times faster than Nvidia's flagship accelerator.

Keeping in mind that AMD's MI250X has considerably more ALUs running at high clocks than Nvidia's A100, it is not surprising that the new card dramatically outperforms its rival. Meanwhile, it is noteworthy that AMD did not run any AI benchmarks.

New Architecture, More ALUs

AMD's Instinct MI200 accelerators are powered by the company's latest CDNA 2 architecture, which is optimized for high-performance computing (HPC). It will power the upcoming Frontier supercomputer, which promises to deliver about 1.5 FP64 ExaFLOPS of sustained performance. The MI200-series OAM boards use AMD's Aldebaran compute GPU, which consists of two graphics compute dies (GCDs) that each pack 29.1 billion transistors, slightly more than the 26.8 billion transistors inside Navi 21. The GCDs are made using TSMC's N6 fabrication process, which enabled AMD to pack slightly more transistors and simplify production by using extreme ultraviolet lithography on more layers.

AMD's flagship Instinct MI250X accelerator features 14,080 stream processors (220 compute units) and is equipped with 128GB of HBM2E memory. The MI250X compute GPU is rated for 47.9 FP32/FP64 TFLOPS of vector performance and 95.7 FP32/FP64 TFLOPS for matrix operations, as well as 383 BF16/INT8/INT4 TFLOPS/TOPS.
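Peak figures like these come from a simple formula: lanes × FLOPs per lane per cycle × clock. The sketch below applies it to the MI250X, assuming a 1,700 MHz peak engine clock (AMD's published figure, not stated in this article), that one fused multiply-add counts as two FLOPs, and that the matrix and packed-FP32 paths run at twice the vector rate. It is a back-of-the-envelope check, not AMD's own methodology.

```python
# Back-of-the-envelope peak throughput for the Instinct MI250X.
COMPUTE_UNITS = 220           # from the article
LANES_PER_CU = 64             # implied by 14,080 stream processors / 220 CUs
PEAK_CLOCK_HZ = 1.7e9         # ASSUMPTION: 1,700 MHz peak engine clock (not in the article)
FLOPS_PER_LANE_PER_CYCLE = 2  # one fused multiply-add counts as two FLOPs


def peak_tflops(lanes: int, clock_hz: float, flops_per_cycle: int = 2) -> float:
    """Theoretical peak in TFLOPS: lanes x FLOPs per lane per cycle x clock."""
    return lanes * flops_per_cycle * clock_hz / 1e12


stream_processors = COMPUTE_UNITS * LANES_PER_CU
vector = peak_tflops(stream_processors, PEAK_CLOCK_HZ, FLOPS_PER_LANE_PER_CYCLE)
matrix = 2 * vector  # ASSUMPTION: matrix / packed-FP32 paths run at twice the vector rate

print(stream_processors)  # 14080
print(f"{vector:.1f}")    # 47.9
print(f"{matrix:.1f}")    # 95.7
```

Under these assumptions the 95.7 TFLOPS headline is the double-rate matrix figure, while plain vector FP32/FP64 work tops out at roughly half that.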


By contrast, Nvidia's A100 GPU consists of 54.2 billion transistors, has 6,912 active CUDA cores, and is paired with 80GB of HBM2E memory. Performance-wise, the accelerator offers 19.5 FP32 TFLOPS, 9.7 FP64 TFLOPS, 19.5 FP64 Tensor TFLOPS, 312 FP16/BF16 TFLOPS, and up to 624 INT8 TOPS (or 1,248 TOPS with sparsity).
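The A100's headline numbers follow the same lanes × FLOPs × clock formula. This sketch assumes a 1,410 MHz boost clock (Nvidia's published figure, not stated in this article), that FP64 on the CUDA cores runs at half the FP32 rate, and that the FP64 Tensor Core path doubles that FP64 rate.

```python
# Back-of-the-envelope peak throughput for the Nvidia A100.
CUDA_CORES = 6_912        # from the article
BOOST_CLOCK_HZ = 1.41e9   # ASSUMPTION: 1,410 MHz boost clock (not in the article)


def peak_tflops(lanes: int, clock_hz: float, flops_per_cycle: int = 2) -> float:
    """Theoretical peak in TFLOPS, counting one FMA as two FLOPs."""
    return lanes * flops_per_cycle * clock_hz / 1e12


fp32 = peak_tflops(CUDA_CORES, BOOST_CLOCK_HZ)
fp64 = fp32 / 2           # ASSUMPTION: FP64 CUDA-core rate is half the FP32 rate
fp64_tensor = 2 * fp64    # ASSUMPTION: FP64 Tensor Cores double the FP64 rate

print(f"{fp32:.1f}")         # 19.5
print(f"{fp64:.1f}")         # 9.7
print(f"{fp64_tensor:.1f}")  # 19.5
```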

Even on paper, AMD's Instinct MI200 series offers more performance in traditional HPC and matrix workloads, but Nvidia has an edge in AI workloads. These peak performance numbers are explained by the considerably higher ALU count of AMD's MI200 series.
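To make the "on paper" comparison concrete, the sketch below divides the MI250X peak figures quoted in this article by the A100 figures quoted above (using Nvidia's FP64 Tensor and dense INT8 numbers). It is only a ratio of vendor peak specifications, not a performance model, and the category names are illustrative labels.

```python
# Peak figures as quoted in this article (TFLOPS, or TOPS for INT8).
MI250X = {"FP64 matrix": 95.7, "BF16": 383.0, "INT8": 383.0}
A100 = {"FP64 matrix": 19.5, "BF16": 312.0, "INT8": 624.0}  # FP64 Tensor, dense INT8


def paper_ratios(amd: dict, nvidia: dict) -> dict:
    """Ratio of AMD to Nvidia peak figures for every category both list."""
    return {name: amd[name] / nvidia[name] for name in amd if name in nvidia}


for name, ratio in paper_ratios(MI250X, A100).items():
    leader = "AMD" if ratio > 1 else "Nvidia"
    print(f"{name}: {ratio:.2f}x ({leader} ahead on paper)")
# FP64 matrix: 4.91x (AMD ahead on paper)
# BF16: 1.23x (AMD ahead on paper)
# INT8: 0.61x (Nvidia ahead on paper)
```

On these numbers the MI250X's paper lead in FP64 is roughly 4.9 times, while AMD's measured advantage in its own tests is 1.4 to 3.05 times. That gap is plausible, since real applications are also limited by memory bandwidth, interconnect, and software rather than by peak ALU throughput alone.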

To demonstrate how good its flagship Instinct MI250X 128GB HBM2E compute accelerator is, AMD used single-socket (1P) or dual-socket (2P) systems based on the 64-core AMD EPYC 7742, equipped with either one or four AMD Instinct MI250X 128GB HBM2E compute GPUs or one or four Nvidia A100 80GB HBM2E accelerators. The company ran AMD-optimized and CUDA-optimized software, respectively.

Summary

For now, AMD's Instinct MI250X is the world's highest-performing HPC accelerator, according to AMD's own data. Considering that Aldebaran has a whopping 14,080 ALUs and is rated for up to 95.7 FP32/FP64 TFLOPS, it is indeed the fastest compute GPU around.

Meanwhile, AMD launched its Instinct MI250X about 1.5 years after Nvidia's A100 and several months ahead of Intel's Ponte Vecchio. It is natural for a 2021 compute accelerator to outperform a rival introduced more than a year earlier; what we are curious about is how this GPU will stack up against Intel's supercomputer-bound Ponte Vecchio compute GPU.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

-

ezst036: Great. Now let's see AMD mining GPUs with higher hash performance than gaming GPUs so we can obtain market divergence.

JarredWaltonGPU (replying to ezst036):

I bet these will actually be very potent mining GPUs, but they're for datacenters -- the PCIe versions are coming later. With 3.2 TB/s of memory bandwidth and 128GB of HBM2e memory, these should probably do something in the vicinity of 300MH/s. 😲

Historical Fidelity (replying to JarredWaltonGPU):

I like this concept you described. Ethereum likes memory bandwidth, so AMD would attract the majority of miners if it came out with a dedicated mining card with a mining-specific warranty and a layout using HBM2e, or even HBM3 when it’s ready. (Regular graphics cards could also get a clause under which warranty service is denied if inspection of a normal, non-mining card determines that mining caused the failure. I know some gamers like to mine while idle, so I won’t say that mining should void your warranty outright, even if a game caused the break.) That way AMD could corner the market for mining while relieving supply of gaming-oriented products. Plus, Nvidia’s mining-specific product stack is laughably worse than just buying a 3000 series card. Finally, if the mining boom collapses, the leftover AMD mining cards could be rebranded for compute with minimal effort.

deksman (replying to Krotow, who wrote: "Don't care. Where are GPU cards for MSRP price?"):

These aren't GPUs. They are accelerators for data centers.

The consumer versions, which use MCM, will follow next year, it seems.

MSRP price?

Yeah, good luck finding those GPUs from either AMD or NV. Why? Mainly because the auto industry gobbled up all of the chip production, because it's stuck on manufacturing outdated technology for car innards.

At any rate, I'd like to see gaming GPUs with MCM that have far superior compute capabilities, and I'd like professional software devs to actually integrate support for USING AMD GPUs when it comes to accelerating professional workloads with something more modern like Metal.

escksu: They will be extremely fast mining GPUs... Of course, the main thing is being profitable. At that price, it's not really worth it.

d0x360 (replying to deksman):

I uh... I got a 3080 Ti FTW3 Ultra for MSRP 2 weeks ago. Paid the same price for it as I did for my 2080 Ti FTW3 Ultra at launch.

As for mining... I don't want AMD cornering the market because I'd like to be able to buy a GPU without having to hunt for one for months.

There is also a new crypto coming that loves L3 cache, and they are already talking about the Ryzen shortages that will happen, especially for the refresh with 3D-stacked V-Cache.

Markups on GPUs, even with the shortages, wouldn't be anywhere near what they are if it weren't for miners. Miners are the ones paying scalper prices on eBay, which keeps prices high. I'm going to benefit from that and end up breaking even, and despite that I'm still sick of it.

People who will actually use hardware can't get it because 90% of it is going to miners. When I say use I mean actually use, not setup to mine and walk away.

Even if AMD made a mining card, it wouldn't matter. Every card made for mining is one less GPU available for someone to buy. Yeah, they use chips that are defective for a certain SKU, but in basically every instance that chip would be fine for a mid- or low-end GPU... That's the largest market by far for gamers, but also the sweet spot for miners.

Miners should be forced to use old PS3s that can still run Linux. Make a cluster and have at it.

VforV: Now imagine some of this Instinct GPU tech scaled down to desktop, as RDNA3... can't wait.

lazyabum (replying to deksman):

No DirectX or OpenGL anywhere on these cards.