AMD Unveils Nvidia DGX A100 Specs in Formal Announcement

Didn't see that one coming, did you?

Just prior to Nvidia's online GTC keynote, a trademark filing appeared to refer to the company's next-generation Ampere-based DGX system, the DGX A100. The keynote confirmed the arrival of this system, but full specifications weren't available yet -- we knew about the Ampere A100 GPUs, but CPU information was still missing. Now, Nvidia has given AMD the honor of sharing the last bits of the spec sheet in a formal announcement.

Why AMD, you ask? Because of the two 64-core AMD Epyc 7742 processors installed in the system. Tally that up, and you'll soon realize that the DGX A100 packs a total of 128 cores and a whopping 256 threads, with boost clocks of up to 3.4 GHz.

“The NVIDIA DGX A100 delivers a tremendous leap in performance and capabilities,” said Charlie Boyle, VP and GM for DGX systems at NVIDIA. “The 2nd Gen AMD EPYC processors used in DGX A100 provide high performance and support for PCIe Gen4. NVIDIA has put those features to work to create the world’s most powerful AI system while maintaining compatibility with the GPU-optimized software stack used across the entire DGX family.”

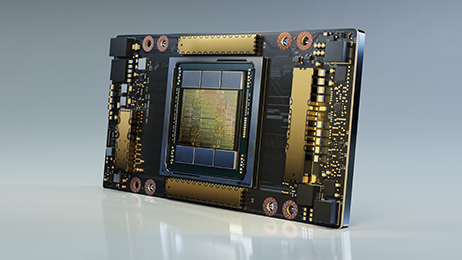

The Nvidia DGX A100 packs a total of eight Nvidia A100 GPUs (which are no longer called Tesla to avoid confusion with the automaker). Each GPU measures 826 square mm and packs 54 billion transistors, and all eight are linked through 600 GB/s NVSwitch links. In total, the eight GPUs deliver a jolly 5 petaflops of AI performance.
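For readers who want to check the tally themselves, here is a minimal Python sketch that multiplies out the per-part figures quoted above. The function and constant names are purely illustrative, the two-threads-per-core value is an assumption inferred from the 128-core/256-thread totals, and the even per-GPU split of the 5-petaflop figure is simple division, not a number Nvidia or AMD has published here.

```python
# Back-of-the-envelope tally of the DGX A100 figures quoted in this article.
# All names are illustrative; the inputs are the numbers stated in the text.

CPU_SOCKETS = 2
CORES_PER_CPU = 64                    # AMD Epyc 7742
THREADS_PER_CORE = 2                  # assumption: inferred from 128 cores / 256 threads

GPU_COUNT = 8
TRANSISTORS_PER_GPU = 54_000_000_000  # 54 billion per A100
SYSTEM_PETAFLOPS = 5.0                # headline figure for all eight GPUs combined


def dgx_a100_totals():
    """Return the aggregate figures implied by the per-part specs."""
    total_cores = CPU_SOCKETS * CORES_PER_CPU
    return {
        "cores": total_cores,
        "threads": total_cores * THREADS_PER_CORE,
        "transistors": GPU_COUNT * TRANSISTORS_PER_GPU,
        # Even split across GPUs; a rough average, not a published per-GPU spec.
        "petaflops_per_gpu": SYSTEM_PETAFLOPS / GPU_COUNT,
    }


if __name__ == "__main__":
    for name, value in dgx_a100_totals().items():
        print(f"{name}: {value}")
```

Run as a script, it prints the four totals; the same dictionary can be reused if you want to plug in figures for a different system.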

Nvidia's DGX systems are aimed at scientific and data center use, intended to be used for machine learning and artificial intelligence simulations. Pricing for a DGX system starts at just $199,000.


Niels Broekhuijsen is a Contributing Writer for Tom's Hardware US. He reviews cases, water cooling and PC builds.


hobobot

I found the data sheet and it's a max power draw of 6.5 kW.......... which isn't terrible efficiency for flops/watt but god damn.... the cooling needed....

bit_user

hobobot said: I found the data sheet and it's a max power draw of 6.5 kW.......... which isn't terrible efficiency for flops/watt but god damn.... the cooling needed....

That's a little above where I estimated. I think I was saying somewhere around 5 kW.

Did they say how tall it is? Looks like it could be 6U, which would be a little less than 1.1 kW per U. That's not unreasonable, and plenty big enough for some large fans. Though, when it really gets cranking, I'm sure it will sound like a hovercraft.

bit_user

Heh, I just had this image of a server with an automotive radiator bolted on. Someone should definitely do that, if it hasn't been done already.

Edit: of course it's been done.

https://forums.tomshardware.com/threads/can-i-use-a-car-radiator-in-my-water-loop.3217668/

spongiemaster

spentshells said: Starts at 199,999.... oooof

Jensen Huang catch phrases aside, $200,000 is a bargain compared to what it would cost to build a comparable system with previous generation hardware.

bit_user

spongiemaster said: $200,000 is a bargain compared to what it would cost to build a comparable system with previous generation hardware.

Almost anything is a bargain, by that definition.

It will be telling to see how it stacks up, and how it's priced, against a comparable system from Intel/Habana Labs.

bit_user

DotNetMaster777 said: Are there any low price analogs of Nvidia DGX ??

Depends on why you need multi-GPU. What makes DGX special is the high-speed GPU-to-GPU interconnects. This can be useful for training and inferencing extremely large networks. However, if your network is smaller or you just need to accelerate inferencing, then you might be able to go with T4's.

Another option might be to rent time on Google's TPU, if you don't need to actually own the hardware.