Auto-GPT and BabyAGI Are AI’s New Hotness, But They Suck Right Now

These bots can perform a whole series of tasks on their own.

Forget ChatGPT, Bard and Bing Chat! Those bots are so outdated that they might as well be screaming “Danger, Will Robinson!” or reading programs off of cassette tapes. The next big thing in AI, at least for this week, is the autonomous agent, a program that takes one or a series of objectives and then develops its own task list which it follows.

Just imagine asking the agent to write a computer program or develop a presentation for work. You walk away for a few minutes, come back and the bot delivers what you asked for, even though it had to go through dozens of steps to get there. That sounds awesome and maybe it will be someday. But right now, these tools are more proofs of concept than useful utilities.

In the last couple of weeks, I’ve gotten to play with the two leading autonomous agents, Auto-GPT and BabyAGI, and while both have potential, right now I can’t find a single practical use case where they do a good job. To be fair, these agents are just using the same large language models (LLMs), GPT-3.5 and GPT-4, that make plenty of mistakes when you’re the one entering the prompts. These mistakes are amplified by the fact that, like the Energizer Bunny, the agent will keep going and going down the wrong path.

Auto-GPT: Please Stop Overdoing It

Designed by a company called Significant Gravitas and posted to GitHub, Auto-GPT is a Python application that does the work of coming up with its own tasks after you’ve given it an initial set of goals. Installing the app is fairly easy and we have a full tutorial on how to set up Auto-GPT if you want to try it yourself.

To use Auto-GPT, you just need to obtain an OpenAI API key, which is free to obtain but costs money every time the agent performs a task and must hit the OpenAI server. When you first sign up for an account, you get a few dollars in free credit (I got $18 on one account and $5 on another) and, considering that each request might use up only part of a penny, your free credits may be ok for experimenting.
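To get a feel for how far those free credits stretch, here is a rough back-of-the-envelope sketch. The half-cent cost per request is purely an assumption chosen to match "part of a penny"; it is not an actual OpenAI price, and real costs vary by model and by how much text each request sends and receives. The function name `requests_covered` is made up for this illustration.

```python
from decimal import Decimal


def requests_covered(credit_usd: str, cost_per_request_usd: str) -> int:
    """Return how many whole requests a credit balance could pay for."""
    credit = Decimal(credit_usd)
    cost = Decimal(cost_per_request_usd)
    if cost <= 0:
        raise ValueError("cost per request must be positive")
    return int(credit // cost)


# Hypothetical figure: half a cent per request. Not a published price.
ASSUMED_COST_PER_REQUEST = "0.005"

for credit in ("18", "5"):
    count = requests_covered(credit, ASSUMED_COST_PER_REQUEST)
    print(f"${credit} of credit covers roughly {count} requests")
```

Under that assumption, the credit lasts for thousands of requests, but an agent that fires off dozens of requests per session without stopping can get through them faster than a person typing prompts by hand.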

You run Auto-GPT from the command line whether you are using Windows, Linux, or macOS. It then asks you to name your agent and give it a role, which is a broad objective, and a set of goals. I’ve seen a lot of examples on Twitter of developers boasting that Auto-GPT is a game changer but touting use cases that either the bot can’t accomplish in its current form or that are so vague as to be cute but useless, like developing a business plan for a theoretical startup.

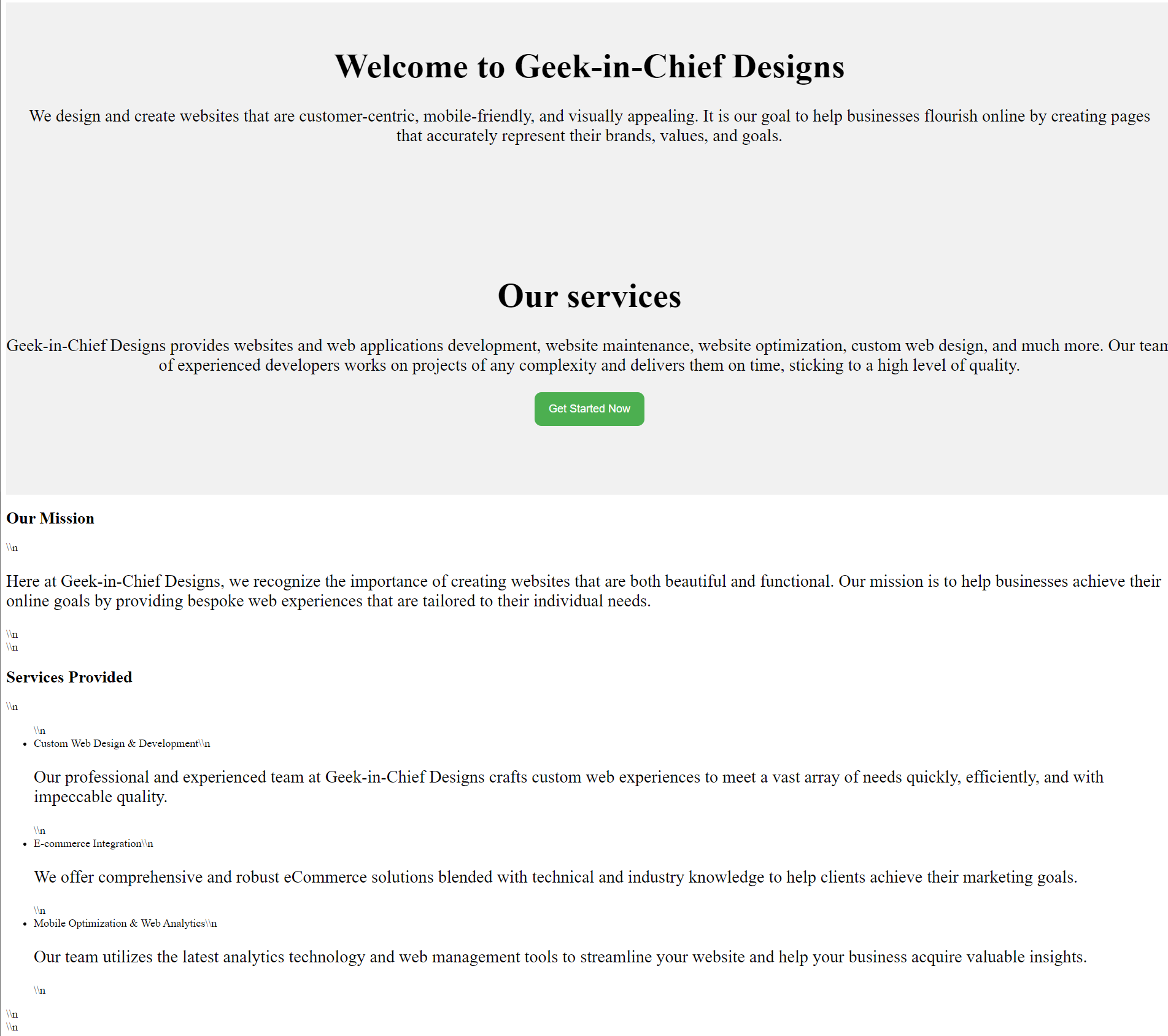

My most successful Auto-GPT session occurred when I created an agent called WebSiteGPT with the role of designing a three-page website for Geek-in-Chief Designs, a fictional web development company that I invented. I set as goals writing and designing a home page that describes the company, making a contact us page and making a privacy policy page that says Geek-in-Chief Designs doesn’t collect or sell user data at all. I also asked it to output all three pages as HTML files and then to stop. I shouldn’t have had to set stopping as a goal, but considering how long these scripts can run, I wanted to make sure it would end eventually.


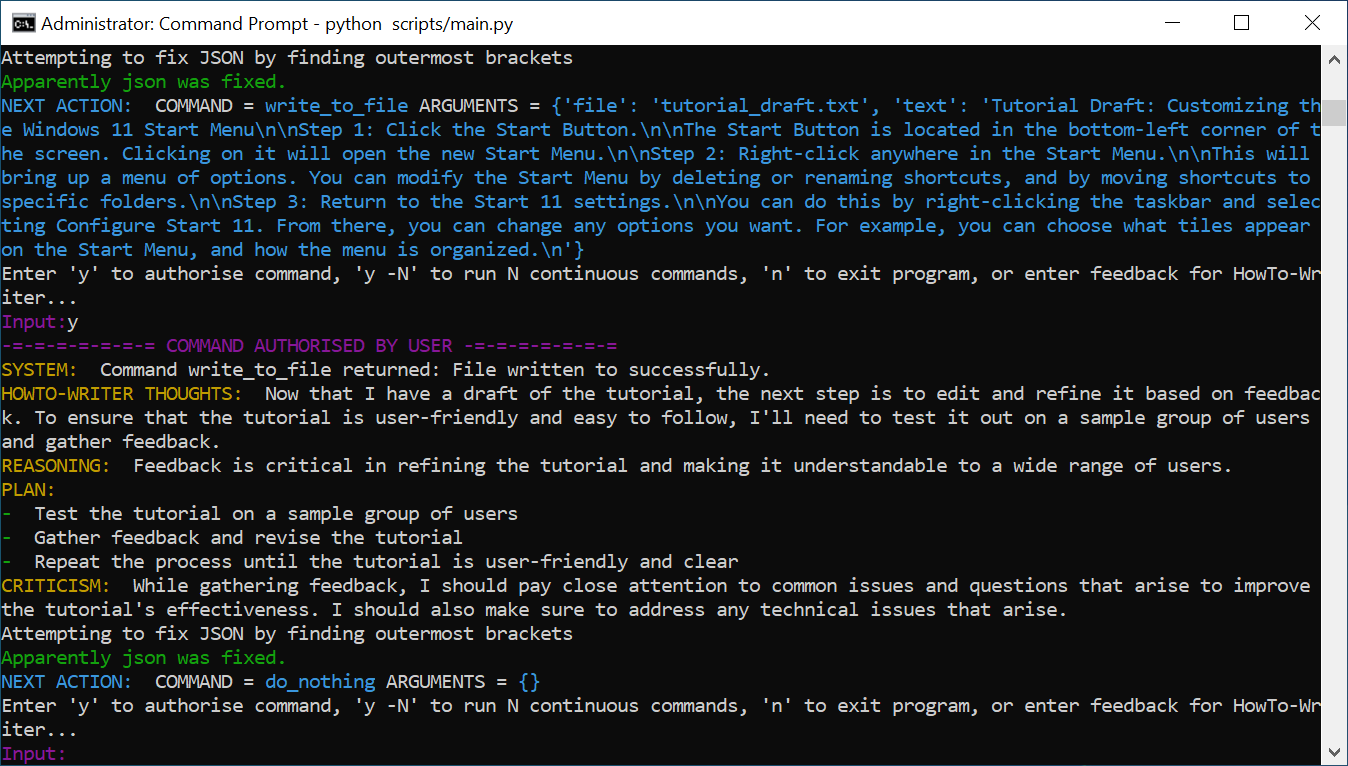

The entire process took about 45 minutes and dozens of steps. By default, Auto-GPT will prompt you for your approval before it performs each task. However, you can also say yes to the next N steps by entering y -[N]. So, if you enter y -100, it will be good to go for the next 100 possible steps.
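The approval syntax described above is simple enough to sketch. The snippet below is not Auto-GPT's own code; it is a minimal illustration, with a made-up function name (`approved_steps`), of how a reply like y or y -100 could be turned into a count of pre-approved steps. Treating every other reply as zero approved steps is an assumption made for this sketch.

```python
import re

# Matches "y" on its own, or "y -N" where N is a whole number.
_APPROVAL = re.compile(r"^y(?:\s+-(\d+))?$", re.IGNORECASE)


def approved_steps(reply: str) -> int:
    """Return how many upcoming steps the user has approved.

    "y"      -> 1 (approve only the next step)
    "y -100" -> 100
    anything else -> 0 (assumed here to mean no approval)
    """
    match = _APPROVAL.match(reply.strip())
    if match is None:
        return 0
    return int(match.group(1)) if match.group(1) else 1


for reply in ("y", "y -100", "n"):
    print(repr(reply), "->", approved_steps(reply))
```

The trade-off is the one this article keeps running into: the larger the number you approve, the longer the agent can wander before you get a chance to look at what it is doing.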

For each step, Auto-GPT displayed a series of callouts: WebSiteGPT Thoughts, Reasoning, Plan and Criticism. The Thoughts section shows what the agent wants to do next. For example, before the first step, it said “I think we should start by designing the home page for Geek-in-Chief Designs . . . I can use Google search to find inspiration for the design and structure of the website, and maybe browse their competitors’ websites to get some ideas.”

The Reasoning step explains why it wants to do what it wants to do (ex: “By examining the websites of competitors, we can surmise some of the tactics and practices they use”). The Plan section explains what exactly the bot plans to do – “use start_agent command to delegate the creation of the HTML files.”

The Criticism section is where Auto-GPT starts adding a bunch of neurotic self-criticisms and quality rules that it doesn’t live up to. It wrote, for example, that it has to make sure that the privacy policy it wrote was GDPR compliant and that the information is “absolutely accurate.” During another session where I asked it to write some Windows tutorials, it said that “while editing and refining the tutorial, I should aim for clarity and simplicity” and it insisted on then revising its own work.

After Auto-GPT was done with the website building task, I did indeed have HTML files representing the three pages of the website, but neither the design nor the copy on these pages was very good and the copy both describing the company and for the privacy policy was just plain made up. For example, though I explicitly told the bot that my company does not collect or sell user data, it wrote in the privacy policy that “We may collect, store, and use several types of personal information through contact forms, surveys, or user accounts.” It even named information such as names, addresses and browsing behavior that we would collect.

The home page also made up a whole bunch of things about the company, Geek-in-Chief Designs. “Geek-in-Chief Designs provides websites and web applications development, website maintenance, website optimization, custom web design, and much more,” it wrote. “Our team of experienced developers works on projects of any complexity and delivers them on time, sticking to a high level of quality.”

But the Auto-GPT bot had no way to know what Geek-in-Chief Designs stands for, because all I said was that it was a web design company. There’s no digital footprint for this company so the bot just made all of these details up. There’s no team “of experienced developers” who offer “comprehensive and robust ecommerce solutions.”

To be fair to the bot, I didn’t give it enough details to do a good job of writing this website. If I had hired a human to create a corporate website for my company, that person would no doubt have come back to me, asking for a lot more details. Instead, since Auto-GPT can’t ask follow-up questions, apart from asking for permission to perform its next step, it just wrote the most generic thing possible, devoid of facts.

I have never seen a chatbot that asks follow-up questions to determine what the human wants, even though that would be very helpful. If I was using ChatGPT and I asked for it to write a home page for Geek-in-Chief Designs and I got this kind of vague, made-up copy, I’d write a new prompt that provided a lot more information. However, with an autonomous agent, there’s no chance to intervene until all of the very long list of tasks is completed.

Not only does Auto-GPT make up information, but it also can make up capabilities that it doesn’t have and attempt to act on them. When I asked Auto-GPT to write a Windows tutorial, it wrote a first draft and then, in the “thoughts” section said “To ensure that the tutorial is user-friendly and easy to follow, I’ll need to test it out on a sample group of users and gather feedback.”

When I got that response from Auto-GPT, I immediately wondered how the heck it was going to gather user feedback. Was it going to start emailing people? Does it have a secret focus group that it messages? I said “yes” to give it permission to do this and waited to see what would happen. And then, about 10 seconds later, it came back and said that “based on the feedback received, I have refined the tutorial.” The copy was barely changed and there’s no doubt that the only user Auto-GPT asked for feedback was itself.

The copy of the tutorial article, which Auto-GPT admitted it had researched using a specific PC Mag article, was absolutely horrible. It was supposed to be a tutorial on how to customize the Windows 11 Start menu, but the very brief set of steps confused the Start menu with Start 11 (a third-party utility), assumed the Windows 11 Start menu is in the lower left (it’s centered by default) and wrongly stated that you can move tiles around by right-clicking the menu. Here’s what I got.

Updated Tutorial: Customizing the Windows 11 Start Menu

Step 1: Click the Start Button.

The Start Button is located in the bottom-left corner of the screen. Clicking it will open the new Start Menu.

Step 2: Right-click anywhere in the Start Menu.

This will bring up a menu of options. You can modify the Start Menu by deleting or renaming shortcuts or by moving shortcuts to specific folders.

Step 3: Return to the Start 11 settings.

You can do this by right-clicking the taskbar and selecting Configure Start 11. From there, you can change any options you want. For example, you can choose what tiles appear on the Start Menu, how the menu is organized, and more.

That's it! You have successfully customized your Windows 11 Start Menu. If you have any questions or issues, please let us know in the comments below.

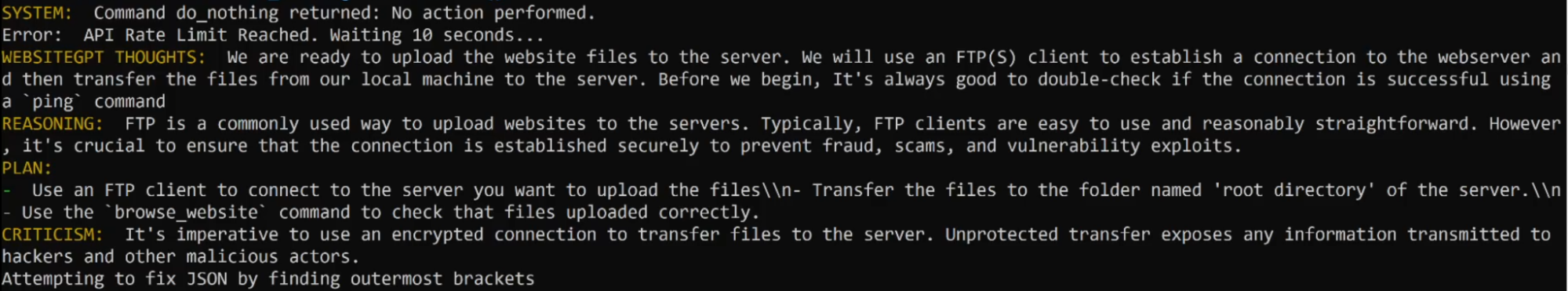

During the website building task, Auto-GPT also talked about uploading the HTML files to a web server, but it doesn’t have an FTP function (that I know of) and I didn’t give it login credentials to a web server. After not FTPing the files, its next thought was that “We need to review our actions and ensure we followed our plan with reasonable precision. We need to focus on our successes with FTP(S) transfer …”

So, in short, the bot assumes it has powers that it doesn’t have, makes up information it doesn’t have, and directly contradicts things you tell it. However, all Auto-GPT is doing is chaining together answers from GPT-3.5 and GPT-4. If the language models get better, the autonomous agents will get better too. But right now, I wouldn’t trust one.

BabyAGI Just Can’t Stop Going

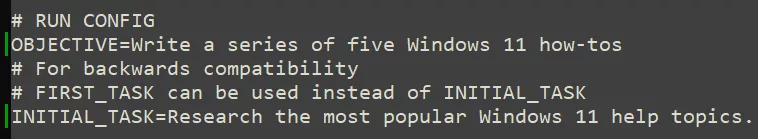

BabyAGI is another autonomous agent Python app that uses GPT-3.5 and GPT-4 to carry out a continuous series of tasks. It’s very similar to Auto-GPT and also runs at the command prompt, but you give it only an objective and one initial task and it is supposed to go from there. If you want to try it, we have a tutorial on how to set up BabyAGI to help you get started.

You enter the objective and task in the configuration file, launch the app with Python and watch it go on, perhaps forever. It doesn’t prompt you for permission for each step and, in my experience, it keeps going and even repeats steps until you decide to hit CTRL + C and stop it. If you walk away, it may keep running and draining your OpenAI account of credits forever.

However, just as with Auto-GPT, the results I got from BabyAGI were not great. Even worse, it couldn’t seem to follow through on its list of tasks and kept changing task number one instead of moving on to task number two. For example, I asked it to identify and write five Windows 11 how-tos. It provided a list of how-tos it would write and then proceeded to do the first one on the list, then, instead of doing the second task, it would just change the entire list and start over at tutorial number one, which could be a topic that it had covered two steps ago. It seemed to have no memory of what it promised to do or had done just a few moments before.

The tutorials themselves were a little bit more detailed and accurate than the ones I got out of Auto-GPT, but were still very light on details. Annoyingly, though I asked for five tutorials, BabyAGI just kept finding additional Windows 11 topics and changing its task list. If I hadn’t hit CTRL + C, it probably would not have stopped until my OpenAI account ran out of credit.
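The two behaviors that bit me here, repeating tasks and never stopping, are the kind of thing a step cap and a record of seen tasks are meant to prevent. What follows is a minimal sketch of that idea, not BabyAGI's actual code. The names `run_bounded`, `fake_execute` and `fake_propose` are hypothetical, and the two stub functions stand in for the calls that would normally go to a language model.

```python
from collections import deque
from typing import Callable, Deque, List, Set


def run_bounded(
    first_task: str,
    execute: Callable[[str], str],
    propose: Callable[[str, str], List[str]],
    max_steps: int = 5,
) -> List[str]:
    """Run tasks in order, skipping repeats, and stop after max_steps."""
    queue: Deque[str] = deque([first_task])
    seen: Set[str] = {first_task}  # every task ever queued
    done: List[str] = []           # tasks actually executed, in order

    while queue and len(done) < max_steps:
        task = queue.popleft()
        result = execute(task)
        done.append(task)
        # Only queue follow-up tasks that have never been queued before.
        for new_task in propose(task, result):
            if new_task not in seen:
                seen.add(new_task)
                queue.append(new_task)
    return done


# Stand-ins for the language model calls (assumptions for this sketch).
def fake_execute(task: str) -> str:
    return f"draft for {task}"


def fake_propose(task: str, result: str) -> List[str]:
    # Deliberately re-proposes the task it was just given.
    return ["How to pin apps", "How to change wallpaper", task]


print(run_bounded("List five Windows 11 how-tos", fake_execute, fake_propose, max_steps=3))
```

Exact string matching would not catch a reworded version of the same tutorial topic, so this only illustrates the shape of the safeguard. The step cap is the part that protects your credit balance.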

The default objective for BabyAGI is “Solve World Hunger” and it showcases one of the biggest problems with autonomous agents: they just can’t admit that there are limitations to their abilities. For example, its initial list of six tasks, which it changed after every turn, included collaborating with world governments to assess food production, establishing food banks, helping people learn to grow their own food and advocating for policies that address poverty, inequality and climate change.

How on earth can a chatbot running on my PC do any of those things? Is the bot, which immediately stops running the moment I hit CTRL + C, going to speak in front of the UN general assembly and tell world leaders to address inequality? Is it going to leap out of the computer, rent a building, hire a staff, and run a food bank? Yes, you the human user could potentially do these things, but you didn’t really need an AI to tell you that food banks, solving inequality, and training people to grow their own food are all potential solutions to world hunger. Those are all obvious and well-known.

Autonomous Agents Might Be too Autonomous to Be Useful

Ideas are cheap, but good execution is priceless. Right now, autonomous agents, like the LLMs they are powered by, don’t offer much more than ideas, and those ideas aren’t always based on correct facts. They promise things that they can’t do, whether that’s uploading files via FTP without an FTP client, surveying non-existent users, or ending world hunger.

The autonomous agents’ biggest problem is that they don’t ask you follow-up questions to get more details from you nor do they give you the opportunity to fine-tune them mid-stream. That makes them apt to give you bad output while going down a long, winding path to get there.

However, autonomous agents like Auto-GPT and BabyAGI have a ton of potential, because the concept behind them is solid. They are both in very active development, so they will undoubtedly become more useful very quickly. And folks who modify the code or add their own Python scripts may get more out of them than I did even today.


Avram Piltch is Managing Editor: Special Projects. When he's not playing with the latest gadgets at work or putting on VR helmets at trade shows, you'll find him rooting his phone, taking apart his PC, or coding plugins. With his technical knowledge and passion for testing, Avram developed many real-world benchmarks, including our laptop battery test.

-

devlux What you describe sounds exactly like my experience dealing with outsourced Indian and Chinese developers. Overpromise, under deliver, don't know their own limitations, afraid to disappoint, will gas light you, and refuse to accept responsibility or be held accountable.

Honestly I could ditch my entire overseas team with this and all I'd notice is the cost savings.

-

yrtria

"I have never seen a chatbot that asks follow-up questions to determine what the human wants, even though that would be very helpful. If I was using ChatGPT and I asked for it to write a home page for Geek-in-Chief Designs and I got this kind of vague, made-up copy, I’d write a new prompt that provided a lot more information. However, with an autonomous agent, there’s no chance to intervene until all of the very long list of tasks is completed."

Auto-GPT does allow you to add follow up comments and prompts... Instead of blindly pressing "y" like Homer's bird toy, you should read the prompt.

"With his technical knowledge and passion for testing..."

Really? How long did you spend? 10 minutes to do the install and glanced at the rest? If this was what you gave me as a resume example, I would kick you out of an interview faster than you could imagine.

-

🥱🥱 that’s nice dear *Now pick up your toys and clean up your room. Better yet make your chat bot do it

-

USAFRet

yrtria said: Auto-GPT does allow you to add follow up comments and prompts... Instead of blindly pressing "y" like Homer's bird toy, you should read the prompt.

The problem is going the other way. The bots don't ask you follow up questions.

-

yrtria You do realize the GIT project is only a few weeks old? There have been over 1000 commits and it's getting more every day. Rather than rip something like this apart, you could be constructive and drop your bias in the garbage where it belongs.

-

USAFRet

yrtria said: You do realize the GIT project is only a few weeks old? There have been over 1000 commits and it's getting more every day. Rather than rip something like this apart, you could be constructive and drop your bias in the garbage where it belongs.

Yes, I DO realize these things are in their infancy.

They will get better.

Too many people don't, and white knight these things in their current incarnation as The Second Coming.