BitTorrent's 'Project Maelstrom' Aims To Deliver All Websites Through Torrents

Few will disagree that the arrival of the torrent protocol on the Internet was a major event that forever changed how many people share files online. Torrents may have been used mostly for unauthorized sharing of paid content during the protocol's first decade, but they have also been adopted by individuals, organizations and companies as a way to cut bandwidth costs or share data more securely.

Not too long ago, BitTorrent launched BitTorrent Sync, which lets individuals securely sync or share files with other computers. Now, the company wants the whole web to be rebuilt around torrents.

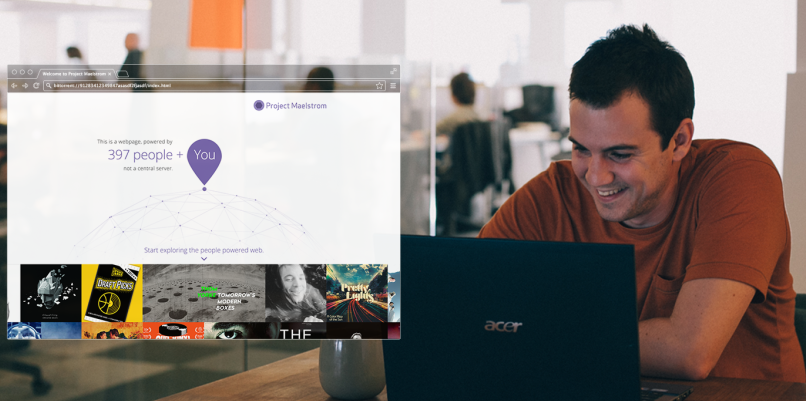

BitTorrent has announced an invite-only alpha of Project Maelstrom, a web browser that fetches websites from other peers instead of from a central server. Websites are delivered peer to peer (P2P) using the torrent protocol.

"It started with a simple question. What if more of the web worked the way BitTorrent does?" said Eric Klinker, CEO of BitTorrent, in a blog post. "Project Maelstrom begins to answer that question with our first public release of a web browser that can power a new way for web content to be published, accessed and consumed. Truly an Internet powered by people, one that lowers barriers and denies gatekeepers their grip on our future."

Downloading files through torrents tends to be faster if those files are popular, and therefore shared by more people. The opposite is true with files that don't have too many people sharing them at any given time. A torrent-based browser could face the same issue. However, if the majority of today's popular websites start supporting this browser, most people are unlikely to notice a difference, because a lot of folks tend to visit websites that are already quite popular.
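The popularity effect described above can be sketched with a deliberately simple model: a download can go no faster than your own connection, and no faster than the combined upload of the peers serving you. The function names, the kB/s units and the sample numbers below are illustrative assumptions, not measurements of Project Maelstrom.

```python
def effective_rate_kBps(download_cap_kBps: float,
                        seeders: int,
                        upload_per_seeder_kBps: float) -> float:
    """Toy model (assumption): the rate is capped by our own link and by the
    swarm's aggregate upload. Churn, choking and protocol overhead are ignored."""
    if seeders <= 0:
        return 0.0
    return min(download_cap_kBps, seeders * upload_per_seeder_kBps)


def fetch_time_s(size_kB: float, rate_kBps: float) -> float:
    """Seconds to fetch size_kB at rate_kBps; infinite if nobody is seeding."""
    if rate_kBps <= 0:
        return float("inf")
    return size_kB / rate_kBps


if __name__ == "__main__":
    page_kB = 2000.0  # hypothetical 2 MB page including all its assets
    for seeders in (1, 10, 100):
        rate = effective_rate_kBps(5000.0, seeders, 100.0)
        print(f"{seeders:>3} seeders: {rate:.0f} kB/s, {fetch_time_s(page_kB, rate):.1f} s")
```

With these made-up numbers, a single seeder makes the swarm the bottleneck, while a hundred seeders make the reader's own connection the bottleneck: popular sites would feel normal, obscure ones would not.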

Klinker believes that a torrent-based web could also be the technological solution that keeps the Internet neutral and minimizes, or even eliminates, gatekeepers. Further, because torrents can deliver data more efficiently by spreading the load across many peers instead of a single server, this approach could also ease congestion and other problems ISPs may be facing today due to heavy data demands.

The main questions that remain unanswered about this torrent-based browser are whether others could build compatible browsers for this "new torrent-based web" by adopting an open source standard that BitTorrent would release, and whether that standard would come with built-in encryption. Most torrents out there aren't encrypted, but the fact that BitTorrent Sync transfers are gives us some hope for built-in security.


If you want to remain up-to-date with this project, you can sign up and wait for an invitation.


Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.

-

dovah-chan Indeed, it is a novel concept, but it doesn't solve the congestion issues that North American ISPs suffer from. That is their fault for (purposefully) not properly maintaining their infrastructure and not providing enough, if any, customer service and support.

But still, even with BitTorrent being a peer-to-peer protocol, they don't seem to want to mention the existence of seedboxes. While seedboxes are not quite dedicated servers, they essentially act as one: they are usually the biggest seeders in a lot of torrents, hosting as well as seeding the file being shared. Also, if websites were peer to peer, it would take a long time for sites to load.

Think of it like this, using RAM as an analogy: GDDR is great for large, continuous transfers of data, but it doesn't have the snappy feel of DDR DRAM when many small programs are each moving small amounts of data. The same goes for the BitTorrent and HTTP protocols: it takes a noticeable amount of time for a torrent to get up to speed, but you can download a 213 KB JPEG in your browser in a snap.

This is due to the overhead of the TCP handshake. With HTTP, it's just you and a dedicated server. With BitTorrent, you have to connect to a larger number of peers before you begin receiving data from them.

Anyway, if this does get broad support, these issues could possibly be resolved, but companies are unwilling to simply give up the millions of dollars they have invested in their servers.
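The latency argument in the comment above can be expressed as network round trips before the first byte arrives. The sketch below counts them for a fresh, unencrypted HTTP/1.1 request and for a simplified BitTorrent fetch. It assumes peers are found through an HTTP tracker, that the tracker and the peer have the same round-trip time, and that the first peer unchokes us immediately; all of these are simplifications (real clients often use DHT or cached peers, and may wait much longer to be unchoked).

```python
def http_rtts() -> int:
    """Round trips before the first byte of a small HTTP/1.1 response over a
    fresh TCP connection (assumes no TLS and a cached DNS lookup)."""
    tcp_handshake = 1      # SYN -> SYN-ACK; the request rides after the final ACK
    request_response = 1   # GET -> first bytes of the response
    return tcp_handshake + request_response


def bittorrent_rtts() -> int:
    """Simplified round trips before the first block arrives from one peer
    (assumes an HTTP tracker and an immediate unchoke)."""
    tracker = 1 + 1          # TCP handshake + announce request/response
    peer_tcp = 1             # TCP handshake with the chosen peer
    bt_handshake = 1         # BitTorrent handshake exchange (bitfield follows it)
    interested_unchoke = 1   # 'interested' -> 'unchoke'
    request_piece = 1        # 'request' -> first 'piece' message
    return tracker + peer_tcp + bt_handshake + interested_unchoke + request_piece


def time_to_first_byte_ms(rtts: int, rtt_ms: float) -> float:
    """Latency floor implied by a round-trip count, ignoring bandwidth."""
    return rtts * rtt_ms


if __name__ == "__main__":
    rtt = 50.0  # hypothetical round-trip time in milliseconds
    print("HTTP:", time_to_first_byte_ms(http_rtts(), rtt), "ms")
    print("BitTorrent:", time_to_first_byte_ms(bittorrent_rtts(), rtt), "ms")
```

At that hypothetical 50 ms round trip, the counts work out to roughly 100 ms versus 300 ms before any data flows. Connecting to many peers in parallel helps throughput later, but it does not shorten this first chain of exchanges.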

jimbo007 Defeating Internet censorship through a free P2P web comes to mind as one application.

jdwii, replying to dovah-chan:

Very good explanation, dovah-chan; I couldn't explain it better myself. Perhaps they should let you work here?

Edit: If I were to add anything to your statement, it would be that upload speeds are still a problem here in the U.S. (not sure about the rest of the world).

If this is how the web worked, what if they were trying to pull information from an Exede-based (satellite) customer with high ping times? Not to mention, will this eat bandwidth from normal users, since we are all getting caps on our usage?

wavetrex What about dynamic content (which is pretty much 99.9% of the web today)?

The page that is delivered is customized to the user who is accessing it, and not "static" like the old pure HTML websites.

How would torrents solve this? Obviously, they won't...

Maybe they want to use torrents for larger stuff like pictures or videos, but again, instead of loading a picture almost instantly, you would have to wait until your host pings everyone else in the swarm and asks for the pieces.

Not really a good idea, to be honest; the company is daydreaming...
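wavetrex's objection follows from how torrents identify content: a torrent is named by a hash of what it contains (in BitTorrent v1, a SHA-1 "info hash" computed over metadata that includes the SHA-1 hash of every piece), so any change to the bytes produces a different torrent. The sketch below hashes whole pages with SHA-1 as a simplified stand-in for that scheme; the `render` function and the page template are hypothetical.

```python
import hashlib
from typing import Optional

# Hypothetical page template; {greeting} is the only per-user part.
TEMPLATE = "<html><body><h1>Front page</h1>{greeting}</body></html>"


def render(user: Optional[str]) -> bytes:
    """Return the page bytes; logged-in users get a personal greeting."""
    greeting = f"<p>Welcome back, {user}!</p>" if user else ""
    return TEMPLATE.format(greeting=greeting).encode("utf-8")


def content_id(page: bytes) -> str:
    """SHA-1 of the page bytes, standing in for a torrent's content hash."""
    return hashlib.sha1(page).hexdigest()


# Three anonymous visitors all ask for the same content ID, so they could
# share one swarm; three logged-in users each need a different one.
static_ids = {content_id(render(None)) for _ in range(3)}
personal_ids = {content_id(render(u)) for u in ("alice", "bob", "carol")}
print(len(static_ids), len(personal_ids))  # 1 3
```

A page that only one person ever requests has only one possible seeder, the site itself, so personalized responses would still have to come from a server; shared, static assets are where a torrent-based web could plausibly help.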

bit_user A distributed P2P web sounds great, but ISPs can and will keep it from ever getting far off the ground.

Dovah, it's possible to design a fully distributed system in which you aren't reliant on any single seed. The problem is reliability. As long as the content is popular enough, enough copies of every block will be spread among its viewers that they won't have much trouble assembling a complete copy. But it would only tend to work for short-lived, fairly popular content.
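bit_user's point about popularity can be quantified under a simple, admittedly idealized assumption: each of n peers independently holds each of k blocks with probability p. A given block then has at least one copy with probability 1 - (1 - p)^n, and a complete copy exists somewhere in the swarm with probability (1 - (1 - p)^n)^k. The independence assumption and the sample parameters are illustrative, not drawn from any real swarm.

```python
def prob_block_available(n_peers: int, p_hold: float) -> float:
    """Chance that at least one of n_peers holds a given block."""
    return 1.0 - (1.0 - p_hold) ** n_peers


def prob_complete_copy(n_peers: int, n_blocks: int, p_hold: float) -> float:
    """Chance that every one of n_blocks is held by at least one peer,
    assuming peers hold blocks independently with probability p_hold."""
    return prob_block_available(n_peers, p_hold) ** n_blocks


if __name__ == "__main__":
    # Hypothetical page split into 100 blocks; each peer caches 5% of them.
    for n in (10, 100, 300):
        print(f"{n:>3} peers: {prob_complete_copy(n, 100, 0.05):.3g}")
```

With these numbers the chance climbs from effectively zero at 10 peers to roughly even at 100 and near certainty at 300, which matches the comment's intuition that only popular content stays reliably available.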

How do you convince people to devote their HDD space & bandwidth to storing & serving random chunks of content, you ask? Much like bittorrent works today, peers would favor those who serve them more blocks. So, the more you store and seed, the better your download speeds will tend to be.
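The reciprocity bit_user describes is essentially BitTorrent's choking algorithm: a peer uploads to ("unchokes") the few peers that have recently uploaded to it fastest, plus one randomly chosen "optimistic" unchoke so newcomers get a chance to prove themselves. The sketch below shows only that selection step; the three regular slots, the rate dictionary and the function name are assumptions for illustration, and real clients repeat the choice on a timer and rank peers differently once they are seeding.

```python
import random
from typing import Dict, Optional, Set


def choose_unchoked(download_rates: Dict[str, float],
                    regular_slots: int = 3,
                    rng: Optional[random.Random] = None) -> Set[str]:
    """Pick which peers we upload to this round.

    download_rates maps peer ID -> how fast that peer recently uploaded to us.
    The top `regular_slots` peers are rewarded (tit-for-tat); one more peer is
    picked at random from the rest as an optimistic unchoke.
    """
    if rng is None:
        rng = random.Random()
    ranked = sorted(download_rates, key=lambda peer: download_rates[peer], reverse=True)
    unchoked = set(ranked[:regular_slots])
    others = ranked[regular_slots:]
    if others:
        unchoked.add(rng.choice(others))
    return unchoked


# Hypothetical recent upload rates (kB/s) that each peer gave us.
rates = {"a": 50.0, "b": 10.0, "c": 80.0, "d": 0.0, "e": 30.0}
print(sorted(choose_unchoked(rates, rng=random.Random(1))))
```

Peers "c", "a" and "e" are always served because they gave the most; "b" or "d" gets the optimistic slot. Storing and seeding more therefore tends to earn faster downloads in return.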

That said, I return to my original point: the ISPs are too powerful. Also, wavetrex has a good point about dynamic and personalized content.

-

Pinhedd, replying to bit_user:

Stop blaming it on the ISPs and start blaming it on the tools that want to create a square wheel just to rebel against the man.

Static files can be delivered wonderfully through the BitTorrent protocol because it doesn't matter where the data comes from, what order it arrives in, how long it takes, how much jitter there is, what the skew between sequential blocks is, etc.
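The point that static files don't care about ordering or origin comes from per-piece verification: a torrent's metadata lists a hash for every piece (SHA-1 in BitTorrent v1), so a client can accept pieces from anyone, in any order, and check each one on arrival. The sketch below shows that idea with an unrealistically small piece size; the function names are hypothetical.

```python
import hashlib
import random
from typing import Dict, List

PIECE_SIZE = 4  # bytes; tiny on purpose so the example stays readable


def make_piece_hashes(data: bytes, piece_size: int = PIECE_SIZE) -> List[bytes]:
    """Hash each fixed-size piece, as a torrent's metadata would list them."""
    return [hashlib.sha1(data[i:i + piece_size]).digest()
            for i in range(0, len(data), piece_size)]


def assemble(pieces: Dict[int, bytes], piece_hashes: List[bytes]) -> bytes:
    """Accept pieces in any order from any source, keep only those whose
    SHA-1 matches the expected hash, then join them by index."""
    verified = {i: p for i, p in pieces.items()
                if 0 <= i < len(piece_hashes)
                and hashlib.sha1(p).digest() == piece_hashes[i]}
    missing = [i for i in range(len(piece_hashes)) if i not in verified]
    if missing:
        raise ValueError(f"missing or corrupt pieces: {missing}")
    return b"".join(verified[i] for i in range(len(piece_hashes)))


original = b"<html>static page</html>"
hashes = make_piece_hashes(original)
arrivals = list(enumerate(original[i:i + PIECE_SIZE]
                          for i in range(0, len(original), PIECE_SIZE)))
random.Random(0).shuffle(arrivals)  # simulate pieces arriving out of order
print(assemble(dict(arrivals), hashes) == original)
```

Arrival order and source never enter the check, which is exactly why this works for static files and says nothing about timing.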

Dynamic or time-sensitive data, on the other hand, has never worked properly over BitTorrent and never will. Client developers have been integrating streaming into their clients for years, and none of those features work properly, because a stream requires data to arrive in a certain order and within a certain time window. Since a torrent client fetches blocks in a best-effort fashion, it can never stay steady long enough to calculate and fill a buffer.
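The streaming problem in the comment above can be illustrated with piece selection. BitTorrent clients commonly request the rarest pieces first, which keeps a swarm healthy but fills the file in a scattered order, while a media player can only use an unbroken run of pieces from the start. The sketch compares how much of that run exists after each piece arrives; the availability counts are made up, and ties are broken by index (real clients randomize them) so the output is reproducible.

```python
from typing import List


def rarest_first_order(availability: List[int]) -> List[int]:
    """Piece indices ordered by how many peers have them, fewest first.
    Ties are broken by index so the example is deterministic."""
    return sorted(range(len(availability)), key=lambda i: (availability[i], i))


def playable_prefix(received: List[int]) -> int:
    """How many pieces from the start (0, 1, 2, ...) are present without gaps."""
    have = set(received)
    n = 0
    while n in have:
        n += 1
    return n


# Hypothetical swarm: availability[i] = number of peers holding piece i.
availability = [5, 3, 8, 1, 4, 2, 7, 6]
rare = rarest_first_order(availability)
seq = list(range(len(availability)))
for got in range(1, len(availability) + 1):
    print(f"after {got} pieces: rarest-first playable={playable_prefix(rare[:got])}, "
          f"sequential playable={playable_prefix(seq[:got])}")
```

With these numbers, rarest-first has nothing playable until the fifth piece arrives and only two pieces' worth until the very last one, while sequential fetching is always fully playable. Fetching sequentially, in turn, gives up the swarm-health benefit of spreading rare pieces, which is the trade-off behind the comment's claim.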

This will also be a complete nightmare for synchronous and encrypted connections that expect data to follow a predictable route with minimal jitter and minimal packet loss.

A distributed P2P web sounds great only to coffee-shop hipsters who have no idea what's actually involved and have gleaned all of their insight from blog posts written by journalists who misunderstood something they read on Wikipedia or BuzzFeed. For those of us who actually know how things work, this joins the ranks of ridiculously stupid ideas alongside "solar roadways" and "thorium-powered cars".

In fact, there's already a very similar implementation of this available called Freenet. Unsurprisingly it works with only static content and is used primarily to distribute illegal material.