CPU-Based Deep Learning Breakthrough Could Ease Pressure on GPU Market

Deep learning company Deci says it can more than double CPU AI processing performance.

Israel-based deep learning and artificial intelligence (AI) specialist Deci announced this week that it achieved "breakthrough deep learning performance" using CPUs. GPUs have traditionally been the natural choice for deep learning and AI processing. However, with Deci's claimed 2x improvement delivered to cheaper CPU-only processing solutions, it looks like pressure on the GPU market could be eased.

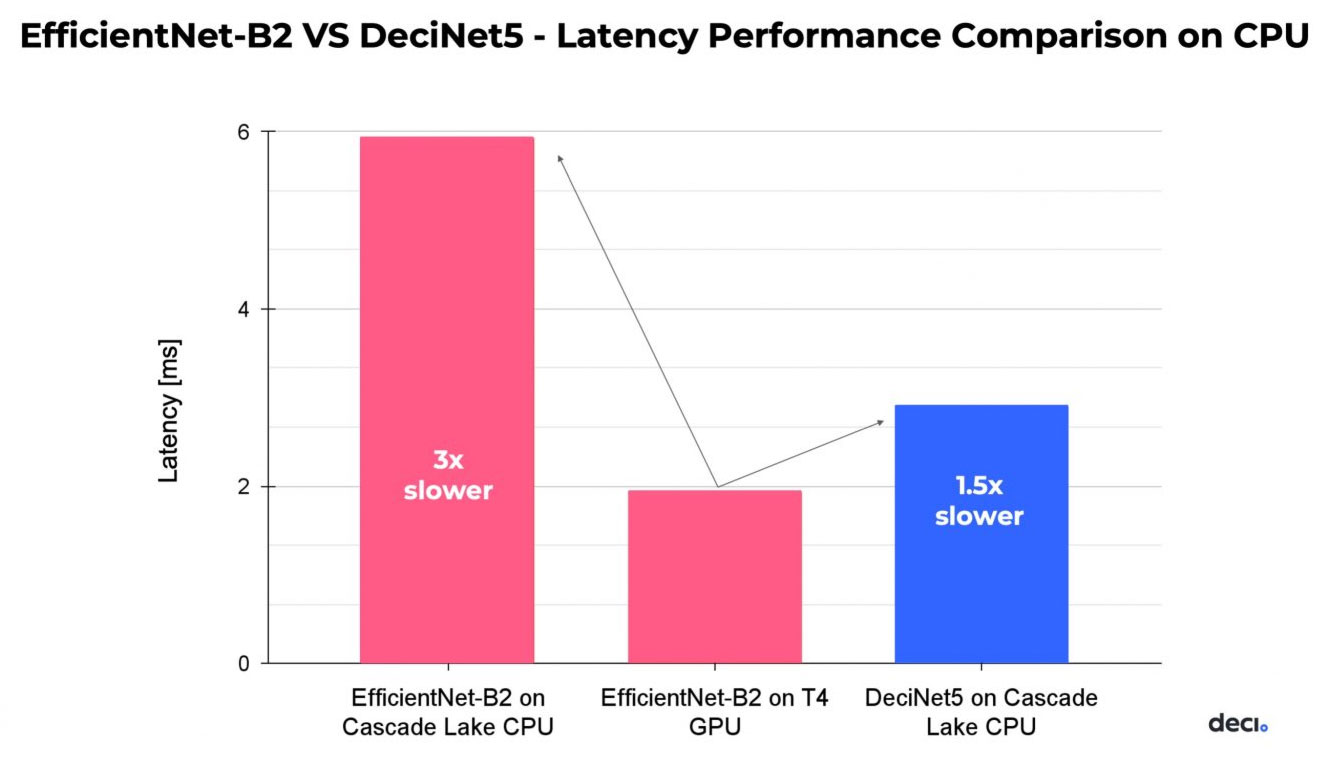

DeciNets are Deci's image classification models, currently optimized for Intel Cascade Lake CPUs. They are built with Deci's proprietary Automated Neural Architecture Construction (AutoNAC) technology, and Deci claims that on CPUs they run more than twice as fast as Google-developed EfficientNets while also being more accurate.

"As deep learning practitioners, our goal is not only to find the most accurate models, but to uncover the most resource-efficient models which work seamlessly in production – this combination of effectiveness and accuracy constitutes the 'holy grail' of deep learning," said Yonatan Geifman, co-founder and CEO of Deci. "AutoNAC creates the best computer vision models to date, and now, the new class of DeciNets can be applied and effectively run AI applications on CPUs."

Image classification and recognition, the task DeciNets address, is one of the major applications of deep learning and AI systems today. It underpins consumer-facing tech like Google Photos, Facebook, and driver assistance systems, and it is also critical for industrial, medical, governmental, and other applications.

If DeciNets can actually "close the gap significantly between GPU and CPU performance" for running convolutional neural networks, with better accuracy, on cheaper and more energy-efficient systems, we could see a major shift in the data center industry. A move by such a large industry away from expensive servers packed with multiple GPUs for AI processing could take pressure off the GPU market.

Notably, Deci has been working with Intel to optimize deep learning inference on Intel Architecture (IA) CPUs for nearly a year. Meanwhile, it says that multiple customers have already adopted its AutoNAC technology in production.

It is important to remember that companies like Deci are naturally full of optimism for their new technologies and this comes through in their news releases. However, while the performance and efficiency claims might be accurate, established industries have tremendous inertia, lengthening the time to move away from proven solutions.

In April 2021, we reported on a new algorithm, developed by Rice University's Brown School of Engineering, that could make CPUs perform even faster than GPUs in some deep learning applications. The scientists demonstrated their advances with a specialized use case. In comparison, Deci seems to have targeted a very much in-demand deep learning / AI processing task (image classification and recognition), so we should see broader interest from the tech industry.

Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech, from business and semiconductor design to products approaching the edge of reason.