New Algorithm Makes CPUs 15 Times Faster Than GPUs in Some AI Work

CPUs can beat GPUs in some AI workloads

GPUs are known for being significantly better than most CPUs at training AI deep neural networks (DNNs), simply because they have more execution units (or cores). But a new algorithm proposed by computer scientists from Rice University is claimed to turn the tables and make CPUs up to 15 times faster than some leading-edge GPUs.

The most complex compute challenges are usually solved using brute force methods: either throwing more hardware at them or inventing special-purpose hardware that can solve the task. DNN training is without any doubt among the most compute-intensive workloads nowadays, so programmers who want maximum training performance run their workloads on GPUs. This is largely because most DNN algorithms are based on matrix multiplications, which makes it easier to achieve high performance on compute GPUs.

Anshumali Shrivastava, an assistant professor of computer science at Rice's Brown School of Engineering, and his colleagues have presented an algorithm that can greatly speed up DNN training on modern AVX512 and AVX512_BF16-enabled CPUs.

"Companies are spending millions of dollars a week just to train and fine-tune their AI workloads," said Shrivastava in a conversation with TechXplore. "The whole industry is fixated on one kind of improvement — faster matrix multiplications. Everyone is looking at specialized hardware and architectures to push matrix multiplication. People are now even talking about having specialized hardware-software stacks for specific kinds of deep learning. Instead of taking a [computationally] expensive algorithm and throwing the whole world of system optimization at it, I'm saying, 'Let's revisit the algorithm.'"

To prove their point, the scientists took SLIDE (Sub-LInear Deep Learning Engine), a C++ OpenMP-based engine that combines smart randomized hashing algorithms with modest multi-core parallelism on CPU, and optimized it heavily for Intel processors that support AVX512 and AVX512_BF16.

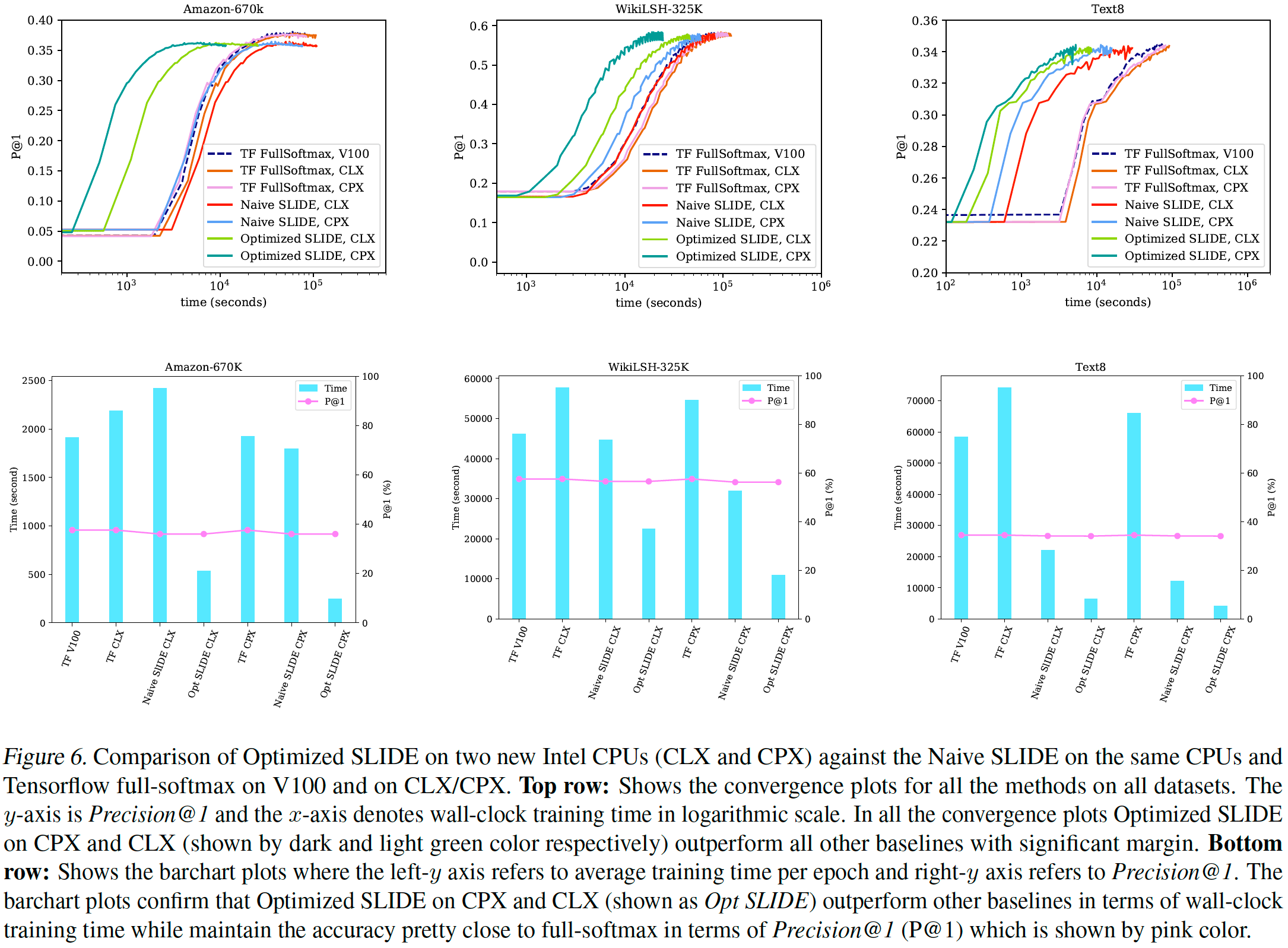

The engine employs Locality Sensitive Hashing (LSH) to adaptively identify neurons during each update, which reduces compute requirements. Even without modifications, it can train a 200-million-parameter neural network faster, in terms of wall clock time, than the optimized TensorFlow implementation on an Nvidia V100 GPU, according to the paper.
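To make the idea of picking neurons through a hash table more concrete, here is a minimal sketch in Python. It is not SLIDE's code: the article does not say which hash family the engine uses, so the sketch assumes SimHash (signed random projections), a single hash table, and hypothetical helper names such as `build_table` and `active_neurons`. The point is only that a lookup returns a small candidate set of neurons, so the layer computes a few dot products instead of all of them.

```python
import random
from collections import defaultdict

def make_planes(num_bits, dim, rng):
    # One random hyperplane per hash bit (SimHash is an assumption here).
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_bits)]

def simhash(vec, planes):
    # Each bit records which side of a hyperplane the vector falls on.
    code = 0
    for plane in planes:
        dot = sum(p * v for p, v in zip(plane, vec))
        code = (code << 1) | (1 if dot >= 0 else 0)
    return code

def build_table(weights, planes):
    # Bucket every neuron by the hash code of its weight vector.
    table = defaultdict(list)
    for neuron_id, w in enumerate(weights):
        table[simhash(w, planes)].append(neuron_id)
    return table

def active_neurons(x, table, planes):
    # Neurons sharing the input's bucket tend to have a large inner product with it.
    return table.get(simhash(x, planes), [])

def sparse_forward(x, weights, active):
    # Compute activations only for the selected neurons.
    return {j: sum(w * v for w, v in zip(weights[j], x)) for j in active}

rng = random.Random(0)
dim, num_neurons, num_bits = 16, 1000, 6
weights = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_neurons)]
planes = make_planes(num_bits, dim, rng)
table = build_table(weights, planes)

x = [rng.gauss(0.0, 1.0) for _ in range(dim)]
active = active_neurons(x, table, planes)
outputs = sparse_forward(x, weights, active)
print(f"computed {len(outputs)} of {num_neurons} neurons")
```

A real engine would typically use several tables and rebuild them as weights change during training; those details are left out of this sketch.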

"Hash table-based acceleration already outperforms GPU, but CPUs are also evolving," said study co-author Shabnam Daghaghi.

To make hashing faster, the researchers vectorized and quantized the algorithm so that it could be better handled by Intel's AVX512 and AVX512_BF16 engines. They also implemented some memory optimizations.
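For readers unfamiliar with bfloat16, the sketch below shows what quantizing a 32-bit float to that format involves. It is an illustration of the number format only, not the researchers' quantization scheme, which the article does not describe. The function names are hypothetical, and the code assumes finite inputs within float32 range (NaN handling is omitted). A bfloat16 value keeps the sign, the full 8-bit exponent, and the top 7 mantissa bits of a float32, so twice as many values fit into each 512-bit vector register.

```python
import struct

def float_to_bf16_bits(x):
    # Reinterpret the float32 encoding of x as a 32-bit unsigned integer.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Round to nearest, ties to even, before dropping the low 16 bits.
    rounding = 0x7FFF + ((bits >> 16) & 1)
    return ((bits + rounding) >> 16) & 0xFFFF

def bf16_bits_to_float(b):
    # Widening back to float32 just pads the mantissa with zeros.
    return struct.unpack("<f", struct.pack("<I", (b & 0xFFFF) << 16))[0]

def quantize(values):
    # Store a vector in half the memory of float32.
    return [float_to_bf16_bits(v) for v in values]

def dequantize(codes):
    return [bf16_bits_to_float(c) for c in codes]

weights = [1.0, -0.5, 3.14159, 1e-3]
restored = dequantize(quantize(weights))
for w, r in zip(weights, restored):
    print(f"{w!r} -> {r!r}")
```

The trade-off is precision: with only 8 significant bits, each value carries a relative error of up to roughly 0.4%, which DNN training often tolerates.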

"We leveraged [AVX512 and AVX512_BF16] CPU innovations to take SLIDE even further, showing that if you aren't fixated on matrix multiplications, you can leverage the power in modern CPUs and train AI models four to 15 times faster than the best specialized hardware alternative."

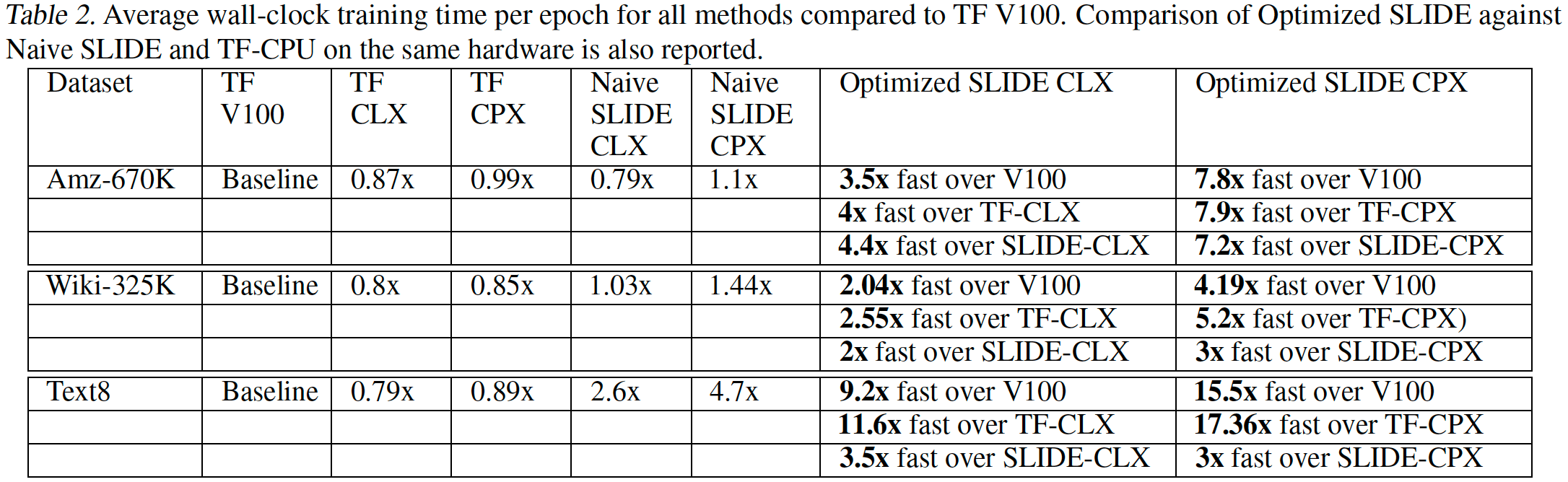

The results they obtained with Amazon-670K, WikiLSHTC-325K, and Text8 datasets are indeed very promising with the optimized SLIDE engine. Intel's Cooper Lake (CPX) processor can outperform Nvidia's Tesla V100 by about 7.8 times with Amazon-670K, by approximately 5.2 times with WikiLSHTC-325K, and by roughly 15.5 times with Text8. In fact, even an optimized Cascade Lake (CLX) processor can be 2.55–11.6 times faster than Nvidia's Tesla V100.

Without any doubt, optimized DNN algorithms for AVX512 and AVX512_BF16-enabled CPUs make a lot of sense: processors are pervasive, found in client devices, data center servers, and HPC machines alike. To that end, it is very important to take advantage of all of their capabilities.

But there might be a catch when it comes to absolute performance, so let's speculate for a moment. Nvidia's A100 promises to be 3–6 times faster in training than the Tesla V100 that the researchers used for comparison (perhaps because getting an A100 is hard). Unfortunately, we do not have any A100 numbers for the Amazon-670K, WikiLSHTC-325K, and Text8 datasets. Perhaps an A100 cannot beat Intel's Cooper Lake when the CPU uses an optimized algorithm, but these AVX512_BF16-enabled CPUs, much like the A100, are not exactly widely available. So, the question is, how does Nvidia's A100 stack up against Intel's Cascade Lake and Ice Lake CPUs?
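The speculation above can be turned into back-of-the-envelope arithmetic. The sketch below divides the Cooper Lake speedups over the V100 quoted earlier by the A100's promised 3–6 times gain. It rests on a big assumption that nobody has verified: that the A100's gain over the V100 would carry over unchanged to these three workloads. The results are hypothetical ranges, not measurements.

```python
# Cooper Lake speedup over Tesla V100, as quoted in the article.
cpx_vs_v100 = {"Amazon-670K": 7.8, "WikiLSHTC-325K": 5.2, "Text8": 15.5}

# Promised A100 training speedup over V100 (low, high), as quoted in the article.
a100_vs_v100 = (3.0, 6.0)

def cpx_vs_a100_range(cpx_speedup, a100_range):
    # Assumes the A100 gain applies uniformly to this workload (unverified).
    low_gain, high_gain = a100_range
    return cpx_speedup / high_gain, cpx_speedup / low_gain

estimates = {
    name: cpx_vs_a100_range(speedup, a100_vs_v100)
    for name, speedup in cpx_vs_v100.items()
}

for name, (worst, best) in estimates.items():
    print(f"{name}: Cooper Lake at {worst:.2f}x to {best:.2f}x the A100")
```

Under that assumption, Cooper Lake would stay ahead on Amazon-670K and Text8 across the whole range, while on WikiLSHTC-325K the upper end of the A100's promised gain would put the GPU slightly in front.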

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.