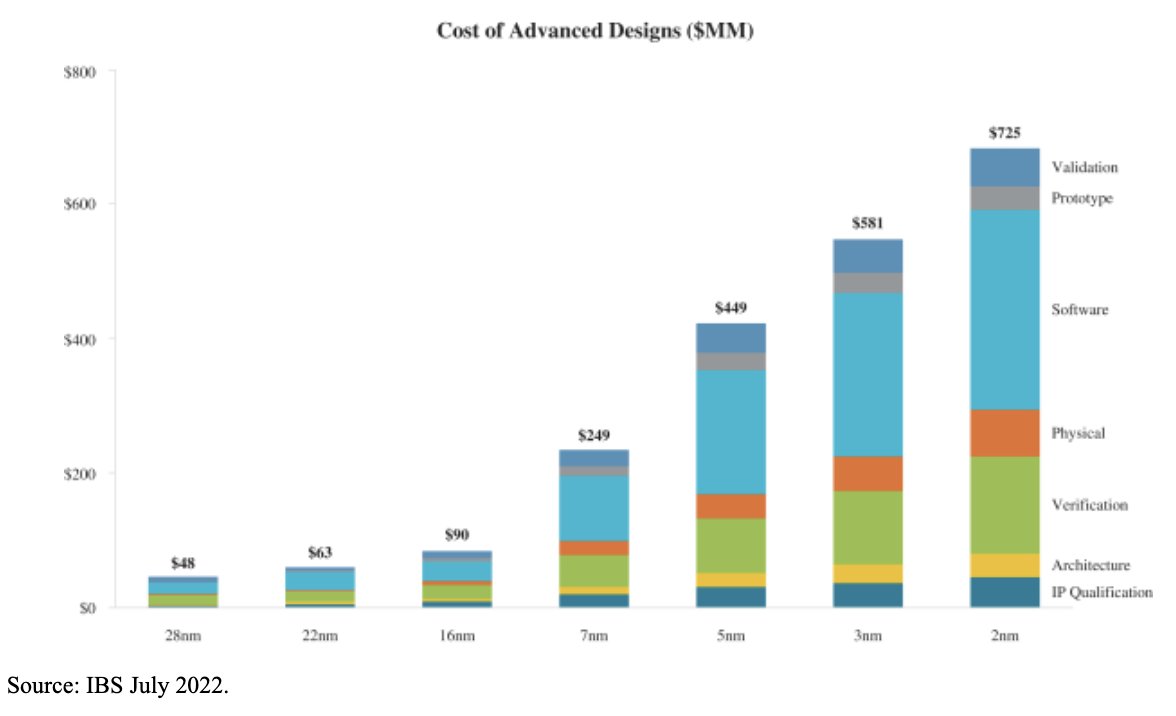

Firm Estimates a 2nm Chip Now Costs $725 Million to Design

At least, for a large chip.

Chip design costs began to skyrocket with the introduction of FinFET transistors back in 2014 and have climbed particularly steeply in recent years with 7nm and 5nm-class process technologies. International Business Strategies (IBS) recently published estimates for 2nm-class chip designs, and it turns out that developing a fairly large 2nm chip will cost about $725 million, according to a slide published by The Transcript.

Software development and verification account for the lion's share of chip design costs: approximately $314 million for software and about $154 million for verification.
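To put those figures in proportion, here is a minimal sketch of the arithmetic in Python. It uses only the three numbers quoted above; the variable names and the `share` helper are our own, and the "remainder" is simply whatever the total leaves over, not a category IBS itself reports.

```python
# Figures in millions of US dollars, as quoted from the IBS estimate.
TOTAL_COST = 725
SOFTWARE_COST = 314
VERIFICATION_COST = 154


def share(part, total):
    """Return `part` as a percentage of `total`."""
    return 100 * part / total


# Software and verification together, and everything else in the estimate.
combined = SOFTWARE_COST + VERIFICATION_COST
remainder = TOTAL_COST - combined

print(f"Software:                {share(SOFTWARE_COST, TOTAL_COST):.1f}%")
print(f"Verification:            {share(VERIFICATION_COST, TOTAL_COST):.1f}%")
print(f"Software + verification: {share(combined, TOTAL_COST):.1f}%")
print(f"Everything else:         ${remainder} million")
```

By this rough calculation, software and verification together come to $468 million, or a little under two-thirds of the total.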

While chip design costs are undeniably increasing, there is a major catch in the IBS estimates. They reflect the cost of developing a fairly large chip from scratch by a company that has no existing IP and has to build everything itself.

While there are startup companies that manage to develop huge designs (e.g., Graphcore), most of them develop something significantly smaller. Furthermore, startups tend to license whatever they can and therefore have to design and verify only their differentiating IP and then validate the whole design. These companies do not spend $725 million on a chip (or even a platform) simply because they do not have such resources.

Large companies that have the resources for extremely complex chips already have loads of IP and lines of code that work, so they do not have to spend $725 million on a single chip. Yet, they tend to spend hundreds of millions, or even billions, developing platforms. For example, when Nvidia develops its new product lineups (e.g., Ada Lovelace for gaming and Hopper for compute GPUs), it spends enormous amounts of money on microarchitectures and then on actual physical implementations of chips.

Another aspect of the estimates is that they presume traditional chip design methods, without AI-enabled electronic design automation tools and other software that significantly cut development time and costs. Still, these estimates emphasize the importance of AI-enabled tools by Ansys, Cadence, and Synopsys and imply that it will soon be close to impossible to build a leading-edge chip without software featuring artificial intelligence.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


Co BIY: Those AI-assisted development tools are undoubtedly essential and reduce development time, but I don't think they come cheap.