Glaze 1.0 Modifies Art to Block AI-Generated Imitations

The tool shifts pixels around to prevent artwork from being used as training data.

Just as text-based generative AI engines like Google SGE can plagiarize writers, image-generation tools like Stable Diffusion and Midjourney can swipe content from visual artists. Bots (or copycat humans) go out onto the open web, grab artists' images and use them as training data without the consent of, or compensation to, the humans who made them. Then, users can enter a prompt asking for a painting or illustration "in the style" of the original artist.

The taking of art as training data is already the subject of several lawsuits, with a group of artists currently suing Stability AI, DeviantArt and Midjourney. However, as we wait for the courts and the law to catch up, a group of researchers at the University of Chicago has developed Glaze. This open-source tool shifts pixels around on images, making them more difficult for AIs to ingest. Today, after several months in public beta, Glaze 1.0 has launched.

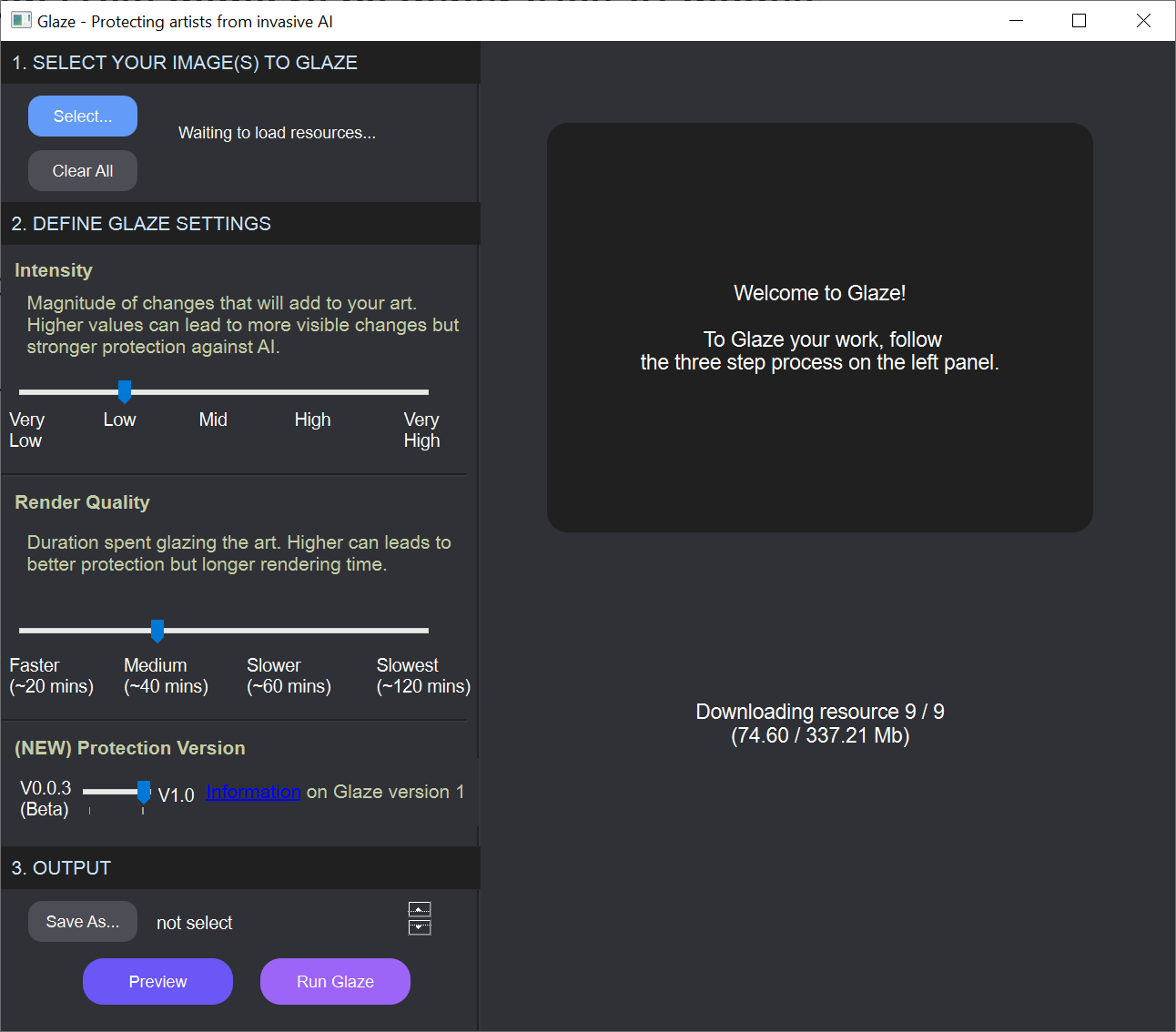

As of today, you can download Glaze 1.0 for free and run it on Windows or macOS (for Apple or Intel silicon). You can use it either with or without a discrete GPU. I downloaded the GPU version for Windows and tried it on three public-domain paintings by Claude Monet: the Japanese Footbridge, the Houses of Parliament and the Bridge at Argenteuil.

The UI is very straightforward: you select the images, choose an output folder for the modified copies and use sliders to set the intensity of the changes and the render quality.

Turning up the intensity makes the output image look more different from the original but offers better protection. Turning up the render quality increases the time it takes to complete the process.

The tool has a "Preview" button, but it doesn't actually work, giving a message that version 1.0 doesn't support previewing. So, to see how your images will appear, you have to generate them.

I found that at "Faster" rendering speeds, my desktop, which runs an RTX 3080 GPU and a Ryzen 9 5900X CPU, took about 90 seconds to "glaze" two images (45 seconds each). At the "Slowest" rendering speed, a single image took one minute and 40 seconds.
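For anyone planning to process a whole portfolio, those timings can be turned into a rough estimate. The sketch below is only back-of-the-envelope arithmetic: the per-image figures come from the test run above on one specific desktop, and the assumption that time scales linearly with the number of images is mine, not something Glaze documents. The function names are hypothetical.

```python
# Per-image timings in seconds, taken from the test run described above
# (RTX 3080 GPU, Ryzen 9 5900X CPU). Other hardware will differ.
SECONDS_PER_IMAGE = {"Faster": 45, "Slowest": 100}


def estimate_batch_seconds(num_images, render_quality):
    """Estimate total time, ASSUMING time grows linearly with image count."""
    return num_images * SECONDS_PER_IMAGE[render_quality]


def format_duration(seconds):
    """Render a number of seconds as 'M min S s'."""
    minutes, secs = divmod(seconds, 60)
    return f"{minutes} min {secs} s"


if __name__ == "__main__":
    for quality in SECONDS_PER_IMAGE:
        total = estimate_batch_seconds(50, quality)
        print(f"50 images at {quality}: about {format_duration(total)}")
```

Treat the result as a ballpark figure only; image resolution and the intensity setting may also affect run time.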


As part of the process, the tool also evaluates the strength of the protection it has generated to let you know whether it thinks your image has been modified enough to fool AIs. The first several times I modified Monet's "Japanese Footbridge," I received an error saying it wasn't protected enough (though it did output the images). When I increased the intensity to "Very High," I no longer got the error message.

Below you'll see the original Japanese Footbridge, followed by the Very High / Slowest version (best protection) and the Very Low / Fastest render (which generated an error for not being protective enough). The differences, even in the most protective version, are pretty subtle.

Original Japanese Footbridge

Very High / Slowest Glaze Protection

Low / Fastest Glaze protection

Below, you can see the other two Monet images, with each original followed by its Very High / Slowest version.

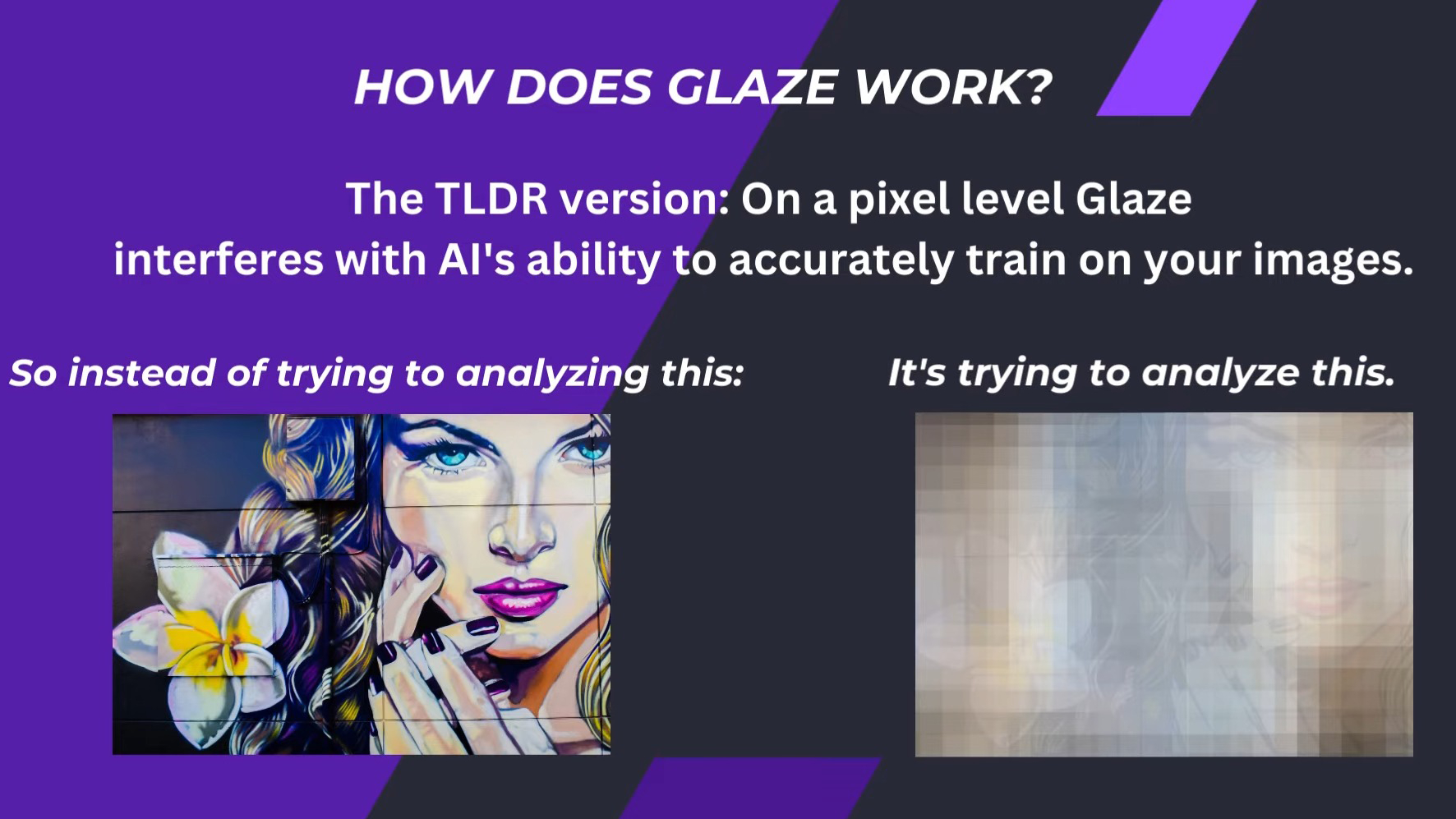

So how exactly does Glaze protect your images? The team behind the tool has made a helpful YouTube video that explains it in detail. However, the short answer is that, though the human eye sees these pixel shifts as subtle, the bots have a much harder time with them.
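To get a feel for what "subtle" means in pixel terms, here is a minimal Python sketch. It is not Glaze's algorithm: Glaze computes its changes deliberately, while this toy example just adds random noise capped at a small budget (`epsilon`, a name and value I chose for illustration) and then measures how far the result strays from the original. The synthetic "image" is a flat list of 8-bit grayscale values.

```python
import math
import random


def perturb(pixels, epsilon, seed=0):
    """Shift each 8-bit pixel by at most +/- epsilon (random stand-in, NOT Glaze)."""
    rng = random.Random(seed)
    return [min(255, max(0, p + rng.randint(-epsilon, epsilon))) for p in pixels]


def max_abs_diff(a, b):
    """Largest change applied to any single pixel."""
    return max(abs(x - y) for x, y in zip(a, b))


def psnr(a, b):
    """Peak signal-to-noise ratio in dB; higher means the images are closer."""
    mse = sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)
    return math.inf if mse == 0 else 10 * math.log10(255 ** 2 / mse)


# Synthetic 64x64 grayscale "image" used purely for illustration.
original = [(i * 37) % 256 for i in range(64 * 64)]
cloaked = perturb(original, epsilon=4)

if __name__ == "__main__":
    print("max per-pixel change:", max_abs_diff(original, cloaked))
    print("PSNR (dB):", round(psnr(original, cloaked), 1))
```

A small cap on the per-pixel change keeps the two versions close by these simple measures, which is the intuition behind a change that people barely notice. Whether a model is actually thrown off depends on how the changes are chosen, and this sketch does not attempt that part.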

I didn't get to test various drawing types, so some might look less authentic after modification than my Monet samples did. It's also clear that photographs are unlikely to be protected by Glaze because they don't have enough of a unique visual style to modify.

The result is that bots can train on the images, but they shouldn't accurately pick up on the artists' style and what makes it unique. For example, when trained on the Japanese Footbridge, an AI might make a similar bridge on request but not with the same brush strokes. I say "might" because I didn't have a reliable means of testing Glaze-outputted images as training data for this story, and, even if I did have one, it's possible that a different AI tool would do a better job of copying it.

Glaze's makers know that AI bots are evolving and that their solution will need to continue evolving. There's an arms race to protect artists' images, and, given that AI companies have lots of money and developers, they have the advantage.

However, Glaze is a good step forward for artists who have to show their work online but don't want it to be used to put them out of business.

Avram Piltch is Managing Editor: Special Projects. When he's not playing with the latest gadgets at work or putting on VR helmets at trade shows, you'll find him rooting his phone, taking apart his PC, or coding plugins. With his technical knowledge and passion for testing, Avram developed many real-world benchmarks, including our laptop battery test.


I'm not confident that creating a "cloaked" version of the original image is going to be foolproof against AI mimicry.

Although the cloaked artwork would appear identical and original to humans, the few changes made to certain artworks that have flat/dull/plain colours and smooth backgrounds, such as animation styles, are much more visible.

Human face pictures/photos have few distinct features, but art is much more complex, with an artistic style defined by numerous things, including brushstrokes, colour palettes, light and shadow as well as texture and positioning.

So in order to confuse AI tools and make sure they would not be able to read the artistic style and/or replicate it, we first need to isolate which parts of a piece of art are being highlighted as the key style indicators by AI art tools. I guess using a “fight fire with fire” approach is what these researchers are doing.

bit_user

Heh, of course the samples are on rather impressionistic artwork. Try one of these and I'll bet the protected version will look like garbage:

https://en.wikipedia.org/wiki/Realism_(arts)

Even for impressionistic style, I still think the zoomed version looks pretty bad.

Ultimately, it won't be much more than a bump in the road for AI image generators. Either they'll devise a filter to detect and try to undo the distortion, or they'll just skip the small percentage of artwork using it. It won't make much difference, in the end.

In my opinion, this is either a well-intentioned but ultimately rather futile effort, or maybe even an exploitative cash-grab. What it's not is a meaningful counterattack.

bit_user

Metal Messiah. said: Although the cloaked artwork would appear identical and original to humans,

No, they're quite visible. You need to click the zoom button and see at least a 1024x1024 version of the images. If you do, I'm sure you'll easily see the effect.

Metal Messiah. said: Human face pictures/photos have few distinct features, but art is much more complex, with an artistic style defined by numerous things, including brushstrokes, colour palettes, light and shadow as well as texture and positioning.

This is a good point. A lot of these cues can be gleaned from even the "protected" images.

cryoburner

This seems like snake oil. I highly doubt it would be effective. Even if it managed to somehow confuse today's AI training algorithms, tomorrow's might be able to see through it easily, at which point all the images you posted online using it will be unprotected. So you will have been going out of your way to present reduced-quality versions of your art that look worse without any real gain. And if you have ever posted any of your works online, those images detailing your style are already out there, with numerous copies stored across the Internet, and have probably already been used for training AI.

And really, an artist's threat from AI is less that someone will copy their exact style, but rather simply that anyone being able to produce a detailed image in a matter of seconds with a simple text prompt means that the demand for original works that one spent days or weeks creating will be diminished. That cat is already out of the bag, and attempts to hide a small portion of images from AI are not going to change it. And this doesn't just go for images, but also for written works, music, and eventually most other creative and communicative endeavors, especially those that don't require complex interactions with the physical world to produce, where AI will have a massive speed advantage.

InvalidError

cryoburner said: This seems like snake oil.

I wouldn't go that far. If the goal is to stop AI from mimicking an artist's original style, this will work in teaching AIs the AI-modified style instead of the original art as long as the artist doesn't publish the unmodified original style.

In other words, to protect your original style, you basically cannot publish it anywhere and as far as everyone else knows, your style is the AI-modified stuff.

setx

bit_user said: No, they're quite visible. You need to click the zoom button and see at least a 1024x1024 version of the images. If you do, I'm sure you'll easily see the effect.

I didn't even need to zoom in – in-article images are enough to see the ugly distortion. It looks like... something AI-generated? lol

Anyone who really wants to protect their style had better not show the images to anyone else.

cryoburner

InvalidError said: I wouldn't go that far. If the goal is to stop AI from mimicking an artist's original style, this will work in teaching AIs the AI-modified style instead of the original art as long as the artist doesn't publish the unmodified original style. In other words, to protect your original style, you basically cannot publish it anywhere and as far as everyone else knows, your style is the AI-modified stuff.

Except realistically, pretty much everyone who might use this has uploaded lots of their art already, and AI has probably already trained on it, or will train on cached copies in the future. And if the artists were to never post their original style anywhere, and only posted the distorted style, then what difference would it make if the AI copied their distorted style? The stuff they are posting wouldn't be any better. And if they make undistorted copies of their art available anywhere, it will likely get uploaded by someone else.

The modified works also look kind of bad up close, a bit like they're coated in wet wrinkled saran wrap. If an artist is already at a disadvantage competing against AI, it's probably not going to be to their advantage to pass their art through a filter that makes it look worse. They might as well just compress it as a 50% quality JPEG and call it a day. And if disfiguring one's artwork like this became common, it probably wouldn't be hard for the AI to recognize and filter it out.

And once more, even if it were to stop AI from cloning a person's style, it's not likely to make their art any more valuable, and they will still be competing against technology that can produce detailed artworks in almost no time and at virtually zero cost. And if you have a popular style, other people are bound to produce similar works, and the AI will just train off them instead.

InvalidError

IamNotChatGpt said: Solution to a problem that doesn't exist.

The problem of AI copying artists' style does exist. The "solution" of only publishing AI-modified artwork on the other hand is questionable since the "protection" goes away the instant the unmodified originals are made public and you are only known for your anti-AI-altered style until then, which kind of defeats the point.

IamNotChatGpt

InvalidError said: The problem of AI copying artists' style does exist. The "solution" of only publishing AI-modified artwork on the other hand is questionable since the "protection" goes away the instant the unmodified originals are made public and you are only known for your anti-AI-altered style until then, which kind of defeats the point.

There is no problem since copying other artists' style is absolutely normal. This is how we learn literally anything from day 0.

If you post something publicly, then you must expect web crawling.

This becomes very different the moment we talk about closed spaces, for example training off of private coding repositories, closed artist spheres, pirated media etc.