Google's AutoML AI Won't Destroy The World (Yet)

A popular concept in science fiction is the singularity, a moment of explosive, accelerating growth in technology and artificial intelligence that rewrites the world. One of the better explanations of how this could happen comes from the British sci-fi author Charles Stross, who describes “a hard take-off singularity in which a human-equivalent AI rapidly bootstraps itself to de-facto god-hood.”

To translate: If an AI is capable of improving (“bootstrapping”) itself, or of building another, smarter AI, then that next version can do the same, and soon you have exponential growth. In theory, this could lead to a system rapidly surpassing human intelligence and, if you’re in a Stross novel, probably a computer that’s going to start eating people’s brains.
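The “exponential growth” step can be written down as a toy model. Assume, purely for illustration, that every generation multiplies its predecessor's capability $C$ by the same factor $1 + r$. This tidy rule and its symbols are ours, not Stross's, and real self-improvement (if it ever happens) need not follow it:

$$C_{n+1} = (1 + r)\,C_n \quad\Longrightarrow\quad C_n = (1 + r)^n\,C_0$$

Even a modest 10 percent gain per generation ($r = 0.1$) compounds quickly: $(1.1)^{10} \approx 2.59$, and $(1.1)^{50} \approx 117$.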

The singularity still seems to be a long way off (at least until we find a way past the limits of Moore’s Law), but at Google I/O, Google CEO Sundar Pichai gave us a glimpse of our future robot overlords.

Lifelong Learning

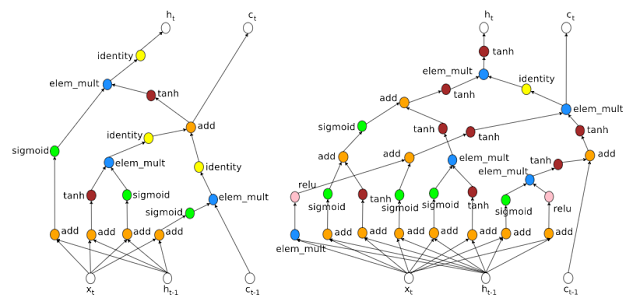

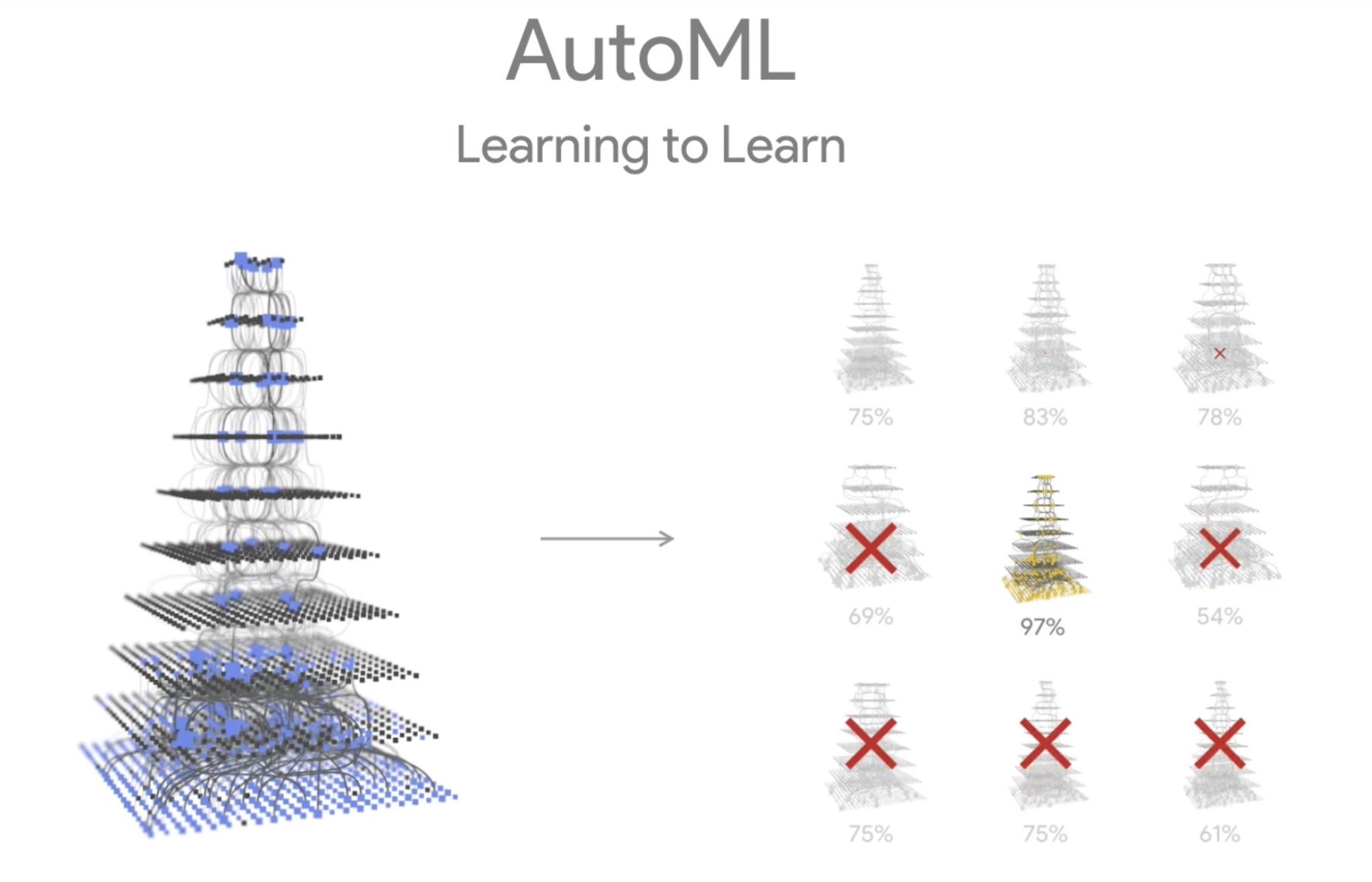

The new technology is called AutoML, and it uses a machine learning (ML) system to make other machine learning systems faster or more efficient. Essentially, it’s a program that teaches other programs how to learn, without actually teaching them any specific skills (it’s the liberal arts college of algorithms).

AutoML comes from the Google Brain division (not to be confused with DeepMind, the other Google AI project). Whereas DeepMind is more focused on general-purpose AI that can adapt to new tasks and situations, Google Brain is focused on deep learning, which is all about specializing and excelling in narrowly defined tasks.

According to Google, AutoML has already been used to design neural networks for speech and image recognition. (Fun fact: The networks that accomplish these two tasks are usually nearly identical. Images are typically analyzed by looking for repeating patterns in pixels, and speech is analyzed by turning sound into a spectrogram, a graph of frequency over time that can be analyzed the same way as an image.) The image recognition algorithms designed by AutoML were as good as those designed by humans, and the speech recognition algorithms were, as of February 2017, “0.09 percent better and 1.05x faster than the previous state-of-the-art model.”
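To make the “graph of frequency over time” idea concrete, here is a minimal pure-Python sketch of a spectrogram: the audio is chopped into short overlapping frames, and each frame is converted into the strength of each frequency using a naive discrete Fourier transform. The function names, frame size, hop length, and Hann window are illustrative choices of ours, not details of Google's speech models; real systems use a fast Fourier transform and usually a mel-scaled, log-compressed variant.

```python
import cmath
import math


def dft_magnitudes(frame):
    """Strength of each frequency bin 0..N/2 in one frame (naive O(N^2) DFT)."""
    n = len(frame)
    return [
        abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
        for k in range(n // 2 + 1)
    ]


def spectrogram(signal, frame_size=64, hop=32):
    """Chop audio into overlapping frames and measure each frame's frequencies.

    Returns one row per time step and one column per frequency bin,
    i.e. an image-like grid of frequency over time.
    """
    # Hann window: tapers each frame's edges to reduce leakage between bins
    window = [0.5 * (1 - math.cos(2 * math.pi * i / (frame_size - 1)))
              for i in range(frame_size)]
    rows = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = signal[start:start + frame_size]
        rows.append(dft_magnitudes([x * w for x, w in zip(frame, window)]))
    return rows


if __name__ == "__main__":
    sample_rate = 8000
    # 0.1 seconds of a pure 1 kHz tone
    tone = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(800)]
    grid = spectrogram(tone)
    print(len(grid), len(grid[0]))  # 24 33 (time steps, frequency bins)
    print([max(range(len(row)), key=row.__getitem__) for row in grid][:3])  # [8, 8, 8]
```

With 64-sample frames at 8 kHz, each bin spans 8000 / 64 = 125 Hz, so the 1 kHz tone peaks in bin 8 in every row: a single steady line once the grid is drawn as an image.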

Using AI to build machine learning systems has been a hot area of research since 2016, as researchers at Google, UC Berkeley, OpenAI, and MIT have worked to reduce the time needed to set up and test new neural architectures.

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.

Google’s plan is not to bring about the AI apocalypse, but to lower the barrier to entry for companies interested in machine learning research or products. Rather than “automated” or “self-reinforcing,” Google’s AutoML software might be better described as “self-assembling” or “self-optimizing” (the term Berkeley researchers used for their similar algorithm).

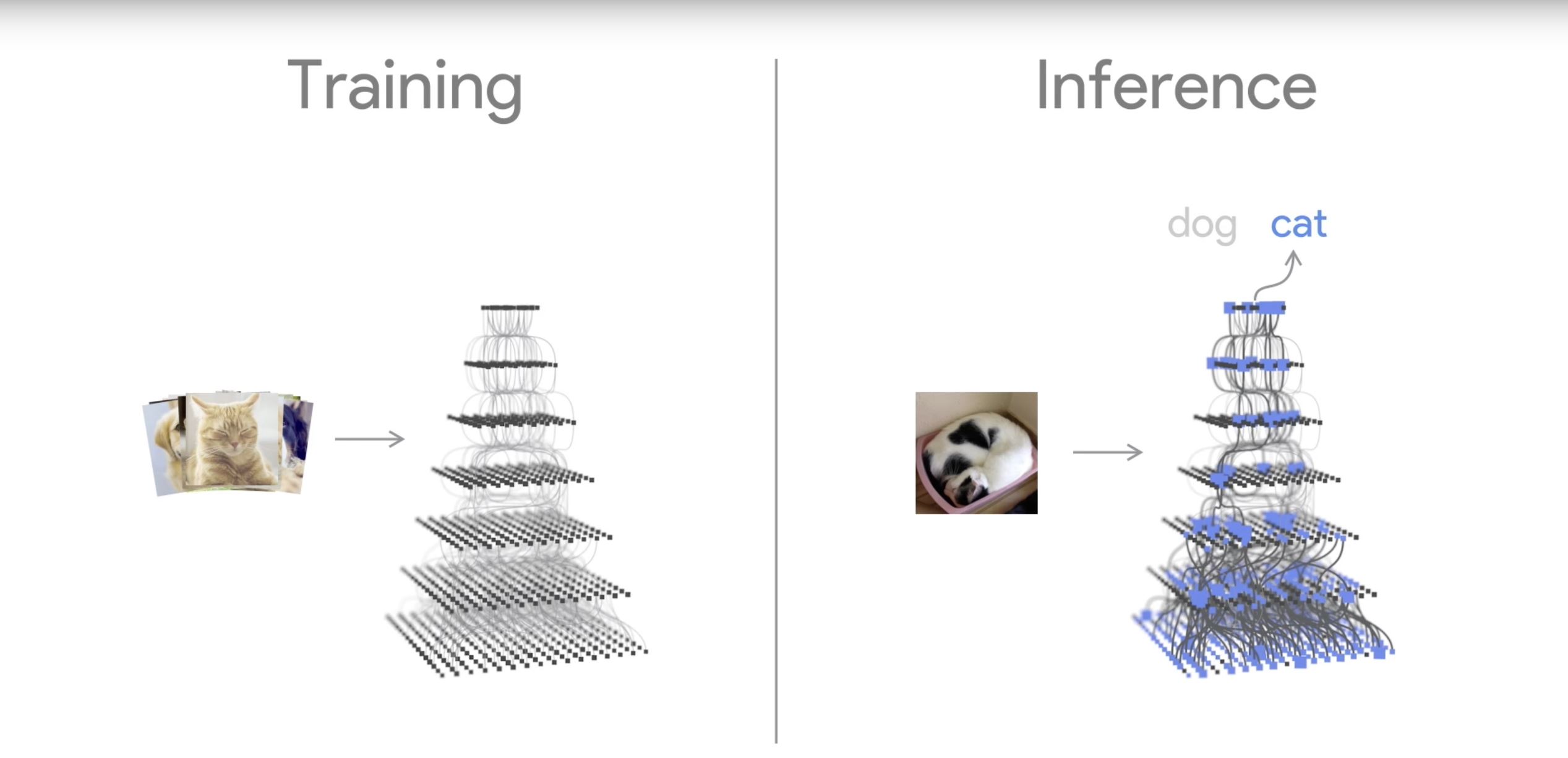

No, That's A Civet

Traditional machine learning takes two main approaches. In the first, a computer is fed thousands of labeled pieces of data (say, photos of a cat and photos not of a cat), and eventually it builds a system to differentiate “cat” from “not a cat.” This system may be unique, and we won’t necessarily be able to understand exactly how it works, but in the end it’ll reliably identify a tabby.
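The first approach, supervised learning, can be sketched with the simplest possible learner, a perceptron. This is a minimal illustration, not how Google or any modern image model works: the two numeric “features” per photo (think of them as hypothetical scores, such as how pointy the ears are) are made up, and real systems learn from raw pixels with deep neural networks. The loop still shows the core idea: the model guesses, compares its guess with the label, and nudges its weights only when it is wrong.

```python
# Supervised learning in miniature: a perceptron separating "cat" from "not a cat".
# Each example is (features, label); the two features are hypothetical scores.

def train_perceptron(examples, epochs=20, lr=1.0):
    n_features = len(examples[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)  # -1, 0 or +1
            # Only a wrong guess moves the weights, toward the correct answer
            weights = [w + lr * error * x for w, x in zip(weights, features)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, features):
    score = sum(w * x for w, x in zip(weights, features)) + bias
    return 1 if score > 0 else 0


if __name__ == "__main__":
    labeled = [
        ((0.9, 0.8), 1), ((0.1, 0.2), 0),
        ((0.8, 0.9), 1), ((0.2, 0.1), 0),
        ((0.7, 0.95), 1), ((0.3, 0.15), 0),
    ]
    w, b = train_perceptron(labeled)
    print([predict(w, b, f) for f, _ in labeled])  # [1, 0, 1, 0, 1, 0]
    print(predict(w, b, (0.85, 0.85)), predict(w, b, (0.15, 0.15)))  # 1 0
```

On this linearly separable toy data the perceptron settles within a couple of passes, and the learned rule also labels new, unseen points correctly.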

The other method is used to train computers to solve more open-ended problems, like finding an efficient way to walk or escaping a virtual maze. The computer is given a set of parameters to work within, as well as a failure condition, and is then set loose to experiment. At first it works at random, but as it rules out more and more failed attempts, it zeroes in on a solution. A combination of these two methods is how AlphaGo learned to play the board game Go.
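The trial-and-error approach is usually called reinforcement learning. Below is a minimal sketch of one classic algorithm, tabular Q-learning, on a made-up one-dimensional “maze”: seven cells in a row, with a pit (the failure condition) at one end and the exit at the other. The layout, rewards, and settings (`alpha`, `gamma`, `epsilon`) are illustrative assumptions, not anything from Google or from AlphaGo, which paired learning with deep networks and tree search. Early episodes are essentially random wandering; as falls into the pit and successful escapes are scored, the table of values steers the agent toward the exit.

```python
import random

# A one-dimensional "maze": cells 0..6, the agent starts in cell 3.
# Cell 0 is a pit (the failure condition) and cell 6 is the exit.
N_CELLS, START, PIT, EXIT = 7, 3, 0, 6
MOVES = (-1, +1)  # action 0 = step left, action 1 = step right


def step(cell, action):
    """Apply one move; return (next_cell, reward, episode_over)."""
    nxt = cell + MOVES[action]
    if nxt == PIT:
        return nxt, -1.0, True
    if nxt == EXIT:
        return nxt, 1.0, True
    return nxt, 0.0, False


def best_action(q_row, rng):
    """Pick the highest-valued action, breaking ties at random."""
    top = max(q_row)
    return rng.choice([a for a, v in enumerate(q_row) if v == top])


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_CELLS)]  # q[cell][action]
    for _ in range(episodes):
        cell, over = START, False
        while not over:
            # Mostly exploit what has worked so far, sometimes try something random
            if rng.random() < epsilon:
                action = rng.randrange(len(MOVES))
            else:
                action = best_action(q[cell], rng)
            nxt, reward, over = step(cell, action)
            # Move the estimate toward reward + discounted value of the next cell
            target = reward if over else reward + gamma * max(q[nxt])
            q[cell][action] += alpha * (target - q[cell][action])
            cell = nxt
    return q


def greedy_route(q, max_steps=20):
    """Follow the learned values from the start without any exploration."""
    cell, route = START, [START]
    for _ in range(max_steps):
        action = max(range(len(MOVES)), key=lambda a: q[cell][a])
        cell, _, over = step(cell, action)
        route.append(cell)
        if over:
            break
    return route


if __name__ == "__main__":
    print(greedy_route(q_learning()))  # [3, 4, 5, 6]
```

Ties between equally valued moves are broken at random during training, so the agent does not always default to stepping left before it has learned anything.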

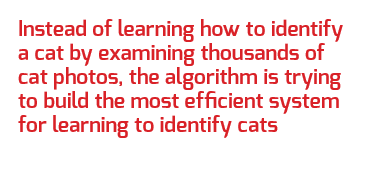

Automated machine learning is just one more layer of abstraction. Instead of learning how to identify a cat by examining thousands of cat photos, the algorithm is trying to build the most efficient system for learning to identify cats.
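That extra layer can be shown in miniature: an outer loop proposes candidate designs, an inner loop trains each one, and the design that scores best on held-out data wins. Google's approach reportedly used a neural network controller trained with reinforcement learning to propose architectures; this sketch swaps in plain exhaustive (grid) search over a tiny, hypothetical design space, namely which transformation of the input to feed a one-threshold classifier. The toy data, with positive examples in the middle of a line and negatives on both sides, is made up so that only some designs can possibly succeed.

```python
# Toy "automated model design": try several candidate designs, train each,
# and keep whichever scores best on validation data it never trained on.

def fit_stump(values, labels):
    """Inner loop: train a one-threshold classifier on a list of numbers.

    Tries every midpoint between neighboring values, in both directions,
    and returns (threshold, predict_one_below) with the best training accuracy.
    """
    points = sorted(set(values))
    best_acc, best_rule = -1.0, (0.0, True)
    for lo, hi in zip(points, points[1:]):
        threshold = (lo + hi) / 2
        for below in (True, False):
            hits = sum(((v < threshold) == below) == bool(y)
                       for v, y in zip(values, labels))
            if hits / len(labels) > best_acc:
                best_acc, best_rule = hits / len(labels), (threshold, below)
    return best_rule


def accuracy(transform, rule, xs, ys):
    threshold, below = rule
    hits = sum(((transform(x) < threshold) == below) == bool(y)
               for x, y in zip(xs, ys))
    return hits / len(ys)


# Hypothetical design space: which transformation of the raw input to use.
DESIGNS = {
    "identity": lambda x: x,
    "cube": lambda x: x ** 3,
    "abs": abs,
    "square": lambda x: x * x,
}


def search_designs(train, valid):
    """Outer loop: train every candidate design and score it on held-out data."""
    (train_x, train_y), (valid_x, valid_y) = train, valid
    scores = {}
    for name, transform in DESIGNS.items():
        rule = fit_stump([transform(x) for x in train_x], train_y)
        scores[name] = accuracy(transform, rule, valid_x, valid_y)
    best = max(scores, key=scores.get)
    return best, scores


if __name__ == "__main__":
    # Label 1 in the middle of the line (|x| < 0.5), 0 toward both ends
    train_x = [i / 10 for i in range(-10, 11) if abs(i) != 5]
    train_y = [1 if abs(x) < 0.5 else 0 for x in train_x]
    valid_x = [(i + 0.5) / 10 for i in range(-10, 10)]
    valid_y = [1 if abs(x) < 0.5 else 0 for x in valid_x]
    best, scores = search_designs((train_x, train_y), (valid_x, valid_y))
    print(best, scores)
```

Only the designs that fold both ends of the line together (`abs` or `square`) can reach perfect validation accuracy; no single threshold on `identity` or `cube` can, which is a toy version of a poorly designed model that never catches up however long it trains.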

This has a few major benefits. Deep learning systems need to be tuned to the inputs they will be analyzing. Although any machine learning system improves with training, a poorly designed algorithm may never be as fast or as accurate as a well-designed one, and designing an optimized algorithm is hard. Some programmers swear they work by intuition, but whatever the method, tuning and refining an algorithm takes time and expertise, which is exactly the work AutoML aims to automate. A well-designed algorithm may also be quicker to train, needing less time and data before it’s proficient at a task.
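The point about training speed shows up even with a one-number model. The sketch below runs gradient descent on a made-up loss, `(w - 3) ** 2`, and counts how many updates each learning rate needs to get within 0.001 of the best value. The loss, starting point, learning rates, and the function name `steps_to_converge` are arbitrary illustrative choices; the learning rate simply stands in for the many design and tuning decisions a real network involves.

```python
def steps_to_converge(lr, start=0.0, target=3.0, tol=1e-3, max_steps=10_000):
    """Gradient descent on the toy loss (w - target) ** 2.

    Returns how many updates it takes to get within tol of the minimum,
    or None if it never gets there.
    """
    w = start
    for step in range(1, max_steps + 1):
        grad = 2 * (w - target)  # derivative of (w - target) ** 2
        w -= lr * grad
        if abs(w - target) < tol:
            return step
        if abs(w - target) > 1e6:  # blew up: this setting will never converge
            return None
    return None


if __name__ == "__main__":
    for lr in (0.01, 0.4, 1.1):
        print(f"learning rate {lr}: {steps_to_converge(lr)}")
    # learning rate 0.01: 397
    # learning rate 0.4: 5
    # learning rate 1.1: None
```

Each update shrinks the error by a factor of `1 - 2 * lr`: 0.98 for the timid setting, 0.2 for the well-tuned one, and -1.2 (growing, with the sign flipping) for the overly aggressive one, which never settles at all.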

Clouds on the Horizon

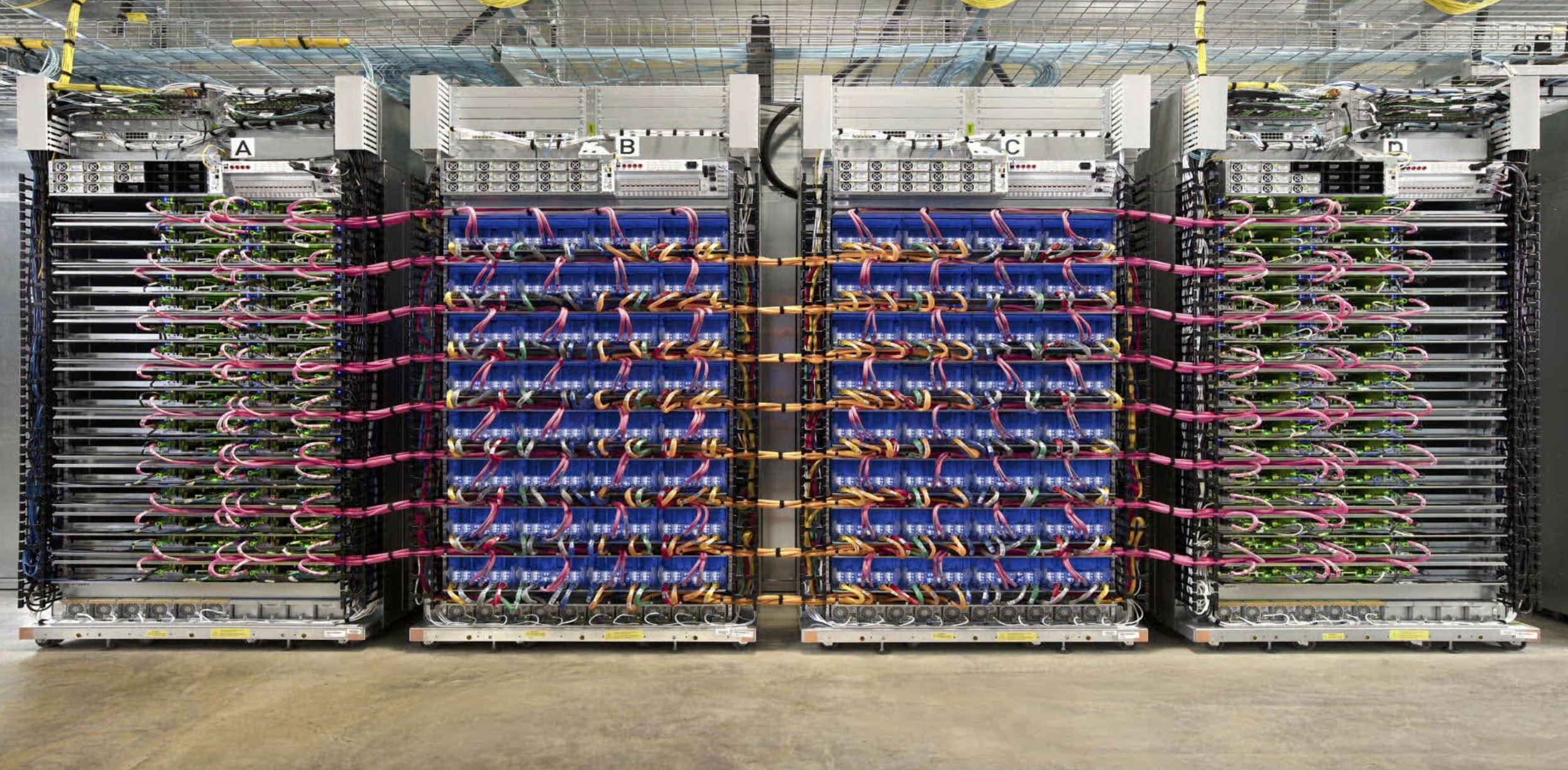

As you can probably imagine, using a neural net to create and test a whole set of other neural nets is incredibly expensive in terms of time and computation. To produce the image and speech recognition networks designed by AutoML, Google reportedly let a cluster of 800 GPUs iterate and crunch numbers for weeks.

This is unlikely to be a tool that you can run on your laptop, but it may become a selling point for Google Cloud. Access to AutoML, and with it the ability to create and refine a machine learning system without a strong background in AI, could give Google a leg up over Amazon, whose AWS cloud service Google has long trailed.

AutoML and similar tools may be the key to making machine learning accessible to a range of scientists and could help bring AI to new fields of study.

If it doesn’t eat our brains first, that is.

-

lionheart051 Interesting yet dangerous at the same time. Transhumanism on the rise and CTs becoming true. However, it would be foolish to think a machine can ever be smarter than a human; algorithms are incapable of free will (but that's a good thing).

-

Tairaa Why are you so sure that no sufficiently complex system of algorithms could possibly acquire free will, at least to the extent that we have such freedom ourselves?

-

Verisimilitude_1 Machines have already been shown to be smarter than humans in some respects, but as for free will, that will only happen when and if machines gain sentience and become conscious of their own existence.

-

Nei1

> algorithms are incapable of free will

No problem if algorithms can't unilaterally destroy us. As humans run the algorithms, we may use them to destroy ourselves. A subset of "garbage in, garbage out."

-

The brain is a machine, it would be foolish to think it is a "unique" one. Things are moving so fast, hard AI will probably be here within another few decades.

-

DavidC1

> The brain is a machine, it would be foolish to think it is a "unique" one. Things are moving so fast, hard AI will probably be here within another few decades.

Not a very convincing argument. We barely understand ourselves. The idea that some compute power will naturally turn into sentient beings borders on the fantasy akin to popular sci-fi plots. Technology advancement happened not because randomly, but because its understood well. It takes great understanding to make simple devices but randomness for something far greater as a sentient machine?

-

DavidC1

> Transhumanism on the rise and CTs becoming true.

The problem with us is that the leaders worry about practically inconsequential problems like these but refuse/ignore real world problems.

-

dogofwars When you think about it, in some places in the world the singularity did happen when they got their first cell phone ;) LOL

-

bit_user

> DavidC1 said: Not a very convincing argument. We barely understand ourselves. The idea that some compute power will naturally turn into sentient beings borders on the fantasy akin to popular sci-fi plots.

Okay, but nobody said that.

> DavidC1 said: Technology advancement happened not because randomly, but because its understood well. It takes great understanding to make simple devices but randomness for something far greater as a sentient machine?

One of the interesting things about deep learning is highlighted in the article: people who train a neural network to do a certain task often don't actually understand how it performs that task. This lets some air out of the idea that we need to understand everything about the human brain in order to emulate it. A lot is currently understood about how human brains work, and I'll go out on a limb and say that if we had hardware with equivalent computational performance, it wouldn't be so hard to teach it abstract reasoning, the ability to form hypotheses, and other higher-order cognitive tasks.

What's so interesting about Google's new development is that they're enabling people to design neural networks to accomplish a given task with even less understanding of how neural networks function. To the extent that this technology can be applied to higher-order cognitive tasks, we might find that true machine intelligence is a bit closer than we might've assumed.

And I agree with everyone who said that the most dangerous aspect of deep learning is the things people will use it for, like cyber-criminals or terrorists who might use it to help them bring down the grid or gain access to some country's nukes.