Google admits the Gemini AI demo hands-on video was staged

Voice prompts were dubbed afterward, and the video was not recorded in real-time.

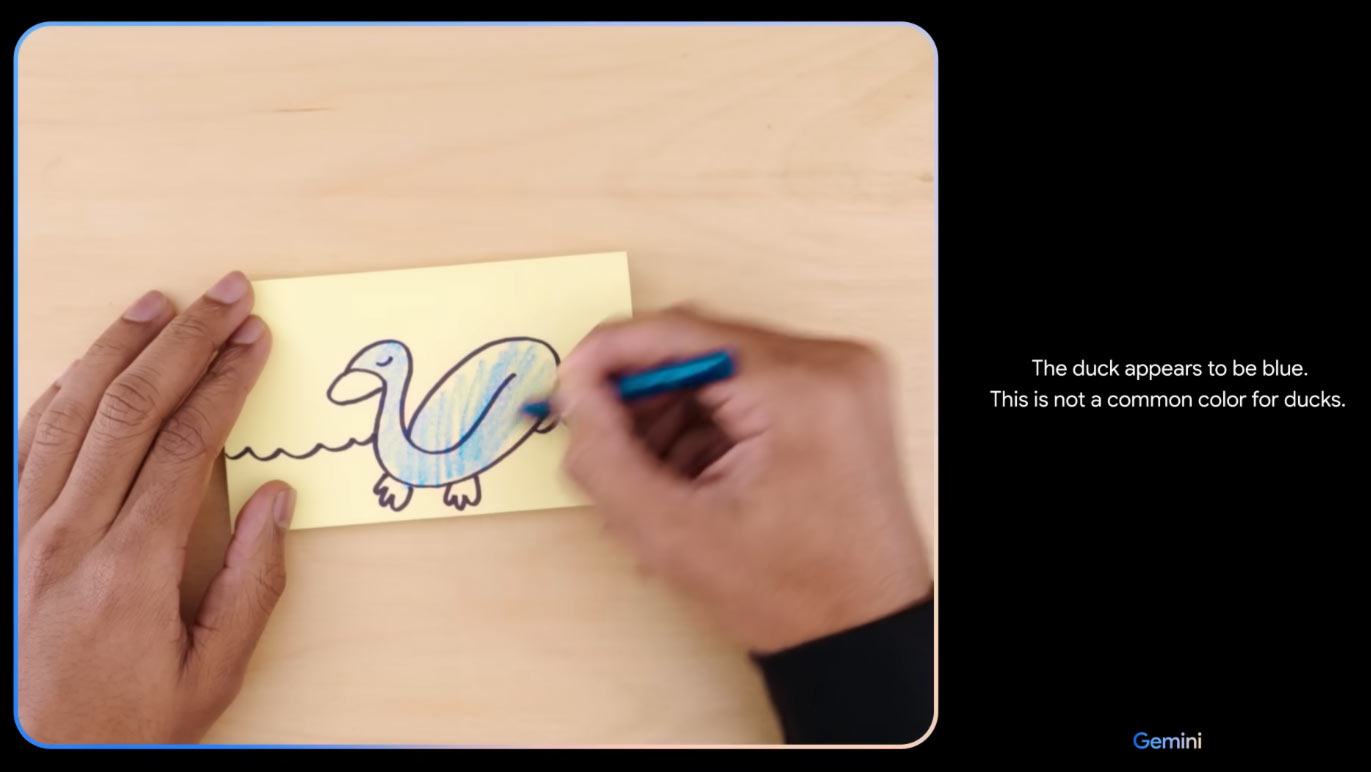

Google’s Hands-on with Gemini video was one of the most impressive aspects of the firm’s new AI large language model (LLM) launch. However, a Google spokesperson has admitted to Bloomberg that the video was not recorded in real time. Moreover, voice prompts were not even used; the vocal interaction with Gemini that you hear was dubbed in later. Google also released a blog post at the same time as the demo that illustrates how the video was made.

Sundar Pichai, the Google CEO, shared the hands-on video on Thursday, saying the best way to understand “Gemini’s underlying amazing capabilities is to see them in action.” A hint that not all was as it seemed was included in the YouTube description of the video. “For the purposes of this demo, latency has been reduced and Gemini outputs have been shortened for brevity,” reads a footnote.

Seeing some qs on what Gemini *is* (beyond the zodiac :). Best way to understand Gemini’s underlying amazing capabilities is to see them in action, take a look ⬇️ pic.twitter.com/OiCZSsOnCc December 6, 2023

That footnote might be described as an understatement, or a diversion from the truth, though. The video wasn’t just shortened; there was no real interaction during the recording. Google’s spokesperson told Bloomberg that the hands-on video was cobbled together “using still image frames from the footage, and prompting via text.” Thus, Gemini only responded to typed-in prompts and still images that were uploaded to it. The conversational flow, with the human speaking, drawing, showing objects, and playing with cups and other objects, was seemingly just staged for the demo video.

If we look back at the video, the spokesperson’s explanation smashes the impression of a natural conversational assistant that we got on first viewing the demo.

Some more explanation regarding the ‘Hands-on with Gemini’ video came earlier today from Oriol Vinyals, VP of Research & Deep Learning Lead at Google DeepMind. “The video illustrates what the multimodal user experiences built with Gemini could look like,” Vinyals reasoned. “We made it to inspire developers.” The Google DeepMind VP’s post drew a lot of fire for repeating the claim that the video was “real, shortened for brevity.”

Really happy to see the interest around our “Hands-on with Gemini” video. In our developer blog yesterday, we broke down how Gemini was used to create it. https://t.co/50gjMkaVc0 We gave Gemini sequences of different modalities — image and text in this case — and had it respond… pic.twitter.com/Beba5M5dHP December 7, 2023

Hopefully, Google’s video can inspire developers – at Google – to make Gemini function just like it does in the demo video. If not, people may feel a little deceived, or even cheated, by the gulf between the hands-on video demo and reality.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.


hotaru251 We need actual laws about "bending the truth" on advertising a product.

Any claim that isnt actually true is effectively snake oil....a lie.

Shouldnt be allowed to lie to a person to sell product as thats scamming a person.

PEnns

hotaru251 said: We need actual laws about "bending the truth" on advertising a product.

Any claim that isnt actually true is effectively snake oil....a lie.

Shouldnt be allowed to lie to a person to sell product as thats scamming a person.

Indeed. But I bet they will use the Fox News excuse....

Valerum

hotaru251 said: We need actual laws about "bending the truth" on advertising a product.

Any claim that isnt actually true is effectively snake oil....a lie.

Shouldnt be allowed to lie to a person to sell product as thats scamming a person.

Maybe we can have an artificial intelligence model that is designed to publicly callout lies, deceptions, scams, disinformation, and such. Eventually, I can see this model being used in courtrooms, police investigations, and relationships in general. Imagine walking around with a super lie detector that reads facial and body expressions, looks up facts in real time, and is never biased. Just a thought.

edzieba So it still performed the reasoning they were demonstrating, but they diked out the speech-to-text and clip-an-image-from-a-video portions of the ingest process? That seems more just streamlining for demo reliability than any major change to actual functionality.

atomicWAR

Valerum said: Maybe we can have an artificial intelligence model that is designed to publicly callout lies, deceptions, scams, disinformation, and such. Eventually, I can see this model being used in courtrooms, police investigations, and relationships in general. Imagine walking around with a super lie detector that reads facial and body expressions, looks up facts in real time, and is never biased. Just a thought.

No thank you. I'd prefer not giving our future AI overlords an "in" to help regulate society just to gain a false sense of security. I think the AI community needs to slow down and learn to better understand how these systems can and will continue to evolve. Past evolution in AI, as far as we know at least, has been due to human intervention (i.e. new hardware and/or new code). I fear a future where AI evolution could be independent of human intervention. At that point we will have birthed Skynet and can only hope our new AI overlords are more benevolent than we are.

ThomasKinsley I must not be their target audience because I wasn't impressed with the faked demo. If I'm drawing a blue duck, the last thing I want is a droning voice telling me it's uncommon to see a blue duck and that most ducks are white or brown. I get the point is that it can understand visuals, but then couldn't they provide a more natural demo showing a real scenario in which the AI is helpful? Especially if they're going to fake it, then go all the way and show something special.