HPE Demonstrates Exascale Hardware: AMD's and Intel's Platforms Exposed

HPE showcases the latest supercomputer hardware.

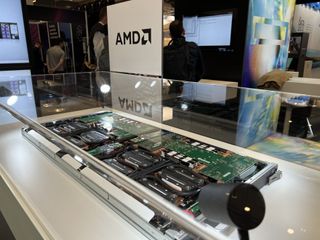

At the International Supercomputing Conference (ISC 2022) trade show, HPE demonstrated blade systems that will power two exascale supercomputers set to come online this year: Frontier and Aurora. Unfortunately, HPE had to use sophisticated and power-hungry hardware to get unprecedented computing performance. Hence, both machines use liquid cooling, but even massive water blocks cannot hide some interesting design peculiarities of the blades.

Both the Frontier and Aurora supercomputers are built by HPE using its Cray EX architecture. Although the machines use AMD and Intel hardware, respectively, both rely on high-performance x86 CPUs to run general tasks and on GPU-based compute accelerators to run highly parallel supercomputing and AI workloads.

The Frontier supercomputer builds upon HPE's Cray EX235a nodes powered by two of AMD's 64-core EPYC 'Trento' processors, which feature the company's Zen 3 microarchitecture enhanced with 3D V-Cache and optimized for high clocks. The Frontier blades also come with eight of AMD's Instinct MI250X accelerators, each featuring 14,080 stream processors and 128GB of HBM2E memory. Each node offers peak FP64/FP32 vector performance of around 383 TFLOPS and peak FP64/FP32 matrix performance of approximately 765 TFLOPS. Both the CPUs and the compute GPUs in HPE's Frontier blade use a unified liquid cooling system with two nozzles on the front of the node.
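The aggregate figures quoted for Frontier's hardware can be roughly reproduced by multiplying per-accelerator peaks by the accelerator count. The sketch below is illustrative only: the per-accelerator values (47.9 TFLOPS vector, 95.7 TFLOPS matrix) are an assumption taken from AMD's published MI250X specifications rather than from this article, the `Accelerator` class and `aggregate_peak` helper are hypothetical names, and the CPUs' contribution is ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    """Peak figures for a single compute accelerator."""
    name: str
    vector_tflops: float  # peak FP64/FP32 vector throughput
    matrix_tflops: float  # peak FP64/FP32 matrix throughput
    hbm_gb: int           # on-package HBM2E capacity


# Assumption: per-accelerator peaks from AMD's public MI250X spec sheet,
# not stated in the article itself. The 128 GB figure is from the article.
MI250X = Accelerator("Instinct MI250X", 47.9, 95.7, 128)


def aggregate_peak(acc: Accelerator, count: int) -> dict:
    """Scale one accelerator's peak figures by the number of accelerators.

    This is a theoretical upper bound; it ignores the CPUs and any
    real-world efficiency losses.
    """
    return {
        "vector_tflops": acc.vector_tflops * count,
        "matrix_tflops": acc.matrix_tflops * count,
        "hbm_gb": acc.hbm_gb * count,
    }


# Eight accelerators, as described for the Frontier hardware above.
totals = aggregate_peak(MI250X, 8)
print(f"Vector: {totals['vector_tflops']:.1f} TFLOPS")
print(f"Matrix: {totals['matrix_tflops']:.1f} TFLOPS")
print(f"HBM2E:  {totals['hbm_gb']} GB")
```

Under these assumptions the totals come out at about 383 TFLOPS (vector) and about 766 TFLOPS (matrix), in line with the roughly 383 and 765 TFLOPS quoted above.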

The Aurora blade is currently called just that, carries an Intel badge, and does not have an HPE Cray EX model number yet, possibly because it still needs some polishing. HPE's Aurora blades use two Intel Xeon Scalable 'Sapphire Rapids' processors with over 40 cores and 64GB of HBM2E memory per socket (in addition to DDR5 memory). The nodes also feature six of Intel's Ponte Vecchio accelerators, but Intel is quiet about the exact specifications of these beasts, which pack over 100 billion transistors each.

One thing that catches the eye on the Aurora blade, which is set to be used in the 2 ExaFLOPS Aurora supercomputer, is a set of mysterious black boxes with a triangular 'hot surface' sign located next to the Sapphire Rapids CPUs and Ponte Vecchio compute GPUs. We do not know what they are, but they may be sophisticated modular power supply circuitry that adds flexibility. After all, back in the day, VRMs were removable, so using removable modules for highly power-hungry components might make some sense even today (assuming that the correct voltage tolerances are met), especially with pre-production hardware.

Like the Frontier blade, the Aurora blade uses liquid cooling for its CPUs and GPUs, though this cooling system is entirely different from the one used by Frontier blades. Intriguingly, it looks like the Ponte Vecchio compute GPUs in the Aurora blade use different water blocks than those Intel demonstrated a few weeks ago, though we can only wonder about possible reasons for that.

Interestingly, the DDR5 memory modules that the Intel-based blade uses come with rather formidable heat spreaders that look bigger than those used on enthusiast-grade memory modules. Keeping in mind that DDR5 RDIMMs also carry a power management IC and a voltage regulating module, they naturally need better cooling than DDR4 sticks, especially in space-constrained environments like blade servers.


Anton Shilov is a Freelance News Writer at Tom’s Hardware US. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


domih said: Meanwhile NVIDIA is mourning about its failed attempt to buy ARM. It needed to own a CPU architecture to be able, like INTEL and AMD, to present a complete integrated vertical solution for these data center racks.

NVIDIA has CUDA, NVLink and Mellanox but no CPU line. Bad. Meanwhile INTEL and AMD work hard getting their data center GPU to high level and both acquired multiple companies for high bandwidth and low latency interconnect. Both have a CPU line. Good.

Note also that HPE has its own Slingshot NIC for 100 and 200 GbE interconnect. So no Mellanox InfiniBand for Frontier and (finally coming up "soon") Aurora.

NVIDIA is definitely not the favorite in the data center market domination war and that's bad for them. Their revenues from data center sales now exceed the gamer cards revenues. In addition NVIDIA still keeps for itself the % of the gamer card sales that are in fact crypto currency related.

So after the ARM episode, NVIDIA has a serious conundrum to solve if it wants to stay relevant for the next decade. I still wonder why the NVIDIA people thought they could buy ARM without being noticed and then stopped by basically the rest of the world.

The regular Tom's Hardware gamer reader preoccupations about "which graphics card is the best" is barely a footnote in the history of the data center compute business.

spongiemaster said:

> domih said: Meanwhile NVIDIA is mourning about its failed attempt to buy ARM. It needed to own a CPU architecture to be able, like INTEL and AMD, to present a complete integrated vertical solution for these data center racks.
>
> NVIDIA has CUDA, NVLink and Mellanox but no CPU line. Bad.

Might want to keep up with current events.

https://www.nvidia.com/en-us/data-center/grace-cpu/
