AMD's Instinct MI250X OAM Card Pictured: Aldebaran's Massive Die Revealed

Big and powerful

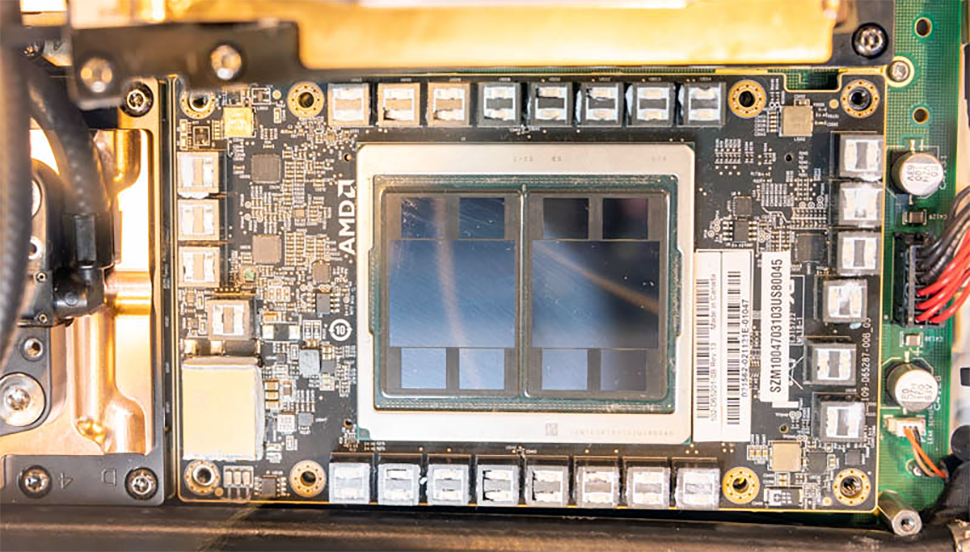

AMD's Instinct MI250X compute accelerator is undoubtedly one of the most impressive products the company has released in recent years. This card will power Frontier, the industry's first exascale supercomputer, as well as smaller upcoming high-performance computing (HPC) deployments. Unfortunately, very few of us will get to see this OAM board (and other compute accelerators) in real life, but Patrick Kennedy from ServeTheHome filled this gap this week with pictures of the system on display. Our rough math says that each of the two GPU dies measures roughly 745 mm^2 to 790 mm^2, putting them among the largest GPUs made. That large die is rumored to consume 550W of power.

All three American exascale supercomputers announced to date will use HPE's Cray Shasta supercomputer architecture. Two of them (Frontier and El Capitan) will be powered by AMD's EPYC processors and Instinct accelerators, whereas the third will be based on Intel's Xeon Scalable CPUs and Ponte Vecchio compute GPUs (Aurora). Since AMD is set to power the world's first (at least as far as official numbers are concerned) exascale system that is due to be deployed in the coming weeks or months, the company naturally demonstrated HPE's Cray EX235a node featuring its EPYC processor and Instinct MI200-series accelerators at SuperComputing 21.

AMD's Instinct MI250X compute GPU, codenamed Aldebaran, consists of two graphics compute dies (GCDs), each of which packs 29.1 billion transistors and is paired with 64GB of HBM2e memory connected using a 4096-bit interface (128GB of HBM2e over an 8192-bit interface in total). With 14,080 stream processors and up to 96 TFLOPS of peak FP64 (matrix) performance, the Instinct MI250X is the highest-performing HPC accelerator released to date. The part comes in an open accelerator module (OAM) form factor and measures 102mm x 165mm, which is pretty large.
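
The package-level figures above are simply the per-GCD figures doubled. A minimal Python sketch of that arithmetic follows. The `GCD` class and `package_totals` helper are hypothetical names introduced only for illustration, and the 7,040 stream processors per die is the per-GCD count cited later in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GCD:
    """Per-die figures quoted in the article for one Aldebaran GCD."""
    transistors_billion: float = 29.1
    hbm2e_gb: int = 64
    memory_bus_bits: int = 4096
    stream_processors: int = 7040


def package_totals(gcd: GCD, dies: int = 2) -> dict:
    """Scale the per-die figures by the number of dies on the package."""
    return {
        "transistors_billion": round(gcd.transistors_billion * dies, 1),
        "hbm2e_gb": gcd.hbm2e_gb * dies,
        "memory_bus_bits": gcd.memory_bus_bits * dies,
        "stream_processors": gcd.stream_processors * dies,
    }


totals = package_totals(GCD())
for name, value in totals.items():
    print(f"{name}: {value}")
```

This only checks that the quoted totals (128GB, 8192-bit, 14,080 SPs) are consistent with two identical dies. It says nothing about how the dies are interconnected.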

Each GCD has its own set of supporting chips, including power controllers, voltage regulating modules, and firmware. We have no idea about the function that the huge white box in the lower-left corner of the card performs, but we will do our best to find out if we get to play with this card someday. Make sure to visit ServeTheHome for more AMD Instinct MI250X pictures.

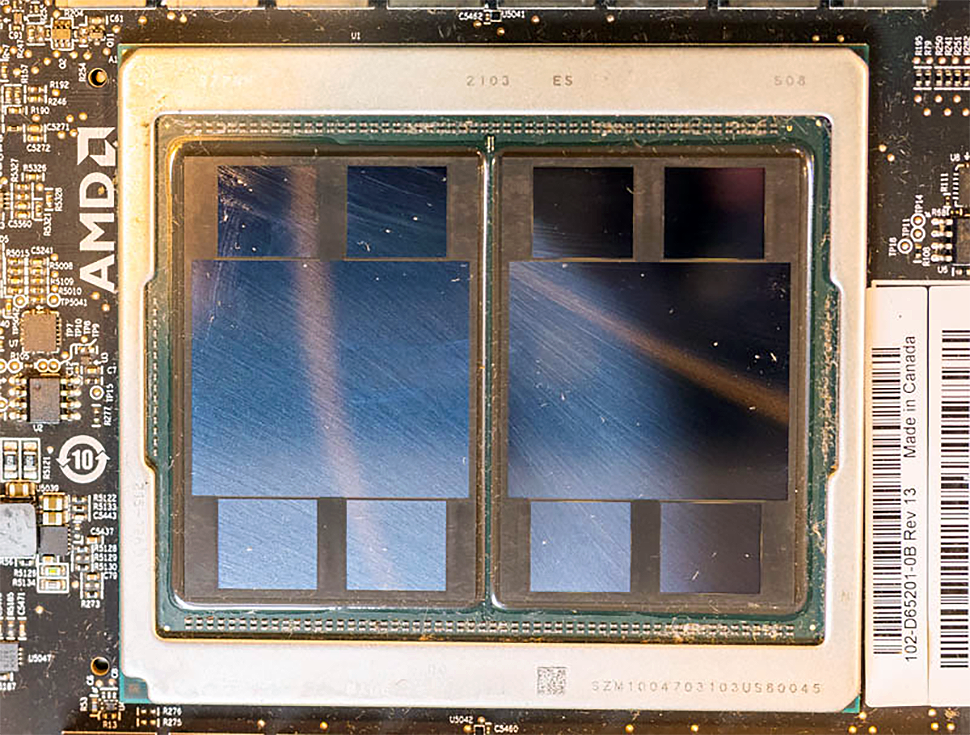

Knowing the dimensions of the card and the dimensions of some of the chips used on it (e.g., a SOIC-8 chip located to the left of the GPU package), we can make some very rough guesses about the dimensions of the Aldebaran GCDs. Of course, this type of napkin math isn't very accurate, especially on images like these, but it looks like we are dealing with chips that have die sizes of around 745 mm^2 to 790 mm^2.
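
This kind of napkin math can be sketched in a few lines of Python: take a feature of known physical size, derive a millimeters-per-pixel scale from it, and apply that scale to the die's pixel dimensions. The only real input below is the 165mm card edge. Every pixel count is a made-up placeholder, not a measurement taken from the ServeTheHome photos, and the helper names are hypothetical. The sketch also assumes a flat, head-on photo with no perspective or lens distortion.

```python
def mm_per_pixel(reference_mm: float, reference_px: float) -> float:
    """Scale factor derived from a feature of known physical length."""
    if reference_mm <= 0 or reference_px <= 0:
        raise ValueError("reference lengths must be positive")
    return reference_mm / reference_px


def die_area_mm2(width_px: float, height_px: float, scale: float) -> float:
    """Convert a pixel rectangle to an area in mm^2 using the scale."""
    return (width_px * scale) * (height_px * scale)


# Known from the article: the OAM card's long edge is 165 mm.
CARD_LONG_EDGE_MM = 165.0

# HYPOTHETICAL pixel measurements, chosen only to illustrate the method.
card_long_edge_px = 1650.0
die_width_px, die_height_px = 280.0, 275.0
pixel_error = 3.0  # assumed measurement error on each die edge

scale = mm_per_pixel(CARD_LONG_EDGE_MM, card_long_edge_px)
area = die_area_mm2(die_width_px, die_height_px, scale)
low = die_area_mm2(die_width_px - pixel_error, die_height_px - pixel_error, scale)
high = die_area_mm2(die_width_px + pixel_error, die_height_px + pixel_error, scale)

print(f"estimate: {area:.0f} mm^2 (range {low:.0f} to {high:.0f} mm^2)")
```

With these placeholder inputs, an error of just three pixels per edge shifts the estimate by roughly 17 mm^2 in either direction, which is why an estimate like this is better quoted as a range than as a single number.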

To put these die sizes into context, the die in Nvidia's A100 measures 826 mm^2. Keeping in mind how many FP64 stream processors each Aldebaran GCD packs (7,040 SPs) and the fact that these SPs need to be fed with plenty of data, we understand that the design is very SRAM intensive, which is why the die size is huge (since SRAM barely scales these days).

Complex processors tend to consume a lot of power, and the OAM form-factor is just what the doctor ordered for such accelerators as it can supply up to 700W of power. Rumor has it that AMD's Instinct MI250X consumes up to 550W delivered via a 26-phase voltage regulating module. To cool down such a beast, HPE plans to use a liquid cooling system. It remains to be seen what kind of cooling other types of systems will use.
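
For a rough sense of scale, the figures in this paragraph can be combined as follows. This is a back-of-the-envelope sketch, not a description of the actual power delivery design: it assumes the rumored 550W is drawn entirely through the 26 phases and shared equally among them, which is unlikely to be exactly true since different phases typically feed different rails, and it ignores conversion losses.

```python
OAM_MAX_W = 700.0        # maximum the OAM form factor can supply (from the article)
RUMORED_CARD_W = 550.0   # rumored MI250X consumption (from the article)
VRM_PHASES = 26          # phase count cited in the article

# Power budget left unused within the OAM limit.
headroom_w = OAM_MAX_W - RUMORED_CARD_W

# Fraction of the OAM limit that the rumored figure would use.
utilization = RUMORED_CARD_W / OAM_MAX_W

# ASSUMPTION: perfectly equal load sharing across all phases.
avg_w_per_phase = RUMORED_CARD_W / VRM_PHASES

print(f"headroom: {headroom_w:.0f} W")
print(f"share of OAM limit: {utilization:.1%}")
print(f"average per phase (equal sharing assumed): {avg_w_per_phase:.1f} W")
```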

One interesting thing about the demonstrated card is that it still carries an ES (engineering sample) marking, even though AMD has shipped its Instinct MI200-series compute GPUs for revenue since the second quarter. So, perhaps the pictured card is not final and commercial boards are slightly different.

Another important detail is that the card was made in Canada, where ATI Technologies, the company that became AMD's graphics division, used to be headquartered. Apparently, AMD still has a big presence in Canada and even makes (or at least prototypes) some of its most important products there. That matters because the Instinct MI250X cards will be installed into exascale supercomputers that are set to be used for some of the most complex computations, including those that concern national security.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

Jim90

In keeping with Tom's recent paid-for clickbait articles (Ryzen has Fallen, Intel crushes AMD etc) - where's the equivalent Nvidia has Fallen, AMD crushes Nvidia titles...???

Lol.

islandwalker

Jim90 said: In keeping with Tom's recent paid-for clickbait articles (Ryzen has Fallen, Intel crushes AMD etc) - where's the equivalent Nvidia has Fallen, AMD crushes Nvidia titles...??? Lol.

So...you're suggesting we should proclaim Nvidia "crushed" or "defeated" based on...a photo? And you're accusing us of bias? I hope it's comfortable there in your reality bubble.

dalek1234

Jim90 said: In keeping with Tom's recent paid-for clickbait articles (Ryzen has Fallen, Intel crushes AMD etc) - where's the equivalent Nvidia has Fallen, AMD crushes Nvidia titles...??? Lol.

I wouldn't hold my breath. Tom's has been pro-Intel and pro-Nvidia since Tom sold the website.

Eximo

Odd attitude, not like anyone is actually going to be able to buy the thing. Not going out of my way to buy an A100 for several thousand dollars, certainly not going to look for (what appears to be a non-standard form factor) MI250X at some undisclosed price.

Based on the number of build requests, people are going to buy 12th gen. When AMD launches 3d cache chips and Zen 4, similar articles, backed with evidence, should have similar tones.

All article titles are clickbait, everyone should be used to it by now.

P1nky

"AMD's Instinct MI250X OAM Card Pictured: Aldebaran's Massive Die Revealed"

I guess you missed the big reveal done by Lisa on the stage while holding the chip.

"That large die is rumored to consume 550W of power."

Rumored? It's in the official specs sheet. And it's 560W.