Beyond Rome: AMD's EPYC and Radeon to Power World's Fastest Exascale Supercomputer

AMD announced today that it had been selected to power Frontier, which is set to be the world's fastest exascale-class supercomputer when it comes online in 2021. The new supercomputer, which will be built with Cray's Shasta supercomputer blades, is being developed by Cray under a $600 million contract for the U.S. Department of Energy (DOE) for the Oak Ridge National Laboratory. All told, the supercomputer is projected to be faster than the top 160 supercomputers in the world, combined.

The new Frontier supercomputer is expected to deliver a leading 1.5 exaflops of performance powered by next-generation variants of AMD's EPYC processors and Radeon Instinct GPUs. The announcement comes on the heels of Intel's recent disclosure that its graphics cards featuring the Xe Architecture will power Aurora, which will be the first exascale-class supercomputer, though its projected speed doesn't match the Frontier supercomputer.

The new Frontier supercomputer builds upon AMD's rising presence in the supercomputing market, which we recently covered in our Ryze of EPYC feature. Notably, Frontier marks the second exascale-class system that doesn't wield Nvidia's GPUs, which have long dominated the supercomputer market.

EPYC and Radeon Combine

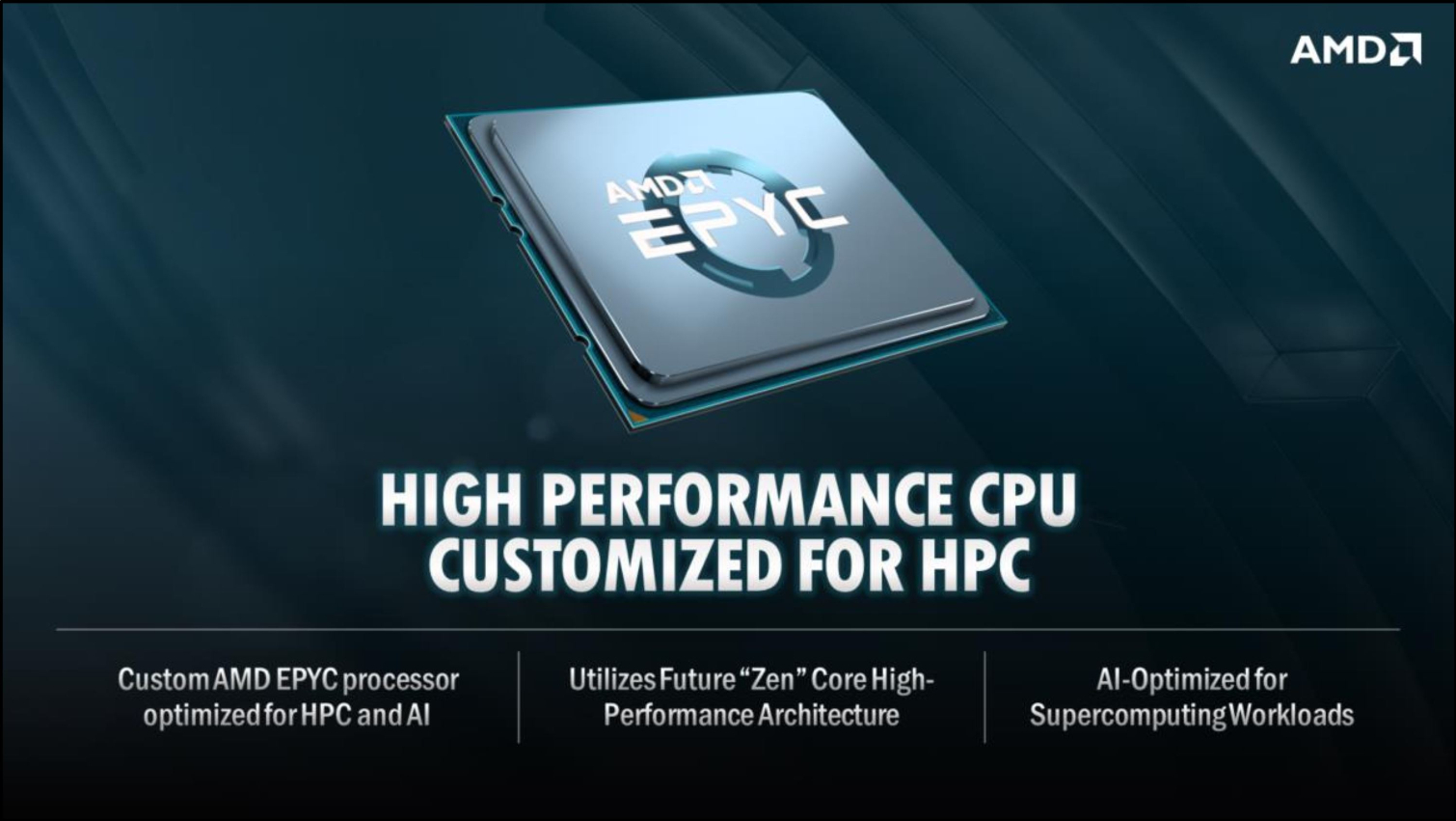

AMD didn't reveal which specific generation of its GPUs and CPUs would power the system, although CEO Lisa Su did announce that both components are customized for the deployment.

AMD optimized its custom EPYC processor with support for new instructions that provide optimal performance in AI and supercomputing workloads. "It is a future version of our Zen architecture. So think of it as beyond... what we put into Rome," said Su.

That could indicate that AMD will use a custom variant of its next-next-gen EPYC Milan processors for the task, but that remains unconfirmed. Those processors have already been tapped for the DOE's Perlmutter supercomputer that will also be built with Cray's Shasta building blocks. We have extensive coverage of that design here.

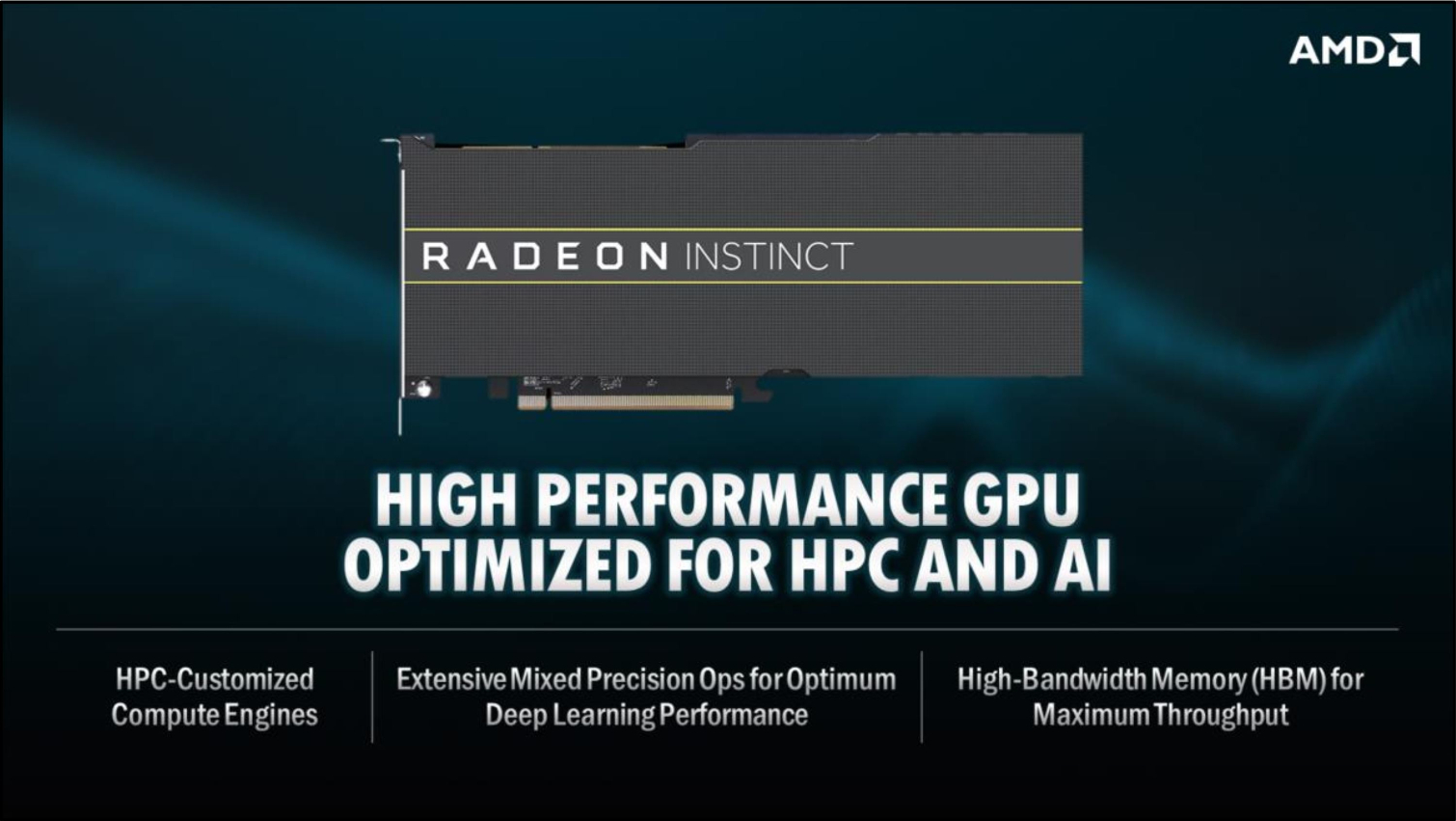

The CPUs will be combined with high-performance Radeon GPU accelerators that Su said have extensive mixed-precision compute capabilities and high bandwidth memory (HBM). Su specified that the GPU will come to market in the future, but didn't elaborate about the future of the custom-designed EPYC processor.


Infinity Fabric Ties it Together

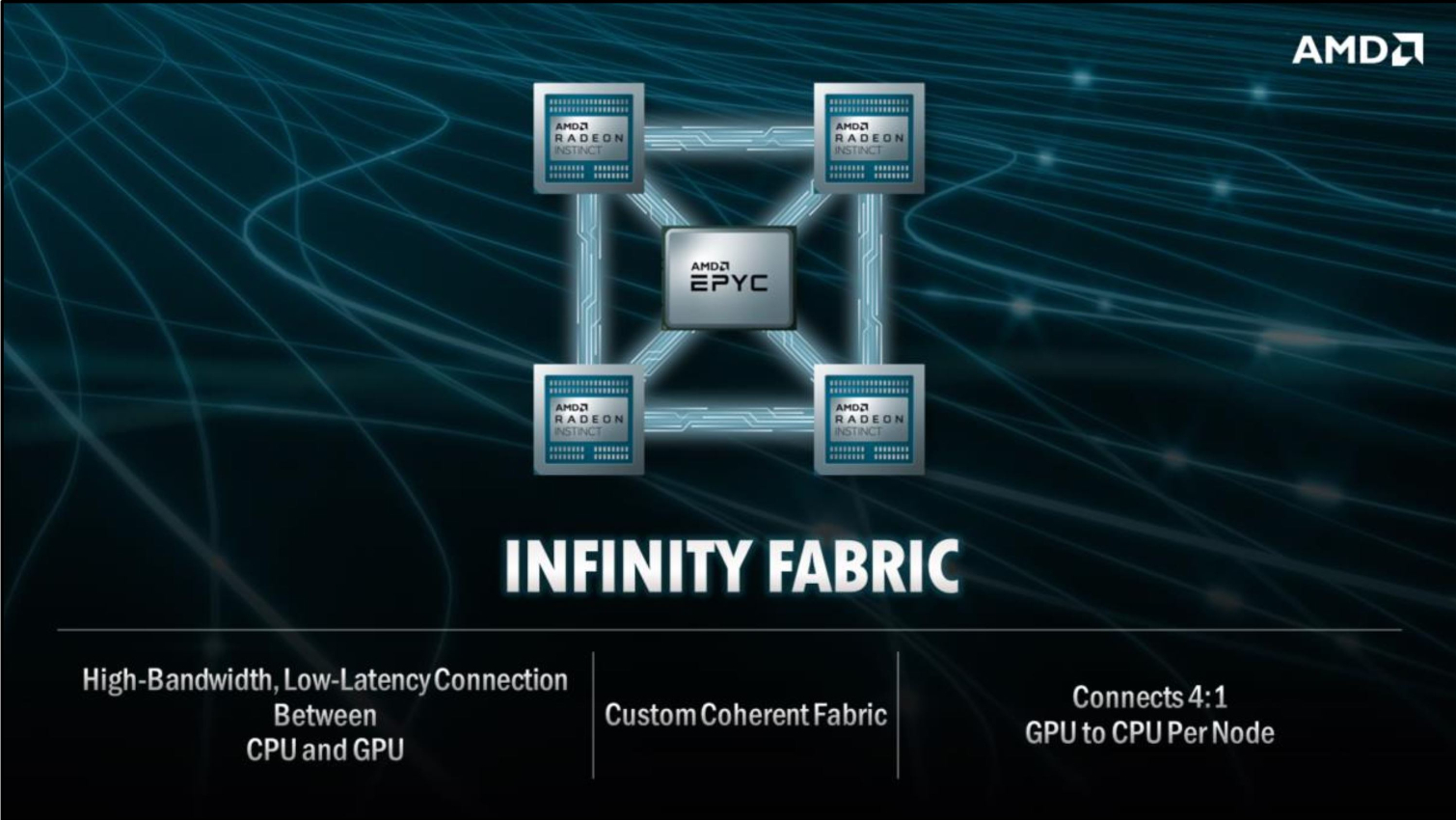

AMD will connect each EPYC CPU to four Radeon Instinct GPUs via a custom high-bandwidth, low-latency coherent Infinity Fabric. This is an evolution of AMD's foundational Infinity Fabric technology, which it currently uses to tie together CPU and GPU dies inside its processors, but now AMD has extended it to operate over the PCIe bus.

AMD previously announced this new capability for its MI60 7nm Radeon Instinct accelerators. That version of the enhanced protocol provides up to 100 GB/s of CPU-to-GPU bandwidth over the PCIe 4.0 bus, but it isn't clear if the Frontier supercomputer will wield a future generation of the technology.

Cray will employ an enhanced version of AMD's open-source ROCm programming environment for Frontier, marking an important step forward for AMD's suite of programming tools. Nvidia's CUDA has become the de facto programming environment of choice for GPU accelerators. That entrenchment gives Nvidia an advantage in the parallel computing market, so AMD's forward progress on this front will help it in the broader ecosystem.

The Frontier Building Blocks

Frontier will consist of 100 cabinets of Shasta supercomputer blades, with each cabinet drawing up to 300 kW of power. The entire system is projected to consume 40 MW of power and cover 7,300 square feet (approximately two basketball courts). The system will also have over 90 miles of cabling and require 5,900 gallons of water per minute for cooling.
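As a rough sanity check on those figures, the short Python sketch below works out the upper bound on combined cabinet power and the efficiency implied by the projected 1.5 exaflops at 40 MW. The function and constant names are ours, chosen for illustration, and the efficiency number is simple division of the quoted projections, not a figure published by AMD, Cray, or the DOE.

```python
# Figures quoted in the article (projections, not measurements).
CABINETS = 100            # Shasta cabinets
MAX_KW_PER_CABINET = 300  # peak draw per cabinet, in kilowatts
SYSTEM_MW = 40            # projected whole-system power, in megawatts
PEAK_EXAFLOPS = 1.5       # projected performance


def cabinet_power_mw(cabinets=CABINETS, kw_per_cabinet=MAX_KW_PER_CABINET):
    """Upper bound on the combined draw of all cabinets, in megawatts."""
    return cabinets * kw_per_cabinet / 1000


def gflops_per_watt(exaflops=PEAK_EXAFLOPS, megawatts=SYSTEM_MW):
    """Implied efficiency in gigaflops per watt at the quoted system power."""
    flops = exaflops * 1e18
    watts = megawatts * 1e6
    return flops / watts / 1e9


if __name__ == "__main__":
    print(f"Cabinets at full draw: {cabinet_power_mw():.1f} MW")
    print(f"Implied efficiency:    {gflops_per_watt():.1f} GFLOPS/W")
```

At full draw the cabinets alone would account for 30 MW of the 40 MW total; the article does not say what makes up the remainder.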

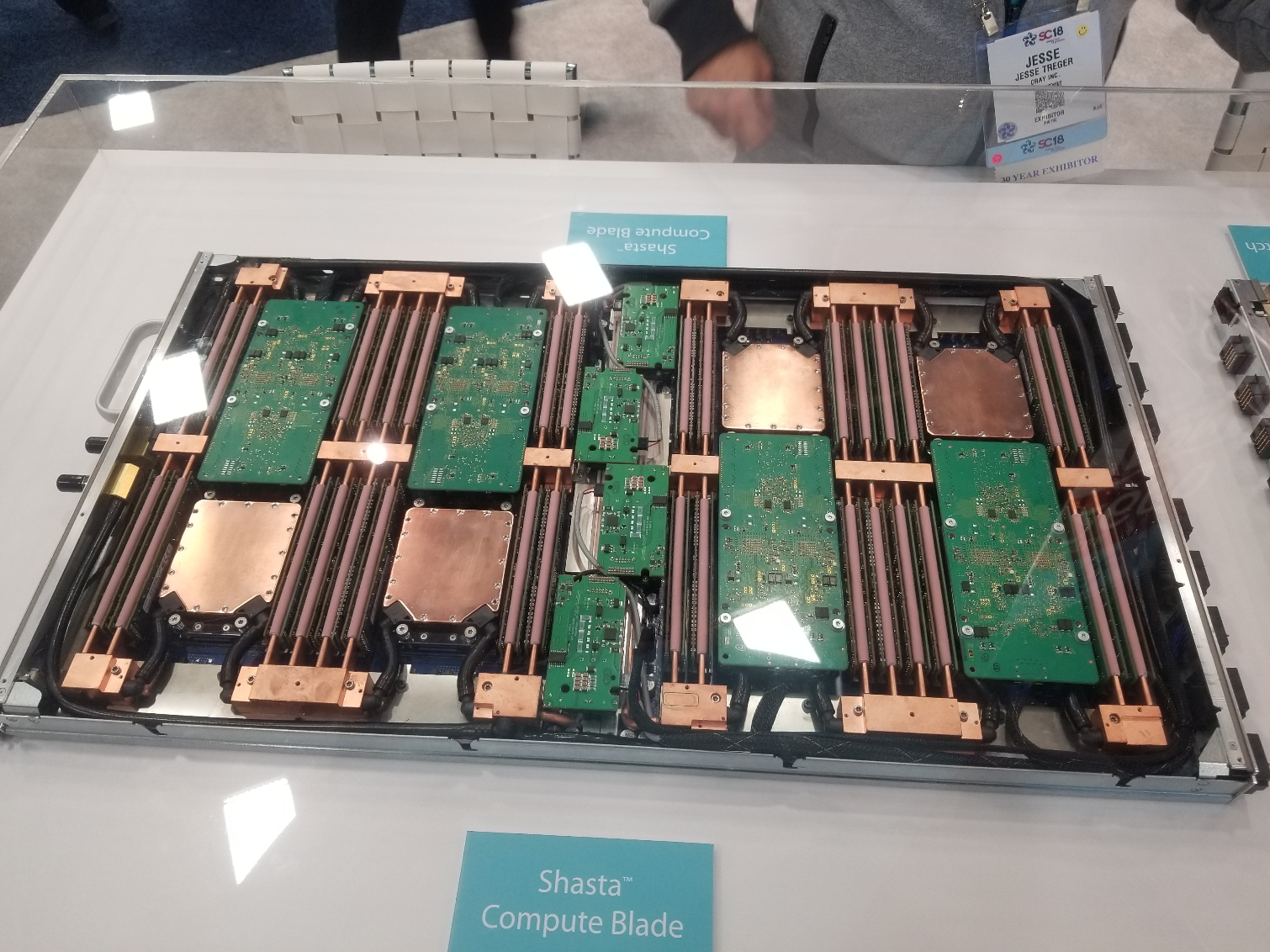

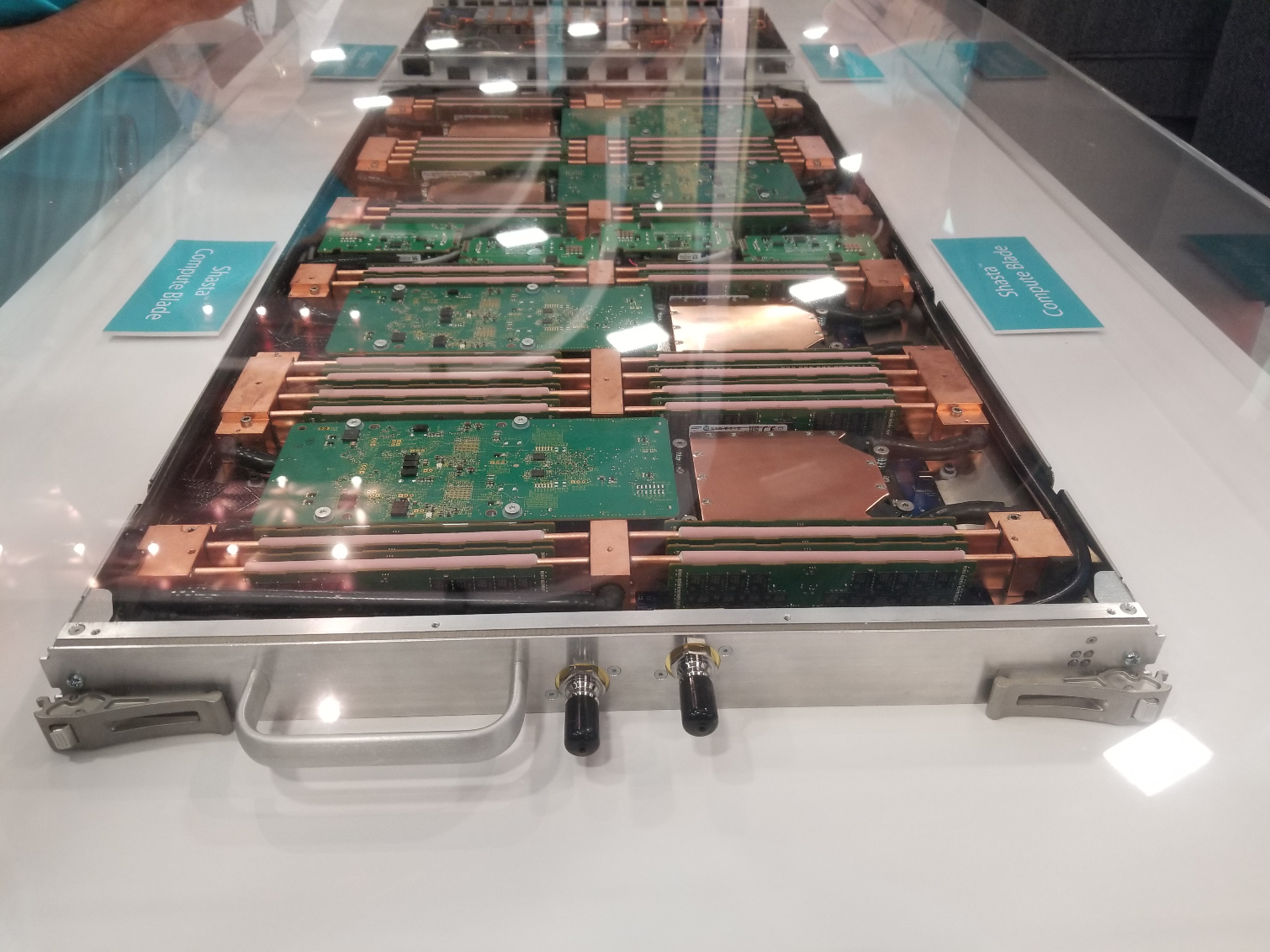

We recently took a look at Cray's current lineup of Shasta blades at the Supercomputing 2018 conference. Frontier will use Shasta compute blades, which currently house up to eight compute sockets along with a full complement of memory DIMMs and networking. Cray has specified that the current generation of its Shasta blades (image above) will not be used in Frontier; instead, a new undisclosed variant will be pushed into service. Currently, the Shasta lineup consists of CPU, GPU, and networking blades, but it is unclear if the company will stick to that design philosophy for Frontier.

As with the current generation of blades, Cray will use its proprietary Slingshot fabric to connect the nodes to integrated top-of-rack switches. This networking fabric uses an enhanced low-latency protocol that includes intelligent routing mechanisms to alleviate congestion. The interconnect supports optical links, but it is primarily designed to support low-cost copper wiring.

Keeping it in Perspective

Frontier builds on Oak Ridge National Laboratory's long heritage of hosting a string of the most powerful supercomputers in the world, including previous title-holders Jaguar and Titan, with the latter weighing in at 17.6 petaflops of performance. The lab also hosts Summit, the world's current title-holder with 143.5 petaflops of performance. Frontier is theoretically capable of more than seven times that performance.

To put Frontier's performance into perspective, the supercomputer will be able to crunch up to 1.5 quintillion operations per second, which is equivalent to solving 1.5 quintillion mathematical problems every second. Cray also touts the performance of its networking solution as offering 24,000,000 times the bandwidth of the fastest home internet connection, or equivalent to being able to download 100,000 full HD movies in one second.
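For readers who want to check the scale themselves, here is a minimal Python sketch that compares the projected 1.5 exaflops against the Summit and Titan figures quoted above. The constant and function names are ours, for illustration only, and the inputs are the article's numbers, not independently verified measurements.

```python
PETA = 1e15  # one petaflop, in floating-point operations per second
EXA = 1e18   # one exaflop (one quintillion operations per second)

# Performance figures as quoted in the article.
FRONTIER_FLOPS = 1.5 * EXA
SUMMIT_FLOPS = 143.5 * PETA
TITAN_FLOPS = 17.6 * PETA


def speedup(new_flops, old_flops):
    """How many times faster the new system is than the old one."""
    return new_flops / old_flops


if __name__ == "__main__":
    print(f"Frontier vs. Summit: {speedup(FRONTIER_FLOPS, SUMMIT_FLOPS):.1f}x")
    print(f"Frontier vs. Titan:  {speedup(FRONTIER_FLOPS, TITAN_FLOPS):.1f}x")
```

Against the 143.5 petaflops quoted for Summit, the ratio works out to a little over ten, which is consistent with the "more than seven times" claim; against Titan it is roughly 85.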

The system is projected to come online in 2021, though a firm date hasn't been announced. AMD CEO Lisa Su commented, "we intend to deliver on-time, on-schedule, and on-performance," which is an important distinction in a supercomputer industry that can be plagued with delays. Aurora's timeline, for instance, was repeatedly pushed back, and the system was then completely redesigned to use Intel's Xe Architecture due to issues with the Xeon Phi Knights Hill accelerators. In an odd coincidence, Intel announced the retirement of the last of its Xeon Phi lineup today as it turns its eyes to its Xe Graphics-powered future.

With AMD and Intel both continuing to make headway into the supercomputing realm and Nvidia's presence seemingly receding for newer supercomputers, the next few years could mark a fundamental shift to the established pecking order.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

digitalgriffin That explains where the 7nm thread ripper dies went... :D

What's curious here is the choice of GPU's. Instinct has excellent FP throughput. It's no match for NVIDIA's GV100 on AI metrics where matrix math is required.

It means they are not going to be using it for AI type math. So no learning algorithms. Most likely cell based algorithms that take more time to process each node the further you break it down.


| | Tesla V100 (SXM2) | Tesla V100 (PCIe) |
| --- | --- | --- |
| Double Precision | 7.5 TFLOPS | 7 TFLOPS |
| Tensor Performance (Deep Learning) | 120 TFLOPS | 112 TFLOPS |
| GPU | GV100 | GV100 |

MI25: The MI25 is a Vega based card, utilizing HBM2 memory. The MI25 performance is expected to be 12.3 TFLOPS using FP32 numbers. In contrast to the MI6 and MI8, the MI25 is able to increase performance when using lower precision numbers, and accordingly is expected to reach 24.6 TFLOPS when using FP16 numbers. The MI25 is rated at <300W TDP with passive cooling. The MI25 also provides 768 GFLOPS peak double precision (FP64) at 1/16th rate. -

prtskg

digitalgriffin said: What's curious here is the choice of GPU's. Instinct has excellent FP throughput. It's no match for NVIDIA's GV100 on AI metrics where matrix math is required.

Wouldn't the MI50 and MI60 provide a better comparison?

https://www.tomshardware.com/news/amd-radeon-instinct-mi60-mi50-7nm-gpus,38031.html

The MI60 has 7.4 TFLOPS of FP64 performance and is very efficient (perhaps one of the most efficient right now) for HPC. -

digitalgriffin

prtskg said: Wouldn't the MI50 and MI60 provide a better comparison?

You're quite right. Good catch. It's still lacking though on the AI side. -

bit_user

meriax1029 said: SkyNet goes live 2021. Thanks AMD! :mad:

Why single out AMD? As the article cited, an Intel-based machine was already announced - also slated to come online in 2021.

And what's so special about 1.5 exaflops? Why is that the magic number for building Skynet? -

bit_user

digitalgriffin said: What's curious here is the choice of GPU's. Instinct has excellent FP throughput. It's no match for NVIDIA's GV100 on AI metrics where matrix math is required. It means they are not going to be using it for AI type math. So no learning algorithms.

This won't be a current-gen GPU, so it will probably also have something like tensor cores. In Anandtech's coverage, they specifically cited AI as a target workload:

Accordingly, the lab is expecting the supercomputer to be used for a wide range of projects across numerous disciplines, including not only traditional modeling and simulation tasks, but also more data-driven techniques for artificial intelligence and data analytics.

https://www.anandtech.com/show/14302/us-dept-of-energy-announces-frontier-supercomputer-cray-and-amd-1-5-exaflops

And it's worth noting that Nvidia's Tensor cores aren't general-purpose matrix math processors - they're too low precision for most uses of matrix math, really only accelerating matrix multiplications of the sort used in deep learning.

Why are you comparing with MI25? MI60 is based on the latest 7nm Vega, matches the V100 on fp64 and beats it on memory bandwidth.

Still, it's not really a great comparison, since both companies will be at least one generation beyond those chips, by 2021. -

prtskg

bit_user said: This won't be a current-gen GPU, so it will probably also have something like tensor cores. In Anandtech's coverage, they specifically cited AI as a target workload. [...] Still, it's not really a great comparison, since both companies will be at least one generation beyond those chips, by 2021.

True that