Intel Ups Aurora's Performance to 2 ExaFLOPS, Engages in ZettaFLOPS Race

Intel's Ponte Vecchio performs better than expected.

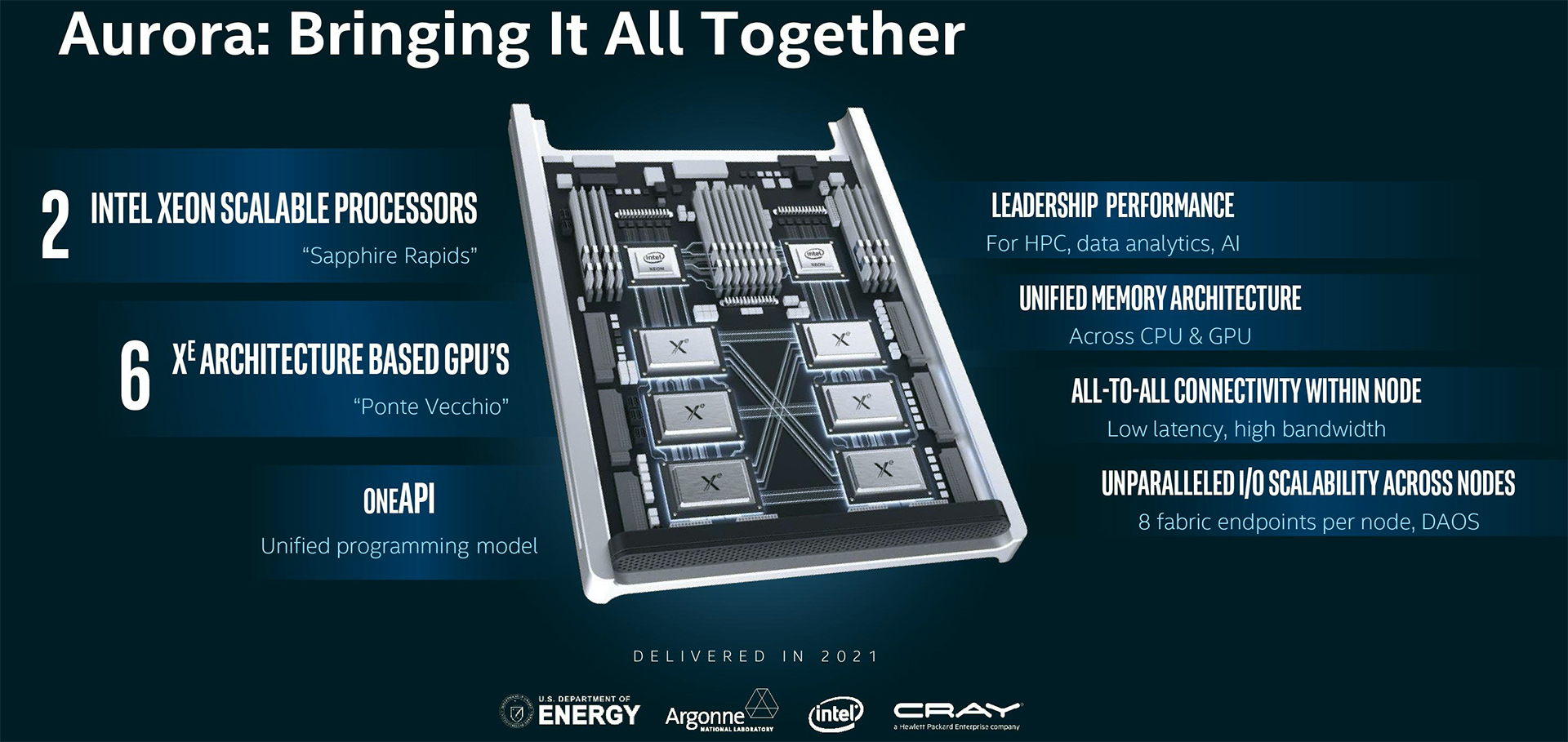

Argonne National Laboratory’s Aurora was meant to be the first supercomputer with sustained performance of over 1 ExaFLOPS in the Linpack benchmark, but delays handed that title to the Frontier supercomputer. However, Aurora will be the industry's first 2 ExaFLOPS supercomputer thanks to higher-than-expected performance of Intel's Ponte Vecchio compute GPU, the company said. Ponte Vecchio will also power Europe's first exascale system. In addition, Intel says that it will deliver technologies for a ZettaFLOPS machine by 2027.

Aurora: World's First 2 ExaFLOPS Supercomputer

As Intel's Sapphire Rapids CPU and Ponte Vecchio GPU near their launch, the company now has a better understanding of their performance, which is why it ups the performance target of Aurora to 2 FP64 ExaFLOPS (Rpeak) from around 1 ExaFLOPS. All of a sudden, Aurora becomes a rival for Lawrence Livermore National Laboratory's El Capitan supercomputer that is set to reach throughput of 2 ExaFLOPS in 2023.

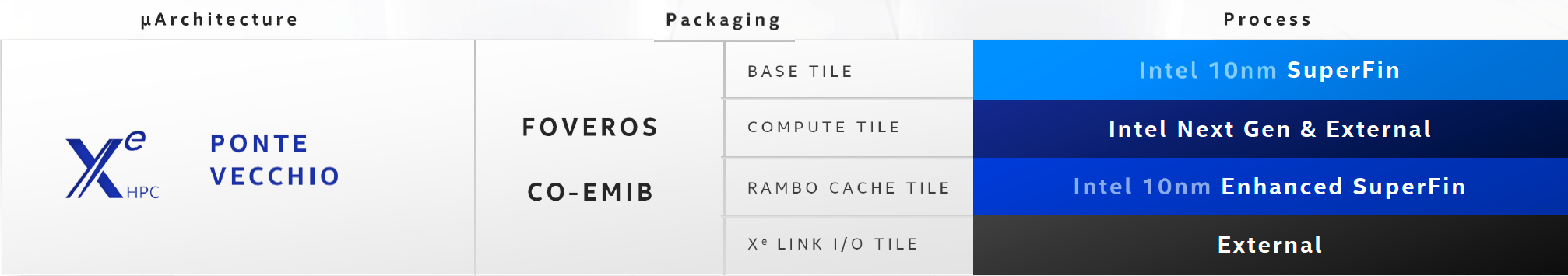

The Aurora supercomputer has a rather bumpy history. The system was first announced in 2015, was set to be powered by Intel's Xeon Phi accelerators and deliver around 180 PetaFLOPS in 2018. But then Intel axed its Xeon Phi in favor of compute GPUs and had to renegotiate the deal with Argonne National Laboratory, this time to deliver an ExaFLOPS system in 2021. Then the company had to postpone delivery of the system again because of the delay of its 7nm (now called Intel 4) fabrication process and the need to produce Ponte Vecchio's compute tile both internally (using what is now called Intel 4) and externally (using TSMC's N5 node).

Intel's 7nm technology had to be redesigned in a bid to hit defect density and yield targets. Earlier this year Intel indicated that the revamped version of its 7nm process makes extensive use of extreme ultraviolet (EUV) lithography, which eliminates the use of multipatterning (with four or more exposures) and therefore simplifies production and reduces the number of defects.

Since Intel's initial performance projections for Ponte Vecchio included a compute tile that was supposed to be made on a different node, these projections are no longer valid. Apparently, with a compute tile produced using TSMC's N5 (and Intel 4 later on), Ponte Vecchio can hit considerably higher performance than the initial version. This stems from several factors, including higher compute tile clocks, a higher number of active EUs within a tile, and a higher number of GPUs.

"On the 2 ExaFLOPS versus 1 ExaFLOPS bill, largely Ponte Vecchio, the core of the machine, is outperforming the original contractual milestones," said Pat Gelsinger, the head of Intel, reports NextPlatform. "So, when we set it up to have a certain number of processors — and you can go do the math to see what 2 ExaFLOPS is — we essentially overbuilt the number of sockets required to comfortably exceed 1 exaflops. Now that Ponte Vecchio is coming in well ahead of those performance objectives, for some of the workloads that are in the contract, we are now comfortably over 2 ExaFLOPS. So it was pretty exciting at that point that we will go from 1 ExaFLOPS to 2 ExaFLOPS pretty fast."

Ponte Vecchio to Power European Exascale Supercomputers

Intel's Ponte Vecchio compute GPU will be used for the Aurora supercomputer first, but eventually it will power other high-performance computing (HPC) systems too.


Europe's first exascale supercomputers are set to use SiPearl's Rhea system-on-chip (SoC), designed specifically for them under the European Processor Initiative. The chip is projected to feature up to 72 Arm Neoverse Zeus cores interconnected using a mesh network, but it will still need an HPC accelerator to hit performance targets.

SiPearl decided to use Intel’s Ponte Vecchio GPUs within the system’s HPC node and for that reason will adopt Intel's oneAPI in its software stack.

ZettaScale: The Race Is On

It took the industry more than 12 years to get from petascale to exascale supercomputing, to a large degree because the industry had to make multiple transitions to reach the new milestone. The first petascale system, IBM Roadrunner, was powered by AMD's dual-core Opteron processors connected to IBM's heterogeneous PowerXCell 8i accelerators. Since 2008, the industry has widely adopted heterogeneous supercomputers with GPUs or special-purpose accelerators. These HPC accelerators use HBM memory and are now among the most complex chips in the industry. Since the evolution of process technologies is slowing down, HPC accelerators for exascale systems now use multi-chiplet designs.

Intel believes that advances in compute architectures, system architectures, packaging technologies, and software will make the path towards zettascale systems much shorter. The company said that it had challenged itself to make it to zetta in five years, by 2027. The chip giant does not disclose how it intends to raise the performance of its HPC solutions by 1000 times in five years, but it looks like it has a plan.
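To put the two timelines side by side, here is a back-of-the-envelope sketch of the yearly performance multiplier each one implies. It assumes constant compound growth, which is a simplification, and it rounds the petascale-to-exascale span to the 12 years cited above. The function name `annual_growth_factor` is made up for this illustration and does not come from Intel.

```python
def annual_growth_factor(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to total_factor over the given years."""
    return total_factor ** (1.0 / years)


# Petascale to exascale: a 1000x gain over roughly 12 years (figure from the article).
past = annual_growth_factor(1000.0, 12.0)

# Exascale to zettascale: a 1000x gain in 5 years (Intel's stated goal).
target = annual_growth_factor(1000.0, 5.0)

print(f"petascale -> exascale:  about {past:.2f}x per year")
print(f"exascale -> zettascale: about {target:.2f}x per year")
```

Under these assumptions, the last transition works out to a little under 1.8x per year, while Intel's target would require close to 4x per year.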

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


JayNor "The system was first announced in 2015, was set to be powered by Intel's Xeon Phi accelerators and deliver around 180 PetaFLOPS in 2018."

I believe those Xeon Phi accelerators were something similar to the Gracemont cores on the Alder Lake. Maybe this design will be evaluated again, as leaks say the efficient core counts on the Alder Lake successors will increase from 8 to 16 in 2022, and to 24 in 2023.

JayNor Intel stated that Aurora node processing was memory bandwidth limited with just HBM attached to Ponte Vecchio. They added the SRAM Rambo Cache as a solution.

I've seen large improvements in HBM performance specs recently. I wonder if the Aurora node design is still at a point where they can evaluate those types of changes.

dalek1234 Integr8d said: You have to remember; these are ‘Intel years’.

It reminds me of Uber minutes. I think there are 120 seconds in each minute, last time I counted (yesterday)