Getting Immersed: DTI’s Tom Curtin, Man On A 3D Mission

Long-awaited, and long overdue, advances in autostereoscopic (glasses-free) 3D technology that have reached the consumer market in recent years were met with considerable skepticism from the AR/VR community. Limitations such as sweet spots for viewing, dead zones, and single-player restrictions have made glasses-free 3D less than appealing. So far, 3D game enthusiasts have mostly opted to stick with their glasses.

Dimension Technologies Inc. (DTI), the Rochester, NY-based engineering and development company best known for developing the first switchable 2D/autostereoscopic 3D display for NASA back in 1989 (along with 14 generations of product refinements for various industries), thinks its technology can change the game.

DTI's Director of Business Development Tom Curtin has spent the past six months taking that technology on the road—demonstrating at conferences and gamer meetups all over the world—on a mission to convince developers and players alike that DTI's glasses-free 3D technology is the real deal. The next stop is Immersed, an AR/VR industry conference being held November 23-24 in Toronto, where Curtin promises to make some big announcements about a Kickstarter campaign, price points and expected delivery dates.

Curtin spoke to Tom's Hardware about the challenges of creating a true glasses-free 3D experience for gamers and how DTI plans to succeed where others have failed.

Tom's Hardware: Tell us how autostereoscopic (glasses-free) 3D technology works.

Tom Curtin: There have been many approaches to this. Essentially, you have to create a right eye view and a left eye view—a stereo view—of whatever image you are trying to display. You have to direct that to the appropriate eye, and then the optic nerve and the brain do the assimilation to create the 3D with the depth of field and the spatial relationships that you're trying to deliver.

In the past, most of the technology has been focused in front of the screen, with lenticulars and parallax barriers. You're breaking up an image into the right eye and left eye views, and then delivering it to the appropriate eye. But all of those approaches typically involve blocking light and altering pixels, diminishing the resolution and brightness. And anytime you put anything on the front of a screen, you're going to compromise the 2D viewing.


TH: How does DTI's approach to glasses-free 3D differ?

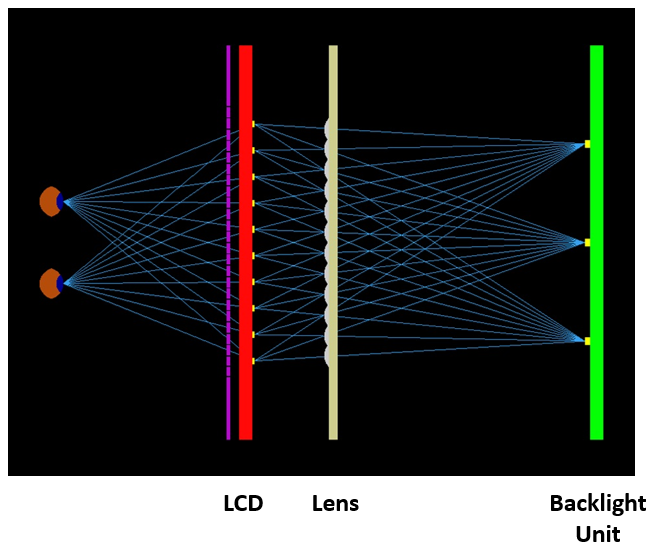

TC: Our approach is completely behind the screen. With our patented Time Multiplexed Backlight, what we do is create the right eye and left eye views by directing light lines at the pixels. That's the significant difference for the way we create the 3D image, and that's why our depth of field is really impressive. We get "wows" from people who have looked at 3D for a lot of years and have had both glasses-based and glasses-free experiences. So, they really know what they're looking at. The reason is that we create the 3D exclusively through the backlight by directing light at the pixels, so we don't disrupt any of the native performance of the display.

The backlight is a component that we can swap out on an existing commercially available display, install ours, and upgrade that display to a 2D/3D display and get the full native performance. So if it's a 120 Hz 1920 x 1080p, you will get 1920 x 1080p 120 Hz performance. That's why our 3D is going to be a really good solution for people who want to have glasses-free 3D gaming.

TH: Can you tell us about the work DTI has done for NASA and other government agencies, and how it can be applied to 3D gaming?

TC: We've been doing this work for NASA and a bunch of high-end government agencies that wanted to explore both the human factors and the ability to consume information in different situations. NASA astronauts are in cockpits looking at displays with a lot of different data coming in. Can we de-clutter that information? Can we give them reliable spatial relationship information so that they know where objects are relative to each other? This also has applications in remote operations of drones and robots. Anytime an operator has to look at a screen and see with accuracy the relative position of objects next to each other, allowing for time and space and movement, those are the areas we've been developing for the government.

And when you think about it, this is all very relevant to the gamer who needs the exact same kind of visual information: the relationship of objects near each other, how fast things are moving, accuracy, depth of field, spatial relationships. These are all things that are critical to the operation of equipment—flying a plane, operating a spaceship. And, of course, we think these things will transfer very nicely to a gaming experience. It will be unlike anything that people have seen so far.

TH: Is it fair to say that gaming is the next frontier for DTI?

TC: Yes. We're stepping into the ring. We've been working in a closely held engineering environment for quite a while now. We've looked at the consumer market a couple of different times, and each time what we found was that the market simply wasn't ready yet. Everything was sort of out of sync in terms of the display technology.

Ultimately, it comes down to the user experience, and the gamers just were not going to accept the compromises that were required either from the displays or the 3D technology. There also wasn't a lot of 3D content available until the second half of the 2000s, when it really started to explode. And, with the renewed interest in movies as well, we think there is now a really good convergence of price points coming down for display technology and display performance specs reaching heights that are acceptable to gamers.

And I think the really interesting thing is that our technology, because it is focused exclusively on the backlight, is not disruptive to the upstream marketplace. We don't require anyone to do anything different from what they are already doing. And, as display technology performance goes up and the price comes down, we are a component swap-out for the backlight in an existing commercially available display. So, we think that's a fairly clear path for us. We still have challenges and issues, but we think we're on a very promising path to deliver a gamer-ready 27-inch display.

TH: What are some of the challenges?

TC: Right now, we're working to remove the sweet spot limitations and support multiple viewers. We've had two approaches on that. The first one was to create what we call a five-view. It's a multiple viewer, 22-inch diagonal 3D display. It really breaks down that sweet spot restriction. People can look from any aspect of the viewable range of the display and see the 3D imagery coming off the screen.

And that's great, but it wasn't suitable or adaptable to the gaming audience because it really did require customized content. We had to take content and then create the five-view for projection on the display. And we weren't going to be asking game developers and game publishers and graphics to re-do what they're doing to support our five-view approach.

So, the next thing that we looked at—and the path we're pursuing—is eye-tracking. And, of course, eye-tracking has been a real hotbed of activity for a lot of reasons. With Kinect and gesture technology, all of those technologies require some form of understanding where the person is and what they're doing. And, for us, of course, the critical issue is, where are the eyes?

We look at eye tracking as a way of being able to direct our stereoscopic image to the person who's viewing based on their eye position. And we can track it and anticipate it. That's the best path we're on in testing and integrating an eye-tracking solution that will solve the sweet spot issue for glasses-free 3D. And that will be a major breakthrough.

TH: How is DTI going about perfecting its glasses-free 3D technology for gaming?

TC: When we made the commitment to deliver a high resolution 2D/3D monitor for gaming, the first thing we did was try to gain an understanding of gamer requirements so we could set our display performance targets. We invited Neil Schneider from Meant to be Seen 3D down to Rochester, NY to see the technology and give us feedback and insight. We attended SIGGRAPH 2014 in Vancouver and demoed our technology to 300 registered guests at a Global VR Meetup, and gained further insight from experts such as Jon Peddie.

We've shown our technology all over the world in the last six months. So, it really became a mission of building credibility for the technology based on getting it in front of enough experts that would say good things and would provide other people a confidence factor that we were in fact delivering the goods when it comes to the promises we make about depth of field and viewer experience.

We now have a spec that we believe gamers will find very appealing: a 27-inch high-resolution 1920 x 1080p 2D/3D switchable monitor with eye-tracking to remove the sweet spot limitation and support multiple viewers; 120 Hz and less than 5 ms gray-to-gray to support first-person shooter gaming without ghosting or lag time.

We require no special software or modifications to games, movies or data content. And we're plug-and-play compatible with the upstream ecosystem: game developers, content creators, Intel, NVIDIA and AMD GPUs, DDD TriDef drivers. We are currently short-listing available eye-tracking solutions and testing the integration with our patented Time Multiplexed Backlight.

TH: So, what’s next on your road to the delivery of this technology to the masses? There's been talk of a Kickstarter campaign. Can you give us an update on that?

TC: When the idea of a Kickstarter first surfaced, we realized the incredible dilemma we faced. We had to convince people that we had a completely new and different glasses-free 3D experience; but they had to see it with their own eyes. There was no other way. So, the only way we could be successful is if we could build enough credibility for our technology by showing it to 3D experts such as Neil Schneider, Jon Peddie, 3D movie editors and animators like Fangwei Lee, who saw our demo at SIGGRAPH and fell in love with it.

Our next stop is Immersed in Toronto, on November 23, a first-of-its-kind event for 3D and Virtual Reality. There, we'll be making a big announcement about our Kickstarter, the price point for our 27-inch 3D/2D display, and expected delivery.

*****

Tom's Hardware is a media sponsor for the Immersed conference. Stay tuned for more coverage and interviews with Immersed speakers ahead of the November 23-24 show.

If you wish to register, there's a temporary promotion code ("TomsHardwareImmersed") that will knock $100 off the registration cost. Primarily, Immersed will consist of industry folks, but there is a free public exhibition on Sunday afternoon, November 23.

In any case, space is limited, so register in advance if you want to claim a spot. The Immersed conference is running registration through the Eventbrite page.


-

srsly I don't get it, how would this work? If I understand correctly, it can only vary individual pixel illumination between eyes, not actually change the value of pixels between eyes, so you don't actually get a stereo view, just a rough approximation of stereo lighting?

And how do you do this without software? Wouldn't you still need to know information about the scene being rendered in order to meaningfully modulate the backlight?

Tom Curtin @srsly.

The optics and imaging science behind DTI's patented Time Multiplexed Backlight is very difficult to communicate and comprehend. The most important fact is that it works. And you can see it for yourself at Immersed in Toronto.

srsly Thanks for response but you're on a hardware enthusiast site where many of the readers would welcome an explanation of Time Multiplexed Backlighting beyond the fact that it works. Thanks to Oculus Rift I would expect most people interested in AR/VR have a pretty good understanding of optics by now

Tom Curtin @srsly

Here's a more technical description.

DTI’s system sends light through the LCD in such a way that the two eyes see all of the pixels of the LCD at different times. When the light goes through the pixels to the left eye, the pixels are displaying the left eye view of a stereo pair; when light goes through the pixels to the right eye, the pixels are displaying the right eye view of a stereo pair. This happens rapidly enough that no flicker is seen. Thus the user sees a true stereoscopic image. Internal firmware in the display makes sure that the lights and image display on the LCD pixels stay in sync with one another. The lighting system and related optics in the backlight are designed to send light to two observers simultaneously. An onboard eye tracking system will keep track of where the observers are and make sure that the light is aimed at their eyes.

The display will use the same type of field sequential output that is used when viewing with 3D shutter glasses: a left eye view followed by a right eye view every 1/120th second. In fact, any game software, NVIDIA graphics card, and driver combination that works with 3D shutter glasses will be compatible with this display.
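As a rough illustration of the timing described above (not DTI's firmware), the sketch below models field-sequential output on a 120 Hz panel. It assumes one eye view per refresh, so a full left/right pair takes 1/60th of a second; the `Field` record, the `backlight` label and both function names are hypothetical, invented only for this example.

```python
from dataclasses import dataclass
from fractions import Fraction

REFRESH_HZ = 120  # panel refresh rate quoted in the interview


@dataclass(frozen=True)
class Field:
    index: int          # position in the field sequence
    eye: str            # eye view shown on the LCD: "L" or "R"
    start_s: Fraction   # time the field starts, in seconds
    backlight: str      # eye the backlight is aimed at (hypothetical label)


def field_sequence(n_fields: int, refresh_hz: int = REFRESH_HZ) -> list[Field]:
    """Alternate left/right views, one per refresh, with the backlight in step."""
    period = Fraction(1, refresh_hz)
    fields = []
    for i in range(n_fields):
        eye = "L" if i % 2 == 0 else "R"
        # Assumption: the backlight always targets the eye whose view is on screen.
        fields.append(Field(index=i, eye=eye, start_s=i * period, backlight=eye))
    return fields


def per_eye_rate(refresh_hz: int = REFRESH_HZ) -> Fraction:
    """Each eye is lit on every other field, so it sees half the panel rate."""
    return Fraction(refresh_hz, 2)


if __name__ == "__main__":
    for f in field_sequence(4):
        start_ms = float(f.start_s) * 1000
        print(f"field {f.index}: LCD shows {f.eye} view, "
              f"backlight aimed at {f.backlight} eye, starts at {start_ms:.2f} ms")
    print(f"per-eye rate: {per_eye_rate()} Hz")
```

Under that assumption each eye is refreshed 60 times per second, the same rate a viewer gets from shutter glasses on a 120 Hz display.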

-

srsly awesome, thanks for the details. That makes significantly more sense. With this technology you would need to render separate images for each viewer, correct? That could prove rather taxing on graphics card. And you would need the 3d application to understand stereoscopic rendering so I don't understand how "We require no special software or modifications to games, movies or data content" unless you're just talking about content that's already stereoscopic-enabled. Nonetheless, this sounds like a very nice way to do 3d.

smeezekitty I just installed Windows 10 on a spare hard drive plugged in to my main rig.

I have to say that the Windows setup experience is one of the worst I have ever had. Requiring a Windows account as well as my full name and date of birth.

I find this "Always online" trend very alarming. It also defaults to setting up Microsoft Onedrive (cloud storage). Sorry but no. I am not going to trust M$ or anyone else with my data.

Once that was finished, the finished product is better but still it is clearly a mobile OS and not designed for desktops or even traditional laptops.

Usage of the word "Swipe" clearly implies it is designed for a touch screen.

I also dislike "apps". And the fact that news is on the start menu (but I am sure glad to have the start button back!)

I would say it is an improvement over 8 but still not up to snuff. It is way too invasive and internet dependent