Intel Arc GPU performance momentum continues — 2.7X boost in AI-driven Stable Diffusion, largely thanks to Microsoft's Olive

Microsoft and Intel at work.

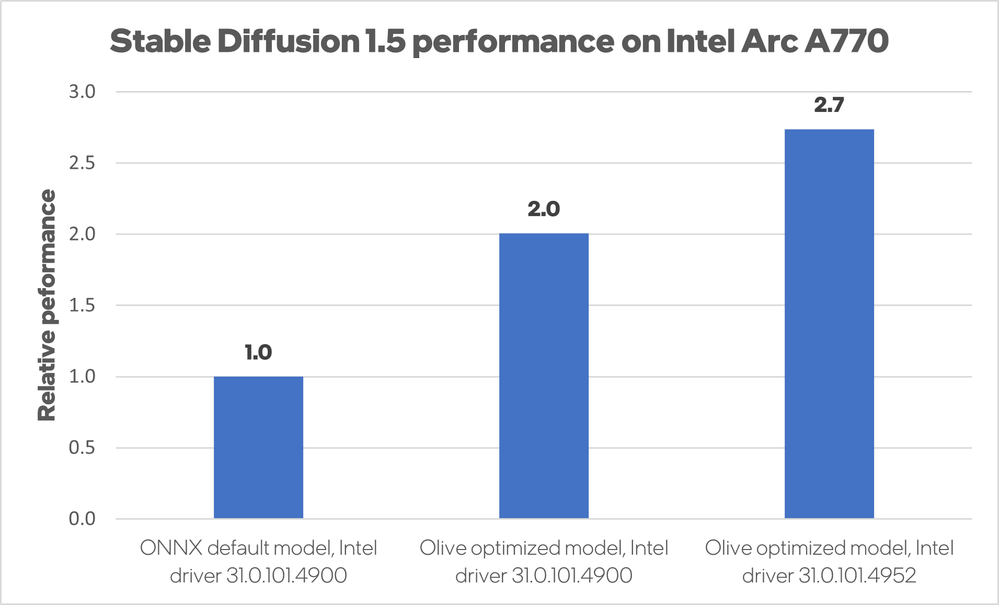

Intel's latest Arc Alchemist drivers deliver a 2.7X performance boost in the AI image generator Stable Diffusion. Some of that boost came from good old-fashioned optimization, which the Intel driver team is well known for, but most of the uplift came from Microsoft Olive. Microsoft's machine learning optimization toolchain doubled Arc GPU performance in Stable Diffusion on its own, and it's not even the first time we've seen it happen in GPU drivers.

Microsoft Olive is a toolchain that takes an AI or machine learning model and finds the ways a given piece of hardware can accelerate it. These optimizations are possible without Olive, but the AI-focused toolchain makes them much easier to reach. There is a large amount of AI hardware on the market made by different companies, and being able to skip a big step in the optimization process is really handy.

Intel Arc Alchemist GPUs are equipped with Xe Matrix Extensions (XMX) cores, which are essentially Intel's version of Nvidia's Tensor cores. These cores can accelerate AI workloads like Stable Diffusion. However, previous drivers didn't take full advantage of the XMX cores, and Olive on its own doubled performance when applied to Intel's existing software.

The remaining performance uplift was accomplished by Intel optimizing the model that Microsoft Olive created, and the end result was a 2.7X boost in Stable Diffusion.
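As a rough back-of-the-envelope check, the two figures above imply how much Intel's own tuning added. The sketch below assumes the two gains compound multiplicatively, which the article does not state, and the function name `remaining_speedup` is purely illustrative.

```python
def remaining_speedup(total: float, first_stage: float) -> float:
    """Return the speedup a second stage must contribute,
    ASSUMING the stages compound multiplicatively (total = first * second)."""
    if total <= 0 or first_stage <= 0:
        raise ValueError("speedups must be positive")
    return total / first_stage


total_boost = 2.7   # overall Stable Diffusion uplift reported for the new driver
olive_boost = 2.0   # uplift attributed to Microsoft Olive on its own

# Implied contribution of Intel's additional optimization work
intel_boost = remaining_speedup(total_boost, olive_boost)
print(f"Implied Intel-side uplift: {intel_boost:.2f}X")
```

Under that assumption, Intel's work on top of the Olive-optimized model accounts for roughly a 1.35X gain.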

This isn't the first time we've seen such a large performance gain in an AI app, and not even the first time we've seen it happen specifically with a GPU and Microsoft Olive. Nvidia used the exact same toolchain to optimize its drivers for Stable Diffusion and also achieved double the performance.

Matthew Connatser is a freelancing writer for Tom's Hardware US. He writes articles about CPUs, GPUs, SSDs, and computers in general.

SSGBryan: Every couple of weeks, my faith in the Arc series of cards is reinforced.

Buying this card was a great decision.

jkhoward (in reply to SSGBryan): Agreed, got mine for $280 and it’s a beast!

bit_user: The question we always have to ask is: what was the tradeoff? Model optimization usually comes at the expense of accuracy. In the case of something like generative AI, how do they characterize & quantify that loss in accuracy?

palladin9479 (in reply to SSGBryan): While I don't think it's quite ready for prime time yet, I'm very happy that Intel is going hard in the paint this time around. Focusing on the abandoned low to mid tier segments was a pretty smart move. I'm very interested to see what Battlemage looks like when it comes out.

Alvar "Miles" Udell: We could finally start seeing Arc discrete GPUs in office- and student-oriented laptops and desktops that are currently only covered by weak iGPUs.

Broly MAXIMUMER: I'm 99% certain AMD does indeed have some sort of "AI Accelerators" in RDNA 3, they just haven't given them some fancy acronym or name.

bit_user (in reply to Broly MAXIMUMER): Yeah, it seems like they're just bolted on to the execution pipeline, since they use the existing VGPRs (Vector General Purpose Registers) and you access them with instructions like WMMA (Wave Matrix Multiply Accumulate).

You can read a little about them in Jarred's excellent RDNA3 writeup:

https://www.tomshardware.com/news/amd-rdna-3-gpu-architecture-deep-dive-the-ryzen-moment-for-gpus#section-amd-rdna-3-compute-unit-enhancements

To be honest, that + the ray tracing improvements of RDNA 3 are the only reasons I haven't jumped on a discounted RX 6800 card. If you want to do anything with AI, you're going to be much better off with RDNA 3.